Zheng-Meng Zhai

Deficiency of equation-finding approach to data-driven modeling of dynamical systems

Sep 03, 2025

Abstract:Finding the governing equations from data by sparse optimization has become a popular approach to deterministic modeling of dynamical systems. Considering the physical situations where the data can be imperfect due to disturbances and measurement errors, we show that for many chaotic systems, widely used sparse-optimization methods for discovering governing equations produce models that depend sensitively on the measurement procedure, yet all such models generate virtually identical chaotic attractors, leading to a striking limitation that challenges the conventional notion of equation-based modeling in complex dynamical systems. Calculating the Koopman spectra, we find that the different sets of equations agree in their large eigenvalues and the differences begin to appear when the eigenvalues are smaller than an equation-dependent threshold. The results suggest that finding the governing equations of the system and attempting to interpret them physically may lead to misleading conclusions. It would be more useful to work directly with the available data using, e.g., machine-learning methods.
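For readers unfamiliar with equation finding by sparse optimization, the following is a minimal sketch of one common variant, sequentially thresholded least squares. The abstract does not say which algorithm the authors used. The toy system, the candidate library, the threshold, and the noise level are illustrative assumptions. The derivatives are sampled at random states rather than along a trajectory, which is a further simplification.

```python
import numpy as np

def library(X):
    """Candidate terms [1, x, y, x^2, x*y, y^2] for a 2-D state (illustrative choice)."""
    x, y = X[:, 0], X[:, 1]
    return np.column_stack([np.ones_like(x), x, y, x * x, x * y, y * y])

def stlsq(theta, dxdt, threshold=0.1, n_iter=10):
    """Sequentially thresholded least squares for theta @ xi ~= dxdt."""
    xi = np.linalg.lstsq(theta, dxdt, rcond=None)[0]
    for _ in range(n_iter):
        small = np.abs(xi) < threshold
        xi[small] = 0.0  # prune terms with small coefficients
        for k in range(dxdt.shape[1]):
            keep = ~small[:, k]
            if keep.any():
                # refit each equation using only the surviving terms
                xi[keep, k] = np.linalg.lstsq(
                    theta[:, keep], dxdt[:, k], rcond=None
                )[0]
    return xi

# Hypothetical toy system (not from the paper):
#   dx/dt = -0.5 x + 2 y,   dy/dt = -2 x + 1.5 x y
xi_true = np.zeros((6, 2))
xi_true[1, 0], xi_true[2, 0] = -0.5, 2.0
xi_true[1, 1], xi_true[4, 1] = -2.0, 1.5

rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(500, 2))
theta = library(X)
# Assumed small measurement noise on the derivative data
dxdt = theta @ xi_true + 0.01 * rng.normal(size=(500, 2))

xi = stlsq(theta, dxdt)
print(np.round(xi, 3))
```

Each column of `xi` holds the coefficients of one recovered equation. Rerunning with a different noise level or threshold is a simple way to see how the selected terms respond to the measurement conditions.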

Reconstructing dynamics from sparse observations with no training on target system

Oct 28, 2024

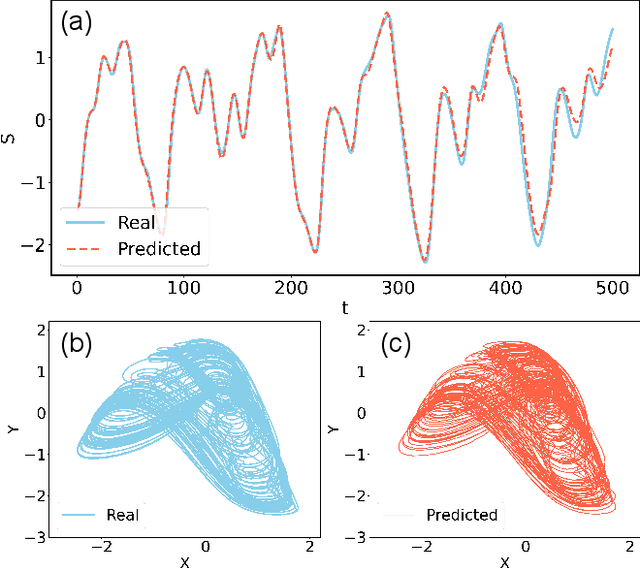

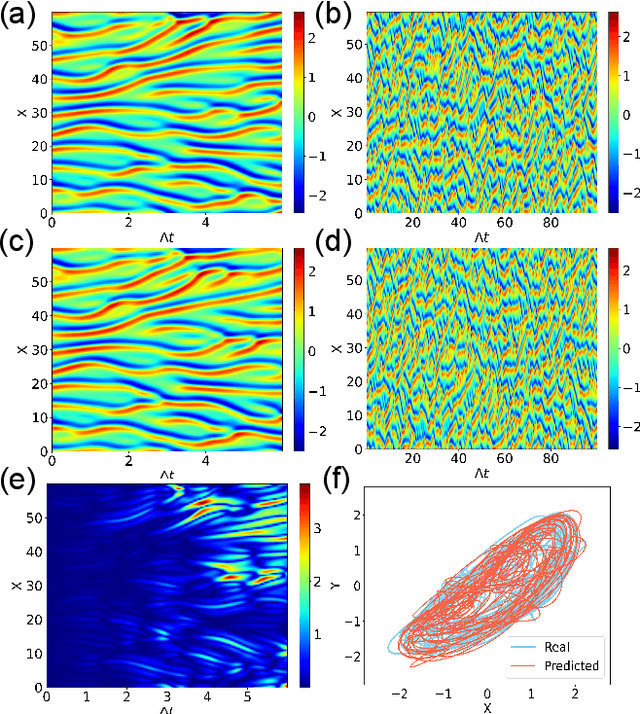

Abstract:In applications, an anticipated situation is where the system of interest has never been encountered before and sparse observations can be made only once. Can the dynamics be faithfully reconstructed from the limited observations without any training data? This problem defies any known traditional methods of nonlinear time-series analysis as well as existing machine-learning methods that typically require extensive data from the target system for training. We address this challenge by developing a hybrid transformer and reservoir-computing machine-learning scheme. The key idea is that, for a complex and nonlinear target system, the training of the transformer can be conducted not using any data from the target system, but with essentially unlimited synthetic data from known chaotic systems. The trained transformer is then tested with the sparse data from the target system. The output of the transformer is further fed into a reservoir computer for predicting the long-term dynamics or the attractor of the target system. The power of the proposed hybrid machine-learning framework is demonstrated using a large number of prototypical nonlinear dynamical systems, with high reconstruction accuracy even when the available data is only 20% of that required to faithfully represent the dynamical behavior of the underlying system. The framework provides a paradigm of reconstructing complex and nonlinear dynamics in the extreme situation where training data does not exist and the observations are random and sparse.

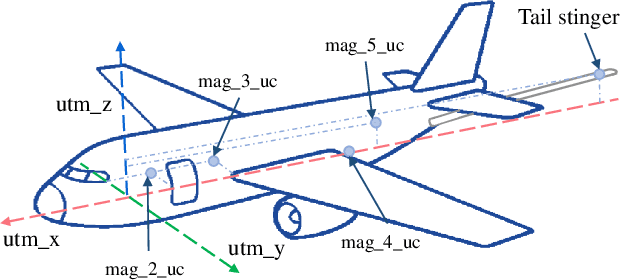

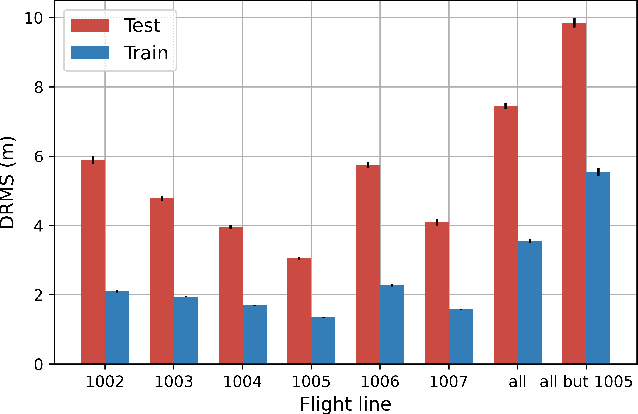

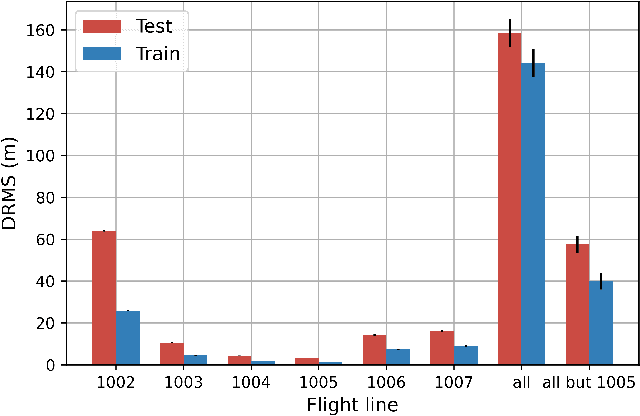

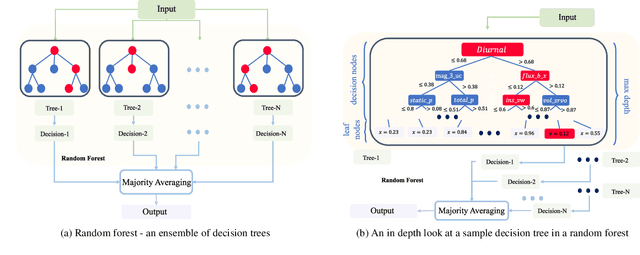

Random forests for detecting weak signals and extracting physical information: a case study of magnetic navigation

Feb 21, 2024

Abstract:It was recently demonstrated that two machine-learning architectures, reservoir computing and time-delayed feed-forward neural networks, can be exploited for detecting the Earth's anomaly magnetic field immersed in overwhelming complex signals for magnetic navigation in a GPS-denied environment. The accuracy of the detected anomaly field corresponds to a positioning accuracy in the range of 10 to 40 meters. To increase the accuracy and reduce the uncertainty of weak signal detection as well as to directly obtain the position information, we exploit the machine-learning model of random forests that combines the output of multiple decision trees to give optimal values of the physical quantities of interest. In particular, from time-series data gathered from the cockpit of a flying airplane during various maneuvering stages, where strong background complex signals are caused by other elements of the Earth's magnetic field and the fields produced by the electronic systems in the cockpit, we demonstrate that the random-forest algorithm performs remarkably well in detecting the weak anomaly field and in filtering the position of the aircraft. With the aid of the conventional inertial navigation system, the positioning error can be reduced to less than 10 meters. We also find that, contrary to the conventional wisdom, the classic Tolles-Lawson model for calibrating and removing the magnetic field generated by the body of the aircraft is not necessary and may even be detrimental for the success of the random-forest method.

Machine-learning prediction of tipping and collapse of the Atlantic Meridional Overturning Circulation

Feb 21, 2024

Abstract:Recent research on the Atlantic Meridional Overturning Circulation (AMOC) raised concern about its potential collapse through a tipping point due to the climate-change caused increase in the freshwater input into the North Atlantic. The predicted time window of collapse is centered about the middle of the century and the earliest possible start is approximately two years from now. More generally, anticipating a tipping point at which the system transitions from one stable steady state to another is relevant to a broad range of fields. We develop a machine-learning approach to predicting tipping in noisy dynamical systems with a time-varying parameter and test it on a number of systems including the AMOC, ecological networks, an electrical power system, and a climate model. For the AMOC, our prediction based on simulated fingerprint data and real data of the sea surface temperature places the time window of a potential collapse between the years 2040 and 2065.

Machine-learning parameter tracking with partial state observation

Nov 15, 2023

Abstract:Complex and nonlinear dynamical systems often involve parameters that change with time, accurate tracking of which is essential to tasks such as state estimation, prediction, and control. Existing machine-learning methods require full state observation of the underlying system and tacitly assume adiabatic changes in the parameter. Formulating an inverse problem and exploiting reservoir computing, we develop a model-free and fully data-driven framework to accurately track time-varying parameters from partial state observation in real time. In particular, with training data from a subset of the dynamical variables of the system for a small number of known parameter values, the framework is able to accurately predict the parameter variations in time. Low- and high-dimensional, Markovian and non-Markovian nonlinear dynamical systems are used to demonstrate the power of the machine-learning based parameter-tracking framework. Pertinent issues affecting the tracking performance are addressed.

Model-free tracking control of complex dynamical trajectories with machine learning

Sep 20, 2023

Abstract:Nonlinear tracking control enabling a dynamical system to track a desired trajectory is fundamental to robotics, serving a wide range of civil and defense applications. In control engineering, designing tracking control requires complete knowledge of the system model and equations. We develop a model-free, machine-learning framework to control a two-arm robotic manipulator using only partially observed states, where the controller is realized by reservoir computing. Stochastic input is exploited for training, which consists of the observed partial state vector as the first and its immediate future as the second component so that the neural machine regards the latter as the future state of the former. In the testing (deployment) phase, the immediate-future component is replaced by the desired observational vector from the reference trajectory. We demonstrate the effectiveness of the control framework using a variety of periodic and chaotic signals, and establish its robustness against measurement noise, disturbances, and uncertainties.

* 16 pages, 8 figures

Emergence of a stochastic resonance in machine learning

Nov 15, 2022

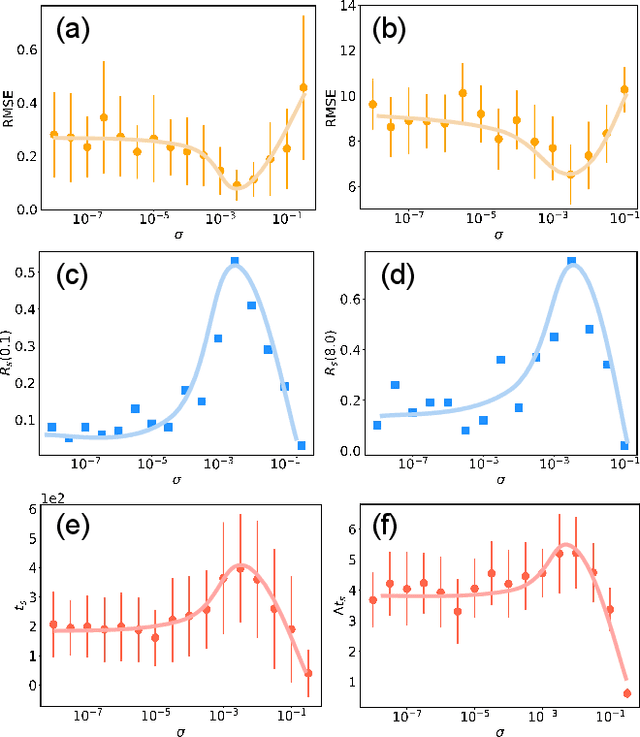

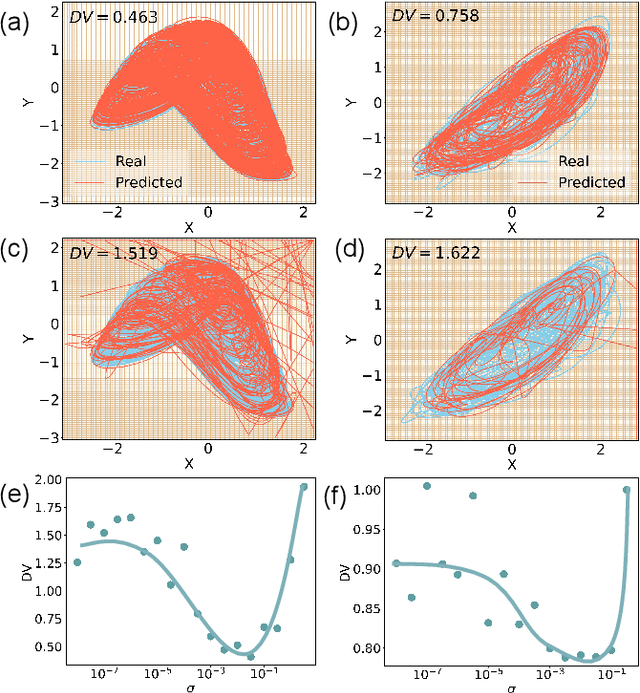

Abstract:Can noise be beneficial to machine-learning prediction of chaotic systems? Utilizing reservoir computers as a paradigm, we find that injecting noise to the training data can induce a stochastic resonance with significant benefits to both short-term prediction of the state variables and long-term prediction of the attractor of the system. A key to inducing the stochastic resonance is to include the amplitude of the noise in the set of hyperparameters for optimization. By so doing, the prediction accuracy, stability and horizon can be dramatically improved. The stochastic resonance phenomenon is demonstrated using two prototypical high-dimensional chaotic systems.
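The idea of treating the noise amplitude as a hyperparameter can be sketched as a simple scan, shown here with a small echo-state network. This is only an illustration under stated assumptions, not the authors' setup. The logistic map stands in for their high-dimensional chaotic systems. Noise is added to the training input only, with clean targets. The reservoir size, spectral radius, ridge parameter, and amplitude grid are arbitrary choices, and only one-step prediction error is measured.

```python
import numpy as np

def logistic_series(n, x0=0.3, r=3.9):
    """Chaotic logistic-map series (stand-in data, not from the paper)."""
    x = np.empty(n)
    x[0] = x0
    for i in range(1, n):
        x[i] = r * x[i - 1] * (1.0 - x[i - 1])
    return x

def validation_rmse(noise_amp, series, n_train=1000, n_res=100,
                    washout=50, ridge=1e-6, seed=0):
    """Train a reservoir on noisy input; return one-step RMSE on clean validation data."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-1.0, 1.0, n_res)
    w = rng.normal(size=(n_res, n_res))
    w *= 0.9 / np.max(np.abs(np.linalg.eigvals(w)))  # set spectral radius to 0.9

    def run(u):
        r = np.zeros(n_res)
        states = np.empty((len(u), n_res))
        for t, u_t in enumerate(u):
            r = np.tanh(w @ r + w_in * u_t)
            states[t] = r
        return states

    train, valid = series[:n_train], series[n_train:]
    # Assumption: noise is injected into the training input only
    noisy_input = train[:-1] + noise_amp * rng.normal(size=n_train - 1)
    s = run(noisy_input)[washout:]
    target = train[1:][washout:]
    # Ridge-regression readout
    w_out = np.linalg.solve(s.T @ s + ridge * np.eye(n_res), s.T @ target)

    s_val = run(valid[:-1])[washout:]
    pred = s_val @ w_out
    return float(np.sqrt(np.mean((pred - valid[1:][washout:]) ** 2)))

series = logistic_series(1500)
amplitudes = [0.0, 1e-3, 1e-2, 1e-1]  # noise amplitude joins the hyperparameter grid
errors = {a: validation_rmse(a, series) for a in amplitudes}
best_amp = min(errors, key=errors.get)
print(errors, best_amp)
```

In practice the noise amplitude would be optimized jointly with the other hyperparameters rather than scanned on its own, and whether a nonzero amplitude wins depends on the system and the prediction task.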
