Zhekun Lv

Motion-Aware Transformer For Occluded Person Re-identification

Feb 10, 2022

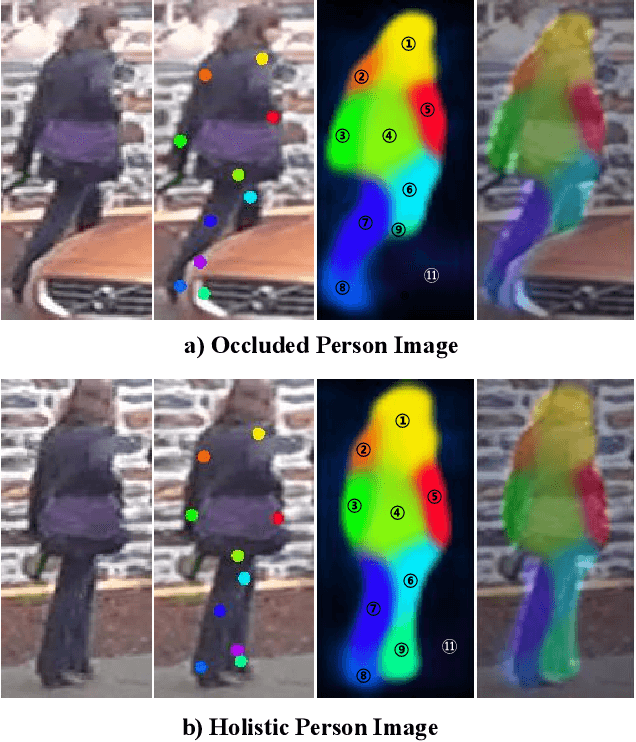

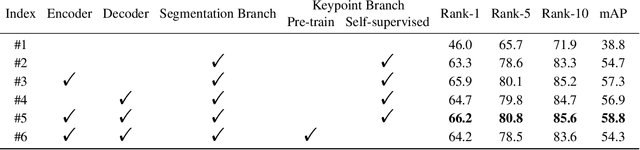

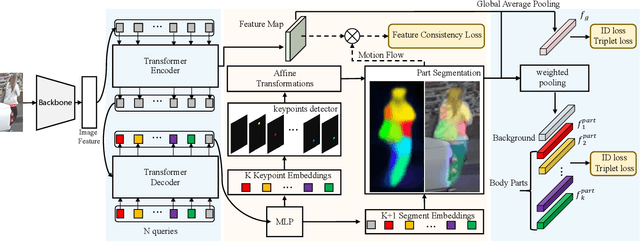

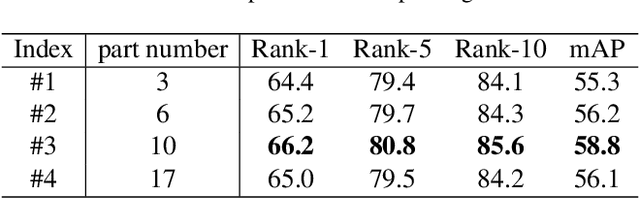

Abstract: Occluded person re-identification (Re-ID) remains a challenging task because people are frequently obscured by other people or obstacles, especially in crowded scenes. In this paper, we propose a self-supervised deep learning method that improves the localization of human parts for occluded person Re-ID. Unlike previous works, we find that motion information derived from photos of various human postures can help identify the major human body components. First, a motion-aware transformer encoder-decoder architecture is designed to obtain keypoint heatmaps and part-segmentation maps. Second, an affine transformation module is used to acquire motion information from the keypoint detection branch. The motion information then supports the segmentation branch in producing refined human part segmentation maps and in dividing the human body into reasonable groups. Finally, several cases demonstrate the effectiveness of the proposed model in distinguishing different representative parts of the human body, which helps avoid disturbances from background and occlusion. Our method consistently achieves state-of-the-art results on several popular datasets, including occluded, partial, and holistic benchmarks.
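The abstract does not specify how the affine transformation module is built. As an illustration of the general idea only, and not the authors' implementation, the sketch below assumes that motion between two postures can be summarized by a single 2D affine transform fitted by least squares to matched keypoint coordinates. The function names `estimate_affine` and `apply_affine` are hypothetical, and NumPy is assumed as the numerical library.

```python
import numpy as np


def estimate_affine(src, dst):
    """Fit a 2D affine transform mapping src keypoints onto dst keypoints.

    Illustrative assumption: motion is modeled as one global affine map.
    src, dst: arrays of shape (N, 2) holding N >= 3 matched keypoints.
    Returns a 2x3 matrix M = [A | t] such that dst ~= src @ A.T + t.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.ndim != 2 or src.shape[1] != 2 or src.shape != dst.shape:
        raise ValueError("src and dst must both have shape (N, 2)")
    if src.shape[0] < 3:
        raise ValueError("at least 3 keypoint pairs are required")

    # Homogeneous coordinates: each row is [x, y, 1].
    design = np.hstack([src, np.ones((src.shape[0], 1))])

    # Solve design @ params ~= dst in the least-squares sense; params is (3, 2).
    params, *_ = np.linalg.lstsq(design, dst, rcond=None)
    return params.T  # shape (2, 3)


def apply_affine(matrix, points):
    """Apply a 2x3 affine matrix to an (N, 2) array of points."""
    points = np.asarray(points, dtype=float)
    return points @ matrix[:, :2].T + matrix[:, 2]


if __name__ == "__main__":
    # Hypothetical keypoints from two postures of the same person.
    pose_a = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [2.0, 3.0]])
    motion = np.array([[1.0, 0.2, 5.0], [-0.1, 0.9, -3.0]])
    pose_b = apply_affine(motion, pose_a)
    print(estimate_affine(pose_a, pose_b))
```

In a setting like the one described, the recovered transform parameters could serve as a compact motion descriptor passed to a segmentation branch; whether the paper uses a global or per-part transform is not stated in the abstract.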
