Zhaoshi Meng

Marginal Likelihoods for Distributed Parameter Estimation of Gaussian Graphical Models

Aug 13, 2014

Abstract: We consider distributed estimation of the inverse covariance matrix, also called the concentration or precision matrix, in Gaussian graphical models. Traditional centralized estimation often requires global inference of the covariance matrix, which can be computationally intensive in large dimensions. Approximate inference based on message-passing algorithms, on the other hand, can lead to unstable and biased estimation in loopy graphical models. In this paper, we propose a general framework for distributed estimation based on a maximum marginal likelihood (MML) approach. This approach computes local parameter estimates by maximizing marginal likelihoods defined with respect to data collected from local neighborhoods. Due to the non-convexity of the MML problem, we introduce and solve a convex relaxation. The local estimates are then combined into a global estimate without the need for iterative message-passing between neighborhoods. The proposed algorithm is naturally parallelizable and computationally efficient, thereby making it suitable for high-dimensional problems. In the classical regime where the number of variables $p$ is fixed and the number of samples $T$ increases to infinity, the proposed estimator is shown to be asymptotically consistent and to improve monotonically as the local neighborhood size increases. In the high-dimensional scaling regime where both $p$ and $T$ increase to infinity, the convergence rate to the true parameters is derived and is seen to be comparable to centralized maximum likelihood estimation. Extensive numerical experiments demonstrate the improved performance of the two-hop version of the proposed estimator, which suffices to almost close the gap to the centralized maximum likelihood estimator at a reduced computational cost.
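To illustrate why data from a local neighborhood can carry the local parameters, the following is a minimal sketch and not the paper's MML estimator or its convex relaxation. It relies on a standard Gaussian fact: if the neighborhood contains node $i$ and all of its graph neighbors, then inverting the marginal covariance of that neighborhood reproduces row $i$ of the global precision matrix. The chain graph, the edge weight `-0.4`, and the helper names `chain_precision` and `local_row_estimate` are assumptions made for this example.

```python
import numpy as np

def chain_precision(p, off=-0.4):
    """Tridiagonal precision matrix of a chain graph 0 - 1 - ... - (p-1).

    Hypothetical test model: unit diagonal, `off` on each edge.
    """
    J = np.eye(p)
    for i in range(p - 1):
        J[i, i + 1] = J[i + 1, i] = off
    return J

def local_row_estimate(cov, i, neighbors):
    """Estimate row i of the precision matrix from local data only.

    Uses the covariance restricted to node i and its neighbors, inverts
    that small block, and reads off the row belonging to node i.
    """
    idx = [i] + sorted(neighbors)
    local_precision = np.linalg.inv(cov[np.ix_(idx, idx)])
    return idx, local_precision[0]

p = 6
J = chain_precision(p)
cov = np.linalg.inv(J)  # population covariance; a sample covariance would go here

# Node 2 has neighbors 1 and 3 in the chain.
idx, row = local_row_estimate(cov, 2, {1, 3})
print(idx, np.round(row, 6))
print(np.round(J[2, idx], 6))
```

With the population covariance the local row matches the global one exactly; with a sample covariance computed from $T$ observations it would only be an estimate, and each node's computation could run in parallel without message-passing between neighborhoods.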

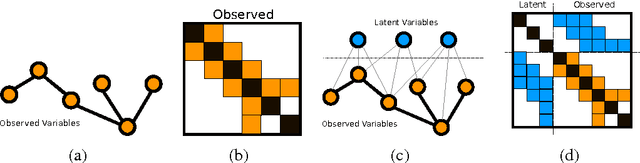

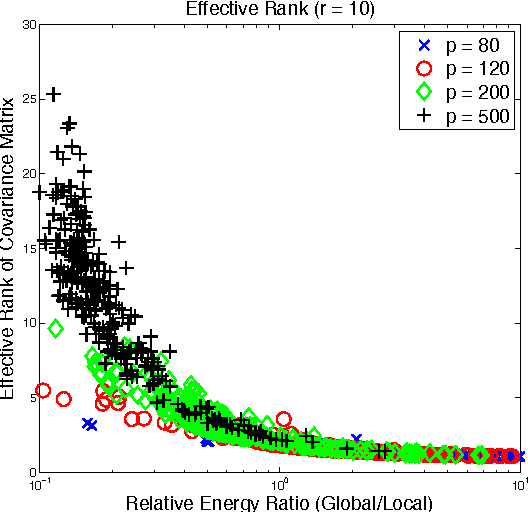

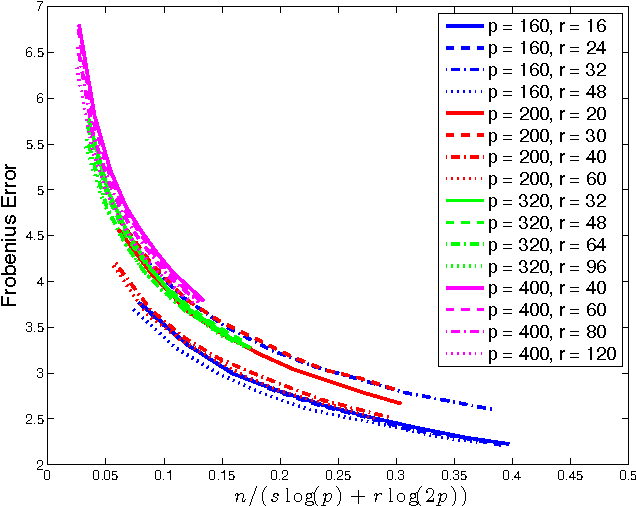

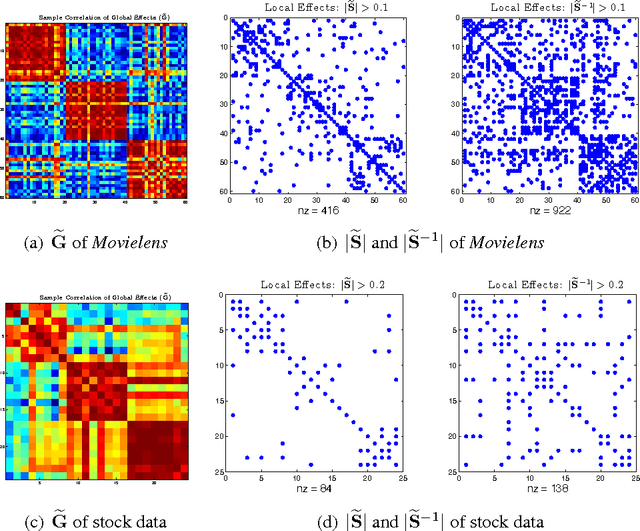

Learning Latent Variable Gaussian Graphical Models

Jun 10, 2014

Abstract: Gaussian graphical models (GGMs) have been widely used in many high-dimensional applications ranging from biological and financial data to recommender systems. Sparsity in GGMs plays a central role both statistically and computationally. Unfortunately, real-world data are often not well fit by sparse graphical models. In this paper, we focus on a family of latent variable Gaussian graphical models (LVGGM), where the model is conditionally sparse given latent variables, but marginally non-sparse. In LVGGM, the inverse covariance matrix has a low-rank plus sparse structure, and can be learned in a regularized maximum likelihood framework. We derive novel parameter estimation error bounds for LVGGM under mild conditions in the high-dimensional setting. These results complement the existing theory on structural learning, and open up new possibilities of using LVGGM for statistical inference.
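The low-rank plus sparse structure can be checked numerically. The sketch below is only an illustration of that structure, not the regularized maximum likelihood estimator from the paper. It builds a joint precision matrix over observed and latent variables and verifies that marginalizing out the latent ones gives an observed precision matrix equal to a sparse part minus a low-rank part (a Schur complement). All sizes and numeric values, and the names `J_oo`, `J_oh`, `J_hh`, are assumptions chosen for the example.

```python
import numpy as np

p_obs, p_hid = 8, 2  # hypothetical numbers of observed and latent variables

# Sparse part: chain-structured conditional precision among observed variables.
J_oo = 2.0 * np.eye(p_obs)
for i in range(p_obs - 1):
    J_oo[i, i + 1] = J_oo[i + 1, i] = 0.3

# Coupling between observed and latent variables (two independent columns).
J_oh = 0.2 * np.column_stack([np.ones(p_obs), np.linspace(0.5, 1.5, p_obs)])
J_hh = 2.0 * np.eye(p_hid)

# Joint precision matrix over (observed, latent).
J = np.block([[J_oo, J_oh], [J_oh.T, J_hh]])

# Marginal precision of the observed variables: invert the observed block
# of the joint covariance.
cov = np.linalg.inv(J)
marginal_precision = np.linalg.inv(cov[:p_obs, :p_obs])

# Low-rank part predicted by the Schur complement.
L = J_oh @ np.linalg.inv(J_hh) @ J_oh.T

print(np.allclose(marginal_precision, J_oo - L))
print(np.linalg.matrix_rank(L, tol=1e-10))
```

Here the sparse part `J_oo` has only chain edges, while the marginal precision has no zero entries, which mirrors the abstract's point that the model is conditionally sparse but marginally non-sparse.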
