Zehuan Zhang

Accelerating MRI Uncertainty Estimation with Mask-based Bayesian Neural Network

Jul 07, 2024Abstract:Accurate and reliable Magnetic Resonance Imaging (MRI) analysis is particularly important for adaptive radiotherapy, a recent medical advance capable of improving cancer diagnosis and treatment. Recent studies have shown that IVIM-NET, a deep neural network (DNN), can achieve high accuracy in MRI analysis, indicating the potential of deep learning to enhance diagnostic capabilities in healthcare. However, IVIM-NET does not provide calibrated uncertainty information needed for reliable and trustworthy predictions in healthcare. Moreover, the expensive computation and memory demands of IVIM-NET reduce hardware performance, hindering widespread adoption in realistic scenarios. To address these challenges, this paper proposes an algorithm-hardware co-optimization flow for high-performance and reliable MRI analysis. At the algorithm level, a transformation design flow is introduced to convert IVIM-NET to a mask-based Bayesian Neural Network (BayesNN), facilitating reliable and efficient uncertainty estimation. At the hardware level, we propose an FPGA-based accelerator with several hardware optimizations, such as mask-zero skipping and operation reordering. Experimental results demonstrate that our co-design approach can satisfy the uncertainty requirements of MRI analysis, while achieving 7.5 times and 32.5 times speedup on an Xilinx VU13P FPGA compared to GPU and CPU implementations with reduced power consumption.

Hardware-Aware Neural Dropout Search for Reliable Uncertainty Prediction on FPGA

Jun 23, 2024

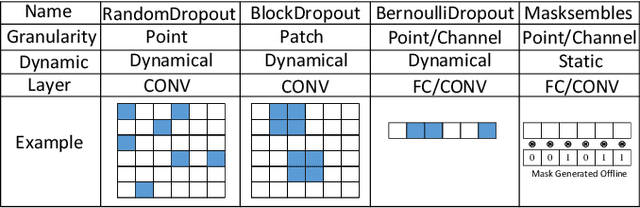

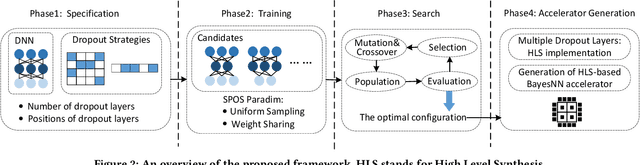

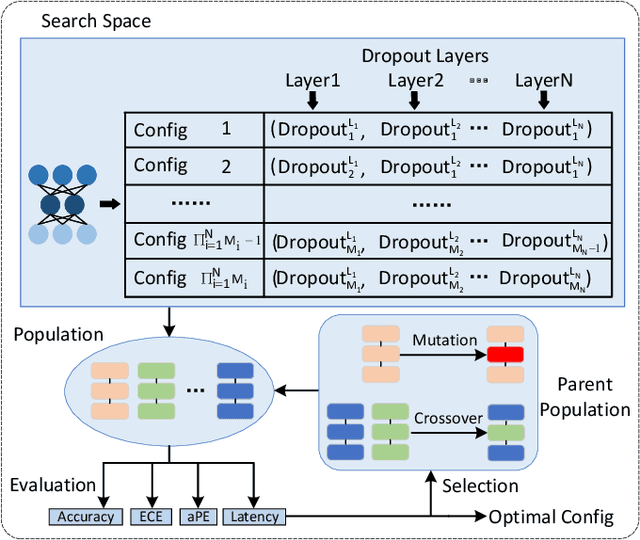

Abstract:The increasing deployment of artificial intelligence (AI) for critical decision-making amplifies the necessity for trustworthy AI, where uncertainty estimation plays a pivotal role in ensuring trustworthiness. Dropout-based Bayesian Neural Networks (BayesNNs) are prominent in this field, offering reliable uncertainty estimates. Despite their effectiveness, existing dropout-based BayesNNs typically employ a uniform dropout design across different layers, leading to suboptimal performance. Moreover, as diverse applications require tailored dropout strategies for optimal performance, manually optimizing dropout configurations for various applications is both error-prone and labor-intensive. To address these challenges, this paper proposes a novel neural dropout search framework that automatically optimizes both the dropout-based BayesNNs and their hardware implementations on FPGA. We leverage one-shot supernet training with an evolutionary algorithm for efficient dropout optimization. A layer-wise dropout search space is introduced to enable the automatic design of dropout-based BayesNNs with heterogeneous dropout configurations. Extensive experiments demonstrate that our proposed framework can effectively find design configurations on the Pareto frontier. Compared to manually-designed dropout-based BayesNNs on GPU, our search approach produces FPGA designs that can achieve up to 33X higher energy efficiency. Compared to state-of-the-art FPGA designs of BayesNN, the solutions from our approach can achieve higher algorithmic performance and energy efficiency.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge