Zachary C. Steinert-Threlkeld

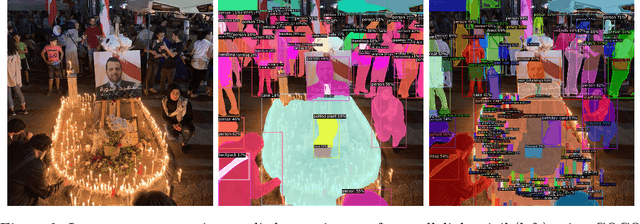

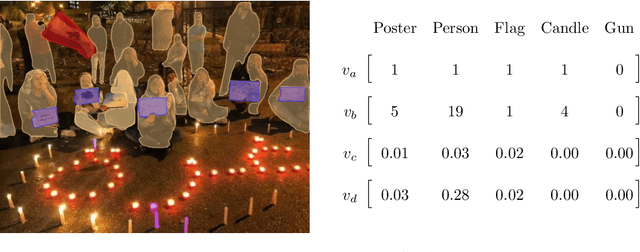

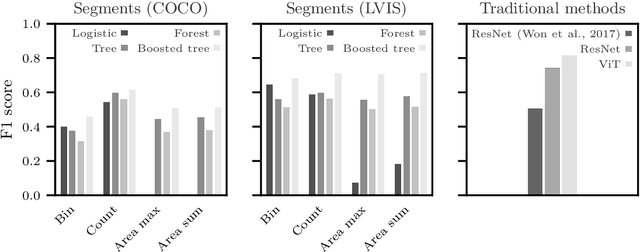

Improving Computer Vision Interpretability: Transparent Two-level Classification for Complex Scenes

Jul 04, 2024

Abstract: Treating images as data has become increasingly popular in political science. While existing classifiers for images reach high levels of accuracy, it is difficult to systematically assess the visual features on which they base their classification. This paper presents a two-level classification method that addresses this transparency problem. In the first stage, an image segmenter detects the objects present in the image and a feature vector is created from those objects. In the second stage, this feature vector is used as input for standard machine learning classifiers to discriminate between images. We apply this method to a new dataset of more than 140,000 images to detect which ones display political protest. This analysis demonstrates three advantages of this paper's approach. First, identifying objects in images improves transparency by providing human-understandable labels for the objects shown in an image. Second, knowing these objects enables analysis of which objects distinguish protest images from non-protest ones. Third, comparing the importance of objects across countries reveals how protest behavior varies. These insights are not available using conventional computer vision classifiers and provide new opportunities for comparative research.
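The two-stage idea in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the `detections` lists stand in for the output of an image segmenter, the labels are toy data, and the small logistic regression is only one possible choice of "standard machine learning classifier". All function and variable names are hypothetical.

```python
from collections import Counter
import math

# Hypothetical first-stage output: object labels a segmenter reported per image.
# In practice these would come from an image segmentation model.
detections = [
    ["person", "person", "flag", "banner"],
    ["person", "banner", "banner", "road"],
    ["car", "road", "tree"],
    ["tree", "building", "car"],
]
labels = [1, 1, 0, 0]  # toy labels: 1 = protest, 0 = non-protest


def build_vocabulary(detections):
    """Sorted list of every object label seen in any image."""
    return sorted({obj for image in detections for obj in image})


def to_feature_vector(image_objects, vocabulary):
    """Count how often each vocabulary object appears in one image."""
    counts = Counter(image_objects)
    return [counts[obj] for obj in vocabulary]


def train_logistic_regression(X, y, epochs=500, lr=0.1):
    """Second stage (assumed choice): batch gradient descent on the logistic loss."""
    weights = [0.0] * len(X[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for features, target in zip(X, y):
            z = bias + sum(w * f for w, f in zip(weights, features))
            error = 1.0 / (1.0 + math.exp(-z)) - target
            for j, f in enumerate(features):
                grad_w[j] += error * f
            grad_b += error
        weights = [w - lr * g / len(X) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(X)
    return weights, bias


def predict(features, weights, bias):
    """Return 1 (protest) if the linear score is positive, else 0."""
    z = bias + sum(w * f for w, f in zip(weights, features))
    return 1 if z > 0 else 0


vocabulary = build_vocabulary(detections)
X = [to_feature_vector(image, vocabulary) for image in detections]
weights, bias = train_logistic_regression(X, labels)

# Each weight belongs to a named object, so the classifier can be inspected directly.
for obj, w in sorted(zip(vocabulary, weights), key=lambda pair: -pair[1]):
    print(f"{obj:10s} {w:+.3f}")
```

Because every feature is a named object count, the learned weights can be read directly as a rough indication of which objects push an image toward the protest class in this toy setting.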

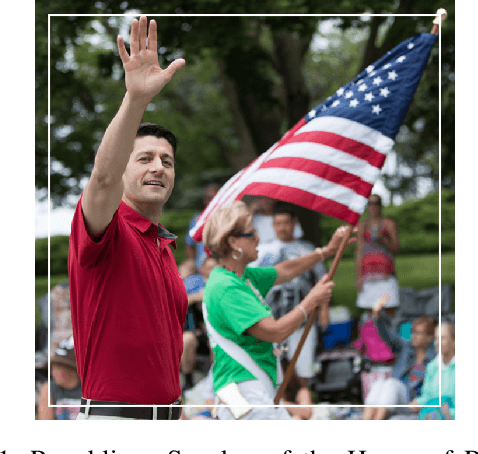

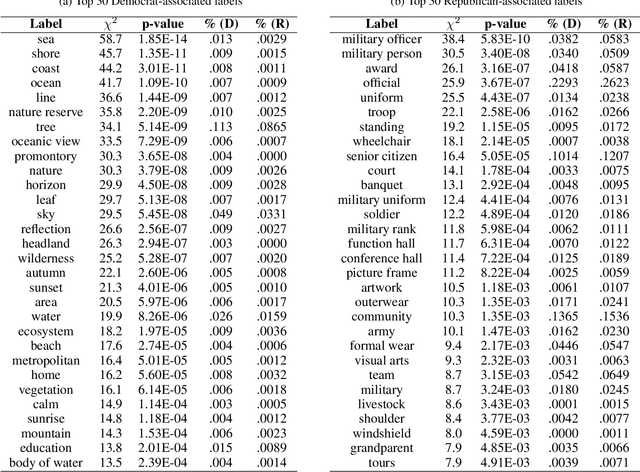

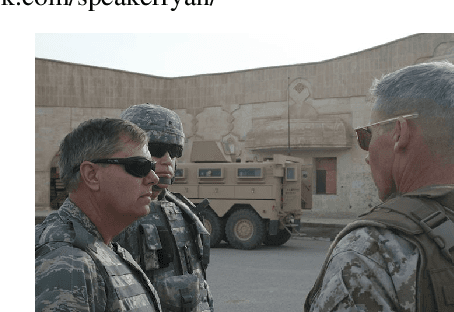

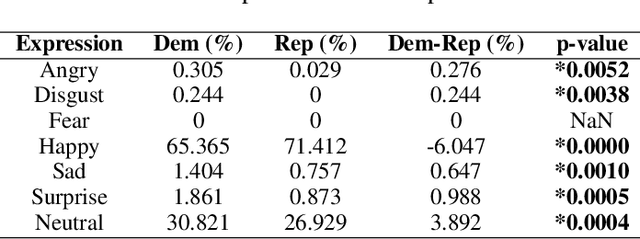

Understanding the Political Ideology of Legislators from Social Media Images

Jul 22, 2019

Abstract: In this paper, we seek to understand how politicians use images to express ideological rhetoric through Facebook images posted by members of the U.S. House and Senate. In the era of social media, politics has become saturated with imagery, a potent and emotionally salient form of political rhetoric which has been used by politicians and political organizations to influence public sentiment and voting behavior for well over a century. To date, however, little is known about how images are used as political rhetoric. Using deep learning techniques to automatically predict Republican or Democratic party affiliation solely from the Facebook photographs of the members of the 114th U.S. Congress, we demonstrate that predicted class probabilities from our model function as an accurate proxy of the political ideology of images along a left-right (liberal-conservative) dimension. After controlling for the gender and race of politicians, our method achieves an accuracy of 59.28% from single photographs and 82.35% when aggregating scores from multiple photographs (up to 150) of the same person. To better understand image content distinguishing liberal from conservative images, we also perform in-depth content analyses of the photographs. Our findings suggest that conservatives tend to use more images supporting status quo political institutions and hierarchy maintenance, featuring individuals from dominant social groups, and displaying greater happiness than liberals.
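The gain from aggregating scores across a person's photographs can be sketched as follows. The abstract does not state how scores are combined, so averaging per-photo probabilities is an assumption here, and the identifiers, the 0.5 threshold, and the toy probabilities are all hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical per-photo model outputs:
# (politician id, predicted probability that the photo is "Republican").
photo_scores = [
    ("politician_a", 0.62), ("politician_a", 0.48), ("politician_a", 0.71),
    ("politician_b", 0.35), ("politician_b", 0.55), ("politician_b", 0.30),
]


def aggregate_by_person(photo_scores, max_photos=150):
    """Average per-photo probabilities per person, using at most max_photos each.

    Averaging is an assumed aggregation rule, not one confirmed by the abstract.
    """
    grouped = defaultdict(list)
    for person, prob in photo_scores:
        if len(grouped[person]) < max_photos:
            grouped[person].append(prob)
    return {person: mean(probs) for person, probs in grouped.items()}


person_scores = aggregate_by_person(photo_scores)
predicted_party = {
    person: ("Republican" if score > 0.5 else "Democratic")
    for person, score in person_scores.items()
}
print(person_scores)
print(predicted_party)
```

In this toy data each person has one photo that falls on the wrong side of the threshold when taken alone, while the averaged score does not, which is the intuition behind the higher person-level accuracy.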

Image as Data: Automated Visual Content Analysis for Political Science

Oct 03, 2018

Abstract: Image data provide unique information about political events, actors, and their interactions which is difficult to measure from or not available in text data. This article introduces a new class of automated methods based on computer vision and deep learning which can automatically analyze visual content data. Scholars have already recognized the importance of visual data and a variety of large visual datasets have become available. The lack of scalable analytic methods, however, has prevented scholars from incorporating large-scale image data in political analysis. This article aims to offer an in-depth overview of automated methods for visual content analysis and to explain their usage and implementation. We further elaborate on how these methods and results can be validated and interpreted. We then discuss how these methods can contribute to the study of political communication, identity and politics, development, and conflict, by enabling a new set of research questions at scale.

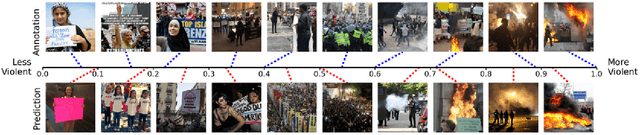

Protest Activity Detection and Perceived Violence Estimation from Social Media Images

Sep 18, 2017

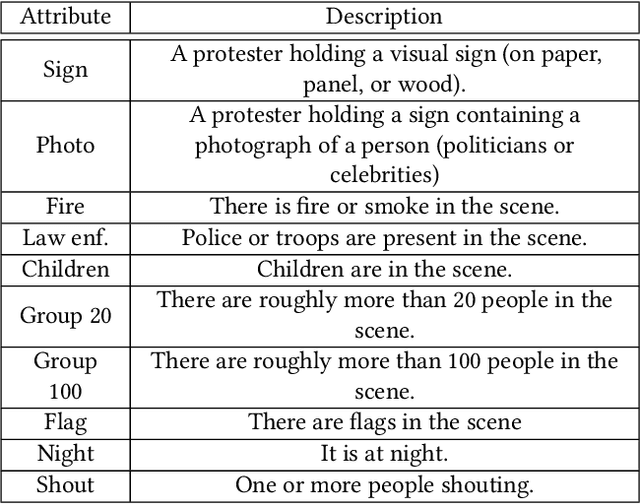

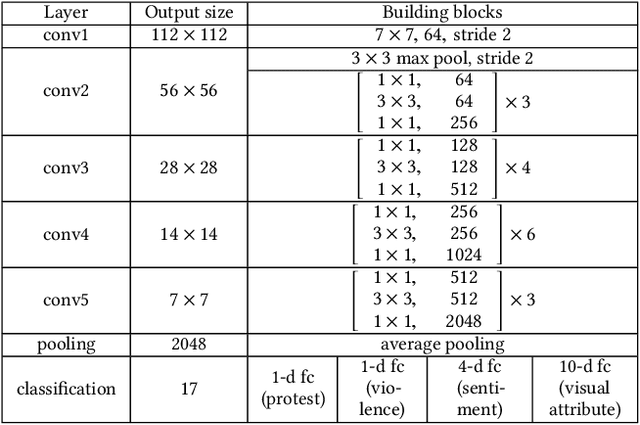

Abstract: We develop a novel visual model which can recognize protesters, describe their activities by visual attributes and estimate the level of perceived violence in an image. Studies of social media and protests use natural language processing to track how individuals use hashtags and links, often with a focus on those items' diffusion. These approaches, however, may not be effective in fully characterizing actual real-world protests (e.g., violent or peaceful) or estimating the demographics of participants (e.g., age, gender, and race) and their emotions. Our system characterizes protests along these dimensions. We have collected geotagged tweets and their images from 2013-2017 and analyzed multiple major protest events in that period. A multi-task convolutional neural network is employed to automatically classify the presence of protesters in an image and predict its visual attributes, perceived violence and exhibited emotions. We also release the UCLA Protest Image Dataset, our novel dataset of 40,764 images (11,659 protest images and hard negatives) with various annotations of visual attributes and sentiments. Using this dataset, we train our model and demonstrate its effectiveness. We also present experimental results from various analyses of geotagged image data in several prevalent protest events. Our dataset will be made accessible at https://www.sscnet.ucla.edu/comm/jjoo/mm-protest/.
