Z. Chen

A Strategy Transfer and Decision Support Approach for Epidemic Control in Experience Shortage Scenarios

Apr 10, 2024

Abstract:Epidemic outbreaks can cause critical health concerns and severe global economic crises. For countries or regions with new infectious disease outbreaks, it is essential to generate preventive strategies by learning lessons from others with similar risk profiles. A Strategy Transfer and Decision Support Approach (STDSA) is proposed based on profile similarity evaluation. The method has four steps: (1) The similarity evaluation indicators are determined from three dimensions, i.e., the Basis of National Epidemic Prevention & Control, Social Resilience, and Infection Situation. (2) The data related to the indicators are collected and preprocessed. (3) The first round of screening on the preprocessed dataset is conducted through an improved collaborative filtering algorithm to calculate the preliminary similarity result from the perspective of the infection situation. (4) Finally, the K-Means model is used for the second round of screening to obtain the final similarity values. The approach will be applied to decision-making support in the context of COVID-19. Our results demonstrate that the recommendations generated by the STDSA model are more accurate and align better with the actual situation than those produced by pure K-means models. This study will provide new insights into preventing and controlling epidemics in regions that lack experience.
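
The two screening rounds in steps (3) and (4) can be illustrated with a minimal sketch. It is not the authors' implementation: plain cosine similarity stands in for the "improved collaborative filtering algorithm", the k-means routine is a basic Lloyd iteration, and the region names, indicator values, and function names (`first_round`, `second_round`, `kmeans_labels`) are all hypothetical toy choices.

```python
import math
import random


def cosine(u, v):
    """Cosine similarity between two equal-length indicator vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def first_round(target, infection, top_n):
    """Round 1 (assumed form): rank regions by infection-situation similarity."""
    scores = {name: cosine(infection[target], vec)
              for name, vec in infection.items() if name != target}
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


def kmeans_labels(points, k, iters=50, seed=0):
    """Basic Lloyd's k-means; returns one cluster label per point."""
    centers = random.Random(seed).sample(points, k)

    def nearest(p):
        return min(range(k), key=lambda j: math.dist(p, centers[j]))

    for _ in range(iters):
        labels = [nearest(p) for p in points]
        updated = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            # Keep the old center if a cluster ends up empty.
            updated.append(tuple(sum(c) / len(members) for c in zip(*members))
                           if members else centers[j])
        if updated == centers:
            break
        centers = updated
    return [nearest(p) for p in points]


def second_round(target, candidates, profile, k=2):
    """Round 2: keep candidates that fall in the target's k-means cluster."""
    names = [target] + candidates
    labels = kmeans_labels([tuple(profile[n]) for n in names], k)
    return [n for n, lab in zip(names[1:], labels[1:]) if lab == labels[0]]


# Hypothetical toy data: "T" is the region that lacks experience.
infection = {"T": (1.0, 2.0), "A": (1.1, 2.1), "B": (2.0, 4.1),
             "C": (5.0, 0.5), "D": (0.9, 2.2)}
# Hypothetical (prevention basis, social resilience) scores in [0, 1].
profile = {"T": (0.2, 0.3), "A": (0.25, 0.35), "B": (0.9, 0.8), "D": (0.3, 0.2)}

shortlist = first_round("T", infection, top_n=3)
final = second_round("T", shortlist, profile)
print(shortlist, final)
```

In this toy case region C is dropped in the first round because its infection profile points in a different direction, and region B is dropped in the second round because its prevention and resilience scores place it in a different cluster from T.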

CaveSeg: Deep Semantic Segmentation and Scene Parsing for Autonomous Underwater Cave Exploration

Sep 28, 2023

Abstract:In this paper, we present CaveSeg - the first visual learning pipeline for semantic segmentation and scene parsing for AUV navigation inside underwater caves. We address the problem of scarce annotated training data by preparing a comprehensive dataset for semantic segmentation of underwater cave scenes. It contains pixel annotations for important navigation markers (e.g. caveline, arrows), obstacles (e.g. ground plain and overhead layers), scuba divers, and open areas for servoing. Through comprehensive benchmark analyses on cave systems in the USA, Mexico, and Spain, we demonstrate that robust deep visual models can be developed based on CaveSeg for fast semantic scene parsing of underwater cave environments. In particular, we formulate a novel transformer-based model that is computationally light and offers near real-time execution in addition to achieving state-of-the-art performance. Finally, we explore the design choices and implications of semantic segmentation for visual servoing by AUVs inside underwater caves. The proposed model and benchmark dataset open up promising opportunities for future research in autonomous underwater cave exploration and mapping.

Observation of high-energy neutrinos from the Galactic plane

Jul 10, 2023

Abstract:The origin of high-energy cosmic rays, atomic nuclei that continuously impact Earth's atmosphere, has been a mystery for over a century. Due to deflection in interstellar magnetic fields, cosmic rays from the Milky Way arrive at Earth from random directions. However, near their sources and during propagation, cosmic rays interact with matter and produce high-energy neutrinos. We search for neutrino emission using machine learning techniques applied to ten years of data from the IceCube Neutrino Observatory. We identify neutrino emission from the Galactic plane at the 4.5$\sigma$ level of significance, by comparing diffuse emission models to a background-only hypothesis. The signal is consistent with modeled diffuse emission from the Galactic plane, but could also arise from a population of unresolved point sources.

* Submitted on May 12th, 2022; Accepted on May 4th, 2023

Language Models are Few-shot Learners for Prognostic Prediction

Mar 02, 2023

Abstract:Clinical prediction is an essential task in the healthcare industry. However, the recent success of transformers, on which large language models are built, has not been extended to this domain. In this research, we explore the use of transformers and language models in prognostic prediction for immunotherapy using real-world patients' clinical data and molecular profiles. This paper investigates the potential of transformers to improve clinical prediction compared to conventional machine learning approaches and addresses the challenge of few-shot learning in predicting rare disease areas. The study benchmarks the efficacy of baselines and language models on prognostic prediction across multiple cancer types and investigates the impact of different pretrained language models under few-shot regimes. The results demonstrate significant improvements in accuracy and highlight the potential of NLP in clinical research to improve early detection and intervention for different diseases.

Graph Neural Networks for Low-Energy Event Classification & Reconstruction in IceCube

Sep 07, 2022

Abstract:IceCube, a cubic-kilometer array of optical sensors built to detect atmospheric and astrophysical neutrinos between 1 GeV and 1 PeV, is deployed 1.45 km to 2.45 km below the surface of the ice sheet at the South Pole. The classification and reconstruction of events from the in-ice detectors play a central role in the analysis of data from IceCube. Reconstructing and classifying events is a challenge due to the irregular detector geometry, inhomogeneous scattering and absorption of light in the ice and, below 100 GeV, the relatively low number of signal photons produced per event. To address this challenge, it is possible to represent IceCube events as point cloud graphs and use a Graph Neural Network (GNN) as the classification and reconstruction method. The GNN is capable of distinguishing neutrino events from cosmic-ray backgrounds, classifying different neutrino event types, and reconstructing the deposited energy, direction and interaction vertex. Based on simulation, we provide a comparison in the 1-100 GeV energy range to the state-of-the-art maximum likelihood techniques used in current IceCube analyses, including the effects of known systematic uncertainties. For neutrino event classification, the GNN increases the signal efficiency by 18% at a fixed false positive rate (FPR), compared to current IceCube methods. Alternatively, the GNN offers a reduction of the FPR by over a factor of 8 (to below half a percent) at a fixed signal efficiency. For the reconstruction of energy, direction, and interaction vertex, the resolution improves by an average of 13%-20% compared to current maximum likelihood techniques in the energy range of 1-30 GeV. The GNN, when run on a GPU, is capable of processing IceCube events at a rate nearly double the median IceCube trigger rate of 2.7 kHz, which opens the possibility of using low energy neutrinos in online searches for transient events.
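
The "signal efficiency at a fixed FPR" comparison quoted above can be made concrete with a small sketch. It is a generic illustration of the metric, not IceCube or GNN code; the function name and the toy classifier scores are hypothetical, and it assumes that higher scores mean "more signal-like" and that background scores have no ties at the cut.

```python
def signal_efficiency_at_fpr(scores, labels, target_fpr):
    """Return (threshold, signal_efficiency, achieved_fpr).

    Events pass the cut when score > threshold. The threshold is the
    loosest one that lets at most target_fpr of the background through.
    labels: 1 for signal (neutrino), 0 for background.
    """
    background = sorted((s for s, y in zip(scores, labels) if y == 0),
                        reverse=True)
    signal = [s for s, y in zip(scores, labels) if y == 1]
    # Number of background events allowed to pass the cut.
    allowed = int(target_fpr * len(background))
    threshold = background[allowed] if allowed < len(background) else float("-inf")
    achieved_fpr = sum(s > threshold for s in background) / len(background)
    efficiency = sum(s > threshold for s in signal) / len(signal)
    return threshold, efficiency, achieved_fpr


# Hypothetical toy scores: 4 signal events followed by 8 background events.
scores = [0.95, 0.7, 0.65, 0.5, 0.9, 0.8, 0.4, 0.3, 0.2, 0.1, 0.05, 0.6]
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0]

threshold, efficiency, fpr = signal_efficiency_at_fpr(scores, labels, 0.25)
print(threshold, efficiency, fpr)
```

Two classifiers are then compared by evaluating each at the same `target_fpr` and taking the ratio of their efficiencies, or conversely by fixing the efficiency and comparing the FPR each one needs to reach it.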

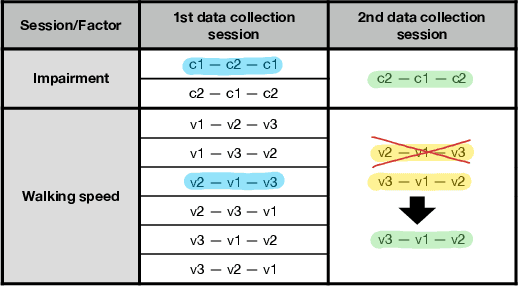

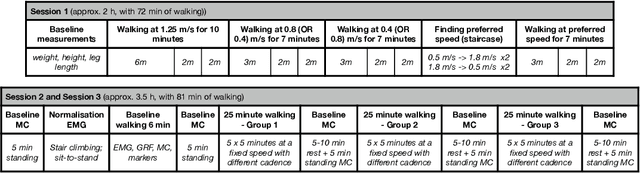

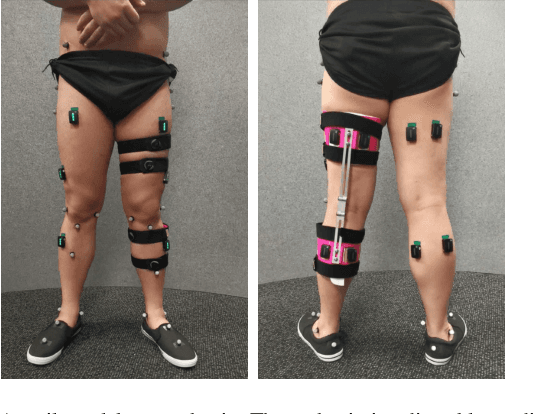

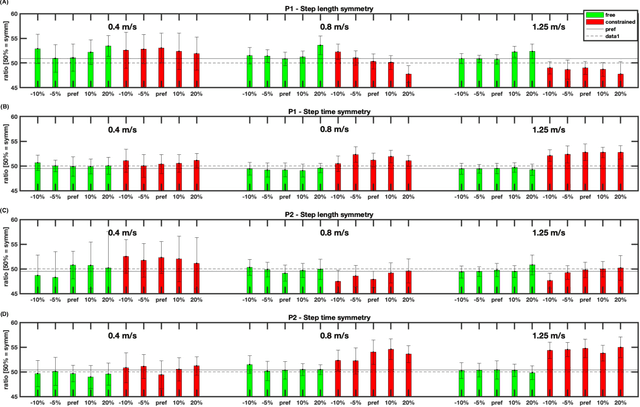

Varying Joint Patterns and Compensatory Strategies Can Lead to the Same Functional Gait Outcomes: A Case Study

Jun 27, 2022

Abstract:This paper analyses joint-space walking mechanisms and redundancies in delivering functional gait outcomes. Multiple biomechanical measures are analysed for two healthy male adults who participated in a multi-factorial study and walked during three sessions. Both participants employed varying intra- and inter-personal compensatory strategies (e.g., vaulting, hip hiking) across walking conditions and exhibited notable gait pattern alterations while keeping task-space (functional) gait parameters invariant. They also preferred various levels of asymmetric step length but kept their symmetric step time consistent and cadence-invariant during free walking. The results demonstrate the importance of an individualised approach and the need for a paradigm shift from functional (task-space) to joint-space gait analysis in attending to (a)typical gaits and delivering human-centred human-robot interaction.

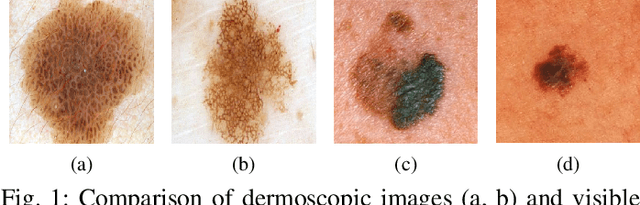

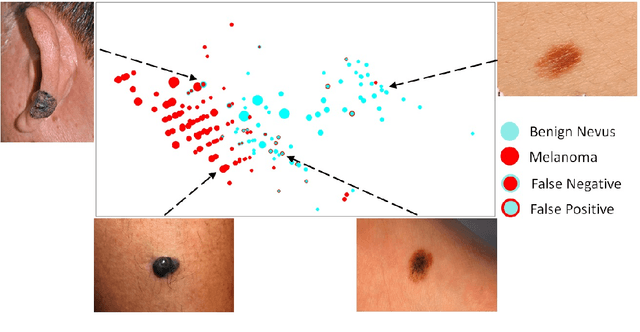

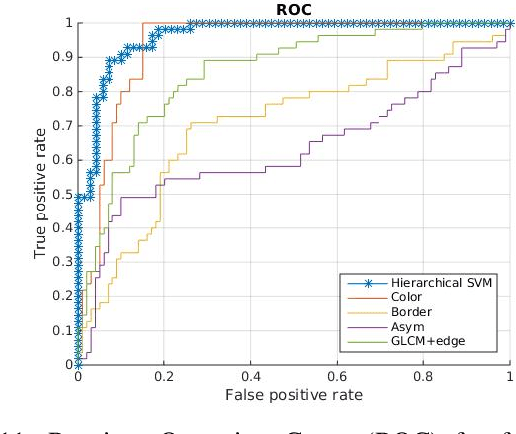

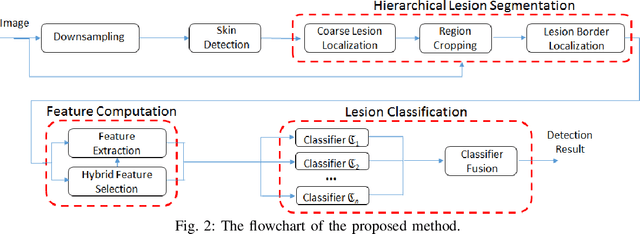

Accessible Melanoma Detection using Smartphones and Mobile Image Analysis

Feb 26, 2018

Abstract:We investigate the design of an entire mobile imaging system for early detection of melanoma. Different from previous work, we focus on smartphone-captured visible light images. Our design addresses two major challenges. First, images acquired using a smartphone under loosely-controlled environmental conditions may be subject to various distortions, and this makes melanoma detection more difficult. Second, processing performed on a smartphone is subject to stringent computation and memory constraints. In our work, we propose a detection system that is optimized to run entirely on the resource-constrained smartphone. Our system intends to localize the skin lesion by combining a lightweight method for skin detection with a hierarchical segmentation approach using two fast segmentation methods. Moreover, we study an extensive set of image features and propose new numerical features to characterize a skin lesion. Furthermore, we propose an improved feature selection algorithm to determine a small set of discriminative features used by the final lightweight system. In addition, we study the human-computer interface (HCI) design to understand the usability and acceptance issues of the proposed system.

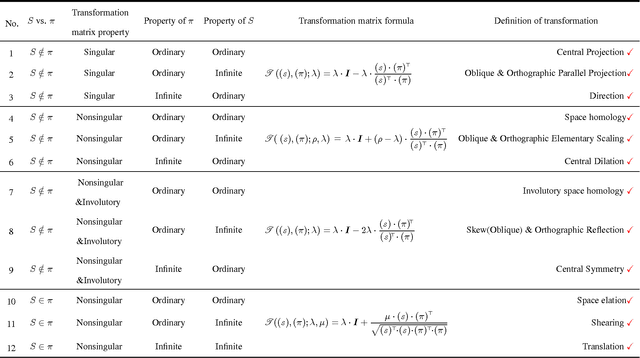

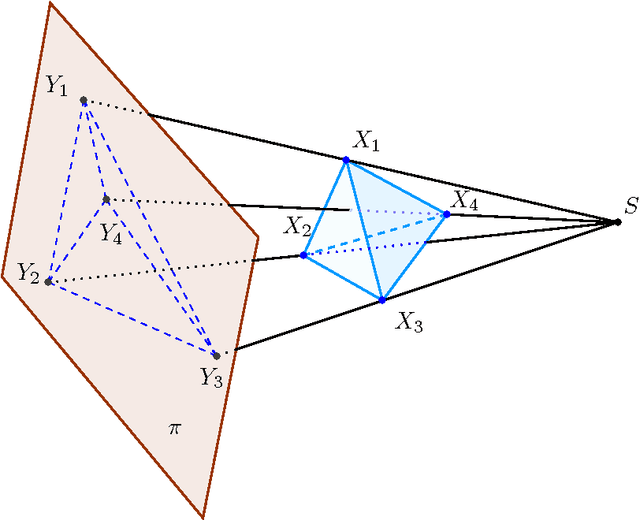

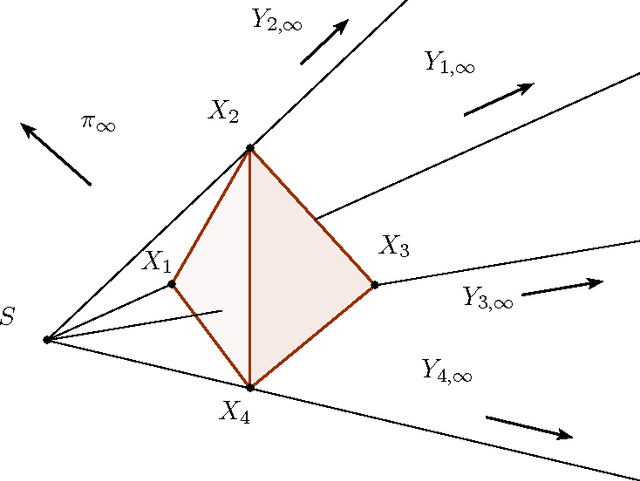

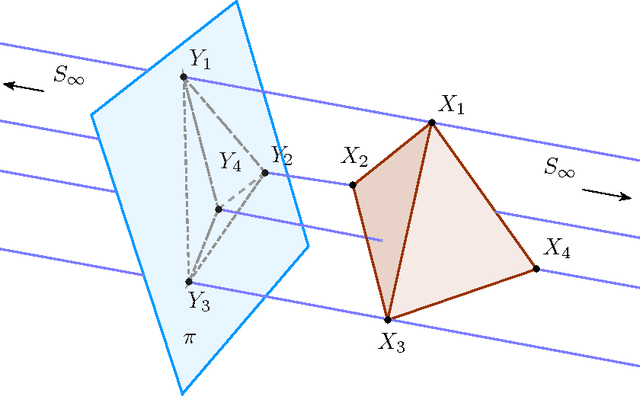

A Unified Framework of Elementary Geometric Transformation Representation

Jul 01, 2014

Abstract:As an extension of projective homology, stereohomology is proposed via an extension of Desargues' theorem and the extended Desargues configuration. Geometric transformations such as reflection, translation, central symmetry, central projection, parallel projection, shearing, central dilation, scaling, and so on are all included in stereohomology and represented as Householder-Chen elementary matrices. Hence all these geometric transformations are called elementary. This makes it possible to represent these elementary geometric transformations as homogeneous square matrices independent of a particular choice of coordinate system.
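
As a rough illustration of how one matrix form can cover several of these transformations, the sketch below uses Householder's general elementary matrix $E(u, v; \sigma) = I - \sigma u v^{T}$ in 2D homogeneous coordinates. This is an assumption about the underlying form, not the paper's Householder-Chen definition, and the helper names `elementary` and `apply` are hypothetical.

```python
def elementary(u, v, sigma):
    """Build E = I - sigma * u * v^T as a list of rows."""
    n = len(u)
    return [[(1.0 if i == j else 0.0) - sigma * u[i] * v[j] for j in range(n)]
            for i in range(n)]


def apply(matrix, x):
    """Multiply a square matrix by a column vector."""
    return [sum(a * b for a, b in zip(row, x)) for row in matrix]


# Translation by (3, -2): I + t * e3^T, i.e. sigma = -1, u = t, v = e3.
translate = elementary((3.0, -2.0, 0.0), (0.0, 0.0, 1.0), -1.0)

# Reflection across the line x = 0: I - 2 * n * n^T with unit normal n.
reflect = elementary((1.0, 0.0, 0.0), (1.0, 0.0, 0.0), 2.0)

point = [1.0, 1.0, 1.0]  # the 2D point (1, 1) in homogeneous coordinates
print(apply(translate, point))
print(apply(reflect, [2.0, 5.0, 1.0]))
```

Both matrices differ from the identity by a rank-one term, which is the sense in which translation and reflection share a single representation here.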

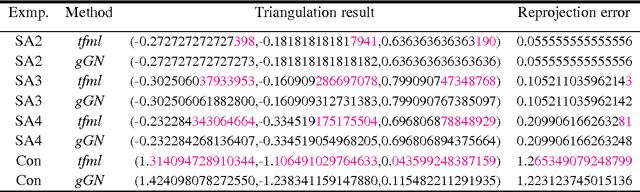

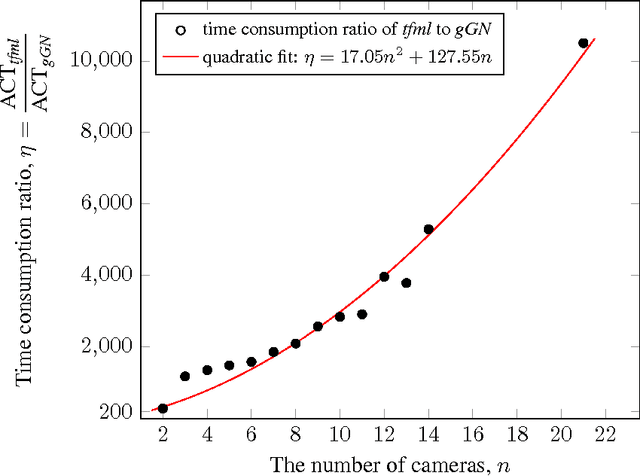

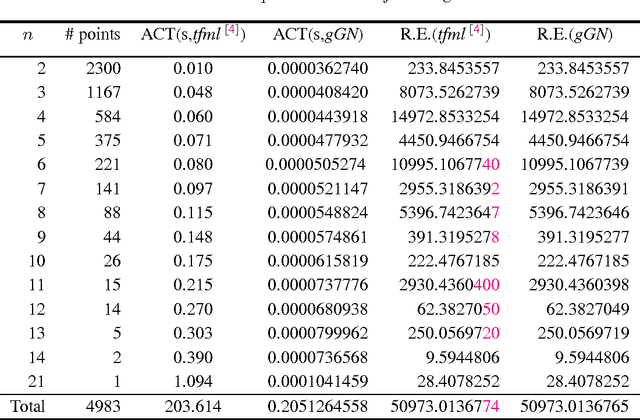

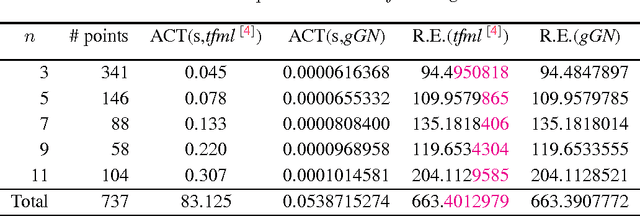

Newton-Type Iterative Solver for Multiple View $L2$ Triangulation

Jun 30, 2014

Abstract:In this note, we show that the L2 optimal solutions to most real multiple view L2 triangulation problems can be efficiently obtained by two-stage Newton-like iterative methods, while the difficulty of such problems mainly lies in how to verify the L2 optimality. Such a working two-stage bundle adjustment approach features: first, the algorithm is initialized by symmedian point triangulation, a multiple-view generalization of the mid-point method; second, a symbolic-numeric method is employed to compute derivatives accurately; third, a globalizing strategy such as line search or trust region is smoothly applied to the underlying iteration, which assures algorithm robustness in general cases. Numerical comparison with the tfml method shows that the local minimizers obtained by the two-stage iterative bundle adjustment approach proposed here are also the L2 optimal solutions to all the calibrated data sets available online from the Oxford visual geometry group. Extensive numerical experiments indicate the bundle adjustment approach solves more than 99% of the real triangulation problems optimally. An IEEE 754 double precision C++ implementation shows that it takes only about 0.205 seconds to compute all the 4983 points in the Oxford dinosaur data set via Gauss-Newton iteration hybrid with a line search strategy on a computer with a 3.4GHz Intel i7 CPU.
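
For orientation, the classic two-view mid-point method that the initialization generalizes can be sketched as follows. This is only the textbook two-ray version, not the symmedian point triangulation or the Newton-type refinement described above; the function name and the toy camera centers and ray directions are hypothetical.

```python
def midpoint_triangulate(c1, d1, c2, d2):
    """Mid-point of the shortest segment between rays c1 + s*d1 and c2 + t*d2.

    c1, c2 are camera centers and d1, d2 are viewing-ray directions (3-vectors).
    Raises ValueError when the rays are parallel.
    """
    def dot(p, q):
        return sum(a * b for a, b in zip(p, q))

    w = [a - b for a, b in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return [(x + y) / 2.0 for x, y in zip(p1, p2)]


# Two rays that meet exactly at (0, 0, 1).
exact = midpoint_triangulate((-1.0, 0.0, 0.0), (1.0, 0.0, 1.0),
                             (1.0, 0.0, 0.0), (-1.0, 0.0, 1.0))

# Shift the second camera by 0.2 in y so the rays become skew.
skew = midpoint_triangulate((-1.0, 0.0, 0.0), (1.0, 0.0, 1.0),
                            (1.0, 0.2, 0.0), (-1.0, 0.0, 1.0))
print(exact, skew)
```

The mid-point minimizes a 3D distance to the rays rather than the image-plane reprojection error, which is why it serves as a starting point for an iterative L2 refinement rather than as the final estimate.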
