Y. Zhou

Interpreting Training Aspects of Deep-Learned Error-Correcting Codes

May 07, 2023

Abstract: As new deep-learned error-correcting codes continue to be introduced, it is important to develop tools to interpret the designed codes and understand the training process. Prior work focusing on the deep-learned TurboAE has both interpreted the learned encoders post-hoc by mapping these onto nearby "interpretable" encoders, and experimentally evaluated the performance of these interpretable encoders with various decoders. Here we look at developing tools for interpreting the training process for deep-learned error-correcting codes, focusing on: 1) using the Goldreich-Levin algorithm to quickly interpret the learned encoder; 2) using Fourier coefficients as a tool for understanding the training dynamics and the loss landscape; 3) reformulating the training loss, the binary cross entropy, by relating it to encoder and decoder parameters, and the bit error rate (BER); 4) using these insights to formulate and study a new training procedure. All tools are demonstrated on TurboAE, but are applicable to other deep-learned forward error correcting codes (without feedback).

Accessible Melanoma Detection using Smartphones and Mobile Image Analysis

Feb 26, 2018

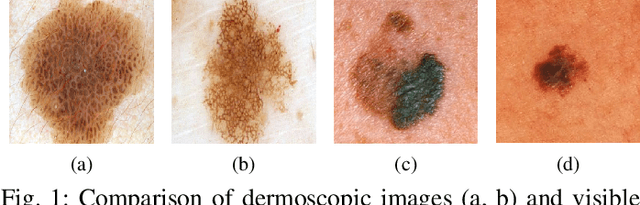

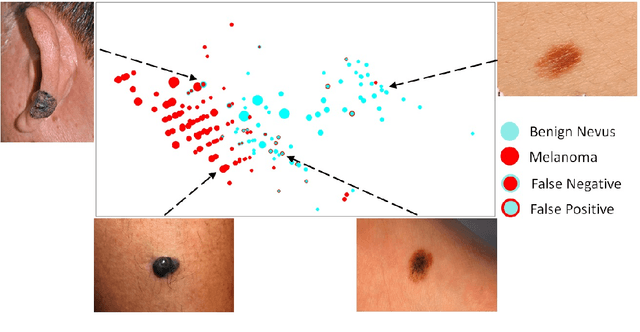

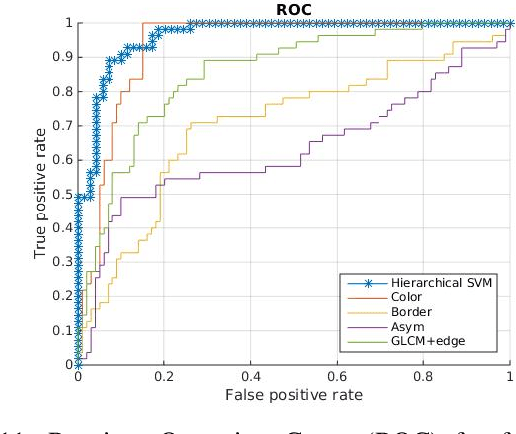

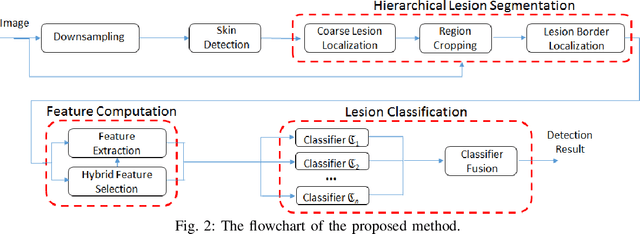

Abstract: We investigate the design of an entire mobile imaging system for early detection of melanoma. Unlike previous work, we focus on smartphone-captured visible light images. Our design addresses two major challenges. First, images acquired using a smartphone under loosely controlled environmental conditions may be subject to various distortions, which makes melanoma detection more difficult. Second, processing performed on a smartphone is subject to stringent computation and memory constraints. In our work, we propose a detection system that is optimized to run entirely on the resource-constrained smartphone. Our system aims to localize the skin lesion by combining a lightweight method for skin detection with a hierarchical segmentation approach using two fast segmentation methods. Moreover, we study an extensive set of image features and propose new numerical features to characterize a skin lesion. Furthermore, we propose an improved feature selection algorithm to determine a small set of discriminative features used by the final lightweight system. In addition, we study the human-computer interface (HCI) design to understand the usability and acceptance issues of the proposed system.
