Yusong Zhao

Scalable Multi-Task Gaussian Processes with Neural Embedding of Coregionalization

Sep 20, 2021

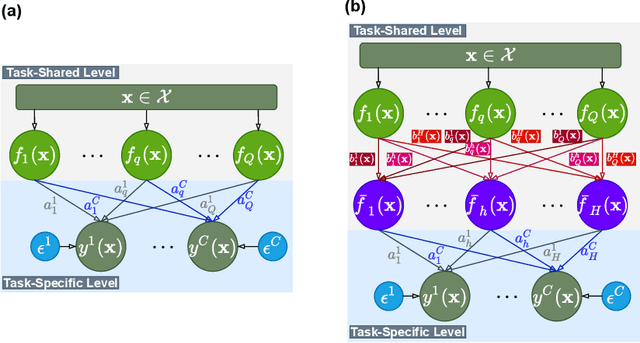

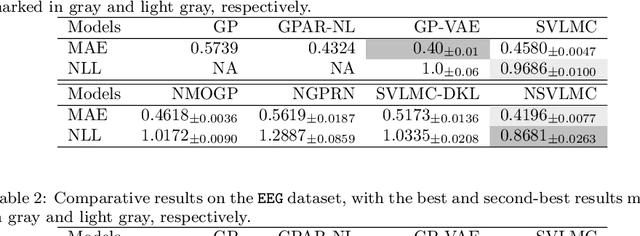

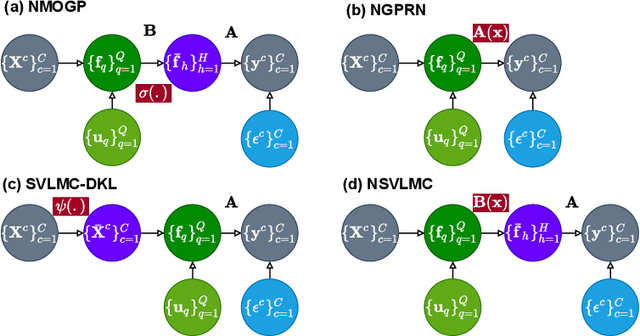

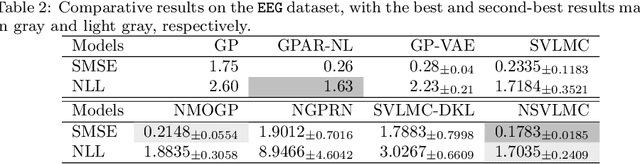

Abstract: Multi-task regression exploits the similarity between tasks to transfer knowledge across related tasks and improve performance. Applying Gaussian processes (GPs) in this scenario yields a non-parametric yet informative Bayesian multi-task regression paradigm. A multi-task GP (MTGP) provides not only the prediction mean but also the associated prediction variance to quantify uncertainty, and has thus gained popularity in various scenarios. The linear model of coregionalization (LMC) is a well-known MTGP paradigm that exploits the dependency between tasks through a linear combination of several independent and diverse GPs. The LMC, however, suffers from high model complexity and limited model capability when handling complicated multi-task cases. To this end, we develop the neural embedding of coregionalization, which transforms the latent GPs into a high-dimensional latent space to induce rich yet diverse behaviors. Furthermore, we use advanced variational inference together with sparse approximation to devise a tight and compact evidence lower bound (ELBO) for higher-quality scalable model inference. Extensive numerical experiments have been conducted to verify the higher prediction quality and better generalization of our model, named NSVLMC, on various real-world multi-task datasets and on the cross-fluid modeling of an unsteady fluidized bed.
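To make the LMC construction mentioned in the abstract concrete, the following is a minimal NumPy sketch of the standard, textbook LMC prior: each task function is a linear combination of a few independent latent GPs. It is not the NSVLMC model itself and includes neither the neural embedding nor the sparse variational inference. The function names, the RBF kernel choice, the mixing matrix, and the lengthscales are all illustrative assumptions rather than details taken from the paper.

```python
import numpy as np


def rbf_kernel(x, lengthscale):
    """Squared-exponential kernel matrix for 1-D inputs x (assumed kernel)."""
    diff = x[:, None] - x[None, :]
    return np.exp(-0.5 * (diff / lengthscale) ** 2)


def sample_lmc(x, mixing, lengthscales, rng, jitter=1e-6):
    """Draw one joint sample of T task functions from an LMC prior.

    mixing has shape (T, Q): row t holds the weights task t puts on
    each of the Q independent latent GPs.
    """
    n = x.shape[0]
    latent = np.empty((len(lengthscales), n))
    for q, ls in enumerate(lengthscales):
        # Each latent GP has its own kernel, hence "independent and diverse".
        K = rbf_kernel(x, ls) + jitter * np.eye(n)
        latent[q] = np.linalg.cholesky(K) @ rng.standard_normal(n)
    # Linear combination of the latent GPs gives the correlated task outputs.
    return mixing @ latent  # shape (T, n)


def lmc_covariance(x, mixing, lengthscales):
    """Prior covariance over all tasks (task-major ordering):
    sum over q of kron(a_q a_q^T, K_q), where a_q is column q of mixing.
    """
    T, n = mixing.shape[0], x.shape[0]
    cov = np.zeros((T * n, T * n))
    for q, ls in enumerate(lengthscales):
        a_q = mixing[:, q:q + 1]
        cov += np.kron(a_q @ a_q.T, rbf_kernel(x, ls))
    return cov


# Illustrative usage: 3 tasks built from 2 latent GPs (values are arbitrary).
x = np.linspace(0.0, 1.0, 15)
mixing = np.array([[1.0, 0.5], [0.3, -1.0], [0.0, 2.0]])
lengthscales = [0.2, 1.0]
samples = sample_lmc(x, mixing, lengthscales, np.random.default_rng(0))
cov = lmc_covariance(x, mixing, lengthscales)
```

The full covariance has size (T·n) × (T·n), so exact inference scales cubically in the number of tasks times the number of points. This is one common reason sparse approximations of the kind the abstract mentions are used with multi-task GPs.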
