Yuqin Wang

Learning Smooth Representation for Unsupervised Domain Adaptation

May 26, 2019

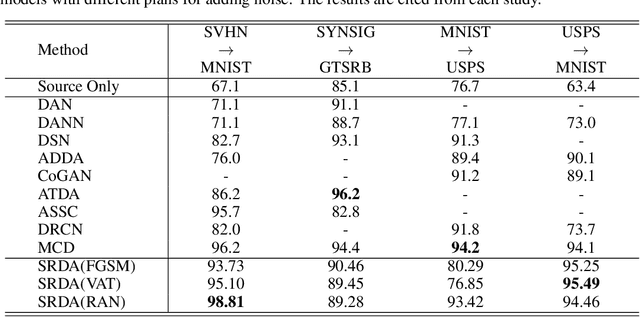

Abstract: In unsupervised domain adaptation, existing methods that exploit the decision boundary have achieved remarkable performance, but they lack an analysis of the relationship between the decision boundary and features. In our work, we propose a new principle: adaptive classifiers and transferable features can be obtained in the target domain by learning smooth representations. We analyze the relationship between the decision boundary and ambiguous target features in terms of smoothness. We then define local smooth discrepancy to measure the smoothness of a sample and to detect sensitive samples, which are easily misclassified. To strengthen smoothness, sensitive samples are corrected in feature space by optimizing local smooth discrepancy. Moreover, we derive a theoretical upper bound on the generalization error. Finally, we evaluate our method on several standard benchmark datasets. Empirical evidence shows that the proposed method is comparable or superior to state-of-the-art methods, and that local smooth discrepancy is a valid metric for evaluating the performance of a domain adaptation method.
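The abstract does not give the formula for local smooth discrepancy (LSD). The sketch below is one plausible reading, offered only for illustration. It assumes LSD is the worst-case KL divergence between a classifier's predictions at a feature `x` and at nearby points `x + r` with `|r| = eps`.

Several pieces are hypothetical rather than taken from the paper:

- the toy linear classifier;
- the random-direction search;
- the threshold `tau` used to flag "sensitive" samples.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(weights, x):
    """Toy linear classifier (hypothetical): one weight row per class."""
    return softmax([sum(w * xi for w, xi in zip(row, x)) for row in weights])


def kl_divergence(p, q):
    """KL(p || q) between two discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def local_smooth_discrepancy(weights, x, eps=0.1, n_dirs=8, seed=0):
    """Hypothetical LSD: worst KL between predictions at x and at x + r, |r| = eps."""
    rng = random.Random(seed)
    p = predict(weights, x)
    worst = 0.0
    for _ in range(n_dirs):
        # Draw a random direction and rescale it to length eps.
        d = [rng.gauss(0.0, 1.0) for _ in x]
        norm = math.sqrt(sum(v * v for v in d)) or 1.0
        x_pert = [xi + eps * di / norm for xi, di in zip(x, d)]
        worst = max(worst, kl_divergence(p, predict(weights, x_pert)))
    return worst


def find_sensitive(weights, samples, tau):
    """Indices of samples whose LSD exceeds the (hypothetical) threshold tau."""
    return [i for i, x in enumerate(samples)
            if local_smooth_discrepancy(weights, x) > tau]
```

With `weights = [[1.0, 0.0], [-1.0, 0.0]]`, the decision boundary sits at `x[0] == 0`. A sample at `[0.01, 0.0]` then gets a much larger LSD than one at `[5.0, 0.0]`. This matches the abstract's intuition that samples near the boundary are the ones easily misclassified.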

Virtual Conditional Generative Adversarial Networks

Jan 25, 2019

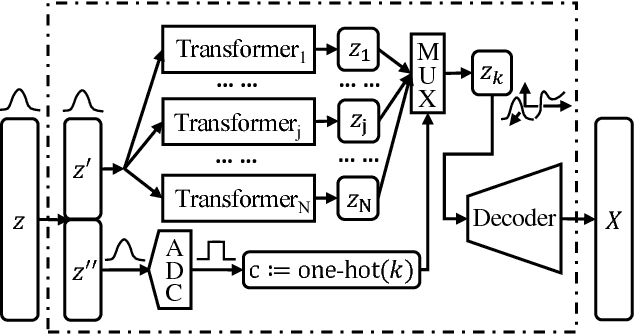

Abstract: When trained on multimodal image datasets, standard Generative Adversarial Networks (GANs) are usually outperformed by class-conditional GANs and ensemble GANs, but conditional GANs are restricted to labeled datasets and ensemble GANs lack efficiency. We propose a novel GAN variant called virtual conditional GAN (vcGAN), which is both an ensemble GAN with multiple generative paths that adds almost zero network parameters and a conditional GAN that can be trained on unlabeled datasets without explicit clustering steps or any objective other than the adversarial loss. Inside the vcGAN generator, a learnable "analog-to-digital converter" (ADC) module maps a slice of the input multivariate Gaussian noise to discrete/digital noise (a virtual label), according to which a selector chooses the corresponding generative path to produce the sample. All generative paths share the same decoder network; in each path, the decoder is fed the concatenation of a different pre-computed amplified one-hot vector and the input Gaussian noise. Extensive experiments on several balanced and imbalanced image datasets demonstrate that vcGAN converges faster and achieves an improved Fréchet Inception Distance (FID). In addition, we show a training byproduct: the ADC in vcGAN learns the categorical probability of each mode, and each generative path generates samples of a specific mode, which enables class-conditional sampling. Code is available at https://github.com/annonnymmouss/vcgan
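The forward pass described above has four steps:

1. A noise slice goes through the ADC.
2. The ADC produces a discrete virtual label.
3. The label selects a generative path.
4. The shared decoder runs on `[amplified one-hot, noise]`.

The minimal sketch below illustrates that pass under stated assumptions. The ADC here is a hypothetical linear scorer over the first `slice_dim` noise entries followed by argmax. The value of `amplification` is also hypothetical. How the ADC is trained through the discrete choice is not shown. The decoder is passed in as any callable.

```python
import random


class VirtualConditionalGenerator:
    """Sketch of vcGAN-style path selection (forward pass only, hypothetical details)."""

    def __init__(self, n_paths, slice_dim, amplification=10.0, seed=0):
        rng = random.Random(seed)
        self.n_paths = n_paths
        self.slice_dim = slice_dim
        # ADC parameters: randomly initialised here; their training is not shown.
        self.adc_weights = [[rng.gauss(0.0, 1.0) for _ in range(slice_dim)]
                            for _ in range(n_paths)]
        self.adc_bias = [0.0] * n_paths
        # One pre-computed amplified one-hot vector per generative path.
        self.codes = [[amplification if j == k else 0.0 for j in range(n_paths)]
                      for k in range(n_paths)]

    def adc(self, z):
        """Map a slice of continuous noise to a discrete virtual label."""
        s = z[:self.slice_dim]
        scores = [sum(w * v for w, v in zip(row, s)) + b
                  for row, b in zip(self.adc_weights, self.adc_bias)]
        return max(range(self.n_paths), key=lambda k: scores[k])

    def generate(self, z, decoder):
        """Select a path by virtual label, then run the shared decoder on [code_k, z]."""
        k = self.adc(z)
        decoder_input = self.codes[k] + list(z)
        return k, decoder(decoder_input)


# Usage: an identity function stands in for the shared decoder network.
gen = VirtualConditionalGenerator(n_paths=3, slice_dim=2)
label, decoder_input = gen.generate([0.5, -1.0, 0.3, 0.7], decoder=lambda v: v)
```

All paths call the same `decoder`, so adding a path only adds one fixed code vector and one ADC row. This is consistent with the abstract's claim of almost zero extra network parameters.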

Unsupervised Domain Adaptation with Adversarial Residual Transform Networks

Apr 25, 2018

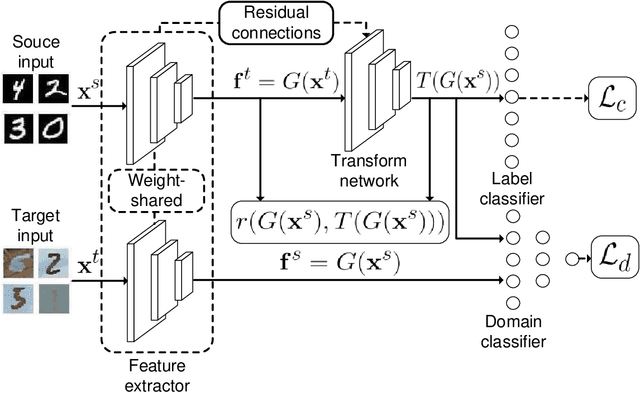

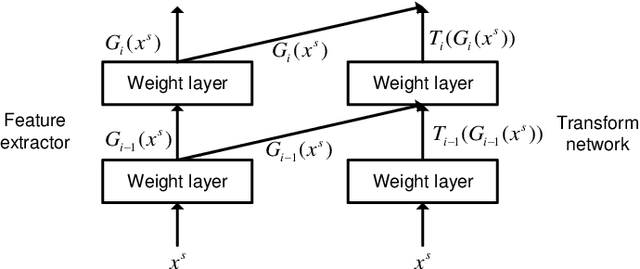

Abstract: Domain adaptation is widely used in learning problems that lack labels. Recent research shows that deep adversarial domain adaptation models, which include symmetric and asymmetric architectures, can achieve remarkable improvements in performance. However, the former have poor generalization ability, whereas the latter are very hard to train. In this paper, we propose a novel adversarial domain adaptation method named Adversarial Residual Transform Networks (ARTNs) to improve generalization ability; it directly transforms source features into the space of target features. In this model, residual connections are used to share features and the adversarial loss is reconstructed, making the model more generalizable and easier to train. Moreover, a regularization term is added to the loss function to alleviate the vanishing gradient problem, which stabilizes the training process. Experimental results on the Amazon review dataset, digit datasets, and the Office-31 image dataset show that the proposed ARTN method greatly outperforms state-of-the-art methods.
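The abstract says ARTN maps source features directly into the target feature space through residual connections and trains adversarially. The sketch below shows that idea in its simplest form, `f + T(f)`, together with a standard binary cross-entropy domain discriminator loss.

Several details are hypothetical:

- the one-layer `T(f) = relu(W f + b)`;
- the discriminator scores;
- the use of plain BCE.

The paper's reconstructed adversarial loss and its regularization term are not reproduced here.

```python
import math


def relu(v):
    return [max(0.0, x) for x in v]


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def residual_transform(f_src, W, b):
    """Map source features toward the target space as f + T(f).

    T(f) = relu(W f + b) is a hypothetical one-layer transform; the skip
    connection passes the source features through unchanged and adds T(f).
    """
    delta = relu([y + bi for y, bi in zip(matvec(W, f_src), b)])
    return [x + d for x, d in zip(f_src, delta)]


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x)) = -softplus(-x)."""
    return -(max(-x, 0.0) + math.log1p(math.exp(-abs(x))))


def discriminator_loss(scores_target, scores_transformed):
    """BCE with target features labelled 1 and transformed source features labelled 0."""
    real = -sum(log_sigmoid(s) for s in scores_target) / len(scores_target)
    fake = -sum(log_sigmoid(-s) for s in scores_transformed) / len(scores_transformed)
    return real + fake
```

With `W` and `b` set to zero, `residual_transform` returns the source features unchanged. The transform therefore only has to learn the residual shift toward the target domain rather than a full mapping.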
