Unsupervised Domain Adaptation with Adversarial Residual Transform Networks


Apr 25, 2018

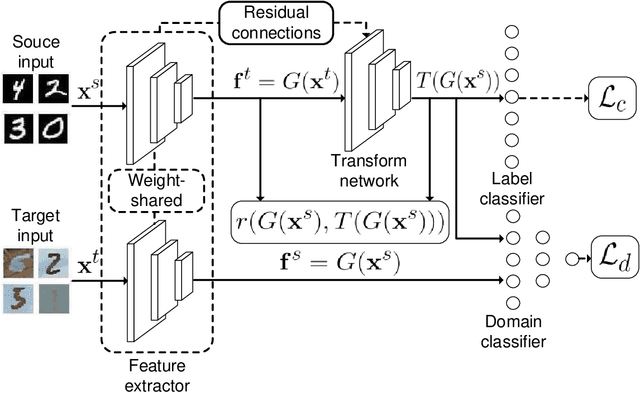

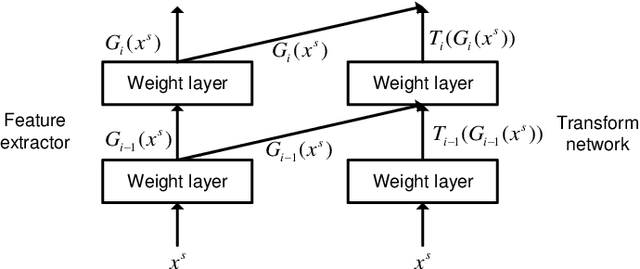

Domain adaptation is widely used in learning problems that lack labels. Recent research shows that deep adversarial domain adaptation models, which include symmetric and asymmetric architectures, can markedly improve performance. However, the former generalize poorly, whereas the latter are very hard to train. In this paper, we propose a novel adversarial domain adaptation method named Adversarial Residual Transform Networks (ARTNs), which improves generalization by directly transforming the source features into the space of target features. In this model, residual connections are used to share features and the adversarial loss is reconstructed, making the model more general and easier to train. Moreover, regularization is added to the loss function to alleviate a vanishing gradient problem, which stabilizes the training process. A series of experiments on the Amazon review dataset, digits datasets, and the Office-31 image dataset shows that the proposed ARTN method greatly outperforms state-of-the-art methods.
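To make the idea of a residual transform concrete, the following is a minimal sketch in plain Python. It is not the ARTN architecture: the abstract does not give layer sizes, activations, or the form of the loss, so the single ReLU-activated affine layer and the names `linear` and `residual_transform` are assumptions chosen for illustration. It shows only the general pattern of a residual connection, where the output is the input feature plus a learned correction, so the transform starts out as the identity when the correction is zero.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def linear(x: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Affine map: one output per row of `weights`."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def residual_transform(x: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Return x + F(x), with F a ReLU-activated affine map.

    The choice of F is hypothetical; the abstract does not specify it.
    """
    correction = [max(0.0, h) for h in linear(x, weights, bias)]
    return [xi + ci for xi, ci in zip(x, correction)]


# A toy 2-dimensional "source feature".
source_feature = [1.0, -2.0]

# With zero weights the correction vanishes and the feature passes through.
zero_w = [[0.0, 0.0], [0.0, 0.0]]
zero_b = [0.0, 0.0]
identity_out = residual_transform(source_feature, zero_w, zero_b)

# With non-zero weights the feature is shifted by the learned correction.
shift_w = [[0.5, 0.0], [0.0, 0.5]]
shifted_out = residual_transform(source_feature, shift_w, zero_b)
```

In a full adversarial setup, the weights of such a transform would be trained against a domain discriminator; that part, along with the regularization term mentioned in the abstract, is omitted here because the abstract does not describe it in enough detail.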
