Yunxiang Fu

Scaling Continual Learning with Bi-Level Routing Mixture-of-Experts

Feb 03, 2026

Abstract: Continual learning, especially class-incremental learning (CIL), on the basis of a pre-trained model (PTM) has garnered substantial research interest in recent years. However, how to effectively learn both discriminative and comprehensive feature representations while maintaining stability and plasticity over very long task sequences remains an open problem. We propose CaRE, a scalable Continual Learner with efficient Bi-Level Routing Mixture-of-Experts (BR-MoE). The core idea of BR-MoE is a bi-level routing mechanism: a router selection stage that dynamically activates relevant task-specific routers, followed by an expert routing phase that dynamically activates and aggregates experts, aiming to inject discriminative and comprehensive representations into every intermediate network layer. In addition, we introduce a challenging evaluation protocol for comprehensively assessing CIL methods across very long task sequences spanning hundreds of tasks. Extensive experiments show that CaRE achieves leading performance across a variety of datasets and task settings, including commonly used CIL datasets with classical CIL settings (e.g., 5-20 tasks). To the best of our knowledge, CaRE is the first continual learner that scales to very long task sequences (ranging from 100 to over 300 non-overlapping tasks), while outperforming all baselines by a large margin on such task sequences. Code will be publicly released at https://github.com/LMMMEng/CaRE.git.
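The two routing stages described in this abstract can be illustrated with a minimal sketch. This is not the CaRE implementation: the dot-product scoring, the top-k selection, the gate renormalization, and every name here (`bi_level_route`, `router_keys`, `router_weights`, `k_routers`, `k_experts`) are assumptions made purely for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def top_k(weights, k):
    """Indices of the k largest weights, highest first."""
    order = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    return order[:k]

def bi_level_route(x, router_keys, router_weights, experts,
                   k_routers=1, k_experts=2):
    """Hypothetical bi-level routing for one feature vector x.

    router_keys[r]       : key vector used to score task-specific router r
    router_weights[r][e] : vector router r uses to score expert e
    experts[e]           : callable mapping a vector to a vector
    """
    # Level 1 (router selection): activate the most relevant routers.
    router_probs = softmax([dot(x, key) for key in router_keys])
    chosen_routers = top_k(router_probs, k_routers)
    router_norm = sum(router_probs[r] for r in chosen_routers)

    out = [0.0] * len(x)
    for r in chosen_routers:
        # Level 2 (expert routing): each active router picks its experts.
        expert_probs = softmax([dot(x, w) for w in router_weights[r]])
        chosen_experts = top_k(expert_probs, k_experts)
        expert_norm = sum(expert_probs[e] for e in chosen_experts)
        for e in chosen_experts:
            # Gate = renormalized router weight times renormalized expert weight.
            gate = (router_probs[r] / router_norm) * (expert_probs[e] / expert_norm)
            y = experts[e](x)
            out = [o + gate * v for o, v in zip(out, y)]
    return out, chosen_routers
```

In this sketch the gates over all selected (router, expert) pairs sum to one, so the output is a convex combination of expert outputs. How the actual method scores, selects, and aggregates is not specified in the abstract.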

A2Mamba: Attention-augmented State Space Models for Visual Recognition

Jul 22, 2025

Abstract: Transformers and Mamba, initially invented for natural language processing, have inspired backbone architectures for visual recognition. Recent studies integrated Local Attention Transformers with Mamba to capture both local details and global contexts. Despite competitive performance, these methods are limited to simple stacking of Transformer and Mamba layers without any interaction mechanism between them. Thus, deep integration between Transformer and Mamba layers remains an open problem. We address this problem by proposing A2Mamba, a powerful Transformer-Mamba hybrid network architecture, featuring a new token mixer termed Multi-scale Attention-augmented State Space Model (MASS), where multi-scale attention maps are integrated into an attention-augmented SSM (A2SSM). A key step of A2SSM performs a variant of cross-attention by spatially aggregating the SSM's hidden states using the multi-scale attention maps, which enhances spatial dependencies pertaining to a two-dimensional space while improving the dynamic modeling capabilities of SSMs. Our A2Mamba outperforms all previous ConvNet-, Transformer-, and Mamba-based architectures in visual recognition tasks. For instance, A2Mamba-L achieves an impressive 86.1% top-1 accuracy on ImageNet-1K. In semantic segmentation, A2Mamba-B exceeds CAFormer-S36 by 2.5% in mIoU, while exhibiting higher efficiency. In object detection and instance segmentation with Cascade Mask R-CNN, A2Mamba-S surpasses MambaVision-B by 1.2%/0.9% in AP^b/AP^m, while having 40% fewer parameters. Code is publicly available at https://github.com/LMMMEng/A2Mamba.

SegMAN: Omni-scale Context Modeling with State Space Models and Local Attention for Semantic Segmentation

Dec 16, 2024

Abstract: High-quality semantic segmentation relies on three key capabilities: global context modeling, local detail encoding, and multi-scale feature extraction. However, recent methods struggle to possess all these capabilities simultaneously. Hence, we aim to empower segmentation networks to simultaneously carry out efficient global context modeling, high-quality local detail encoding, and rich multi-scale feature representation for varying input resolutions. In this paper, we introduce SegMAN, a novel linear-time model comprising a hybrid feature encoder dubbed SegMAN Encoder, and a decoder based on state space models. Specifically, the SegMAN Encoder synergistically integrates sliding local attention with dynamic state space models, enabling highly efficient global context modeling while preserving fine-grained local details. Meanwhile, the MMSCopE module in our decoder enhances multi-scale context feature extraction and adaptively scales with the input resolution. We comprehensively evaluate SegMAN on three challenging datasets: ADE20K, Cityscapes, and COCO-Stuff. For instance, SegMAN-B achieves 52.6% mIoU on ADE20K, outperforming SegNeXt-L by 1.6% mIoU while reducing computational complexity by over 15% GFLOPs. On Cityscapes, SegMAN-B attains 83.8% mIoU, surpassing SegFormer-B3 by 2.1% mIoU with approximately half the GFLOPs. Similarly, SegMAN-B improves upon VWFormer-B3 by 1.6% mIoU with lower GFLOPs on the COCO-Stuff dataset. Our code is available at https://github.com/yunxiangfu2001/SegMAN.

SparX: A Sparse Cross-Layer Connection Mechanism for Hierarchical Vision Mamba and Transformer Networks

Sep 15, 2024

Abstract: Due to the capability of dynamic state space models (SSMs) in capturing long-range dependencies with near-linear computational complexity, Mamba has shown notable performance in NLP tasks. This has inspired the rapid development of Mamba-based vision models, which have achieved promising results in visual recognition tasks. However, such models are not capable of distilling features across layers through feature aggregation, interaction, and selection. Moreover, existing cross-layer feature aggregation methods designed for CNNs or ViTs are not practical in Mamba-based models due to high computational costs. Therefore, this paper aims to introduce an efficient cross-layer feature aggregation mechanism for Mamba-based vision backbone networks. Inspired by the Retinal Ganglion Cells (RGCs) in the human visual system, we propose a new sparse cross-layer connection mechanism termed SparX to effectively improve cross-layer feature interaction and reuse. Specifically, we build two different types of network layers: ganglion layers and normal layers. The former has higher connectivity and complexity, enabling multi-layer feature aggregation and interaction in an input-dependent manner. In contrast, the latter has lower connectivity and complexity. By interleaving these two types of layers, we design a new vision backbone network with sparsely cross-connected layers, achieving an excellent trade-off among model size, computational cost, memory cost, and accuracy in comparison to its counterparts. For instance, with fewer parameters, SparX-Mamba-T improves the top-1 accuracy of VMamba-T from 82.5% to 83.5%, while SparX-Swin-T achieves a 1.3% increase in top-1 accuracy compared to Swin-T. Extensive experimental results demonstrate that our new connection mechanism possesses both superior performance and generalization capabilities on various vision tasks.

LaMamba-Diff: Linear-Time High-Fidelity Diffusion Models Based on Local Attention and Mamba

Aug 05, 2024

Abstract: Recent Transformer-based diffusion models have shown remarkable performance, largely attributed to the ability of the self-attention mechanism to accurately capture both global and local contexts by computing all-pair interactions among input tokens. However, their quadratic complexity poses significant computational challenges for long-sequence inputs. Conversely, a recent state space model called Mamba offers linear complexity by compressing a filtered global context into a hidden state. Despite its efficiency, compression inevitably leads to information loss of fine-grained local dependencies among tokens, which are crucial for effective visual generative modeling. Motivated by these observations, we introduce Local Attentional Mamba (LaMamba) blocks that combine the strengths of self-attention and Mamba, capturing both global contexts and local details with linear complexity. Leveraging the efficient U-Net architecture, our model exhibits exceptional scalability and surpasses the performance of DiT across various model scales on ImageNet at 256x256 resolution, all while utilizing substantially fewer GFLOPs and a comparable number of parameters. Compared to state-of-the-art diffusion models on ImageNet 256x256 and 512x512, our largest model presents notable advantages, such as a reduction of up to 62% GFLOPs compared to DiT-XL/2, while achieving superior performance with comparable or fewer parameters.
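The quadratic-versus-linear contrast in this abstract can be made concrete with a small counting sketch. It assumes non-overlapping attention windows of a fixed size, which is an illustrative choice and not necessarily the windowing used in LaMamba; both function names are hypothetical.

```python
def full_attention_pairs(num_tokens):
    """Token pairs scored by all-pair self-attention: grows quadratically."""
    return num_tokens * num_tokens

def local_attention_pairs(num_tokens, window):
    """Token pairs scored when attention is restricted to non-overlapping
    windows of `window` tokens (assumed layout): grows linearly in num_tokens."""
    if window <= 0 or num_tokens % window != 0:
        raise ValueError("window must be positive and divide num_tokens")
    num_windows = num_tokens // window
    return num_windows * window * window

if __name__ == "__main__":
    for n in (1024, 2048, 4096):
        print(n, full_attention_pairs(n), local_attention_pairs(n, 16))
```

With a fixed window, doubling the number of tokens doubles the local count but quadruples the all-pair count, which is the gap the abstract refers to.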

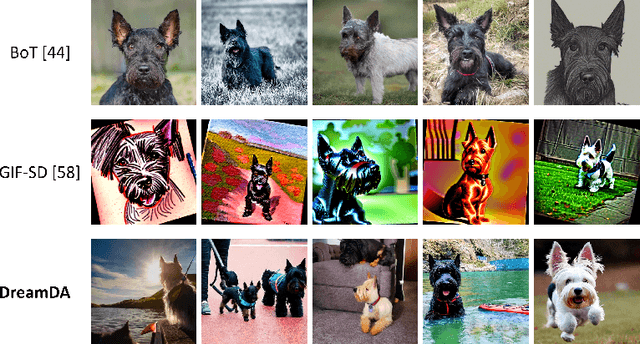

DreamDA: Generative Data Augmentation with Diffusion Models

Mar 19, 2024

Abstract: The acquisition of large-scale, high-quality data is a resource-intensive and time-consuming endeavor. Compared to conventional Data Augmentation (DA) techniques (e.g., cropping and rotation), exploiting prevailing diffusion models for data generation has received scant attention in classification tasks. Existing generative DA methods either inadequately bridge the domain gap between real-world and synthesized images, or inherently suffer from a lack of diversity. To solve these issues, this paper proposes a new classification-oriented framework DreamDA, which enables data synthesis and label generation by way of diffusion models. DreamDA generates diverse samples that adhere to the original data distribution by considering training images in the original data as seeds and perturbing their reverse diffusion process. In addition, since the labels of the generated data may not align with the labels of their corresponding seed images, we introduce a self-training paradigm for generating pseudo labels and training classifiers using the synthesized data. Extensive experiments across four tasks and five datasets demonstrate consistent improvements over strong baselines, revealing the efficacy of DreamDA in synthesizing high-quality and diverse images with accurate labels. Our code will be available at https://github.com/yunxiangfu2001/DreamDA.
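The pseudo-labeling step mentioned in this abstract can be sketched generically as follows. This is a common self-training pattern and not necessarily the DreamDA procedure: the confidence threshold, the `predict_proba` callable, and the function name `pseudo_label` are all assumptions introduced for illustration.

```python
def pseudo_label(samples, predict_proba, threshold=0.8):
    """Assign pseudo labels to synthesized samples.

    samples       : iterable of synthesized inputs
    predict_proba : hypothetical callable returning a list of class
                    probabilities for one sample
    threshold     : assumed confidence cutoff; samples whose top class
                    probability falls below it are discarded
    """
    kept = []
    for sample in samples:
        probs = predict_proba(sample)
        # The predicted class is the one with the highest probability.
        label = max(range(len(probs)), key=lambda c: probs[c])
        if probs[label] >= threshold:
            kept.append((sample, label))
    return kept
```

The retained `(sample, label)` pairs would then be mixed with real data to train the next classifier. Whether a hard threshold is used at all is not stated in the abstract.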

Protein Representation Learning via Knowledge Enhanced Primary Structure Modeling

Feb 15, 2023

Abstract: Protein representation learning has primarily benefited from the remarkable development of language models (LMs). Accordingly, pre-trained protein models also suffer from a problem in LMs: a lack of factual knowledge. The recent solution models the relationships between protein and associated knowledge terms as the knowledge encoding objective. However, it fails to explore the relationships at a more granular level, i.e., the token level. To mitigate this, we propose Knowledge-exploited Auto-encoder for Protein (KeAP), which performs token-level knowledge graph exploration for protein representation learning. In practice, non-masked amino acids iteratively query the associated knowledge tokens to extract and integrate helpful information for restoring masked amino acids via attention. We show that KeAP can consistently outperform the previous counterpart on 9 representative downstream applications, sometimes surpassing it by large margins. These results suggest that KeAP provides an alternative yet effective way to perform knowledge enhanced protein representation learning.
