Yunfeng Lu

SifterNet: A Generalized and Model-Agnostic Trigger Purification Approach

May 20, 2025

Abstract: Aiming to resist backdoor attacks on convolutional neural networks and vision Transformer-based large models, this paper proposes a generalized and model-agnostic trigger-purification approach that draws on the classic Ising model. To date, existing trigger detection/removal studies usually require detailed knowledge of the target model in advance, access to a large number of clean samples, or even authorization to retrain the model. This is highly inconvenient in practical applications, especially when the target model is inaccessible. An ideal countermeasure ought to eliminate the implanted trigger regardless of the target model. To this end, we propose SifterNet, a lightweight, black-box defense that leverages the memorization-association functionality of the Hopfield network to effectively purify the triggers in input samples. The main novelty of our approach lies in introducing the ideas of the Ising model. Extensive experiments validate the effectiveness of our approach in terms of proper trigger purification and high accuracy, and compared with state-of-the-art baselines on several commonly used datasets, SifterNet achieves significantly superior performance.
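The memorization-association idea can be illustrated with a textbook Hopfield network, whose energy has the same form as an Ising Hamiltonian with couplings w_ij. The sketch below is a minimal, pure-Python illustration under our own assumptions: binary ±1 patterns, Hebbian storage, asynchronous sign updates, and a flipped two-pixel patch standing in for a trigger. The function names are hypothetical, and this is not SifterNet's actual architecture.

```python
import random

def train_hebbian(patterns):
    """Hebbian storage: W = (1/P) * sum_p x_p x_p^T with a zero diagonal."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / len(patterns)
    return w

def energy(w, s):
    """Ising-style energy E(s) = -1/2 * sum_ij w_ij s_i s_j (no external field)."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))

def recall(w, probe, sweeps=10, seed=0):
    """Asynchronous updates s_i <- sign(sum_j w_ij s_j) until no spin changes."""
    rng = random.Random(seed)
    s = list(probe)
    n = len(s)
    for _ in range(sweeps):
        changed = False
        order = list(range(n))
        rng.shuffle(order)  # random update order, as in classic asynchronous Hopfield dynamics
        for i in order:
            h = sum(w[i][j] * s[j] for j in range(n))
            new = 1 if h >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break  # reached a fixed point (a local energy minimum)
    return s

# Hypothetical toy data: two orthogonal 16-pixel "clean" patterns are memorized.
clean = [1] * 8 + [-1] * 8
other = [1, -1] * 8
w = train_hebbian([clean, other])

probe = list(clean)
probe[14] = probe[15] = 1  # flip a 2-pixel patch, standing in for an implanted trigger
purified = recall(w, probe)
print(purified == clean)                       # → True
print(energy(w, probe), energy(w, purified))   # → -28.0 -56.0
```

The corrupted input settles into the nearest stored pattern because each update can only lower the energy; a real purifier would need far larger capacity than this toy network.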

Contrastive Prompt Learning-based Code Search based on Interaction Matrix

Oct 10, 2023

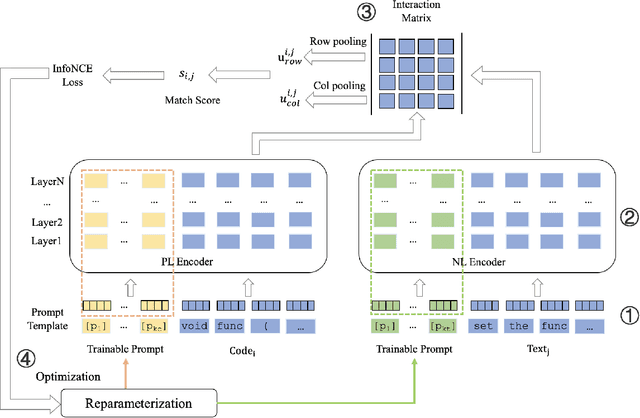

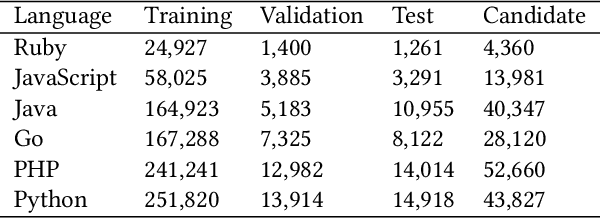

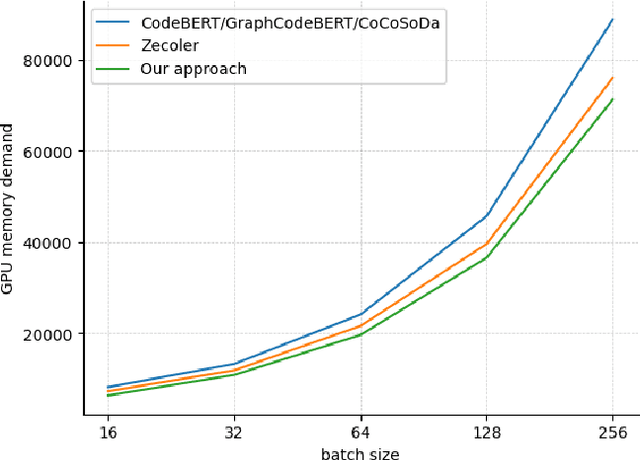

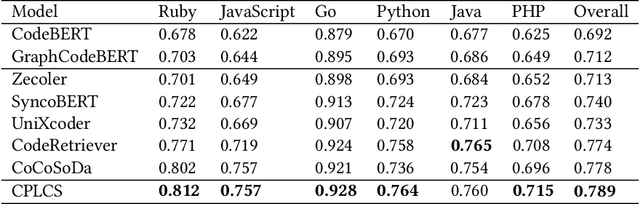

Abstract: Code search aims to retrieve the code snippet that best matches a given natural-language query. Recently, many code pre-training approaches have demonstrated impressive performance on code search. However, existing code search methods still suffer from two performance constraints: inadequate semantic representation, and the semantic gap between natural language (NL) and programming language (PL). In this paper, we propose CPLCS, a contrastive prompt learning-based code search method built on a cross-modal interaction mechanism. CPLCS comprises: (1) PL-NL contrastive learning, which learns the semantic matching relationship between PL and NL representations; (2) a prompt learning design for a dual-encoder structure that can alleviate the problem of inadequate semantic representation; and (3) a cross-modal interaction mechanism that enhances the fine-grained mapping between NL and PL. We conduct extensive experiments to evaluate the effectiveness of our approach on a real-world dataset covering six programming languages. The results demonstrate that our approach improves both semantic representation quality and the mapping ability between PL and NL.
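The abstract does not spell out the contrastive objective. A common way to learn a PL-NL matching relationship with a dual encoder is an InfoNCE loss with in-batch negatives, sketched below under stated assumptions: embeddings are already computed, the temperature of 0.05 is an assumed value, and the loss is averaged over both directions (NL to PL and PL to NL). This is a generic illustration, not CPLCS's actual loss, and the function names are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def one_way_nce(queries, keys, temperature):
    """Mean cross-entropy where query i's positive is key i; other keys in the batch are negatives."""
    n = len(queries)
    total = 0.0
    for i in range(n):
        logits = [cosine(queries[i], keys[j]) / temperature for j in range(n)]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_sum_exp - logits[i]
    return total / n

def info_nce(nl_embs, pl_embs, temperature=0.05):
    """Symmetric InfoNCE: average of the NL->PL and PL->NL directions (assumed temperature)."""
    return 0.5 * (one_way_nce(nl_embs, pl_embs, temperature)
                  + one_way_nce(pl_embs, nl_embs, temperature))

# Made-up 2-D embeddings: NL query i should match code snippet i.
nl = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[0.9, 0.1], [0.1, 0.9]]
misaligned = [aligned[1], aligned[0]]
print(info_nce(nl, aligned) < info_nce(nl, misaligned))  # → True
```

Minimizing this loss pulls each query toward its paired snippet and away from the other snippets in the batch, which is the "semantic matching relationship" idea in its simplest form.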

Predicting Li-ion Battery Cycle Life with LSTM RNN

Jul 08, 2022

Abstract: Efficient and accurate remaining-useful-life prediction is a key factor in the reliable and safe use of lithium-ion batteries. This work trains a long short-term memory (LSTM) recurrent neural network to learn from sequential data of discharge capacities at various cycles and voltages, and to act as a cycle-life predictor for battery cells cycled under different conditions. Using experimental data from the first 60 to 80 cycles, our model achieves promising prediction accuracy on test sets of around 80 samples.
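A minimal LSTM forward pass that maps a sequence of per-cycle feature vectors (here, discharge capacities sampled at several voltages) to a single scalar is sketched below. The layer sizes, initialization, synthetic capacity values, and the `TinyLSTM` name are hypothetical. The weights are untrained, so the output only becomes a cycle-life estimate after fitting, for example with a mean-squared-error loss in a deep-learning framework.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

class TinyLSTM:
    """Hypothetical single-layer LSTM plus a linear head on the final hidden state."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)

        def mat(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.hidden_size = hidden_size
        # One (W, U, b) triple per gate: input (i), forget (f), candidate (g), output (o).
        self.params = {gate: (mat(hidden_size, input_size), mat(hidden_size, hidden_size),
                              [0.0] * hidden_size) for gate in "ifgo"}
        self.head = [rng.uniform(-0.1, 0.1) for _ in range(hidden_size)]
        self.head_bias = 0.0

    def step(self, x, h, c):
        """One time step: gate pre-activations, then cell and hidden state updates."""
        pre = {name: [wx + uh + b for wx, uh, b in zip(matvec(w, x), matvec(u, h), bias)]
               for name, (w, u, bias) in self.params.items()}
        i = [sigmoid(z) for z in pre["i"]]
        f = [sigmoid(z) for z in pre["f"]]
        g = [math.tanh(z) for z in pre["g"]]
        o = [sigmoid(z) for z in pre["o"]]
        c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]  # c_t = f*c_{t-1} + i*g
        h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]               # h_t = o*tanh(c_t)
        return h, c

    def forward(self, seq):
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in seq:
            h, c = self.step(x, h, c)
        return sum(wk * hk for wk, hk in zip(self.head, h)) + self.head_bias

# Synthetic, made-up data: 80 cycles x 10 voltage points of slowly fading capacity (Ah).
seq = [[1.1 - 0.0005 * cycle - 0.01 * v for v in range(10)] for cycle in range(80)]
model = TinyLSTM(input_size=10, hidden_size=8)
pred = model.forward(seq)
print(pred)
```

The forget gate is what lets the model carry information about early-cycle capacity fade across many time steps, which is why an LSTM is a natural fit for a predictor that only sees the first cycles.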
