Yun-Chien Cheng

Active Learning-Driven Lightweight YOLOv9: Enhancing Efficiency in Smart Agriculture

Jan 30, 2026

Abstract: This study addresses the demand for real-time detection of tomatoes and tomato flowers by agricultural robots deployed on edge devices in greenhouse environments. Under practical imaging conditions, object detection systems often face challenges such as large scale variations caused by varying camera distances, severe occlusion from plant structures, and highly imbalanced class distributions. These factors make it difficult for conventional object detection approaches that rely on fully annotated datasets to achieve high detection accuracy and deployment efficiency simultaneously. To overcome these limitations, this research proposes an active learning-driven lightweight object detection framework that integrates data analysis, model design, and training strategy. First, the size distribution of objects in raw agricultural images is analyzed to redefine an operational target range, thereby improving learning stability under real-world conditions. Second, an efficient feature extraction module is incorporated to reduce computational cost, while a lightweight attention mechanism is introduced to enhance feature representation under multi-scale and occluded scenarios. Finally, an active learning strategy is employed to iteratively select high-information samples for annotation and training under a limited labeling budget, effectively improving the recognition performance of minority and small-object categories. Experimental results demonstrate that, while maintaining a low parameter count and inference cost suitable for edge-device deployment, the proposed method effectively improves the detection performance of tomatoes and tomato flowers in raw images. Under limited annotation conditions, the framework achieves an overall detection accuracy of 67.8% mAP, validating its practicality and feasibility for intelligent agricultural applications.
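The abstract does not say which acquisition function is used to pick high-information samples. As a minimal sketch only, assuming predictive entropy as the informativeness score and a fixed per-round labeling budget (the function names, the image ids, and the max-over-boxes aggregation are all hypothetical), one selection round could look like this:

```python
import math
from typing import Dict, List


def predictive_entropy(probs: List[float]) -> float:
    """Shannon entropy (in nats) of one class-probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def select_for_annotation(
    unlabeled_scores: Dict[str, List[List[float]]], budget: int
) -> List[str]:
    """Pick the `budget` images whose detections look most uncertain.

    `unlabeled_scores` maps a hypothetical image id to the class-probability
    vectors of its predicted boxes. Image-level uncertainty is taken as the
    maximum per-box entropy, an illustrative choice and not the paper's.
    """
    image_uncertainty = {
        image_id: max((predictive_entropy(p) for p in boxes), default=0.0)
        for image_id, boxes in unlabeled_scores.items()
    }
    ranked = sorted(image_uncertainty, key=image_uncertainty.get, reverse=True)
    return ranked[:budget]


# Toy pool: two classes per box (for example tomato vs. tomato flower).
pool = {
    "img_a": [[0.98, 0.02]],              # confident detection
    "img_b": [[0.55, 0.45], [0.9, 0.1]],  # contains one ambiguous box
    "img_c": [[0.7, 0.3]],
}
chosen = select_for_annotation(pool, budget=2)
```

In a full loop, the chosen images would be annotated, added to the training set, and the detector retrained before the next round.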

Towards Enhanced Classification of Abnormal Lung sound in Multi-breath: A Light Weight Multi-label and Multi-head Attention Classification Method

Jul 15, 2024

Abstract: This study aims to develop an auxiliary diagnostic system for classifying abnormal lung respiratory sounds, enhancing the accuracy of automatic abnormal breath sound classification through an innovative multi-label learning approach and a multi-head attention mechanism. To address class imbalance and the lack of diversity in existing respiratory sound datasets, our study employs a lightweight and highly accurate model that uses a two-dimensional label set to represent multiple respiratory sound characteristics. Our method achieved a 59.2% ICBHI score in the four-category task on the ICBHI2017 dataset, demonstrating its advantages in both model size and accuracy. This study not only improves the accuracy of automatic diagnosis of lung respiratory sound abnormalities but also opens new possibilities for clinical applications.
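For readers unfamiliar with the metric, the ICBHI score is commonly defined as the mean of sensitivity and specificity, where specificity is the fraction of normal cycles predicted as normal and sensitivity is the fraction of abnormal cycles (crackle, wheeze, or both) assigned to their exact class. The sketch below follows that common definition; the label strings and function name are assumptions, and the toy labels are not data from the paper.

```python
from typing import Sequence

NORMAL = "normal"  # assumed label string for the normal class


def icbhi_score(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Mean of sensitivity and specificity for the four-class ICBHI task."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    normal_total = sum(1 for t in y_true if t == NORMAL)
    abnormal_total = len(y_true) - normal_total
    if normal_total == 0 or abnormal_total == 0:
        raise ValueError("need at least one normal and one abnormal cycle")

    # Specificity: normal cycles correctly predicted as normal.
    normal_hits = sum(
        1 for t, p in zip(y_true, y_pred) if t == NORMAL and p == NORMAL
    )
    # Sensitivity: abnormal cycles assigned to their exact abnormal class.
    abnormal_hits = sum(
        1 for t, p in zip(y_true, y_pred) if t != NORMAL and p == t
    )
    specificity = normal_hits / normal_total
    sensitivity = abnormal_hits / abnormal_total
    return (sensitivity + specificity) / 2.0


truth = ["normal", "normal", "crackle", "wheeze", "both", "crackle"]
preds = ["normal", "crackle", "crackle", "normal", "both", "wheeze"]
score = icbhi_score(truth, preds)  # specificity 1/2, sensitivity 2/4
```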

Towards Enhanced Analysis of Lung Cancer Lesions in EBUS-TBNA -- A Semi-Supervised Video Object Detection Method

Apr 10, 2024

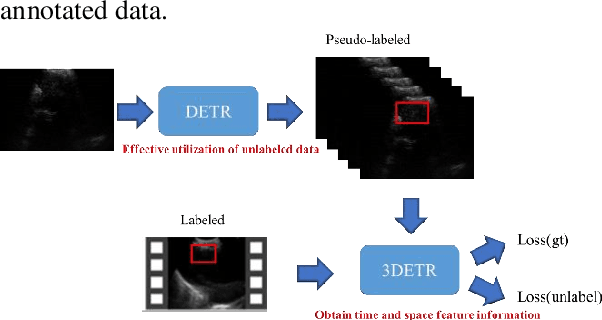

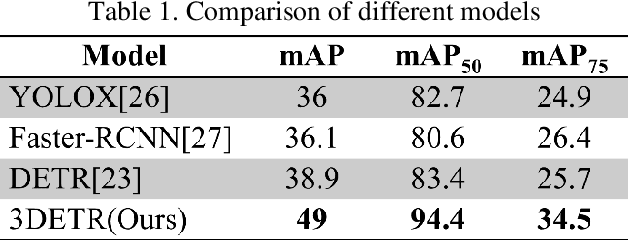

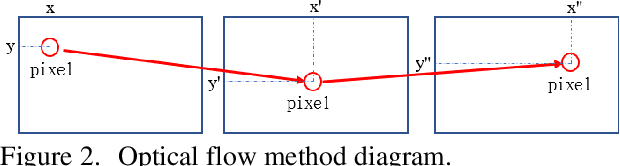

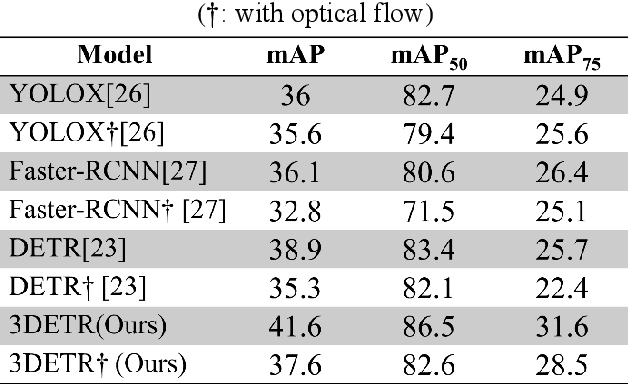

Abstract: This study aims to establish a computer-aided diagnostic system for lung lesions using bronchoscope endobronchial ultrasound (EBUS) to assist physicians in identifying lesion areas. During EBUS-transbronchial needle aspiration (EBUS-TBNA) procedures, physicians rely on grayscale ultrasound images to determine the location of lesions. However, these images often contain significant noise and can be influenced by surrounding tissues or blood vessels, making interpretation challenging. Previous research has not applied object detection models to EBUS-TBNA, and there has been no well-defined solution for annotating the EBUS-TBNA dataset. In related studies on ultrasound images, although models have been successful in capturing target regions for their respective tasks, their training and predictions have been based on two-dimensional images, limiting their ability to leverage temporal features for improved predictions. This study introduces a three-dimensional image-based object detection model. It uses an attention mechanism to capture temporal correlations and implements a filtering mechanism to select relevant information from previous frames. Subsequently, a teacher-student training approach is employed to optimize the model further by leveraging unlabeled data. To mitigate the impact of poor-quality pseudo-labels on the student model, we add a special Gaussian Mixture Model (GMM) to ensure the quality of pseudo-labels.
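The abstract does not describe how the GMM is configured. One plausible reading, shown here purely as an assumption, is to fit a two-component one-dimensional mixture to the teacher's confidence scores and keep only pseudo-labels that most likely belong to the higher-mean component. The EM routine, the variance floor, and the 0.5 posterior threshold below are illustrative choices, not the authors' settings.

```python
import math
from typing import List, Sequence, Tuple

# (weights, means, variances) of a two-component 1D mixture
Params = Tuple[List[float], List[float], List[float]]


def _normal_pdf(x: float, mean: float, var: float) -> float:
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def _responsibilities(x: float, params: Params) -> List[float]:
    """Posterior probability of each component given score x."""
    weights, means, variances = params
    joint = [w * _normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
    total = sum(joint)
    if total == 0.0:  # both densities underflowed; treat as undecided
        return [0.5, 0.5]
    return [j / total for j in joint]


def fit_gmm_1d(xs: Sequence[float], iters: int = 100, var_floor: float = 1e-3) -> Params:
    """Fit a two-component GMM with plain EM, initialised at min and max."""
    n = len(xs)
    mean_all = sum(xs) / n
    var_all = max(sum((x - mean_all) ** 2 for x in xs) / n, var_floor)
    params: Params = ([0.5, 0.5], [min(xs), max(xs)], [var_all, var_all])
    for _ in range(iters):
        resp = [_responsibilities(x, params) for x in xs]  # E-step
        weights, means, variances = [], [], []
        for k in range(2):  # M-step
            nk = max(sum(r[k] for r in resp), 1e-12)
            mean_k = sum(r[k] * x for r, x in zip(resp, xs)) / nk
            var_k = sum(r[k] * (x - mean_k) ** 2 for r, x in zip(resp, xs)) / nk
            weights.append(nk / n)
            means.append(mean_k)
            variances.append(max(var_k, var_floor))
        params = (weights, means, variances)
    return params


def keep_reliable(scores: Sequence[float], threshold: float = 0.5) -> List[float]:
    """Keep scores whose posterior for the high-confidence component exceeds threshold."""
    params = fit_gmm_1d(scores)
    high = max(range(2), key=lambda k: params[1][k])
    return [s for s in scores if _responsibilities(s, params)[high] > threshold]


# Hypothetical teacher confidences for eight pseudo-labelled boxes.
teacher_scores = [0.15, 0.2, 0.25, 0.3, 0.85, 0.9, 0.92, 0.95]
kept = keep_reliable(teacher_scores)
```

Compared with a fixed confidence cut-off, a mixture-based filter adapts the boundary to the score distribution of each batch, which may be the motivation for using a GMM here.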

Using Few-Shot Learning to Classify Primary Lung Cancer and Other Malignancy with Lung Metastasis in Cytological Imaging via Endobronchial Ultrasound Procedures

Apr 10, 2024

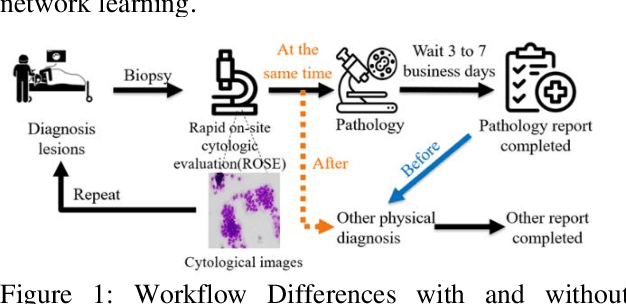

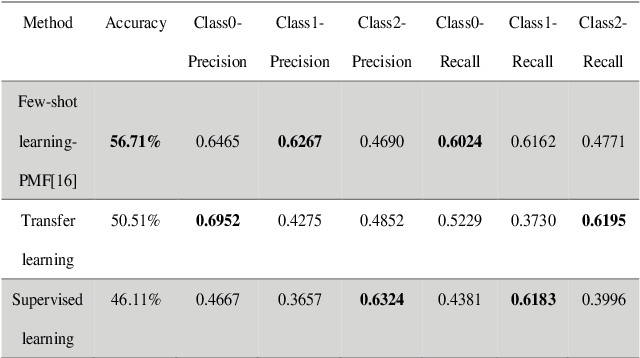

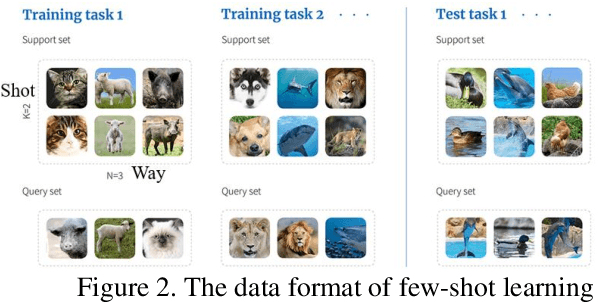

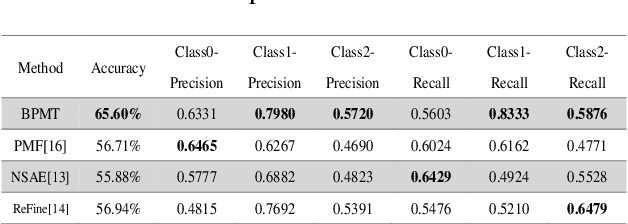

Abstract: This study aims to establish a computer-aided diagnosis system for endobronchial ultrasound (EBUS) surgery to assist physicians in the preliminary diagnosis of metastatic cancer. With such a system, examinations of other metastatic sites can be arranged immediately after EBUS surgery without waiting for reports, shortening the waiting time by more than half and allowing other cancers to be detected earlier so that treatment can be planned and started sooner. Unlike previous studies on cell image classification, which have abundant datasets for training, this study must classify effectively despite the limited amount of case data for lung metastatic cancer. Among classification methods for small datasets, few-shot learning (FSL) has become mainstream in recent years. With its ability to train on small datasets and its strong generalization capabilities, FSL shows potential for lung metastatic cell image classification. This study adopts few-shot learning, drawing on existing models to design a model architecture for classifying lung metastasis cell images. Batch Spectral Regularization (BSR) is incorporated into the loss function, and the Finetune method of PMF is modified. In testing, adding BSR and the modified Finetune method further increases the accuracy by 8.89% to 65.60%, outperforming other FSL methods. This study confirms that FSL is superior to supervised and transfer learning in classifying metastatic cancer and demonstrates that using BSR as a loss function and modifying Finetune can enhance the model's capabilities.

Using Spatio-Temporal Dual-Stream Network with Self-Supervised Learning for Lung Tumor Classification on Radial Probe Endobronchial Ultrasound Video

May 07, 2023

Abstract: The purpose of this study is to develop a computer-aided diagnosis system for classifying benign and malignant lung lesions and to assist physicians in real-time analysis of radial probe endobronchial ultrasound (EBUS) videos. During lung cancer biopsy, physicians use real-time ultrasound images to find suitable lesion locations for sampling. However, most of these images are difficult to classify and contain a lot of noise. Previous studies have employed 2D convolutional neural networks to effectively differentiate between benign and malignant lung lesions, but doctors still need to manually select good-quality images, which can result in additional labor costs. In addition, a 2D neural network cannot capture the temporal information of the ultrasound video, so it is difficult to model the relationship between the features of consecutive images. This study designs an automatic diagnosis system based on a 3D neural network, uses the SlowFast architecture as the backbone to fuse temporal and spatial features, and uses the SwAV method of contrastive learning to enhance the noise robustness of the model. The proposed method offers the following advantages: (1) using clinical ultrasound videos as model input, which reduces the need for physicians to select high-quality images; (2) classifying benign and malignant lung lesions with high accuracy, which can assist doctors in clinical diagnosis and reduce the time and risk of surgery; and (3) classifying well even in the presence of significant image noise. The AUC, accuracy, precision, recall, and specificity of our proposed method on the validation set reached 0.87, 83.87%, 86.96%, 90.91%, and 66.67%, respectively. The results verify the importance of incorporating temporal information and the effectiveness of contrastive learning for feature extraction.
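As a reminder of how the threshold-based metrics relate to one another, the sketch below computes them from binary confusion counts. The abstract does not report a confusion matrix; the counts used here (TP=20, FP=3, TN=6, FN=2) are hypothetical values chosen only because their ratios happen to reproduce the reported percentages. AUC is omitted because it requires the full set of prediction scores, not just counts.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Confusion:
    """Binary confusion counts with malignant as the positive class (assumed)."""
    tp: int
    fp: int
    tn: int
    fn: int


def binary_metrics(c: Confusion) -> Dict[str, float]:
    """Accuracy, precision, recall (sensitivity), and specificity as fractions."""
    total = c.tp + c.fp + c.tn + c.fn
    return {
        "accuracy": (c.tp + c.tn) / total,
        "precision": c.tp / (c.tp + c.fp),
        "recall": c.tp / (c.tp + c.fn),
        "specificity": c.tn / (c.tn + c.fp),
    }


# Hypothetical counts, not taken from the paper.
metrics = binary_metrics(Confusion(tp=20, fp=3, tn=6, fn=2))
percentages = {name: round(value * 100, 2) for name, value in metrics.items()}
```

The gap between recall and specificity in such a table indicates that false positives are relatively more frequent than false negatives.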

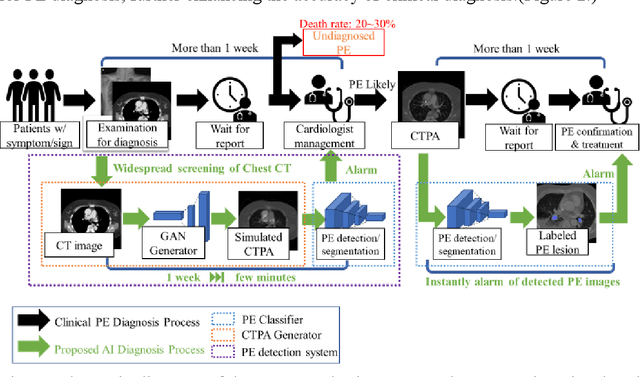

Detecting Pulmonary Embolism from Computed Tomography Using Convolutional Neural Network

Jun 03, 2022

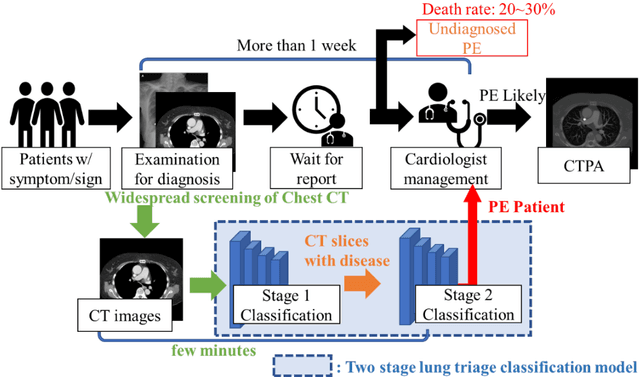

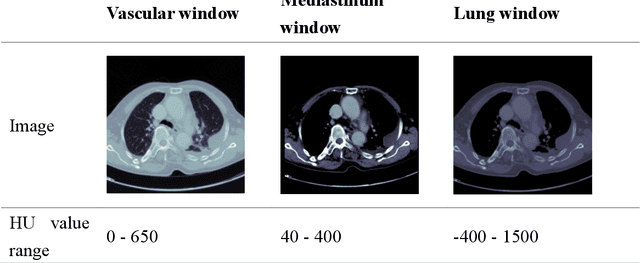

Abstract: The clinical symptoms of pulmonary embolism (PE) are very diverse and non-specific, which makes PE difficult to diagnose. In addition, pulmonary embolism has multiple triggers and is one of the major causes of vascular death. Detecting and treating it quickly can therefore significantly reduce the risk of death in hospitalized patients. In the detection process, the cost of computed tomography pulmonary angiography (CTPA) is high, and angiography requires the injection of contrast agents, which increases the risk of harm to the patient. Therefore, this study uses a deep learning approach, based on a convolutional neural network, to detect pulmonary embolism in all patients who undergo chest CT imaging. With the proposed pulmonary embolism detection system, we can detect the possibility of pulmonary embolism at the time of the patient's first CT image and schedule the CTPA test immediately, saving more than a week of CT image screening time and providing timely diagnosis and treatment to the patient.

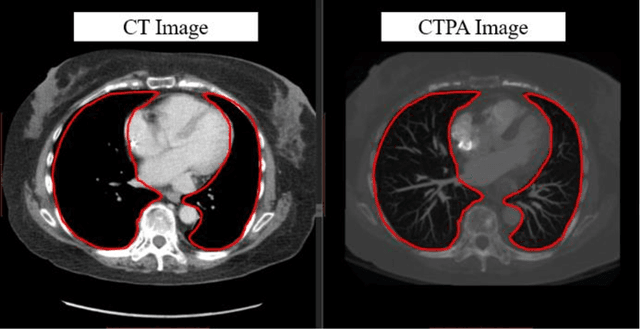

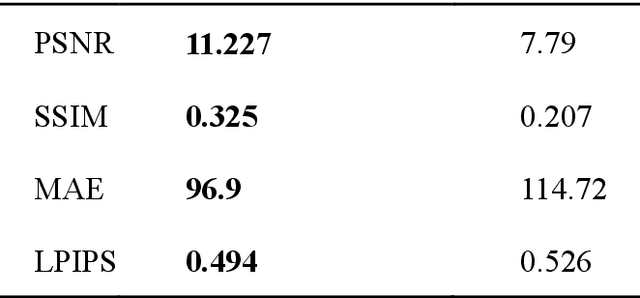

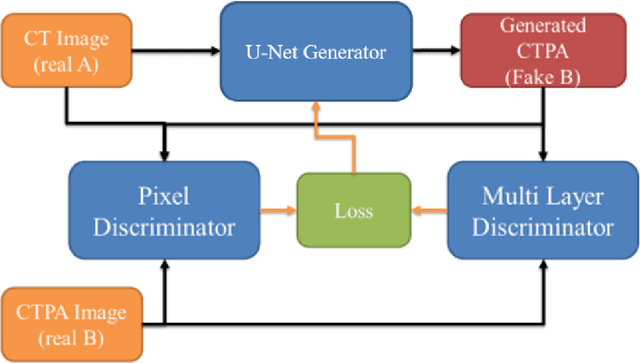

Computerized Tomography Pulmonary Angiography Image Simulation using Cycle Generative Adversarial Network from Chest CT imaging in Pulmonary Embolism Patients

May 17, 2022

Abstract: The purpose of this research is to develop a system that generates simulated computed tomography pulmonary angiography (CTPA) images for the clinical diagnosis of pulmonary embolism. CTPA is currently the gold-standard imaging method for identifying the signs of pulmonary embolism (PE), although performing CTPA is harmful to patients and expensive. Therefore, we aim to detect possible PE patients through CT images. The system simulates CTPA images with deep learning models to help identify PE patients' symptoms, providing physicians with another reference for determining PE patients. In this study, the simulated CTPA image generation system uses a generative adversarial network to enhance the features of pulmonary vessels in the CT images, strengthening the reference value of the images and providing a basis for hospitals to judge PE patients. We used the CT images of 22 patients from National Cheng Kung University Hospital and the corresponding CTPA images as the training data for the task of simulating CTPA images, and generated them using two sets of generative adversarial networks. This study is expected to propose a new approach to the clinical diagnosis of pulmonary embolism, in which a deep learning network assists in the complex screening process and physicians review the generated simulated CTPA images to assess whether a patient needs to undergo detailed CTPA testing, improving the speed of detection of pulmonary embolism and significantly reducing the number of undetected patients.

Feature-enhanced Adversarial Semi-supervised Semantic Segmentation Network for Pulmonary Embolism Annotation

Apr 08, 2022

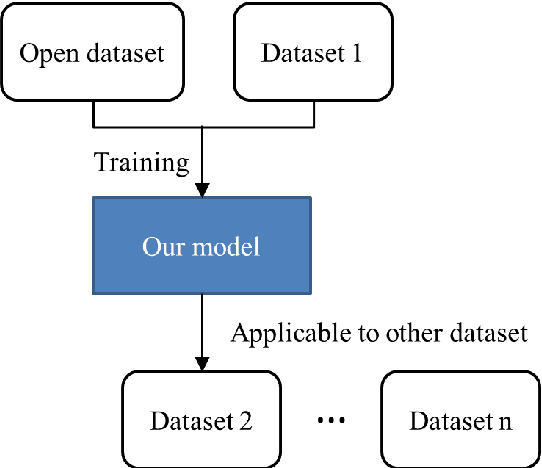

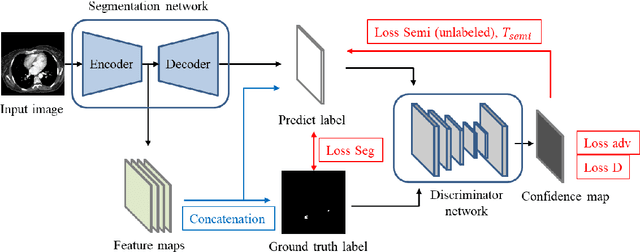

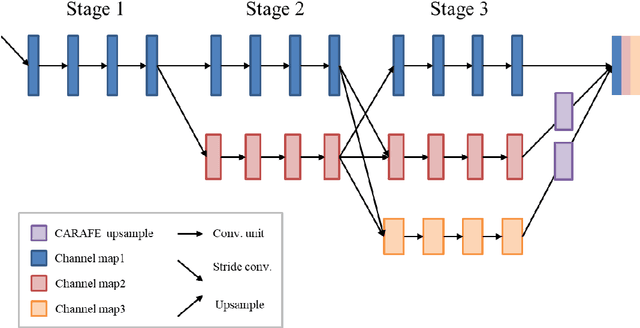

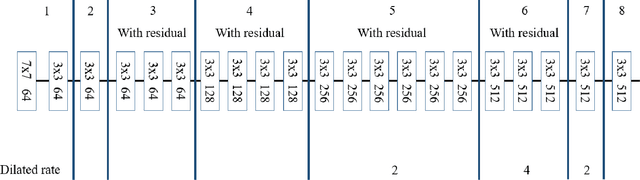

Abstract: This study established a feature-enhanced adversarial semi-supervised semantic segmentation model to automatically annotate pulmonary embolism lesion areas in computed tomography pulmonary angiogram (CTPA) images. In current studies, all PE CTPA image segmentation methods are trained by supervised learning. However, supervised learning models need to be retrained and the images need to be relabeled when the CTPA images come from different hospitals. This study proposes a semi-supervised learning method that makes the model applicable to different datasets by adding a small number of unlabeled images. By training the model with both labeled and unlabeled images, the accuracy on unlabeled images can be improved and the labeling cost can be reduced. Our semi-supervised segmentation model includes a segmentation network and a discriminator network. We added feature information generated by the encoder of the segmentation network to the discriminator so that it can learn the similarity between the predicted mask and the ground truth mask. This HRNet-based architecture can maintain a higher resolution for convolutional operations, so the prediction of small PE lesion areas can be improved. We used the labeled open-source dataset and the unlabeled National Cheng Kung University Hospital (NCKUH) (IRB number: B-ER-108-380) dataset to train the semi-supervised learning model, and the resulting mean intersection over union (mIOU), dice score, and sensitivity reached 0.3510, 0.4854, and 0.4253, respectively, on the NCKUH dataset. Then, we fine-tuned and tested the model with a small number of unlabeled PE CTPA images from the China Medical University Hospital (CMUH) (IRB number: CMUH110-REC3-173) dataset. Compared with the supervised model, our semi-supervised model improved the mIOU, dice score, and sensitivity from 0.2344, 0.3325, and 0.3151 to 0.3721, 0.5113, and 0.4967, respectively.
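The three segmentation metrics reported above can be illustrated on a single pair of binary masks. This is a minimal sketch with hypothetical toy masks; how the paper averages over images or classes to obtain mIOU is not stated in the abstract, and the empty-mask conventions below are assumptions.

```python
from typing import Dict, Sequence


def mask_overlap_metrics(
    pred: Sequence[Sequence[int]], target: Sequence[Sequence[int]]
) -> Dict[str, float]:
    """IoU, Dice, and sensitivity for one pair of binary (0/1) masks."""
    flat_pred = [v for row in pred for v in row]
    flat_true = [v for row in target for v in row]
    if len(flat_pred) != len(flat_true):
        raise ValueError("masks must have the same number of pixels")

    inter = sum(1 for p, t in zip(flat_pred, flat_true) if p and t)
    pred_area = sum(1 for p in flat_pred if p)
    true_area = sum(1 for t in flat_true if t)
    union = pred_area + true_area - inter

    if union == 0:
        # Both masks empty: treated as a perfect match (assumed convention).
        return {"iou": 1.0, "dice": 1.0, "sensitivity": 1.0}
    return {
        "iou": inter / union,
        "dice": 2 * inter / (pred_area + true_area),
        # No lesion pixels in the target: reported as 0.0 (assumed convention).
        "sensitivity": inter / true_area if true_area else 0.0,
    }


# Toy 2x3 masks: 1 marks a lesion pixel.
predicted = [[0, 1, 1], [0, 1, 0]]
ground_truth = [[0, 1, 0], [1, 1, 0]]
result = mask_overlap_metrics(predicted, ground_truth)
```

For a single mask pair, Dice equals 2·IoU / (1 + IoU); the identity does not generally hold for values averaged over many images, which is why reported mean IoU and mean Dice need not satisfy it.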

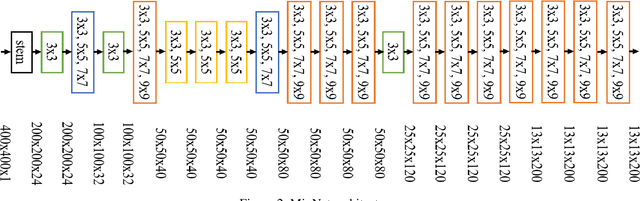

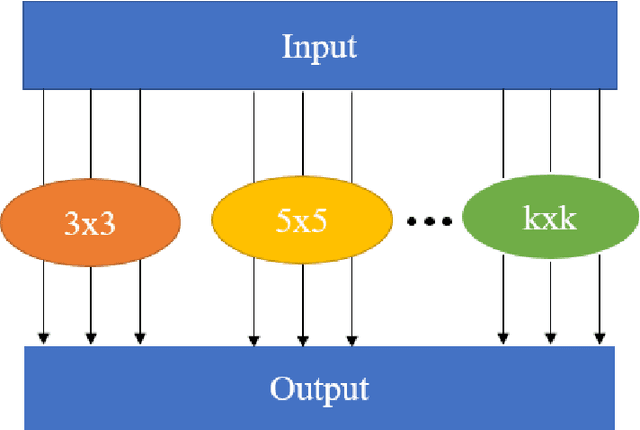

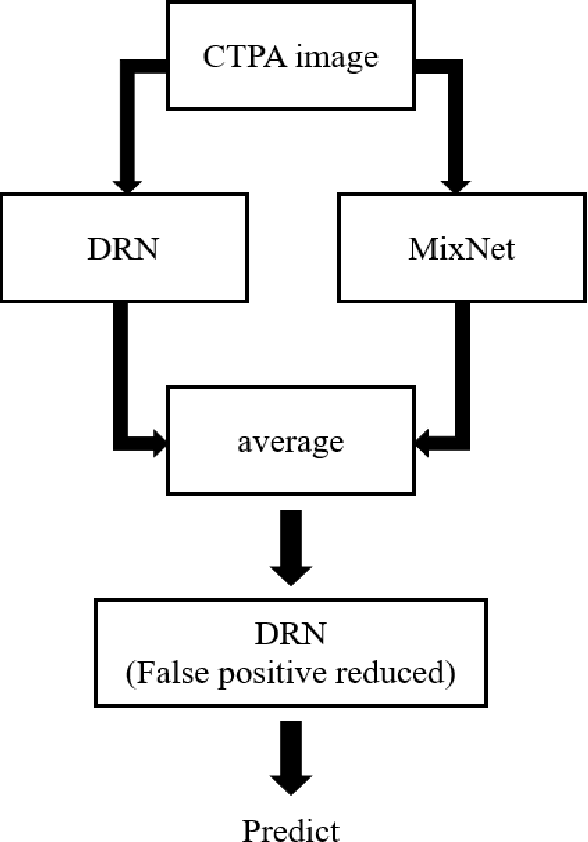

Convolutional Neural Network for Early Pulmonary Embolism Detection via Computed Tomography Pulmonary Angiography

Apr 07, 2022

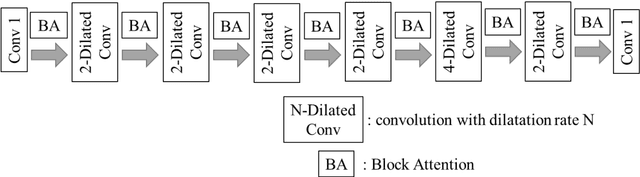

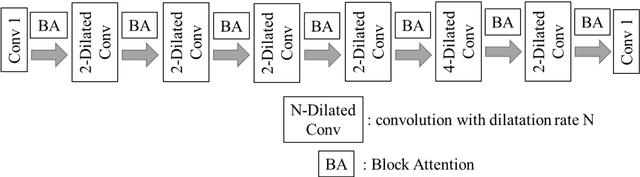

Abstract: This study was conducted to develop a computer-aided detection (CAD) system for triaging patients with pulmonary embolism (PE). The purpose of the system was to reduce the death rate during the waiting period. Computed tomography pulmonary angiography (CTPA) is used for PE diagnosis. Because CTPA reports require a radiologist to review the case and suggest further management, there is a waiting period during which patients may die. Our proposed CAD method was thus designed to triage patients with PE from those without PE. In contrast to related studies involving CAD systems that identify key PE lesion images to expedite PE diagnosis, our system comprises a novel classification-model ensemble for PE detection and a segmentation model for PE lesion labeling. The models were trained using data from National Cheng Kung University Hospital and open resources. The classification model yielded an area under the receiver operating characteristic curve of 0.73 (accuracy = 0.85), while the mean intersection over union was 0.689 for the segmentation model. The proposed CAD system can distinguish between patients with and without PE and automatically label PE lesions to expedite PE diagnosis.

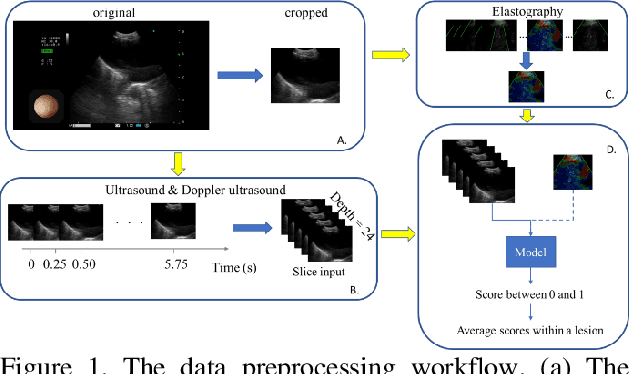

The interpretation of endobronchial ultrasound image using 3D convolutional neural network for differentiating malignant and benign mediastinal lesions

Aug 02, 2021

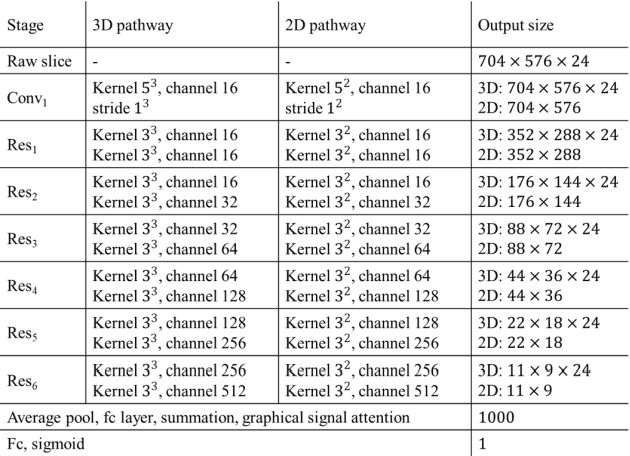

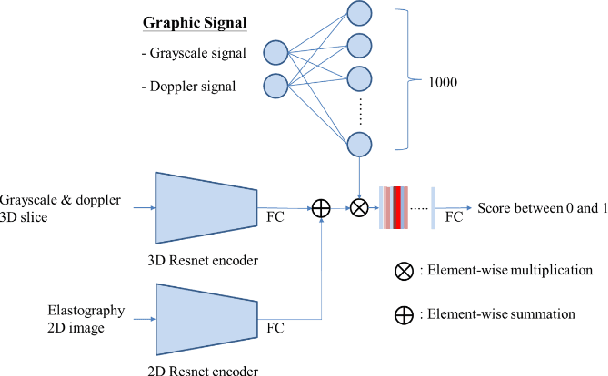

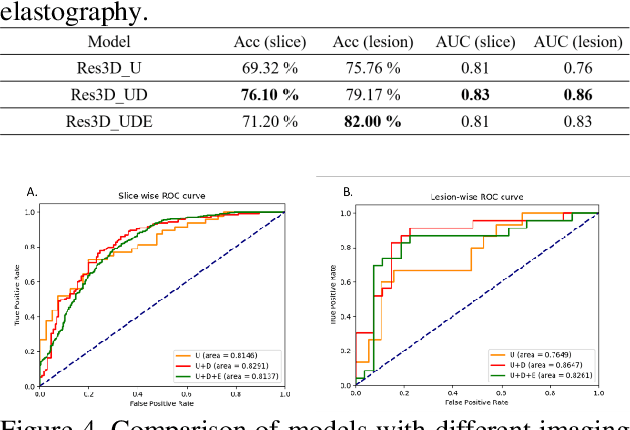

Abstract: The purpose of this study is to differentiate malignant and benign mediastinal lesions from endobronchial ultrasound (EBUS) images using a three-dimensional convolutional neural network. Compared with previous studies, our proposed model is robust to noise and able to fuse various imaging features and spatiotemporal features of EBUS videos. Endobronchial ultrasound-guided transbronchial needle aspiration (EBUS-TBNA) is a diagnostic tool for intrathoracic lymph nodes. Physicians can observe the characteristics of the lesion using grayscale mode, Doppler mode, and elastography during the procedure. To process the EBUS data in the form of a video and appropriately integrate the features of multiple imaging modes, we used a time-series three-dimensional convolutional neural network (3D CNN) to learn the spatiotemporal features and designed a variety of architectures to fuse the imaging modes. Our model (Res3D_UDE) took grayscale mode, Doppler mode, and elastography as training data and achieved an accuracy of 82.00% and an area under the curve (AUC) of 0.83 on the validation set. Unlike previous studies, we directly used videos recorded during the procedure as training and validation data, without additional manual selection, which might make clinical application easier. In addition, a model designed with a 3D CNN can effectively learn spatiotemporal features and improve accuracy. In the future, our model may be used to guide physicians to quickly and correctly find the target lesions for slice sampling during the inspection process, reduce the number of slices of benign lesions, and shorten the inspection time.
