Yuji Sekiya

mdx: A Cloud Platform for Supporting Data Science and Cross-Disciplinary Research Collaborations

Mar 27, 2022

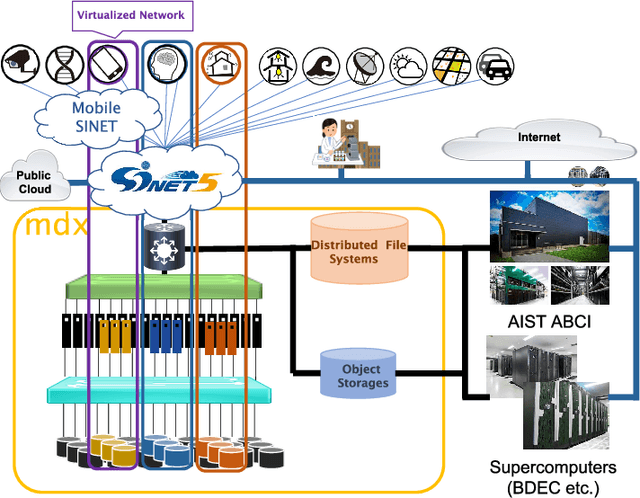

Abstract: The growing amount of data and advances in data science have created a need for a new kind of cloud platform that provides users with flexibility, strong security, and the ability to couple with supercomputers and edge devices through high-performance networks. We have built such a nation-wide cloud platform, called "mdx", to meet this need. The mdx platform's virtualization service, jointly operated by 9 national universities and 2 national research institutes in Japan, launched in 2021, and more features are in development. Currently, mdx is used by researchers in a wide variety of domains, including materials informatics, geo-spatial information science, life science, astronomical science, economics, social science, and computer science. This paper provides an overview of the mdx platform, details the motivation for its development, reports its current status, and outlines its future plans.

Classification of URL bitstreams using Bag of Bytes

Nov 11, 2021

Abstract: Protecting users from accessing malicious web sites is one of the important management tasks for network operators. There are many open-source and commercial products to control which web sites users can access. The most traditional approach is blacklist-based filtering. This mechanism is simple but not scalable, though there are some enhanced approaches utilizing fuzzy matching technologies. Other approaches use machine learning (ML) techniques by extracting features from URL strings. These can cover a wider area of Internet web sites, but finding good features requires deep knowledge of trends in web site design. Recently, another approach using deep learning (DL) has appeared. The DL approach helps to extract features automatically by examining a large amount of existing sample data. Using this technique, we can build a flexible filtering decision module by continually training the neural network on recent trends, without any specific expert knowledge of the URL domain. In this paper, we apply a mechanical approach to generate feature vectors from URL strings. We implemented our approach and tested it with realistic URL access history data taken from a research organization and data from the well-known phishing site archive PhishTank.com. Our approach achieved 2-3% better accuracy compared to the existing DL-based approach.
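As a rough illustration of what a "mechanical" feature vector built from a URL string can look like, the sketch below counts how often each byte value occurs in the URL, in the spirit of the "Bag of Bytes" name in the title. The abstract does not specify the encoding, so this plain 256-bin byte histogram is an assumption, and the function name `bag_of_bytes` is hypothetical; the paper's actual construction may differ.

```python
from collections import Counter


def bag_of_bytes(url: str) -> list[int]:
    """Return a 256-element histogram of the byte values in a URL.

    Assumption: a plain byte-count vector. Byte order is discarded,
    which is what makes this a "bag" representation.
    """
    counts = Counter(url.encode("utf-8"))
    return [counts.get(value, 0) for value in range(256)]


# Example: every URL maps to a fixed-length vector, regardless of its length.
vec = bag_of_bytes("http://example.com/a?a=1")
print(len(vec), vec[ord("a")])
```

No URL-specific knowledge (such as token lists or domain heuristics) goes into the vector, so it can be fed to a classifier without hand-designed features.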

Classifying DNS Servers based on Response Message Matrix using Machine Learning

Nov 09, 2021

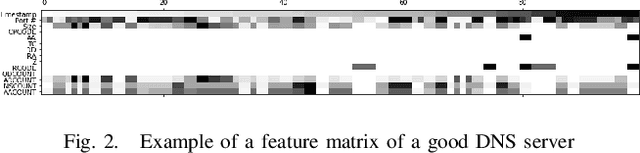

Abstract: Improperly configured domain name system (DNS) servers are sometimes used as packet reflectors as part of a DoS or DDoS attack. Detecting packets created as a result of this activity is logically possible by monitoring the DNS request and response traffic. Any response that does not have a corresponding request can be considered a reflected message; checking and tracking every DNS packet, however, is a non-trivial operation. In this paper, we propose a detection mechanism for DNS servers used as reflectors by using a DNS server feature matrix built from a small number of packets and a machine learning algorithm. The F1 score of bad DNS server detection was more than 0.9 when the test and training data were generated within the same day, and more than 0.7 for the data not used for the training and testing phase of the same day.
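The baseline idea in the abstract, that a response with no corresponding request can be treated as reflected, can be sketched as follows. This is the naive per-packet tracking that the abstract describes as costly, not the proposed feature-matrix method. The `DnsPacket` record and its fields are hypothetical simplifications chosen for illustration.

```python
from typing import NamedTuple


class DnsPacket(NamedTuple):
    """Hypothetical, simplified view of an observed DNS message."""
    is_response: bool
    client: str   # address of the querying host
    server: str   # address of the DNS server
    txid: int     # DNS transaction ID
    qname: str    # queried domain name


def find_unsolicited(packets: list[DnsPacket]) -> list[DnsPacket]:
    """Return responses that match no previously seen request."""
    pending = set()
    unsolicited = []
    for pkt in packets:
        key = (pkt.client, pkt.server, pkt.txid, pkt.qname)
        if not pkt.is_response:
            pending.add(key)          # remember the outstanding request
        elif key in pending:
            pending.discard(key)      # normal request/response pair
        else:
            unsolicited.append(pkt)   # response nobody asked for
    return unsolicited


trace = [
    DnsPacket(False, "10.0.0.1", "192.0.2.53", 1, "example.com"),
    DnsPacket(True, "10.0.0.1", "192.0.2.53", 1, "example.com"),
    DnsPacket(True, "10.0.0.9", "192.0.2.53", 7, "example.org"),
]
suspicious = find_unsolicited(trace)
```

The `pending` set grows with every outstanding request, which gives a sense of why tracking every DNS packet at scale is non-trivial.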
