Yu-Han Huang

Finite-Horizon Quickest Change Detection Balancing Latency with False Alarm Probability

Nov 16, 2025

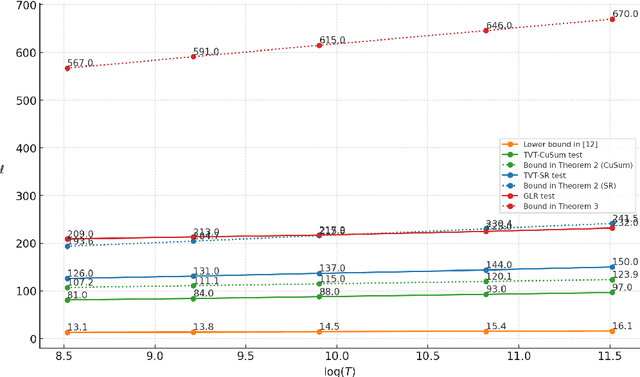

Abstract: A finite-horizon variant of the quickest change detection (QCD) problem that is of relevance to learning in non-stationary environments is studied. The metric characterizing false alarms is the probability of a false alarm occurring before the horizon ends. The metric that characterizes the delay is *latency*, which is the smallest value such that the probability that the detection delay exceeds this value is upper bounded by a predetermined latency level. The objective is to minimize the latency (at a given latency level), while maintaining a low false alarm probability. Under the pre-specified latency and false alarm levels, a universal lower bound on the latency, which any change detection procedure needs to satisfy, is derived. Change detectors are then developed, which are order-optimal in terms of the horizon. The case where the pre- and post-change distributions are known is considered first, and then the results are generalized to the non-parametric case when they are unknown except that they are sub-Gaussian with different means. Simulations are provided to validate the theoretical results.
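
The two metrics described in this abstract can be written out as a rough sketch. All notation here is assumed for illustration and is not taken from the paper: $\tau$ is the detector's stopping time, $\nu$ the change-point, $T$ the horizon, $\delta_F$ the false alarm level, $\delta_D$ the latency level, $\mathbb{P}_\infty$ the law when no change occurs, and $\mathbb{P}_\nu$ the law when the change occurs at $\nu$. How the paper handles the dependence on $\nu$ (for example, a worst case over change-points) or conditions on the absence of a false alarm is not stated in the abstract, so this version fixes a single $\nu$.

```latex
% False alarm constraint: no alarm before the horizon ends, with high probability
\mathbb{P}_\infty(\tau \le T) \le \delta_F

% Latency at level \delta_D: the smallest d whose exceedance probability is at most \delta_D
\ell(\tau; \delta_D) = \min\bigl\{ d \ge 0 : \mathbb{P}_\nu(\tau - \nu > d) \le \delta_D \bigr\}

% Design goal: minimize latency subject to the false alarm constraint
\min_{\tau} \; \ell(\tau; \delta_D)
\quad \text{subject to} \quad \mathbb{P}_\infty(\tau \le T) \le \delta_F
```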

Detection Is All You Need: A Feasible Optimal Prior-Free Black-Box Approach For Piecewise Stationary Bandits

Jan 31, 2025

Abstract: We study the problem of piecewise stationary bandits without prior knowledge of the underlying non-stationarity. We propose the first *feasible* black-box algorithm applicable to most common parametric bandit variants. Our procedure, termed Detection Augmented Bandit (DAB), is modular, accepting any stationary bandit algorithm as input and augmenting it with a change detector. DAB achieves optimal regret in the piecewise stationary setting under mild assumptions. Specifically, we prove that DAB attains the order-optimal regret bound of $\tilde{\mathcal{O}}(\sqrt{N_T T})$, where $N_T$ denotes the number of changes over the horizon $T$, if its input stationary bandit algorithm has order-optimal stationary regret guarantees. Applying DAB to different parametric bandit settings, we recover recent state-of-the-art results. Notably, for self-concordant bandits, DAB achieves optimal dynamic regret, while previous works obtain suboptimal bounds and require knowledge of the non-stationarity. In simulations on piecewise stationary environments, DAB outperforms existing approaches across varying numbers of changes. Interestingly, despite being theoretically designed for piecewise stationary environments, DAB is also effective in simulations in drifting environments, outperforming existing methods designed specifically for this scenario.
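
The modular idea in this abstract, a stationary bandit algorithm wrapped with a change detector that triggers a restart, can be illustrated with a minimal Python sketch. This is not the authors' DAB procedure: the class names (`UCB1`, `WindowMeanDetector`, `DetectAndRestart`), the split-window detector, the forced-exploration probability, and all numeric parameters are hypothetical choices made only for illustration.

```python
import math
import random


class UCB1:
    """Standard UCB1, used here as the stationary bandit input."""

    def __init__(self, n_arms):
        self.n_arms = n_arms
        self.reset()

    def reset(self):
        self.counts = [0] * self.n_arms
        self.sums = [0.0] * self.n_arms
        self.t = 0

    def select(self):
        # Play every arm once before using confidence bounds.
        for arm in range(self.n_arms):
            if self.counts[arm] == 0:
                return arm
        return max(
            range(self.n_arms),
            key=lambda a: self.sums[a] / self.counts[a]
            + math.sqrt(2.0 * math.log(self.t) / self.counts[a]),
        )

    def update(self, arm, reward):
        self.counts[arm] += 1
        self.sums[arm] += reward
        self.t += 1


class WindowMeanDetector:
    """Hypothetical detector: compares the means of the two halves of a
    sliding window and flags a change when they differ by > threshold."""

    def __init__(self, window=60, threshold=0.4):
        self.window = window
        self.threshold = threshold
        self.samples = []

    def reset(self):
        self.samples = []

    def update(self, x):
        self.samples.append(x)
        if len(self.samples) > self.window:
            self.samples.pop(0)
        if len(self.samples) < self.window:
            return False
        half = self.window // 2
        first = sum(self.samples[:half]) / half
        second = sum(self.samples[half:]) / (self.window - half)
        return abs(first - second) > self.threshold


class DetectAndRestart:
    """Wraps a stationary bandit with one detector per arm (assumed design)."""

    def __init__(self, bandit, detectors, explore_prob=0.1, rng=None):
        self.bandit = bandit
        self.detectors = detectors
        self.explore_prob = explore_prob  # assumption: forced exploration
        self.rng = rng or random.Random(0)
        self.restarts = 0

    def select(self):
        # Uniform forced exploration keeps every arm's detector supplied.
        if self.rng.random() < self.explore_prob:
            return self.rng.randrange(self.bandit.n_arms)
        return self.bandit.select()

    def update(self, arm, reward):
        self.bandit.update(arm, reward)
        if self.detectors[arm].update(reward):
            # On an alarm, restart the bandit and clear every detector.
            self.bandit.reset()
            for det in self.detectors:
                det.reset()
            self.restarts += 1


def run(horizon=4000, change_at=2000, seed=0):
    """Two Bernoulli arms whose means swap once at `change_at`."""
    env_rng = random.Random(seed)
    before, after = [0.9, 0.1], [0.1, 0.9]
    agent = DetectAndRestart(
        UCB1(2), [WindowMeanDetector() for _ in range(2)],
        rng=random.Random(seed + 1),
    )
    total = 0.0
    for t in range(horizon):
        means = before if t < change_at else after
        arm = agent.select()
        reward = 1.0 if env_rng.random() < means[arm] else 0.0
        agent.update(arm, reward)
        total += reward
    return total, agent.restarts


if __name__ == "__main__":
    print(run())
```

Because the wrapper only calls `select`, `update`, and `reset` on its input, another stationary algorithm or detector exposing the same methods could be swapped in without touching the wrapper, which is the sense of "black-box" being illustrated here.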

Change Detection-Based Procedures for Piecewise Stationary MABs: A Modular Approach

Jan 02, 2025

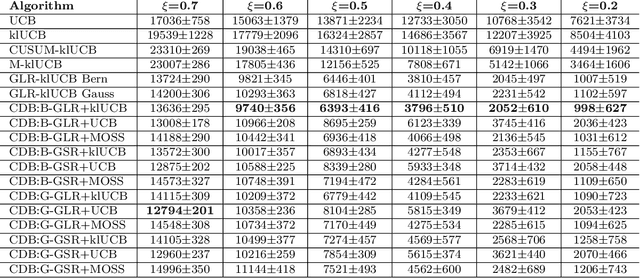

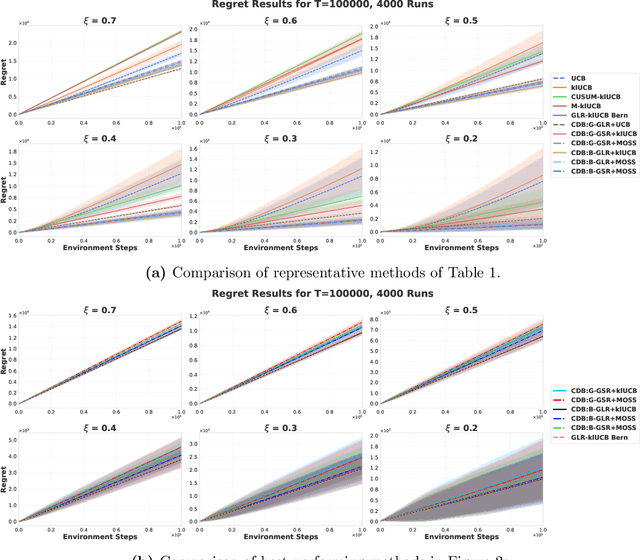

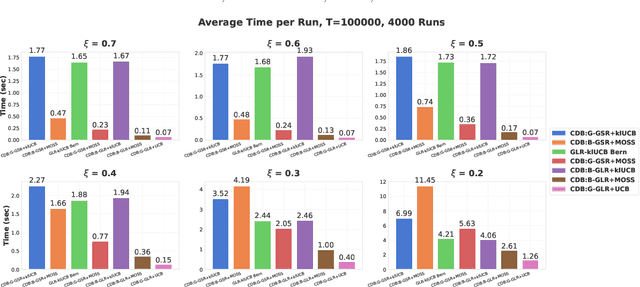

Abstract: Conventional Multi-Armed Bandit (MAB) algorithms are designed for stationary environments, where the reward distributions associated with the arms do not change with time. In many applications, however, the environment is more accurately modeled as being nonstationary. In this work, piecewise stationary MAB (PS-MAB) environments are investigated, in which the reward distributions associated with a subset of the arms change at some change-points and remain stationary between change-points. Our focus is on the asymptotic analysis of PS-MABs, for which practical algorithms based on change detection (CD) have been previously proposed. Our goal is to modularize the design and analysis of such CD-based Bandit (CDB) procedures. To this end, we identify the requirements for stationary bandit algorithms and change detectors in a CDB procedure that are needed for the modularization. We assume that the rewards are sub-Gaussian. Under this assumption and a condition on the separation of the change-points, we show that the analysis of CDB procedures can indeed be modularized, so that regret bounds can be obtained in a unified manner for various combinations of change detectors and bandit algorithms. Through this analysis, we develop new modular CDB procedures that are order-optimal. We compare the performance of our modular CDB procedures with various other methods in simulations.

Is Prior-Free Black-Box Non-Stationary Reinforcement Learning Feasible?

Oct 17, 2024

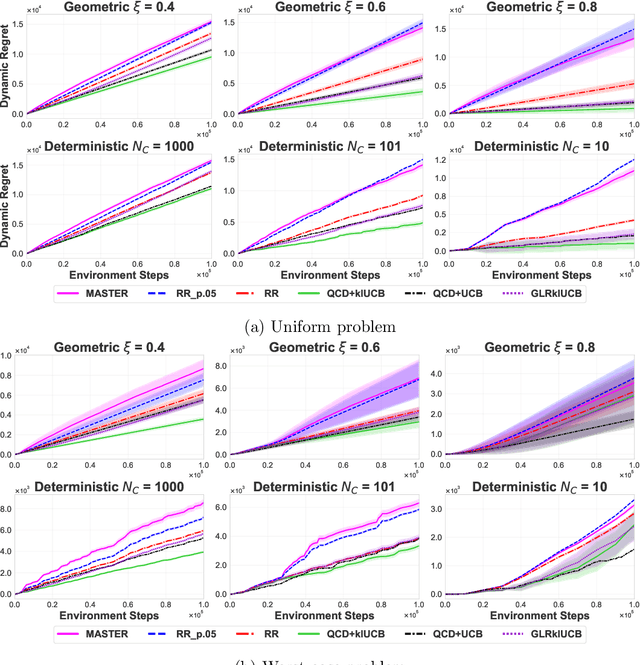

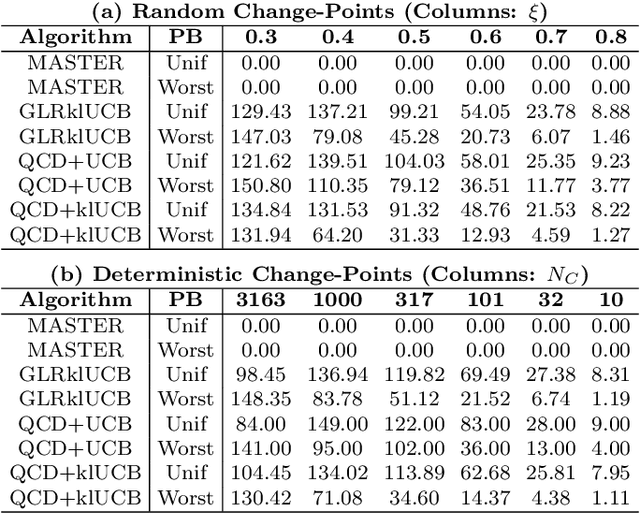

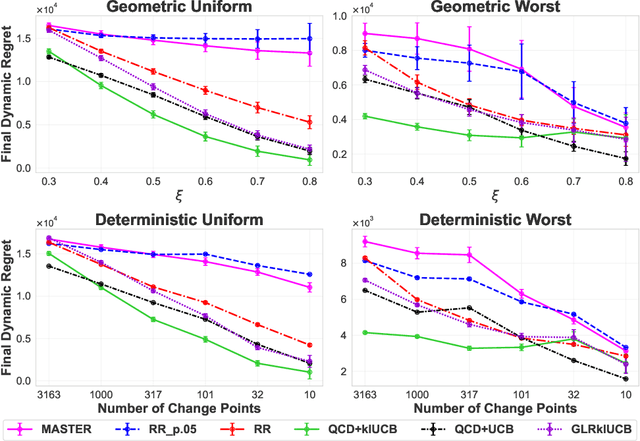

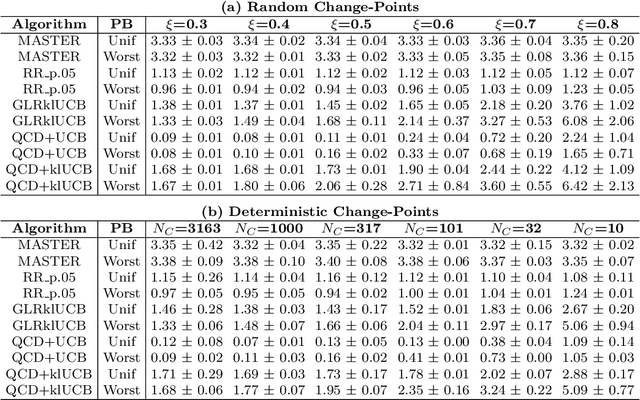

Abstract: We study the problem of Non-Stationary Reinforcement Learning (NS-RL) without prior knowledge about the system's non-stationarity. A state-of-the-art, black-box algorithm, known as MASTER, is considered, with a focus on identifying the conditions under which it can achieve its stated goals. Specifically, we prove that MASTER's non-stationarity detection mechanism is not triggered for practical choices of horizon, leading to performance akin to a random restarting algorithm. Moreover, we show that the regret bound for MASTER, while being order-optimal, stays above the worst-case linear regret until unreasonably large values of the horizon. To validate these observations, MASTER is tested for the special case of piecewise stationary multi-armed bandits, along with methods that employ random restarting, and others that use quickest change detection to restart. A simple, order-optimal random restarting algorithm that has prior knowledge of the non-stationarity is proposed as a baseline. The behavior of the MASTER algorithm is validated in simulations, and it is shown that methods employing quickest change detection are more robust and consistently outperform MASTER and other random restarting approaches.
