Younggil Cho

Planning for target retrieval using a robotic manipulator in cluttered and occluded environments

Jul 09, 2019

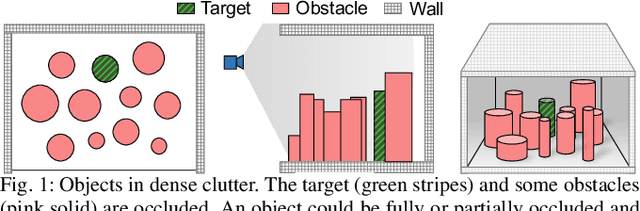

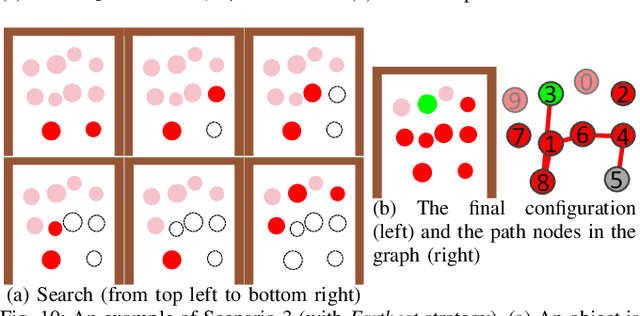

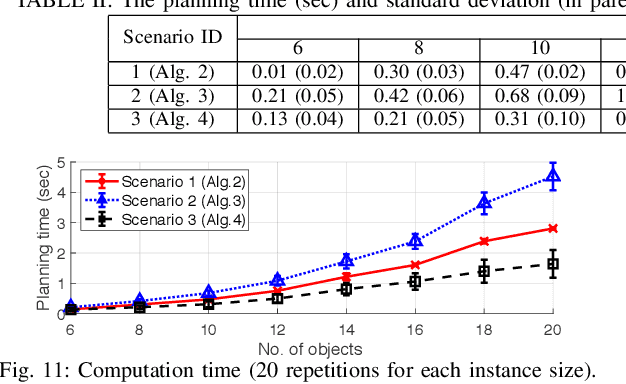

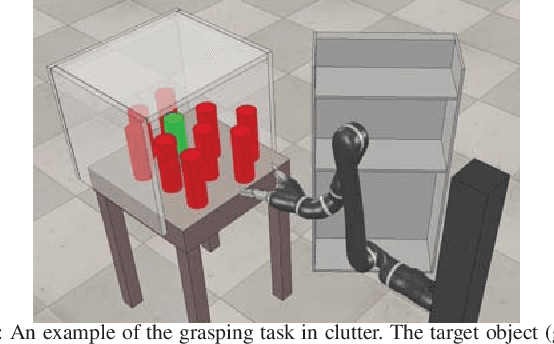

Abstract: This paper presents planning algorithms for a fixed-base robotic manipulator that must grasp a target object in a cluttered environment. We consider a dense configuration of objects in a confined space, such that no collision-free path to the target exists. The robot must relocate some objects to retrieve the target while avoiding collisions. To complete the retrieval task quickly, the robot needs to compute a plan that optimizes an objective value directly related to the execution time of the relocation plan. We propose planning algorithms that aim to minimize the number of objects to be relocated. This objective is appropriate for the object retrieval task because grasping and releasing objects often dominate the total running time. In addition to an algorithm for fully known and static environments, we propose algorithms that can deal with the uncertain and dynamic situations caused by occluded views. The proposed algorithms are shown to be complete and to run in polynomial time. Our methods reduce the total running time significantly compared to a baseline method (e.g., a 25.1% reduction in a known static environment with 10 objects

Efficient Obstacle Rearrangement for Object Manipulation Tasks in Cluttered Environments

Feb 19, 2019

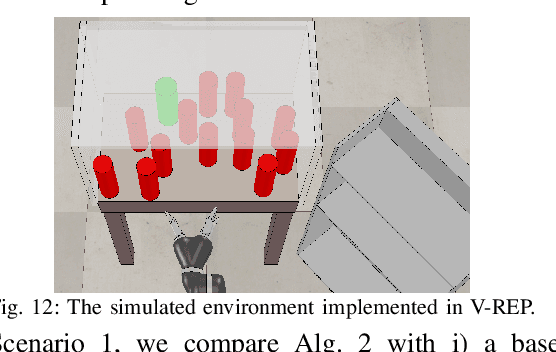

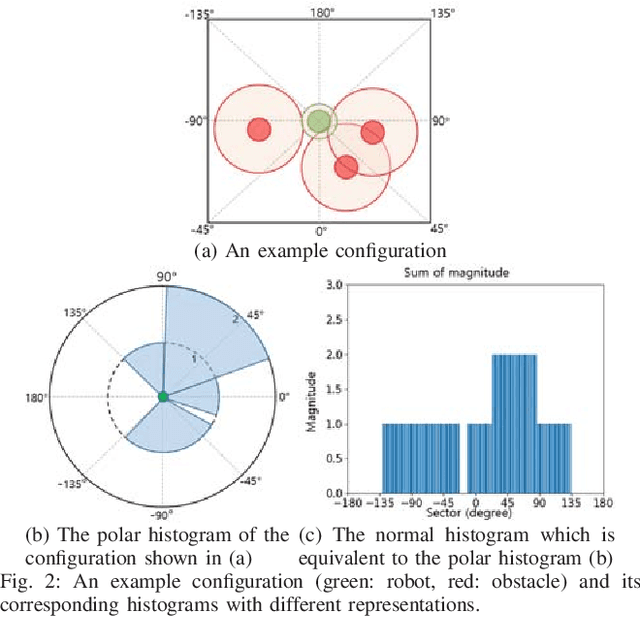

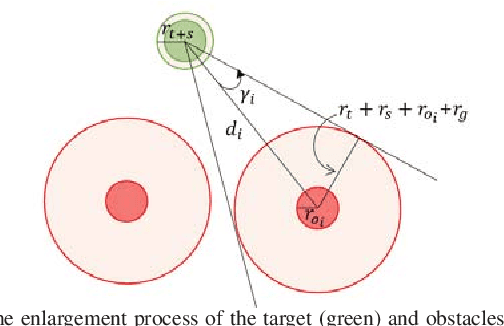

Abstract: We present an algorithm that produces a plan for relocating obstacles so that a robotic manipulator can grasp a target in clutter without collisions. We consider configurations where objects are densely packed in a constrained and confined space, so there exists no collision-free path for the manipulator unless obstacles are relocated. Since the problem of planning for object rearrangement has been shown to be NP-hard, it is difficult to perform such manipulation tasks efficiently, even though they arise frequently in service domains (e.g., taking out a target from a shelf or a fridge). Our proposed planner employs a collision avoidance scheme that has been widely used in mobile robot navigation. The planner quickly determines, in real time, which obstacle to remove. It can also deal with dynamic changes in the configuration (e.g., changes in object poses). Our method is shown to be complete and to run in polynomial time. Experimental results in a realistic simulated environment show that our method reduces execution time by up to 31% compared to competing methods.
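To make the idea of "choosing which obstacles to relocate" concrete, here is a minimal toy sketch in Python. It is not the planner described in either abstract: it assumes objects are discs on a 2D shelf, the gripper reaches the target along a straight line from one of a few candidate entry points, and an obstacle must be relocated if it overlaps that corridor. The names `blocking_obstacles`, `best_approach`, and the `clearance` parameter are hypothetical and chosen only for illustration.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Disc = Tuple[float, float, float]  # (x, y, radius); toy model of an object


def blocking_obstacles(start: Point, target: Point,
                       obstacles: List[Disc], clearance: float) -> List[int]:
    """Return indices of obstacles overlapping the straight corridor
    from start to target (assumption: straight-line approach only)."""
    sx, sy = start
    dx, dy = target[0] - sx, target[1] - sy
    seg_len_sq = dx * dx + dy * dy
    blocked = []
    for i, (ox, oy, radius) in enumerate(obstacles):
        # Project the obstacle centre onto the segment, clamped to its ends.
        if seg_len_sq == 0.0:
            t = 0.0
        else:
            t = ((ox - sx) * dx + (oy - sy) * dy) / seg_len_sq
            t = max(0.0, min(1.0, t))
        px, py = sx + t * dx, sy + t * dy
        # The obstacle blocks the path if it is closer than its radius
        # plus the clearance needed by the gripper.
        if math.hypot(ox - px, oy - py) < radius + clearance:
            blocked.append(i)
    return blocked


def best_approach(entries: List[Point], target: Point,
                  obstacles: List[Disc],
                  clearance: float) -> Optional[Tuple[Point, List[int]]]:
    """Pick the entry point whose corridor requires relocating the
    fewest obstacles; returns None if no entry points are given."""
    best = None
    for start in entries:
        blocked = blocking_obstacles(start, target, obstacles, clearance)
        if best is None or len(blocked) < len(best[1]):
            best = (start, blocked)
    return best
```

Calling `best_approach` with a handful of entry points along the shelf opening returns the chosen entry point together with the indices of the obstacles that would have to be moved first. A real planner would also have to decide where relocated objects go and handle occlusion and pose changes, which this sketch ignores.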
