Yoshihiro Yamada

CAT: Circular-Convolutional Attention for Sub-Quadratic Transformers

Apr 09, 2025

Abstract:Transformers have driven remarkable breakthroughs in natural language processing and computer vision, yet their standard attention mechanism still imposes O(N^2) complexity, hindering scalability to longer sequences. We introduce Circular-convolutional ATtention (CAT), a Fourier-based approach that efficiently applies circular convolutions to reduce complexity without sacrificing representational power. CAT achieves O(N log N) computations, requires fewer learnable parameters by streamlining fully-connected layers, and introduces no heavier operations, resulting in consistent accuracy improvements and about a 10% speedup in naive PyTorch implementations on large-scale benchmarks such as ImageNet-1k and WikiText-103. Grounded in an engineering-isomorphism framework, CAT's design not only offers practical efficiency and ease of implementation but also provides insights to guide the development of next-generation, high-performance Transformer architectures. Finally, our ablation studies highlight the key conditions underlying CAT's success, shedding light on broader principles for scalable attention mechanisms.
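The O(N log N) figure rests on the convolution theorem: a circular convolution in the sequence domain is an element-wise product in the Fourier domain. The sketch below illustrates only that identity in NumPy. It is not the CAT layer itself, and the function names `circular_conv_direct` and `circular_conv_fft` are hypothetical names chosen for this example.

```python
import numpy as np

def circular_conv_direct(x, k):
    """O(N^2) reference: y[i] = sum_m k[m] * x[(i - m) mod N]."""
    n = len(x)
    y = np.zeros(n)
    for i in range(n):
        for m in range(n):
            y[i] += k[m] * x[(i - m) % n]
    return y

def circular_conv_fft(x, k):
    """O(N log N) version: multiply the spectra, then invert the transform."""
    n = len(x)
    return np.fft.irfft(np.fft.rfft(x) * np.fft.rfft(k), n=n)

# Both routes should agree up to floating-point error.
rng = np.random.default_rng(0)
x = rng.standard_normal(8)
k = rng.standard_normal(8)
assert np.allclose(circular_conv_direct(x, k), circular_conv_fft(x, k))
```

The direct loop touches every pair of positions, while the FFT route needs three transforms and one element-wise product, which is where the sub-quadratic cost comes from.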

Joint Search of Data Augmentation Policies and Network Architectures

Jan 12, 2021

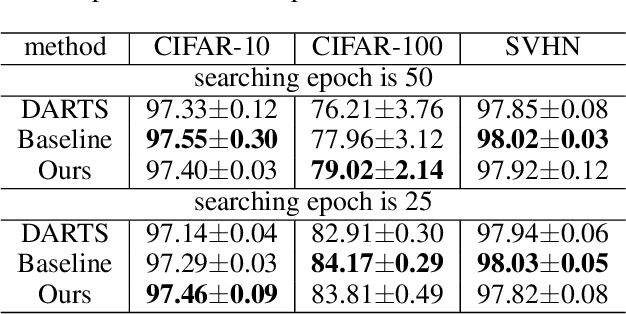

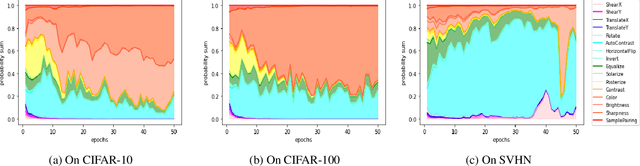

Abstract:The common pipeline for training deep neural networks consists of several building blocks, such as data augmentation and network architecture selection. AutoML is a research field that aims to design those parts automatically, but most methods explore each part independently because searching all the parts simultaneously is more challenging. In this paper, we propose a joint optimization method for data augmentation policies and network architectures to bring more automation to the design of the training pipeline. The core idea of our approach is to make every part of the pipeline differentiable. The proposed method combines differentiable methods for augmentation policy search and network architecture search to optimize them jointly in an end-to-end manner. The experimental results show that our method achieves performance competitive with or superior to the independently searched results.

ShakeDrop regularization

Feb 07, 2018

Abstract:This paper proposes a powerful regularization method named ShakeDrop regularization. ShakeDrop is inspired by Shake-Shake regularization, which decreases error rates by disturbing learning. While Shake-Shake can be applied only to ResNeXt, which has multiple branches, ShakeDrop can be applied not only to ResNeXt but also to ResNet, Wide ResNet and PyramidNet in a memory-efficient way. An important and interesting feature of ShakeDrop is that it strongly disturbs learning by multiplying the output of a convolutional layer by a factor that can even be negative in the forward training pass. The effectiveness of ShakeDrop is confirmed by experiments on the CIFAR-10/100 and Tiny ImageNet datasets.
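To make the idea of a possibly negative forward factor concrete, here is a minimal NumPy sketch of one residual block. The abstract does not state the formula, so the gate-and-scale form used here (a Bernoulli gate `b` and a uniform `alpha` in [-1, 1], combined as `b + alpha - b * alpha`) is an assumption for illustration, as are the names `shakedrop_forward` and `p_keep`. The separate random factor that a full method might apply in the backward pass is omitted.

```python
import numpy as np

def shakedrop_forward(x, branch_out, p_keep, rng, training=True):
    """Residual block output x + factor * branch_out (illustrative only).

    Assumed form: with probability p_keep the branch passes unchanged
    (factor = 1); otherwise it is scaled by alpha drawn from U(-1, 1),
    so the factor can be negative.
    """
    if training:
        b = rng.binomial(1, p_keep)        # Bernoulli gate: 1 keeps the branch
        alpha = rng.uniform(-1.0, 1.0)     # perturbation, may be negative
        factor = b + alpha - b * alpha     # 1 if b == 1, alpha if b == 0
    else:
        # Expected factor: E[b] + E[alpha] - E[b] * E[alpha] = p_keep,
        # because alpha has zero mean and is independent of b.
        factor = p_keep
    return x + factor * branch_out

rng = np.random.default_rng(0)
x = np.ones(3)
branch = 2.0 * np.ones(3)
print(shakedrop_forward(x, branch, p_keep=0.5, rng=rng))
print(shakedrop_forward(x, branch, p_keep=0.5, rng=rng, training=False))
```

Under these assumptions the training-time output varies between `x - branch_out` and `x + branch_out`, while evaluation uses the deterministic expected factor.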

Deep Pyramidal Residual Networks with Separated Stochastic Depth

Dec 05, 2016

Abstract:In general object recognition, Deep Convolutional Neural Networks (DCNNs) achieve high accuracy. In particular, ResNet and its improvements have repeatedly set new records for the lowest error rate. In this paper, we propose a method to successfully combine two ResNet improvements, ResDrop and PyramidNet. We confirmed that the proposed network outperformed the conventional methods: on CIFAR-100, it achieved an error rate of 16.18%, compared with 18.29% for PyramidNet and 17.31% for ResNeXt.
