Joint Search of Data Augmentation Policies and Network Architectures

Jan 12, 2021

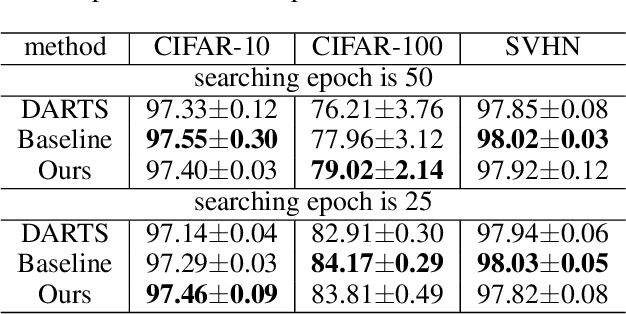

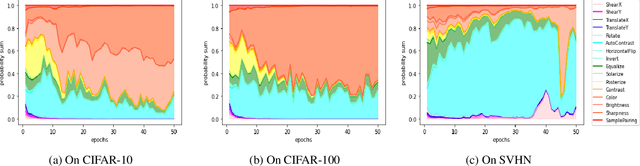

The common pipeline for training deep neural networks consists of several building blocks, such as data augmentation and network architecture selection. AutoML is a research field that aims to design those parts automatically, but most methods explore each part independently because searching all of them simultaneously is more challenging. In this paper, we propose a joint optimization method for data augmentation policies and network architectures to bring more automation to the design of the training pipeline. The core idea of our approach is to make the whole pipeline differentiable. The proposed method combines differentiable methods for augmentation policy search and network architecture search to jointly optimize them in an end-to-end manner. The experimental results show that our method achieves performance competitive with or superior to that of the independently searched results.
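The idea of making both search spaces differentiable can be illustrated with a toy sketch. It is not the paper's algorithm: the candidate operations (`AUG_SCALES`, `ARCH_SCALES`), the single shared squared-error loss, and all function names are assumptions chosen for illustration. Each discrete choice is relaxed into a softmax-weighted mixture, so gradients of one loss reach the augmentation logits and the architecture logits together.

```python
import math

# Hypothetical candidates: every operation just multiplies its input by a constant.
AUG_SCALES = [1.0, 0.5, -1.0]   # stand-ins for candidate augmentations
ARCH_SCALES = [1.0, 2.0, 3.0]   # stand-ins for candidate network operations


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mixed_scale(logits, scales):
    """Continuous relaxation: softmax-weighted average of the candidate scales."""
    probs = softmax(logits)
    return sum(p * s for p, s in zip(probs, scales)), probs


def loss_and_grads(aug_logits, arch_logits, xs, ys):
    """Mean squared error of arch(aug(x)) against y, with analytic gradients
    with respect to both sets of logits."""
    a, p = mixed_scale(aug_logits, AUG_SCALES)
    c, q = mixed_scale(arch_logits, ARCH_SCALES)
    n = len(xs)
    loss = sum((a * c * x - y) ** 2 for x, y in zip(xs, ys)) / n
    # Derivative of the loss with respect to the product a * c.
    d_ac = sum(2.0 * (a * c * x - y) * x for x, y in zip(xs, ys)) / n
    # Chain rule through the product, then through each softmax mixture:
    # d(mixture)/d(logit_i) = prob_i * (scale_i - mixture).
    g_aug = [d_ac * c * pi * (s - a) for pi, s in zip(p, AUG_SCALES)]
    g_arch = [d_ac * a * qi * (s - c) for qi, s in zip(q, ARCH_SCALES)]
    return loss, g_aug, g_arch


def joint_search(steps=500, lr=0.05):
    """Update augmentation and architecture logits together by gradient descent."""
    xs = [-2.0, -1.0, 1.0, 2.0]
    ys = [2.0 * x for x in xs]          # toy target: y = 2x
    aug_logits = [0.0, 0.0, 0.0]
    arch_logits = [0.0, 0.0, 0.0]
    history = []
    for _ in range(steps):
        loss, g_aug, g_arch = loss_and_grads(aug_logits, arch_logits, xs, ys)
        history.append(loss)
        aug_logits = [v - lr * g for v, g in zip(aug_logits, g_aug)]
        arch_logits = [v - lr * g for v, g in zip(arch_logits, g_arch)]
    return aug_logits, arch_logits, history


if __name__ == "__main__":
    aug_logits, arch_logits, history = joint_search()
    print("first loss:", history[0], "last loss:", history[-1])
    print("augmentation weights:", softmax(aug_logits))
    print("architecture weights:", softmax(arch_logits))
```

In this sketch both sets of logits minimize the same loss on the same data, which keeps the example short. The abstract does not specify the objectives or search spaces used in the paper, so the choice made here should be read as a placeholder.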
