Yongkyun Lee

Improving Robustness of Reinforcement Learning for Power System Control with Adversarial Training

Oct 19, 2021

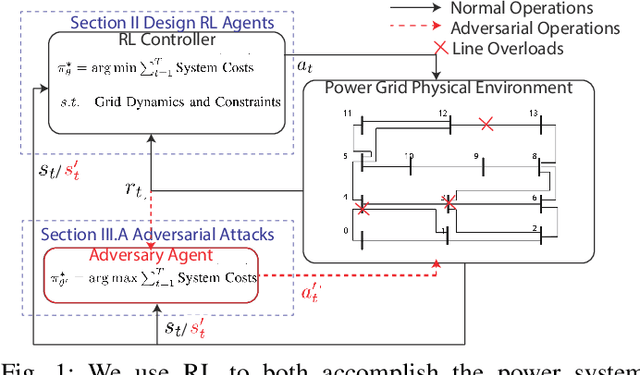

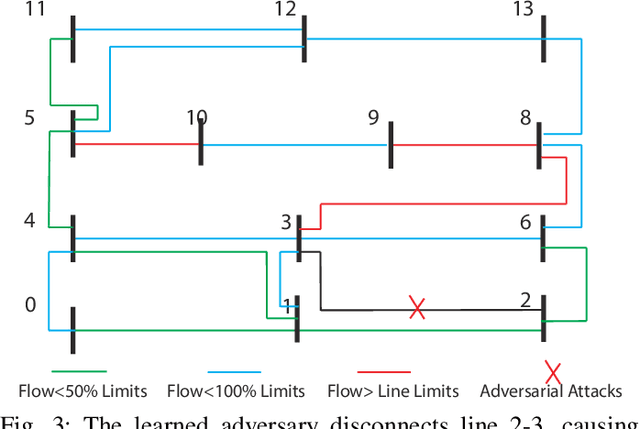

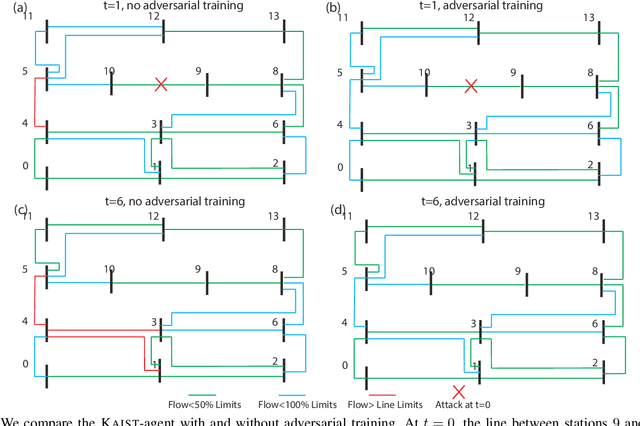

Abstract:Due to the proliferation of renewable energy and its intrinsic intermittency and stochasticity, current power systems face severe operational challenges. Data-driven decision-making algorithms from reinforcement learning (RL) offer a solution towards efficiently operating a clean energy system. Although RL algorithms achieve promising performance compared to model-based control models, there has been limited investigation of RL robustness in safety-critical physical systems. In this work, we first show that several competition-winning, state-of-the-art RL agents proposed for power system control are vulnerable to adversarial attacks. Specifically, we use an adversary Markov Decision Process to learn an attack policy, and demonstrate the potency of our attack by successfully attacking multiple winning agents from the Learning To Run a Power Network (L2RPN) challenge, under both white-box and black-box attack settings. We then propose to use adversarial training to increase the robustness of RL agent against attacks and avoid infeasible operational decisions. To the best of our knowledge, our work is the first to highlight the fragility of grid control RL algorithms, and contribute an effective defense scheme towards improving their robustness and security.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge