Yi-Ming Chan

Compacting, Picking and Growing for Unforgetting Continual Learning

Oct 30, 2019

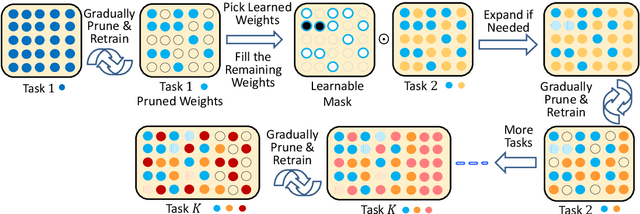

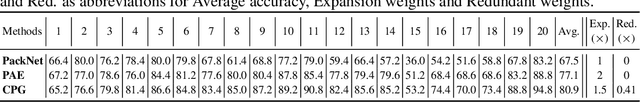

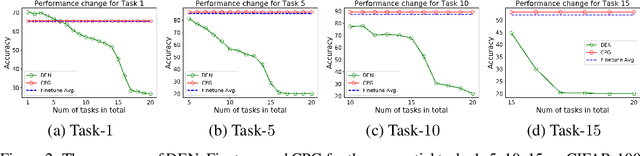

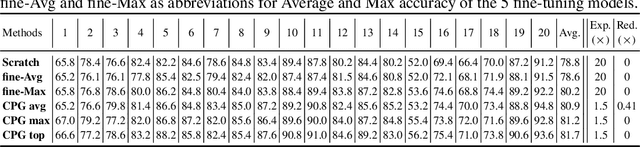

Abstract: Continual lifelong learning is essential to many applications. In this paper, we propose a simple but effective approach to continual deep learning. Our approach leverages the principles of deep model compression, critical weight selection, and progressive network expansion. By integrating them iteratively, we introduce an incremental learning method that scales with the number of sequential tasks in a continual learning process. Our approach is easy to implement and has several favorable characteristics. First, it avoids forgetting (i.e., it learns new tasks while remembering all previous tasks). Second, it allows model expansion while keeping the model compact when handling sequential tasks. Third, through our compaction and selection/expansion mechanism, we show that the knowledge accumulated from previous tasks helps build a better model for new tasks than training a separate model for each task. Experimental results show that our approach can incrementally learn a deep model that tackles multiple tasks without forgetting, while remaining compact and outperforming individual task training.
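The compact/pick/grow cycle described above can be illustrated with a toy sketch. The abstract does not state the pruning criterion or how weights are selected, so everything here is an assumption for illustration: magnitude pruning stands in for compaction, a set of picked indices stands in for critical weight selection, and the names `compact`, `grow`, and `task_view` are hypothetical.

```python
def compact(weights, owner, task_id, keep_ratio):
    """Assumed compaction step: magnitude-prune the currently free weights.

    The largest free weights are frozen and assigned to `task_id`;
    the rest are zeroed and stay free for later tasks.
    """
    free = [i for i, o in enumerate(owner) if o is None]
    n_keep = max(1, round(len(free) * keep_ratio))
    ranked = sorted(free, key=lambda i: abs(weights[i]), reverse=True)
    for i in ranked[:n_keep]:
        owner[i] = task_id          # frozen from now on
    for i in ranked[n_keep:]:
        weights[i] = 0.0            # released for future tasks


def grow(weights, owner, n_new):
    """Assumed growing step: append fresh, unowned weights."""
    weights.extend([0.0] * n_new)
    owner.extend([None] * n_new)


def task_view(weights, owner, task_id, picked):
    """Weights a task uses: its own plus the old weights it picked."""
    return [w if (o == task_id or i in picked) else 0.0
            for i, (w, o) in enumerate(zip(weights, owner))]


# Task 0: pretend training produced these values, then compact.
weights = [0.9, -0.1, 0.5, 0.05]
owner = [None] * 4
compact(weights, owner, task_id=0, keep_ratio=0.5)

# Task 1: only free slots (1 and 3) are trained; frozen ones are untouched.
weights[1], weights[3] = 0.3, -0.7
compact(weights, owner, task_id=1, keep_ratio=0.5)

# Grow the model in case a later task needs more capacity.
grow(weights, owner, n_new=2)

# Task 1 reuses old weight 0 (picked) but not old weight 2.
view = task_view(weights, owner, task_id=1, picked={0})
```

Because weights owned by an earlier task are never overwritten, that task's outputs stay the same as new tasks are added, which is the sense in which this kind of scheme avoids forgetting.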

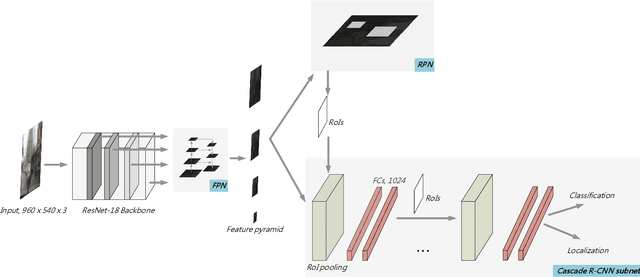

IMMVP: An Efficient Daytime and Nighttime On-Road Object Detector

Oct 28, 2019

Abstract: It is hard to detect on-road objects under various lighting conditions. To improve the quality of the classifier, we use three techniques. We define subclasses to separate daytime and nighttime samples. We then skip similar samples in the training set to prevent overfitting. External training samples further improve the detection accuracy. To detect objects on an edge device, the Nvidia Jetson TX2 platform, we use the lightweight ResNet-18 FPN model as the backbone feature extractor. The FPN (Feature Pyramid Network) generates good features for detecting objects at various scales. With the Cascade R-CNN technique, the bounding boxes are iteratively refined for better results.

Data-specific Adaptive Threshold for Face Recognition and Authentication

Oct 26, 2018

Abstract: Many face recognition systems boost performance using deep learning models, but few studies examine mechanisms for dealing with online registration. Although we can obtain discriminative facial features through state-of-the-art deep model training, how to decide the best threshold for practical use remains a challenge. We develop an adaptive threshold mechanism to improve recognition accuracy. We also design a face recognition system, along with a registration procedure, to handle online registration. Furthermore, we introduce a new evaluation protocol to better evaluate the performance of an algorithm in real-world scenarios. Under our proposed protocol, our method achieves a 22% accuracy improvement on the LFW dataset.
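The abstract does not spell out how the adaptive threshold is computed. As a hedged illustration of what a data-specific threshold could look like, the sketch below assumes one plausible rule: each registered face keeps its own threshold, set to the highest cosine similarity it has to any face registered under a different identity. The `Gallery` class, its methods, and the two-dimensional embeddings are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class Gallery:
    """Registered faces, each with its own (assumed) adaptive threshold."""

    def __init__(self):
        self.entries = []  # each entry: {"name", "emb", "thr"}

    def register(self, name, emb):
        # A new face starts with the lowest possible threshold.
        new = {"name": name, "emb": emb, "thr": -1.0}
        for entry in self.entries:
            if entry["name"] != name:
                sim = cosine(entry["emb"], emb)
                # Raise both thresholds to the closest impostor seen so far.
                entry["thr"] = max(entry["thr"], sim)
                new["thr"] = max(new["thr"], sim)
        self.entries.append(new)

    def identify(self, emb):
        """Return the best-matching name, or None if below its threshold."""
        best, best_sim = None, -2.0
        for entry in self.entries:
            sim = cosine(entry["emb"], emb)
            if sim > best_sim:
                best, best_sim = entry, sim
        if best is not None and best_sim > best["thr"]:
            return best["name"]
        return None


gallery = Gallery()
gallery.register("alice", [1.0, 0.0])
gallery.register("bob", [0.0, 1.0])
gallery.register("carol", [0.8, 0.6])

match = gallery.identify([0.9, 0.1])      # close to alice
no_match = gallery.identify([-1.0, 0.0])  # far from everyone
```

Under this assumed rule the thresholds tighten automatically as similar-looking identities are registered, so no single global threshold has to be tuned in advance.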

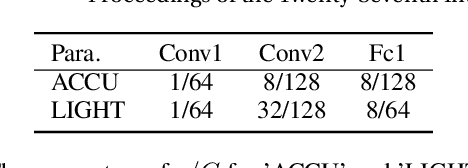

Joint Estimation of Age and Gender from Unconstrained Face Images using Lightweight Multi-task CNN for Mobile Applications

Jun 06, 2018

Abstract: Automatic age and gender classification from unconstrained images has become an essential technique on mobile devices. With limited computing power, developing a robust system is a challenging task. In this paper, we present an efficient convolutional neural network (CNN), called lightweight multi-task CNN, for simultaneous age and gender classification. Lightweight multi-task CNN uses depthwise separable convolution to reduce the model size and the inference time. On the challenging public Adience dataset, its age and gender classification accuracy is better than that of baseline multi-task CNN methods.
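To make the size reduction from depthwise separable convolution concrete, the sketch below counts weights (ignoring biases) for a standard convolution and for its depthwise separable counterpart. The layer shape (3x3 kernel, 64 input channels, 128 output channels) is an arbitrary example, not a layer from the paper.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a depthwise k x k conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv that mixes channels
    return depthwise + pointwise


k, c_in, c_out = 3, 64, 128
std = standard_conv_params(k, c_in, c_out)        # 73728
sep = depthwise_separable_params(k, c_in, c_out)  # 8768
ratio = sep / std                                 # equals 1/c_out + 1/k**2
print(std, sep, round(ratio, 4))
```

For this example layer the separable version needs about 12% of the weights, and the ratio 1/c_out + 1/k² shows that the saving is governed mainly by the kernel size once the output channel count is large.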

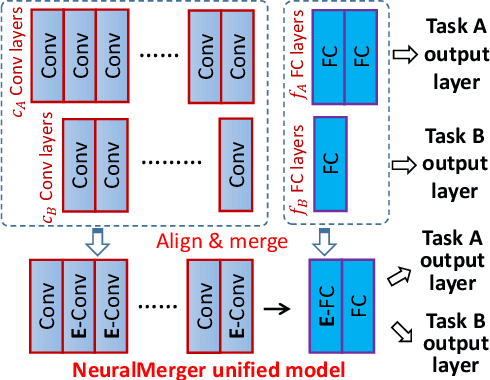

Unifying and Merging Well-trained Deep Neural Networks for Inference Stage

May 14, 2018

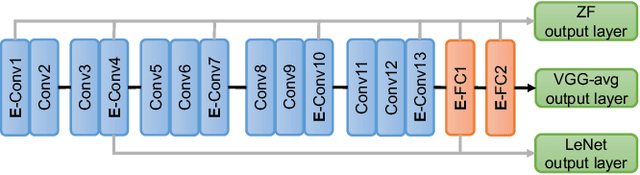

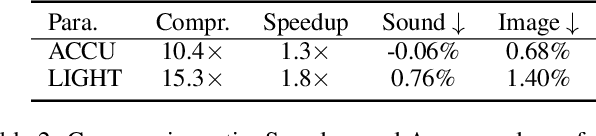

Abstract: We propose a novel method to merge convolutional neural networks for the inference stage. Given two well-trained networks that may have different architectures and handle different tasks, our method aligns the layers of the original networks and merges them into a unified model by sharing the representative codes of weights. The shared weights are then re-trained to fine-tune the performance of the merged model. The proposed method produces a compact model that can run the original tasks simultaneously on resource-limited devices. Because it preserves the general architectures and reuses the shared weights of the well-trained networks, it substantially reduces training overhead and shortens system development time. Experimental results demonstrate satisfactory performance and validate the effectiveness of the method.
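The idea of two networks sharing representative codes of weights can be illustrated with a small sketch. The abstract does not describe the alignment or encoding procedure, so this example assumes the simplest possible variant: scalar k-means over the pooled weights of two already aligned layers, with each layer then storing only indices into the shared codebook. The function names and the toy weights are hypothetical.

```python
def shared_codebook(weights_a, weights_b, k, iters=20):
    """Assumed encoding: 1-D k-means over the pooled weights of both layers."""
    pooled = sorted(weights_a + weights_b)
    # Deterministic initialisation at evenly spaced quantiles.
    codes = [pooled[(2 * j + 1) * len(pooled) // (2 * k)] for j in range(k)]
    for _ in range(iters):
        buckets = [[] for _ in range(k)]
        for w in pooled:
            nearest = min(range(k), key=lambda j: abs(w - codes[j]))
            buckets[nearest].append(w)
        # Move each code to the mean of its bucket; keep it if the bucket is empty.
        codes = [sum(b) / len(b) if b else codes[j]
                 for j, b in enumerate(buckets)]
    return codes


def encode(weights, codes):
    """Replace each weight by the index of its nearest shared code."""
    return [min(range(len(codes)), key=lambda j: abs(w - codes[j]))
            for w in weights]


layer_a = [-1.0, -0.9, 1.0, 1.1]   # toy weights from network A
layer_b = [-1.1, 0.9, 1.0, -1.0]   # toy weights from network B

codes = shared_codebook(layer_a, layer_b, k=2)
idx_a = encode(layer_a, codes)
idx_b = encode(layer_b, codes)
```

After encoding, both layers read from the same small codebook, so only the codebook needs to be stored once and fine-tuned jointly for both tasks.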
