Yi-Jun Cao

Nighttime Thermal Infrared Image Colorization with Feedback-based Object Appearance Learning

Oct 24, 2023

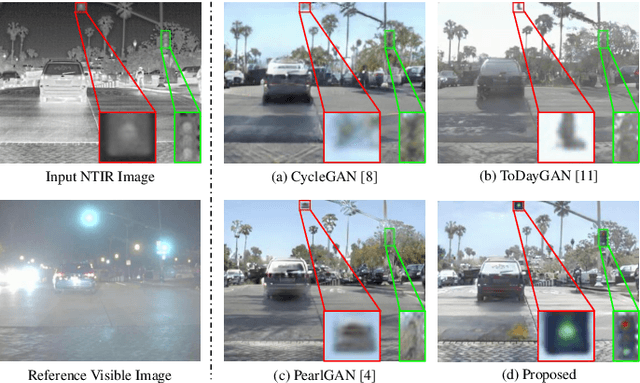

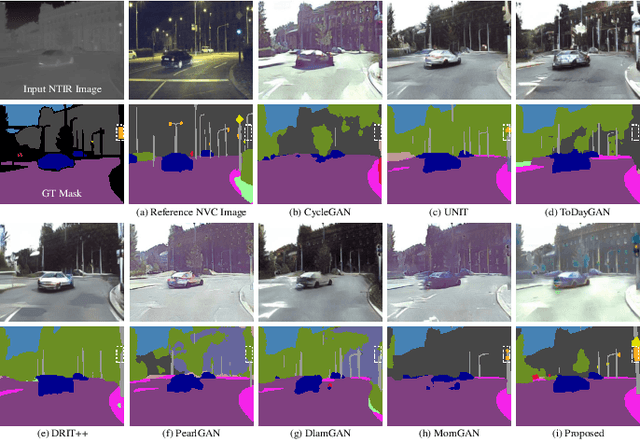

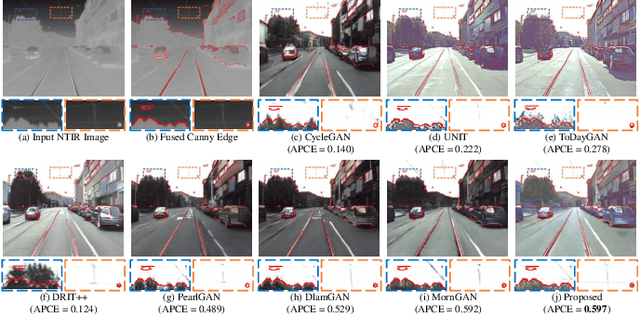

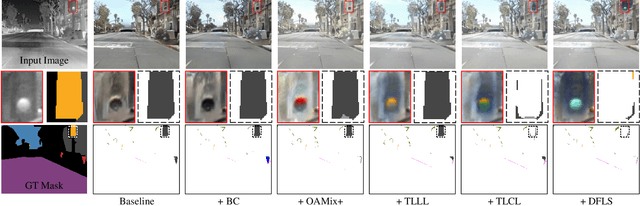

Abstract:Stable imaging in adverse environments (e.g., total darkness) makes thermal infrared (TIR) cameras a prevalent option for night scene perception. However, the low contrast and lack of chromaticity of TIR images are detrimental to human interpretation and subsequent deployment of RGB-based vision algorithms. Therefore, it makes sense to colorize the nighttime TIR images by translating them into the corresponding daytime color images (NTIR2DC). Despite the impressive progress made in the NTIR2DC task, how to improve the translation performance of small object classes is under-explored. To address this problem, we propose a generative adversarial network incorporating feedback-based object appearance learning (FoalGAN). Specifically, an occlusion-aware mixup module and corresponding appearance consistency loss are proposed to reduce the context dependence of object translation. As a representative example of small objects in nighttime street scenes, we illustrate how to enhance the realism of traffic light by designing a traffic light appearance loss. To further improve the appearance learning of small objects, we devise a dual feedback learning strategy to selectively adjust the learning frequency of different samples. In addition, we provide pixel-level annotation for a subset of the Brno dataset, which can facilitate the research of NTIR image understanding under multiple weather conditions. Extensive experiments illustrate that the proposed FoalGAN is not only effective for appearance learning of small objects, but also outperforms other image translation methods in terms of semantic preservation and edge consistency for the NTIR2DC task.

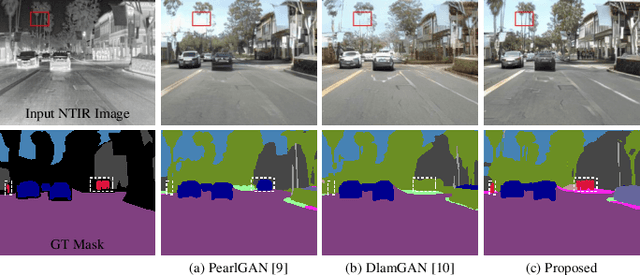

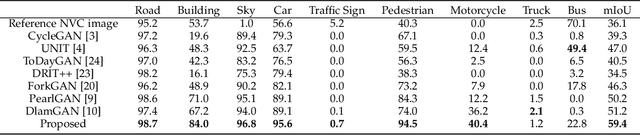

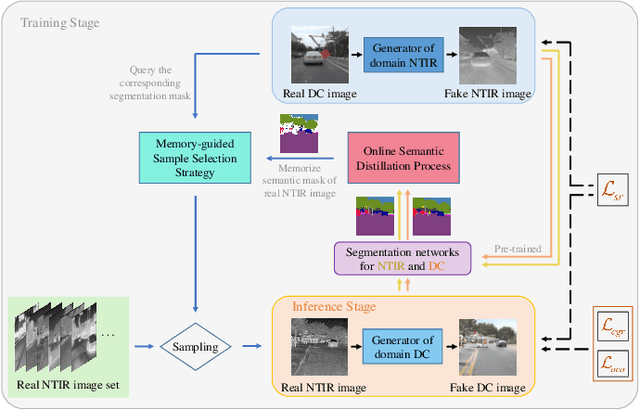

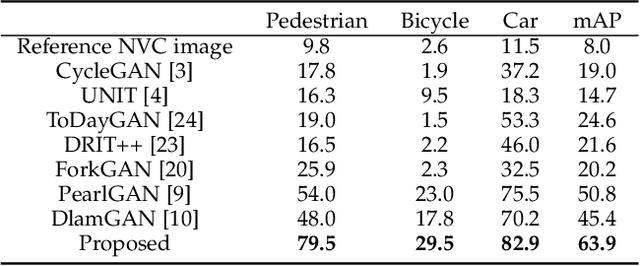

Memory-Guided Collaborative Attention for Nighttime Thermal Infrared Image Colorization

Aug 05, 2022

Abstract:Nighttime thermal infrared (NTIR) image colorization, also known as translation of NTIR images into daytime color images (NTIR2DC), is a promising research direction to facilitate nighttime scene perception for humans and intelligent systems under unfavorable conditions (e.g., complete darkness). However, previously developed methods have poor colorization performance for small sample classes. Moreover, reducing the high confidence noise in pseudo-labels and addressing the problem of image gradient disappearance during translation are still under-explored, and keeping edges from being distorted during translation is also challenging. To address the aforementioned issues, we propose a novel learning framework called Memory-guided cOllaboRative atteNtion Generative Adversarial Network (MornGAN), which is inspired by the analogical reasoning mechanisms of humans. Specifically, a memory-guided sample selection strategy and adaptive collaborative attention loss are devised to enhance the semantic preservation of small sample categories. In addition, we propose an online semantic distillation module to mine and refine the pseudo-labels of NTIR images. Further, conditional gradient repair loss is introduced for reducing edge distortion during translation. Extensive experiments on the NTIR2DC task show that the proposed MornGAN significantly outperforms other image-to-image translation methods in terms of semantic preservation and edge consistency, which helps improve the object detection accuracy remarkably.

Learning Crisp Boundaries Using Deep Refinement Network and Adaptive Weighting Loss

Mar 03, 2021

Abstract:Significant progress has been made in boundary detection with the help of convolutional neural networks. Recent boundary detection models not only focus on real object boundary detection but also "crisp" boundaries (precisely localized along the object's contour). There are two methods to evaluate crisp boundary performance. One uses more strict tolerance to measure the distance between the ground truth and the detected contour. The other focuses on evaluating the contour map without any postprocessing. In this study, we analyze both methods and conclude that both methods are two aspects of crisp contour evaluation. Accordingly, we propose a novel network named deep refinement network (DRNet) that stacks multiple refinement modules to achieve richer feature representation and a novel loss function, which combines cross-entropy and dice loss through effective adaptive fusion. Experimental results demonstrated that we achieve state-of-the-art performance for several available datasets.

* 11 pages, 7 figures

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge