Yating Ma

GADT: Enhancing Transferable Adversarial Attacks through Gradient-guided Adversarial Data Transformation

Oct 24, 2024

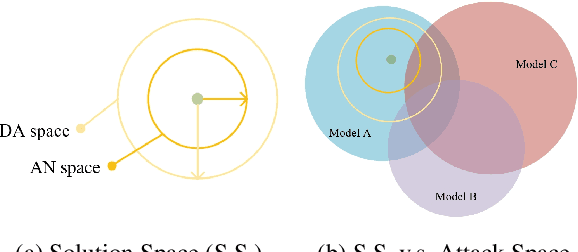

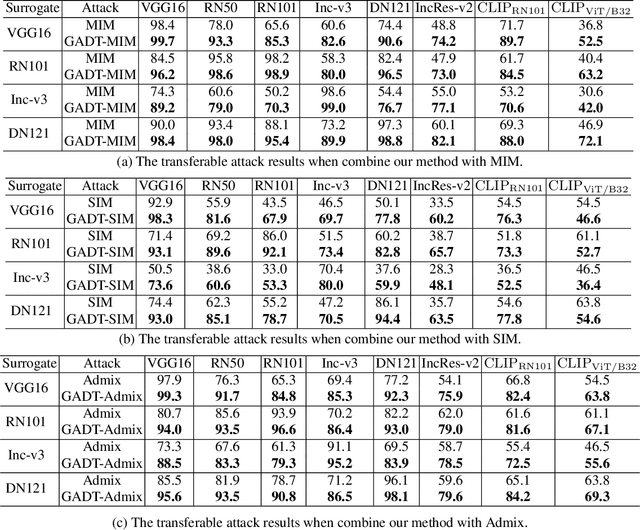

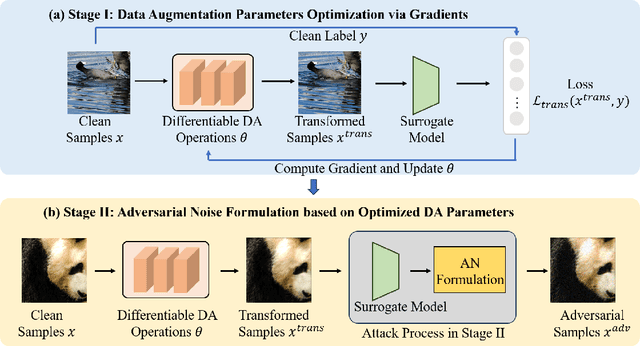

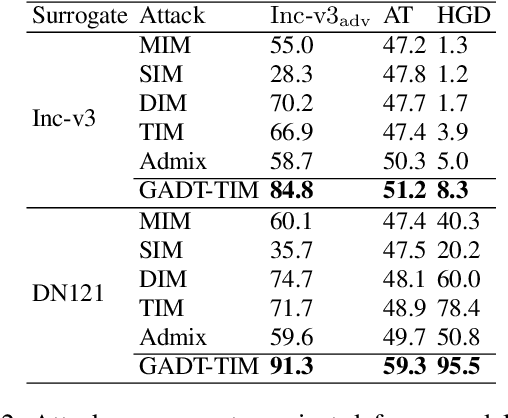

Abstract: Current Transferable Adversarial Examples (TAE) are primarily generated by adding Adversarial Noise (AN). Recent studies emphasize the importance of optimizing Data Augmentation (DA) parameters along with AN, which poses a greater threat to real-world AI applications. However, existing DA-based strategies often struggle to find optimal solutions due to the challenging DA search procedure without proper guidance. In this work, we propose a novel DA-based attack algorithm, GADT. GADT identifies suitable DA parameters through iterative antagonism and uses posterior estimates to update AN based on these parameters. We uniquely employ a differentiable DA operation library to identify adversarial DA parameters and introduce a new loss function as a metric during DA optimization. This loss term enhances adversarial effects while preserving the original image content, maintaining attack crypticity. Extensive experiments on public datasets with various networks demonstrate that GADT can be integrated with existing transferable attack methods, updating their DA parameters effectively while retaining their AN formulation strategies. Furthermore, GADT can be utilized in other black-box attack scenarios, e.g., query-based attacks, offering a new avenue to enhance attacks on real-world AI applications in both research and industrial contexts.
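
The alternation the abstract describes (first adjust the DA parameters, then update the AN given those parameters) can be illustrated with a toy sketch. This is not the GADT algorithm: the logistic "model", the single brightness-gain augmentation, the quadratic content penalty weighted by `lam`, and all names and values below are assumptions chosen only to show the alternating structure with hand-derived gradients.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def forward(w, b, x, delta, gain):
    """Toy model: logistic classifier applied to gain * (x + delta)."""
    score = sum(wi * (xi + di) for wi, xi, di in zip(w, x, delta))
    return sigmoid(gain * score + b), score

def loss(w, b, x, y, delta, gain):
    """Binary cross-entropy of the toy model on the transformed input."""
    p, _ = forward(w, b, x, delta, gain)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def alternating_attack(w, b, x, y, eps=0.1, alpha=0.05, lr=0.1, lam=1.0, steps=10):
    delta = [0.0] * len(x)  # adversarial noise (AN)
    gain = 1.0              # single DA parameter (assumed: brightness gain)
    for _ in range(steps):
        # (1) DA step: gradient ascent on loss - lam * (gain - 1)^2.
        # The penalty is a hypothetical stand-in for a content-preserving term.
        p, score = forward(w, b, x, delta, gain)
        gain += lr * ((p - y) * score - 2.0 * lam * (gain - 1.0))
        # (2) AN step: sign-gradient ascent under the updated gain,
        # projected back into the L-infinity ball of radius eps.
        p, _ = forward(w, b, x, delta, gain)
        grad_delta = [(p - y) * gain * wi for wi in w]
        delta = [min(max(di + alpha * sign(gi), -eps), eps)
                 for di, gi in zip(delta, grad_delta)]
    return delta, gain

# Hypothetical example values.
W, B, X, Y = [1.0, -2.0, 0.5], 0.1, [0.5, 0.2, 0.8], 1
delta, gain = alternating_attack(W, B, X, Y)
clean_loss = loss(W, B, X, Y, [0.0] * len(X), 1.0)
adv_loss = loss(W, B, X, Y, delta, gain)
```

In a realistic setting the gradients would come from automatic differentiation through a differentiable augmentation library and a surrogate network rather than from closed-form expressions.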

An Effective Fusion Method to Enhance the Robustness of CNN

May 31, 2022

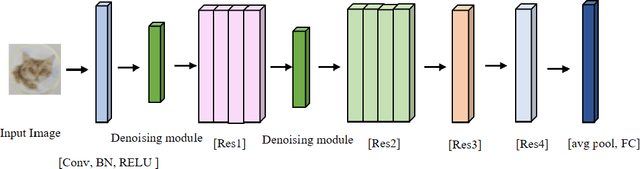

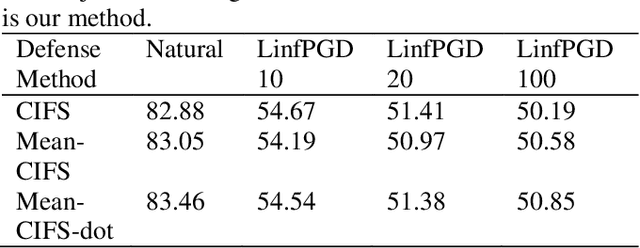

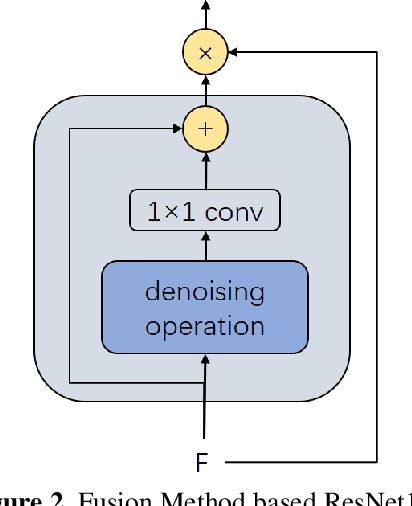

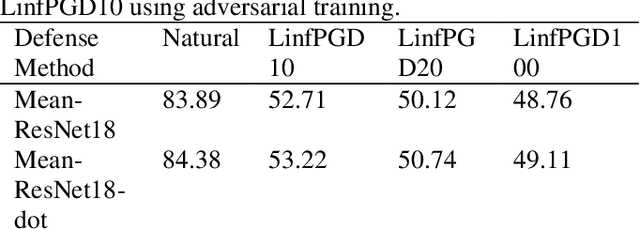

Abstract: With the rapid development of technology, applications of convolutional neural networks (CNNs) have made daily life more convenient. However, in the image classification field, it has been found that a CNN misclassifies images when certain perturbations are added to them. Various defense methods have therefore been proposed. Previous approaches only considered which modules to incorporate into the network to improve robustness, but did not focus on how the modules were incorporated. In this paper, we design a new fusion method to enhance the robustness of CNNs. We use a dot product-based approach to add the denoising module to ResNet18, and the attention mechanism to further improve the robustness of the model. Experimental results on CIFAR10 show that our method is effective and outperforms state-of-the-art methods under FGSM and PGD attacks.
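
For readers unfamiliar with the two attacks named in the abstract, here is a minimal sketch of FGSM (one signed-gradient step of size `eps`) and PGD (repeated smaller steps, each projected back into the `eps` ball). The logistic "model" and all values are assumptions for illustration; the paper evaluates a ResNet18 on CIFAR10, which this toy does not reproduce. The sketch also omits PGD's usual random start and clipping to the valid pixel range.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def loss(w, b, x, y):
    """Binary cross-entropy of a toy logistic classifier."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def grad_x(w, b, x, y):
    """Gradient of the loss with respect to the input x."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [(p - y) * wi for wi in w]

def fgsm(w, b, x, y, eps):
    # Single step: x_adv = x + eps * sign(grad_x loss)
    g = grad_x(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, g)]

def pgd(w, b, x, y, eps, alpha, steps):
    x_adv = list(x)
    for _ in range(steps):
        g = grad_x(w, b, x_adv, y)
        x_adv = [xa + alpha * sign(gi) for xa, gi in zip(x_adv, g)]
        # Project back into the L-infinity ball of radius eps around x.
        x_adv = [min(max(xa, x0 - eps), x0 + eps) for xa, x0 in zip(x_adv, x)]
    return x_adv

# Hypothetical example values.
W, B, X, Y = [1.0, -2.0, 0.5], 0.1, [0.5, 0.2, 0.8], 1
EPS = 0.1
x_fgsm = fgsm(W, B, X, Y, EPS)
x_pgd = pgd(W, B, X, Y, EPS, alpha=0.025, steps=10)
```

On a linear model like this one both attacks end at the same corner of the `eps` ball; they differ in practice on non-linear networks, where PGD's iterations follow the changing gradient.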
