Yasunari Hikima

Multi-point Feedback of Bandit Convex Optimization with Hard Constraints

Oct 17, 2023

Abstract: This paper studies bandit convex optimization with constraints, where the learner aims to generate a sequence of decisions under partial information about the loss functions such that both the cumulative loss and the cumulative constraint violation are reduced. We adopt the cumulative *hard* constraint violation as the metric of constraint violation, defined as $\sum_{t=1}^{T} \max\{g_t(\boldsymbol{x}_t), 0\}$. Owing to the maximum operator, a strictly feasible solution cannot cancel out the effects of violated constraints, unlike under the conventional metric known as the *long-term* constraint violation. We present a penalty-based proximal gradient descent method that attains sub-linear growth of both regret and cumulative hard constraint violation, in which the gradient is estimated with a two-point function evaluation. Precisely, our algorithm attains $O(d^2T^{\max\{c,1-c\}})$ regret bounds and $O(d^2T^{1-\frac{c}{2}})$ cumulative hard constraint violation bounds for convex loss functions and time-varying constraints, where $d$ is the dimensionality of the feasible region and $c\in[\frac{1}{2}, 1)$ is a user-determined parameter. We also extend the result to the case where the loss functions are strongly convex and show that both the regret and constraint violation bounds can be further reduced.
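The difference between the two violation metrics, and the idea of a two-point gradient estimate, can be illustrated with a minimal sketch. Everything below is an assumption made for illustration and is not taken from the paper: the function names are hypothetical, the long-term metric is written in one common form, $\max\{\sum_{t} g_t(\boldsymbol{x}_t), 0\}$, and the estimator is the standard sphere-sampling two-point estimator, which may differ from the one the authors use.

```python
import numpy as np


def hard_violation(g_values):
    """Cumulative hard violation: sum_t max{g_t(x_t), 0}."""
    g = np.asarray(g_values, dtype=float)
    return float(np.sum(np.maximum(g, 0.0)))


def long_term_violation(g_values):
    """One common form of long-term violation: max{sum_t g_t(x_t), 0} (assumed form)."""
    g = np.asarray(g_values, dtype=float)
    return float(max(np.sum(g), 0.0))


def two_point_gradient(f, x, delta, rng):
    """Standard two-point estimator (an assumption, not necessarily the paper's):
    (d / (2*delta)) * (f(x + delta*u) - f(x - delta*u)) * u, with u uniform on the unit sphere.
    """
    d = x.shape[0]
    u = rng.standard_normal(d)
    u /= np.linalg.norm(u)  # normalizing a Gaussian vector gives a uniform direction
    return (d / (2.0 * delta)) * (f(x + delta * u) - f(x - delta * u)) * u


# Hypothetical constraint values g_t(x_t): feasible rounds are negative.
g_values = [0.5, -0.5, 0.25, -0.25]
print(hard_violation(g_values))       # violations are not cancelled by feasible rounds
print(long_term_violation(g_values))  # feasible rounds offset the violations
```

In this toy sequence the strictly feasible rounds fully offset the violations under the long-term metric, while the hard metric still counts every violated round, which is the distinction the abstract draws.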

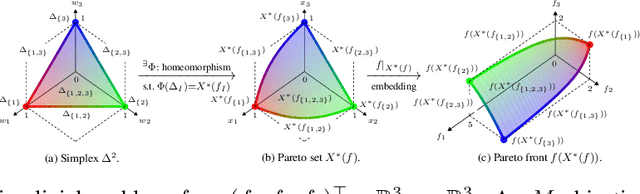

Bézier Flow: a Surface-wise Gradient Descent Method for Multi-objective Optimization

May 23, 2022

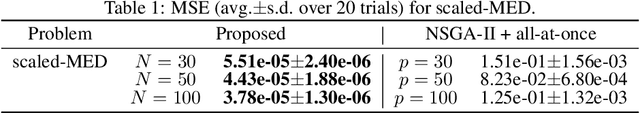

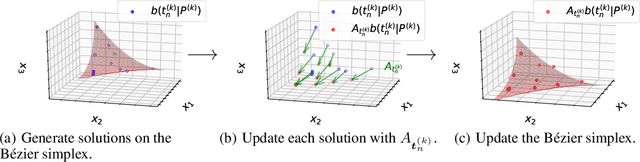

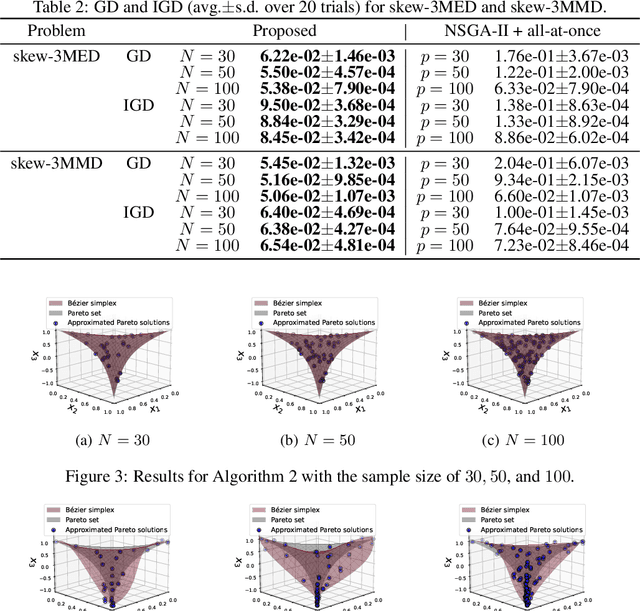

Abstract: In this paper, we propose a strategy to construct a multi-objective optimization algorithm from a single-objective optimization algorithm by using the Bézier simplex model. We also extend the stability of optimization algorithms in the sense of Probably Approximately Correct (PAC) learning and define PAC stability. We prove that PAC stability leads to an upper bound on the generalization error that holds with high probability. Furthermore, we show that multi-objective optimization algorithms derived from a gradient descent-based single-objective optimization algorithm are PAC stable. We conducted numerical experiments and demonstrated that our method achieved lower generalization errors than the existing multi-objective optimization algorithm.
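For readers unfamiliar with the Bézier simplex model mentioned above, the following is a minimal sketch of how such a model is evaluated, assuming the usual definition $b(\boldsymbol{t}) = \sum_{|\boldsymbol{d}|=D} \binom{D}{\boldsymbol{d}} \boldsymbol{t}^{\boldsymbol{d}} \boldsymbol{p}_{\boldsymbol{d}}$ with $\boldsymbol{t}$ on the standard simplex. The function name, the dictionary layout, and the control points are hypothetical, and the sketch does not reproduce the paper's training procedure.

```python
from math import factorial

import numpy as np


def bezier_simplex(control_points, t, degree):
    """Evaluate a Bezier simplex at a point t on the standard simplex.

    control_points maps each multi-index d (a tuple summing to `degree`)
    to a control point p_d; this layout is an illustrative assumption.
    """
    t = np.asarray(t, dtype=float)
    value = 0.0
    for d, p in control_points.items():
        # Multinomial coefficient D! / (d_1! * ... * d_M!), computed exactly.
        coef = factorial(degree)
        for d_i in d:
            coef //= factorial(d_i)
        weight = coef * np.prod(t ** np.array(d))
        value = value + weight * np.asarray(p, dtype=float)
    return value


# Hypothetical degree-2 model over two objectives (a quadratic Bezier curve).
control_points = {
    (2, 0): [0.0, 0.0],
    (1, 1): [1.0, 2.0],
    (0, 2): [2.0, 0.0],
}
point = bezier_simplex(control_points, [0.5, 0.5], degree=2)
print(point)
```

With two simplex vertices the model reduces to an ordinary Bézier curve; more objectives add vertices to the simplex and more multi-indices to the sum.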
