Yash Upadhyay

DeDrift: Robust Similarity Search under Content Drift

Aug 05, 2023

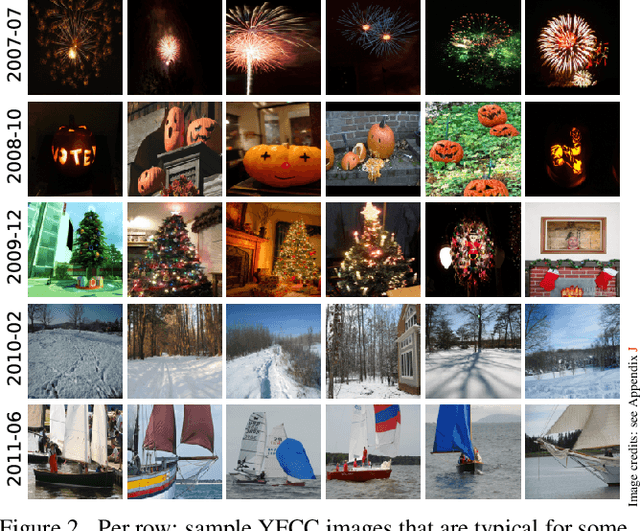

Abstract: The statistical distribution of content uploaded and searched on media-sharing sites changes over time due to seasonal, sociological, and technical factors. We investigate the impact of this "content drift" on large-scale similarity search tools based on nearest neighbor search in embedding space. Unless a costly index reconstruction is performed frequently, content drift degrades both search accuracy and efficiency. The degradation is especially severe because, in general, both the query and the database distributions change. We introduce and analyze real-world image and video datasets for which temporal information is available over a long time period. Based on these findings, we devise DeDrift, a method that updates embedding quantizers to continuously adapt large-scale indexing structures on the fly. DeDrift almost eliminates the accuracy degradation caused by query and database content drift while being up to 100x faster than a full index reconstruction.
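The abstract does not detail how the quantizers are updated. As a rough illustration of the general idea only (this is not the DeDrift algorithm), the sketch below warm-starts k-means from an existing coarse quantizer's centroids and refines them on recent data instead of retraining from scratch. The function names (`assign`, `refine_centroids`, `quantization_error`), the sizes, and the synthetic drift (a constant shift of the data) are hypothetical choices for the example. A real inverted-file index would also need to move vectors whose nearest centroid changed, which is omitted here.

```python
import numpy as np

def assign(x, centroids):
    """Index of the nearest centroid (squared L2) for each row of x."""
    d = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    return d.argmin(axis=1)

def refine_centroids(centroids, x, n_iter=5):
    """Warm-started Lloyd iterations: start from existing centroids, refit on x."""
    c = centroids.copy()
    for _ in range(n_iter):
        a = assign(x, c)
        for j in range(len(c)):
            members = x[a == j]
            if len(members) > 0:  # empty clusters keep their previous position
                c[j] = members.mean(axis=0)
    return c

def quantization_error(x, centroids):
    """Mean squared distance from each vector to its nearest centroid."""
    a = assign(x, centroids)
    return float(((x - centroids[a]) ** 2).sum(axis=1).mean())

rng = np.random.default_rng(0)
old_data = rng.normal(0.0, 1.0, size=(2000, 8))
new_data = rng.normal(0.0, 1.0, size=(2000, 8)) + 3.0  # hypothetical drift: constant shift

# Initial quantizer trained on the old distribution.
init = old_data[rng.choice(len(old_data), size=16, replace=False)]
c_old = refine_centroids(init, old_data, n_iter=10)

# Adapt the existing quantizer to the drifted data (a few cheap iterations).
c_new = refine_centroids(c_old, new_data, n_iter=5)

err_before = quantization_error(new_data, c_old)
err_after = quantization_error(new_data, c_new)
print(f"error on drifted data: {err_before:.2f} -> {err_after:.2f}")
```

Because each Lloyd iteration cannot increase the quantization error, a few warm-started iterations are much cheaper than a full retrain and still reduce the error on the drifted data.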

Generative Adversarial Network Architectures For Image Synthesis Using Capsule Networks

Jul 25, 2018

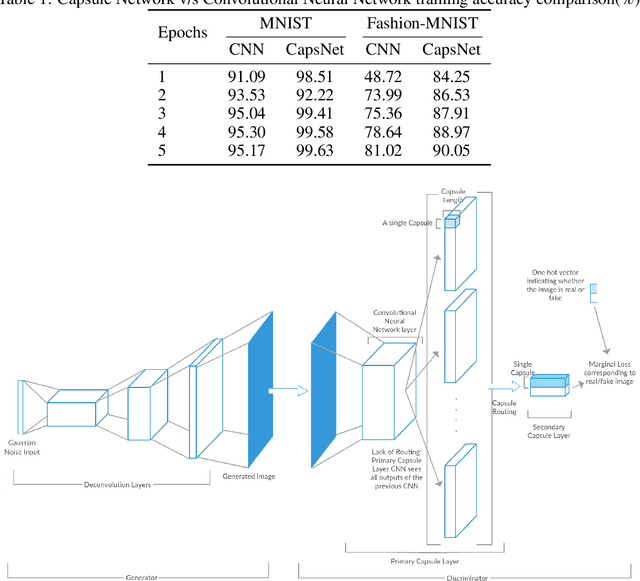

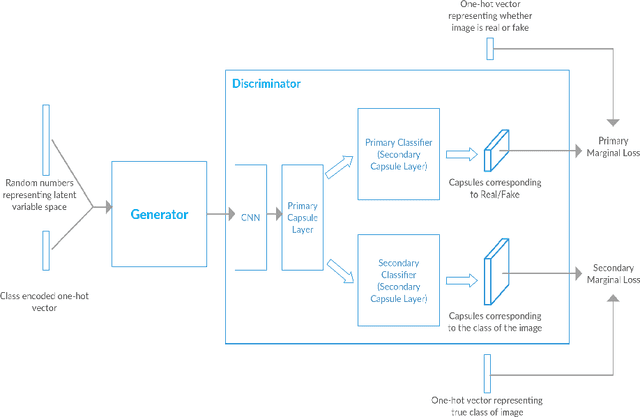

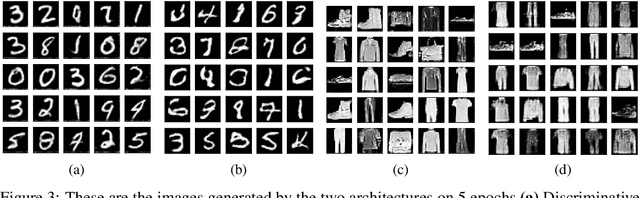

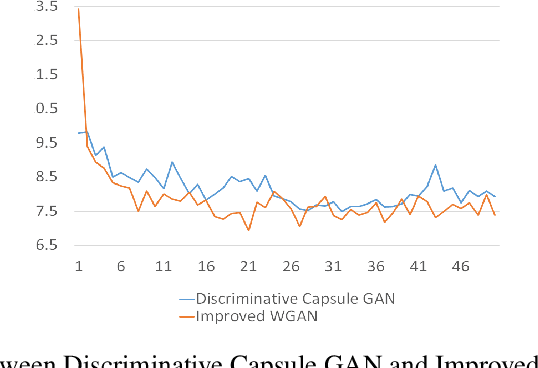

Abstract: In this paper, we propose Generative Adversarial Network (GAN) architectures that use Capsule Networks for conditional and random image synthesis. Capsule Networks encode meta-properties and spatial relationships between the features of an image, which makes them more powerful critics than the Convolutional Neural Networks (CNNs) used in current image-synthesis architectures. Our architectures combine Capsule Networks with losses analogous to the Wasserstein loss, and this combination proves to be a more effective critic than CNNs. As a result, our proposed GAN architectures learn the data manifold much faster and require significantly fewer training samples than the current workhorses of image synthesis, DCGANs and their variants, which use CNNs as discriminators. Our architectures also generalize much better over the datasets' manifolds thanks to dynamic routing between capsules, which is a more robust algorithm for feature globalization than the max-pooling used by CNNs. This helps synthesize more diverse, yet visually accurate, images. We demonstrate the performance of our architectures on MNIST, Fashion-MNIST, and their variants, and compare them with images synthesized by Improved Wasserstein GANs that use CNNs.
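The abstract credits dynamic routing between capsules for the improved generalization. For readers unfamiliar with it, here is a minimal NumPy sketch of routing-by-agreement as described for Capsule Networks by Sabour et al. (2017). It is not code from this paper; the tensor shapes, the three routing iterations, and the function names are illustrative assumptions.

```python
import numpy as np

def squash(s, axis=-1, eps=1e-9):
    """Shrink vector length into [0, 1) while keeping its direction."""
    sq_norm = (s ** 2).sum(axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)

def dynamic_routing(u_hat, n_iter=3):
    """Routing-by-agreement.

    u_hat: (n_in, n_out, d_out) prediction vectors from lower-level capsules.
    Returns output capsules v (n_out, d_out) and coupling coefficients c (n_in, n_out).
    """
    n_in, n_out, _ = u_hat.shape
    b = np.zeros((n_in, n_out))  # routing logits, start uniform
    for _ in range(n_iter):
        # Softmax over output capsules gives each input's coupling coefficients.
        e = np.exp(b - b.max(axis=1, keepdims=True))
        c = e / e.sum(axis=1, keepdims=True)
        s = (c[:, :, None] * u_hat).sum(axis=0)  # weighted sum per output capsule
        v = squash(s)
        # Raise logits where a prediction agrees (dot product) with the output.
        b = b + (u_hat * v[None, :, :]).sum(axis=-1)
    return v, c

rng = np.random.default_rng(0)
u_hat = rng.normal(size=(32, 10, 16))  # 32 input capsules, 10 outputs of dimension 16
v, c = dynamic_routing(u_hat)
print(v.shape, np.linalg.norm(v, axis=-1).max())
```

Unlike max-pooling, which keeps only the strongest activation in a window, routing redistributes every input's contribution toward the output capsules its predictions agree with, and the squash nonlinearity keeps each output's length usable as a probability-like score.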
