Yaoxin Zhuo

Transformer-based Selective Super-Resolution for Efficient Image Refinement

Dec 10, 2023

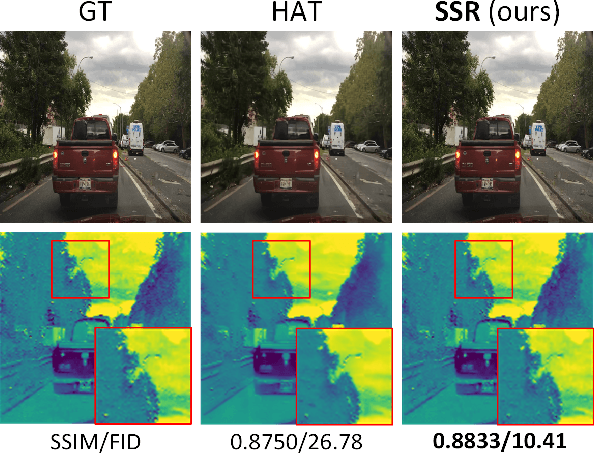

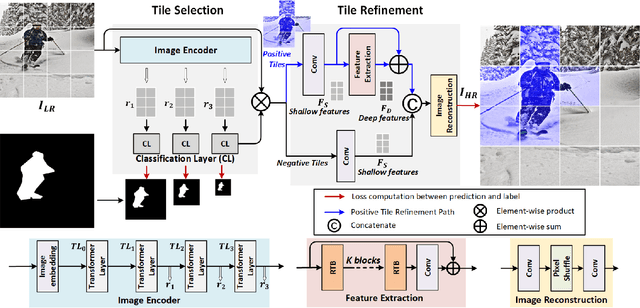

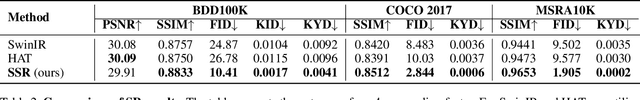

Abstract:Conventional super-resolution methods suffer from two drawbacks: substantial computational cost in upscaling an entire large image, and the introduction of extraneous or potentially detrimental information for downstream computer vision tasks during the refinement of the background. To solve these issues, we propose a novel transformer-based algorithm, Selective Super-Resolution (SSR), which partitions images into non-overlapping tiles, selects tiles of interest at various scales with a pyramid architecture, and exclusively reconstructs these selected tiles with deep features. Experimental results on three datasets demonstrate the efficiency and robust performance of our approach for super-resolution. Compared to the state-of-the-art methods, the FID score is reduced from 26.78 to 10.41 with 40% reduction in computation cost for the BDD100K dataset. The source code is available at https://github.com/destiny301/SSR.

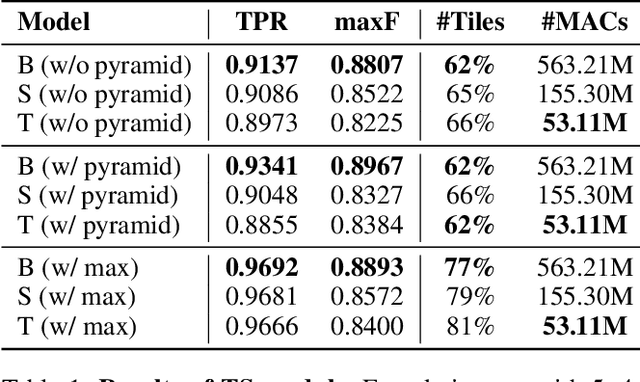

Patch-based Selection and Refinement for Early Object Detection

Nov 03, 2023

Abstract:Early object detection (OD) is a crucial task for the safety of many dynamic systems. Current OD algorithms have limited success for small objects at a long distance. To improve the accuracy and efficiency of such a task, we propose a novel set of algorithms that divide the image into patches, select patches with objects at various scales, elaborate the details of a small object, and detect it as early as possible. Our approach is built upon a transformer-based network and integrates the diffusion model to improve the detection accuracy. As demonstrated on BDD100K, our algorithms enhance the mAP for small objects from 1.03 to 8.93, and reduce the data volume in computation by more than 77\%. The source code is available at \href{https://github.com/destiny301/dpr}{https://github.com/destiny301/dpr}

FedNS: Improving Federated Learning for collaborative image classification on mobile clients

Jan 20, 2021

Abstract:Federated Learning (FL) is a paradigm that aims to support loosely connected clients in learning a global model collaboratively with the help of a centralized server. The most popular FL algorithm is Federated Averaging (FedAvg), which is based on taking weighted average of the client models, with the weights determined largely based on dataset sizes at the clients. In this paper, we propose a new approach, termed Federated Node Selection (FedNS), for the server's global model aggregation in the FL setting. FedNS filters and re-weights the clients' models at the node/kernel level, hence leading to a potentially better global model by fusing the best components of the clients. Using collaborative image classification as an example, we show with experiments from multiple datasets and networks that FedNS can consistently achieve improved performance over FedAvg.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge