Yanhong She

Logic could be learned from images

Aug 06, 2019

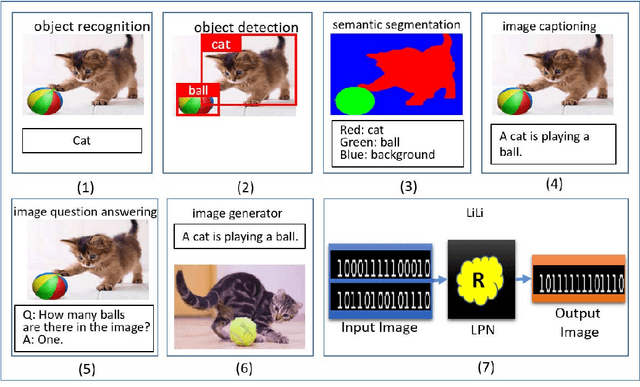

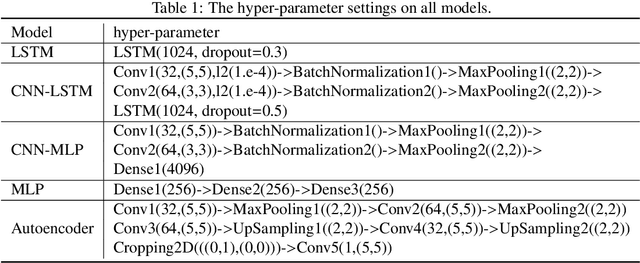

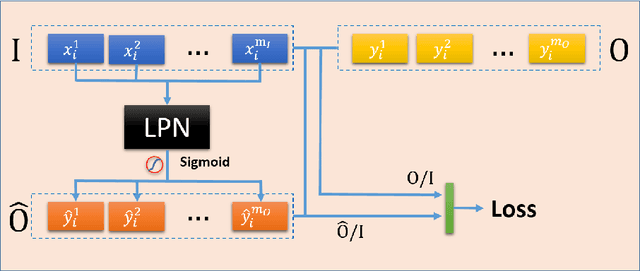

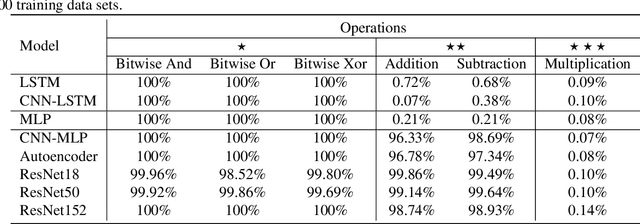

Abstract: Logical reasoning is a significant ability of human intelligence and an important task in artificial intelligence. Existing logical reasoning methods often require some reasoning patterns to be designed beforehand. This raises an interesting question: can logical reasoning patterns be learned directly from given data? The problem is termed data concept logic (DCL). In this study, a task of learning logic from images, called the LiLi task, is proposed for the first time. The task is to learn and reason about the relation between two input images and one output image, without presetting any reasoning patterns. As a preliminary exploration, we design six LiLi data sets (Bitwise And, Bitwise Or, Bitwise Xor, Addition, Subtraction and Multiplication), in which each image is embedded with an n-digit number. It is worth noting that a learning model knows beforehand neither the meaning of the n-digit number embedded in the images nor the relation between the input images and the output image. To tackle the task, we apply many typical neural network models and obtain fruitful results. However, these models perform poorly on the difficult logic tasks. To further address this task, a novel network framework called the divide and conquer model (DCM), which adds some prior information, is designed and achieves a high testing accuracy.
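To make the structure of the six data sets concrete, the following is a minimal Python sketch of how (input, input, output) number triples for each relation might be generated. It is an illustration only, not the authors' data pipeline: the helper names `make_triples` and `as_text`, the default of three digits, the use of Python's integer bitwise operators on decimal values, and the swap that keeps subtraction results non-negative are all assumptions. The abstract does not specify these details, and rendering the numbers into images is left out.

```python
import operator
import random

# The six relations named in the abstract, mapped to Python integer operators.
# Assumption: bitwise operations act on the integer values themselves.
OPERATIONS = {
    "Bitwise And": operator.and_,
    "Bitwise Or": operator.or_,
    "Bitwise Xor": operator.xor,
    "Addition": operator.add,
    "Subtraction": operator.sub,
    "Multiplication": operator.mul,
}


def make_triples(op_name, n_digits=3, count=5, seed=0):
    """Return (a, b, result) integer triples for one relation (hypothetical helper)."""
    rng = random.Random(seed)
    op = OPERATIONS[op_name]
    upper = 10 ** n_digits - 1  # largest n-digit number
    triples = []
    for _ in range(count):
        a = rng.randint(0, upper)
        b = rng.randint(0, upper)
        if op_name == "Subtraction" and a < b:
            a, b = b, a  # assumption: keep the result non-negative
        triples.append((a, b, op(a, b)))
    return triples


def as_text(value, width):
    """Zero-padded digit string that a renderer could draw into an image."""
    return str(value).zfill(width)


if __name__ == "__main__":
    for name in OPERATIONS:
        a, b, c = make_triples(name, count=1)[0]
        print(f"{name}: {as_text(a, 3)}, {as_text(b, 3)} -> {c}")
```

In a setup like the one the abstract describes, a model would receive only the rendered images of the two inputs and the output, never the integers or the operator name, so the relation has to be inferred from pixels alone.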
