Yanhan Tang

Efficiency, Fairness, and Stability in Non-Commercial Peer-to-Peer Ridesharing

Oct 04, 2021

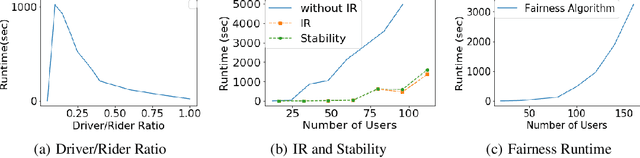

Abstract: Unlike commercial ridesharing, non-commercial peer-to-peer (P2P) ridesharing has been subject to limited research, although it can promote viable solutions in non-urban communities. This paper focuses on the core problem in P2P ridesharing: the matching of riders and drivers. We elevate users' preferences as a first-order concern and introduce novel notions of fairness and stability in P2P ridesharing. We propose algorithms for efficient matching while considering user-centric factors, including users' preferred departure time, fairness, and stability. Results suggest that fair and stable solutions can be obtained in reasonable computational times and can improve on baseline outcomes that are based exclusively on system-wide efficiency.

When is it permissible for artificial intelligence to lie? A trust-based approach

Mar 14, 2021

Abstract: Conversational Artificial Intelligence (AI) used in industry settings can be trained to closely mimic human behaviors, including lying and deception. However, lying is often a necessary part of negotiation. To address this, we develop a normative framework for when it is ethical or unethical for a conversational AI to lie to humans, based on whether there is what we call "invitation of trust" in a particular scenario. Importantly, cultural norms play a significant role in determining whether there is invitation of trust across negotiation settings, and thus an AI trained in one culture may not be generalizable to others. Moreover, individuals may have different expectations regarding the invitation of trust and propensity to lie for human vs. AI negotiators, and these expectations may vary across cultures as well. Finally, we outline how a conversational chatbot can be trained to negotiate ethically by applying autoregressive models to large dialog and negotiation datasets.
