Yale Tung Chen

covEcho Resource constrained lung ultrasound image analysis tool for faster triaging and active learning

Jun 21, 2022

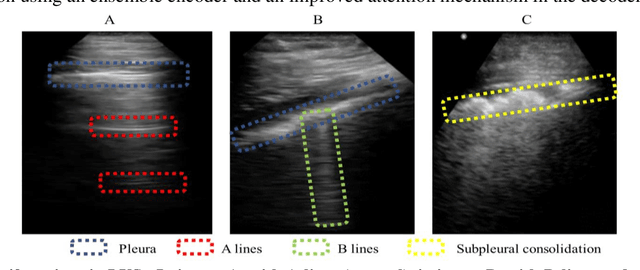

Abstract: Lung ultrasound (LUS) is possibly the only medical imaging modality that can be used for continuous and periodic monitoring of the lung. This is extremely useful for tracking lung manifestations, either during the onset of lung infection or when tracking the effect of vaccination on the lung, as in pandemics such as COVID-19. There have been many attempts at automating the classification of the severity of lung infection into various classes or the automatic segmentation of various LUS landmarks and manifestations. However, all these approaches are based on training static machine learning models, which require a large clinically annotated dataset, are computationally heavy, and are most of the time not real-time. In this work, a real-time, lightweight, active learning-based approach is presented for faster triaging of COVID-19 subjects in resource-constrained settings. The tool, based on the You Only Look Once (YOLO) network, can assess image quality based on the identification of various LUS landmarks, artefacts and manifestations; predict the severity of lung infection; support active learning based on feedback from clinicians or on image quality; and summarize the significant frames that have high severity of infection and high image quality for further analysis. The results show that the proposed tool has a mean average precision (mAP) of 66% at an Intersection over Union (IoU) threshold of 0.5 for the prediction of LUS landmarks. The 14MB lightweight YOLOv5s network achieves 123 FPS while running on a Quadro P4000 GPU. The tool is available for usage and analysis upon request from the authors.
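To make the "IoU threshold of 0.5" in the abstract above concrete, here is a minimal sketch of how Intersection over Union is usually computed for two axis-aligned boxes. The box format `(x_min, y_min, x_max, y_max)` and the function names `iou` and `is_true_positive` are assumptions made for illustration; they are not taken from the covEcho tool.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max).

    The box format is an assumption for this sketch, not the tool's own format.
    """
    # Corners of the overlapping rectangle (if any)
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Clamp at zero so disjoint boxes give zero intersection
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, truth_box, threshold=0.5):
    """A predicted landmark box counts as a match when its IoU reaches the threshold."""
    return iou(pred_box, truth_box) >= threshold
```

Under this convention, mAP at IoU 0.5 averages the per-class average precision, where a detection is only counted as correct if `is_true_positive` holds for a ground-truth box of the same class.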

Physics Driven Domain Specific Transporter Framework with Attention Mechanism for Ultrasound Imaging

Sep 13, 2021

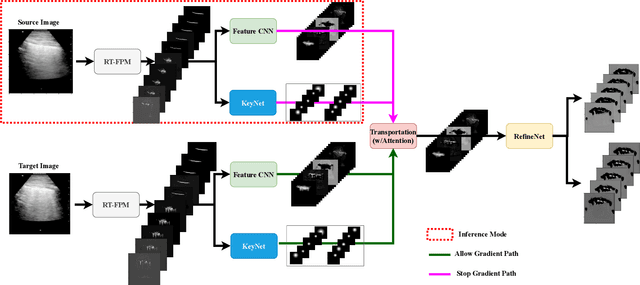

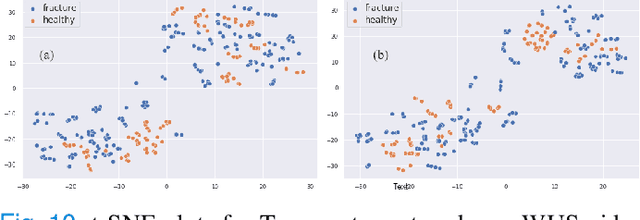

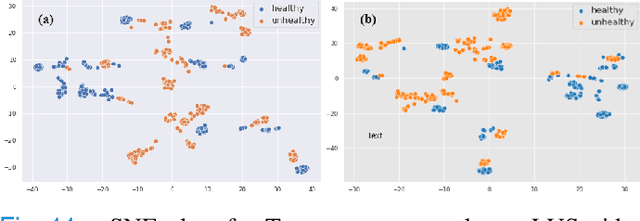

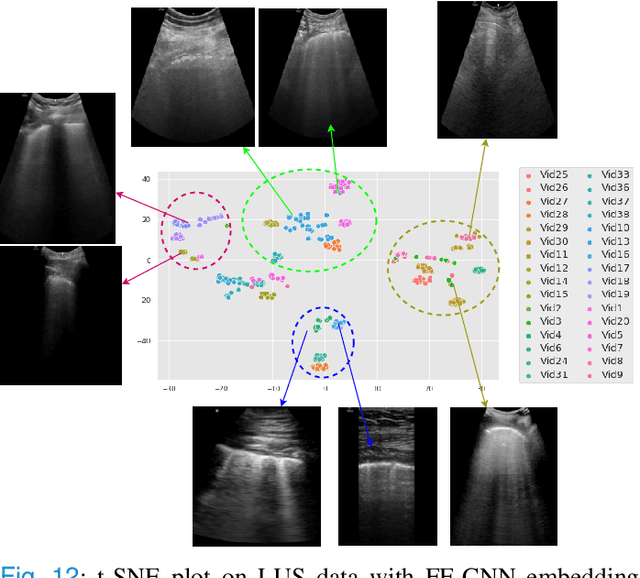

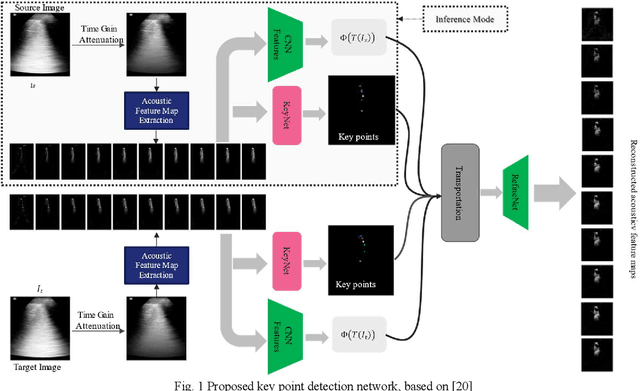

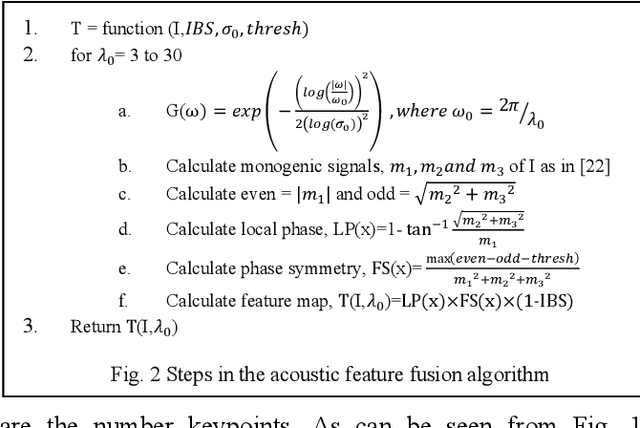

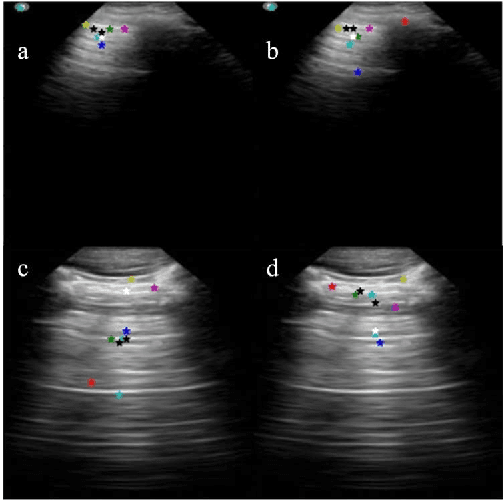

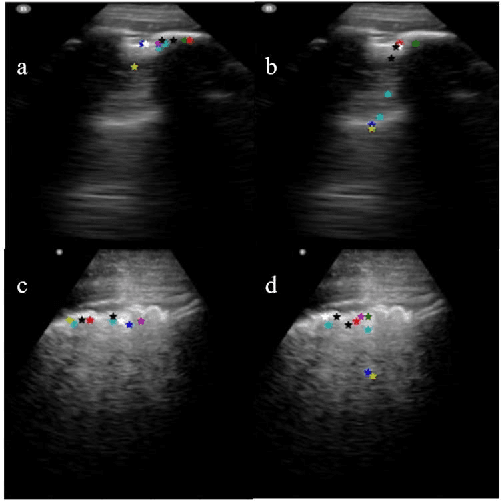

Abstract: Most applications of deep learning techniques in medical imaging are supervised and require a large amount of labeled data, which is expensive and requires many hours of careful annotation by experts. In this paper, we propose an unsupervised, physics-driven, domain-specific transporter framework with an attention mechanism to identify relevant key points with applications in ultrasound imaging. The proposed framework identifies key points that provide a concise geometric representation highlighting regions with high structural variation in ultrasound videos. We incorporate physics-driven, domain-specific information as a feature probability map and use the Radon transform to highlight features in specific orientations. The proposed framework has been trained on 130 lung ultrasound (LUS) videos and 113 wrist ultrasound (WUS) videos and validated on 100 LUS videos and 58 WUS videos acquired from multiple centers across the globe. Images from both datasets were independently assessed by experts to identify clinically relevant features such as A-lines, B-lines and pleura from LUS videos and radial metaphysis, radial epiphysis and carpal bones from WUS videos. The key points detected from both datasets showed high sensitivity (LUS = 99%, WUS = 74%) in detecting the image landmarks identified by experts. Also, when employed for classification of the given lung image into normal and abnormal classes, the proposed approach, even with no prior training, achieved an average accuracy of 97% and an average F1-score of 95% on the task of co-classification with 3-fold cross-validation. With the purely unsupervised nature of the proposed approach, we expect the key point detection approach to increase the applicability of ultrasound in various examinations performed in emergency and point-of-care settings.
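As a rough illustration of why the Radon transform mentioned above can highlight features in specific orientations: the transform integrates image intensity along lines at a chosen angle, and for the two axis-aligned angles this reduces to row sums and column sums. The sketch below covers only those two angles on a toy image stored as a list of lists. It is a simplification written for this page, not the framework's implementation, and the names `axis_projections` and `dominant_orientation` are hypothetical.

```python
def axis_projections(image):
    """Axis-aligned Radon-style projections of a 2-D image (list of rows).

    Summing along each row integrates along horizontal lines;
    summing along each column integrates along vertical lines.
    """
    row_sums = [sum(row) for row in image]
    col_sums = [sum(col) for col in zip(*image)]
    return row_sums, col_sums


def dominant_orientation(image):
    """Report which axis-aligned projection has the sharper peak.

    A bright horizontal structure concentrates its energy in one row sum,
    a bright vertical structure in one column sum.
    """
    row_sums, col_sums = axis_projections(image)
    if max(row_sums) > max(col_sums):
        return "horizontal"
    if max(col_sums) > max(row_sums):
        return "vertical"
    return "none"


# Toy 5x5 image with a single bright horizontal line in row 2
toy = [[0] * 5 for _ in range(5)]
toy[2] = [1] * 5
print(dominant_orientation(toy))
```

In lung ultrasound the pleura and A-lines appear as roughly horizontal structures and B-lines as roughly vertical ones, which is why orientation-selective projections are a plausible way to emphasize them. A full Radon transform would repeat the projection over many angles.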

Unsupervised multi-latent space reinforcement learning framework for video summarization in ultrasound imaging

Sep 03, 2021

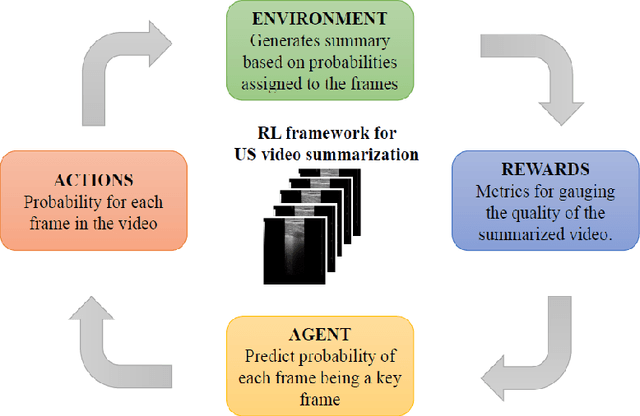

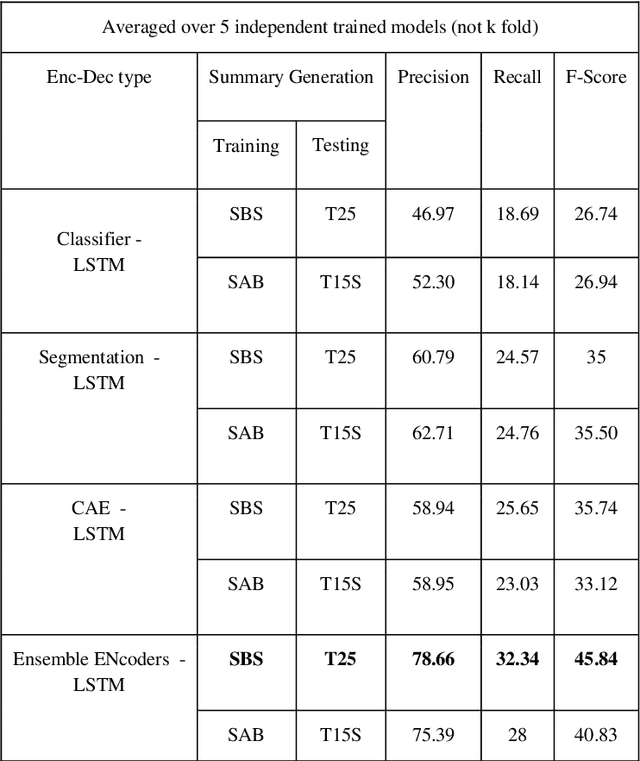

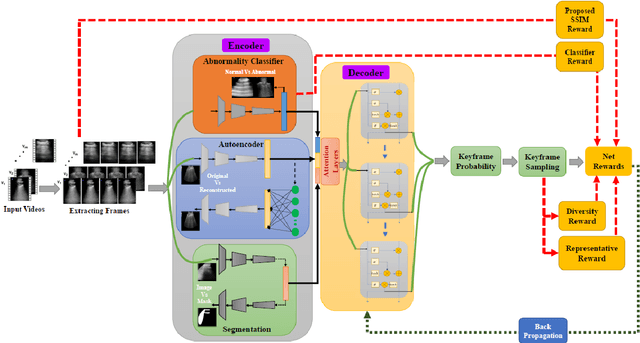

Abstract: The COVID-19 pandemic has highlighted the need for a tool to speed up triage in ultrasound scans and provide clinicians with fast access to relevant information. The proposed video-summarization technique is a step in this direction that gives clinicians access to relevant key-frames from a given ultrasound scan (such as lung ultrasound) while reducing resource, storage and bandwidth requirements. We propose a new unsupervised reinforcement learning (RL) framework with novel rewards that facilitates unsupervised learning, avoiding tedious and impractical manual labelling, for summarizing ultrasound videos to enhance their utility as a triage tool in the emergency department (ED) and for use in telemedicine. Using an attention ensemble of encoders, the high-dimensional image is projected into a low-dimensional latent space in terms of: a) reduced distance with a normal or abnormal class (classifier encoder), b) following a topology of landmarks (segmentation encoder), and c) the distance- or topology-agnostic latent representation (convolutional autoencoders). The decoder is implemented using a bi-directional long short-term memory (Bi-LSTM) network, which utilizes the latent space representation from the encoder. Our new paradigm for video summarization is capable of delivering classification labels and segmentation of key landmarks for each of the summarized keyframes. Validation is performed on a lung ultrasound (LUS) dataset that represents potential use cases in telemedicine and ED triage, acquired from different medical centers across geographies (India, Spain and Canada).

Learning the Imaging Landmarks: Unsupervised Key point Detection in Lung Ultrasound Videos

Jun 13, 2021

Abstract: Lung ultrasound (LUS) is an increasingly popular diagnostic imaging modality for continuous and periodic monitoring of lung infection, given its advantages of non-invasiveness, non-ionizing nature, portability and easy disinfection. The major landmarks assessed by clinicians for triaging using LUS are the pleura, A-lines and B-lines. There have been many efforts towards the automatic detection of these landmarks. However, restricting detection to a few pre-defined landmarks may not reveal the actual imaging biomarkers, particularly in the case of new pathologies like COVID-19. Rather, the identification of key landmarks should be driven by data, given the availability of a plethora of neural network algorithms. This work is a first-of-its-kind attempt towards unsupervised detection of the key LUS landmarks in LUS videos of COVID-19 subjects during various stages of infection. We adapted the relatively new approach of transporter neural networks to automatically mark and track the pleura, A-lines and B-lines based on their periodic motion and relatively stable appearance in the videos. Initial results on unsupervised pleura detection show an accuracy of 91.8% on 1081 LUS video frames.

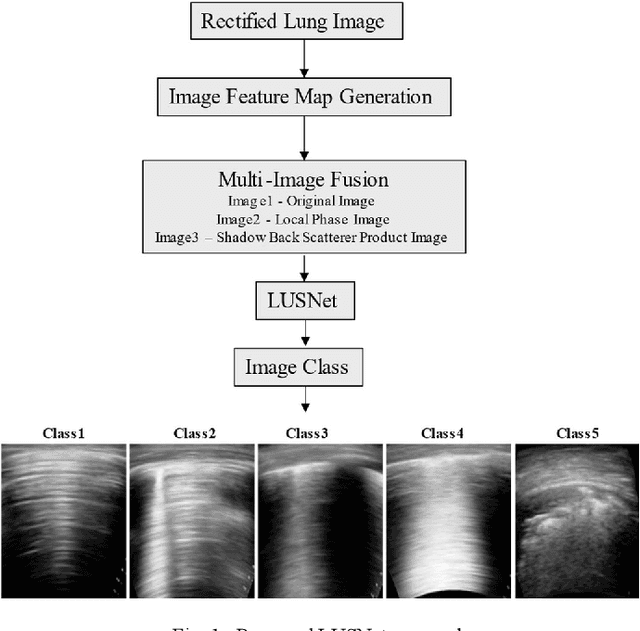

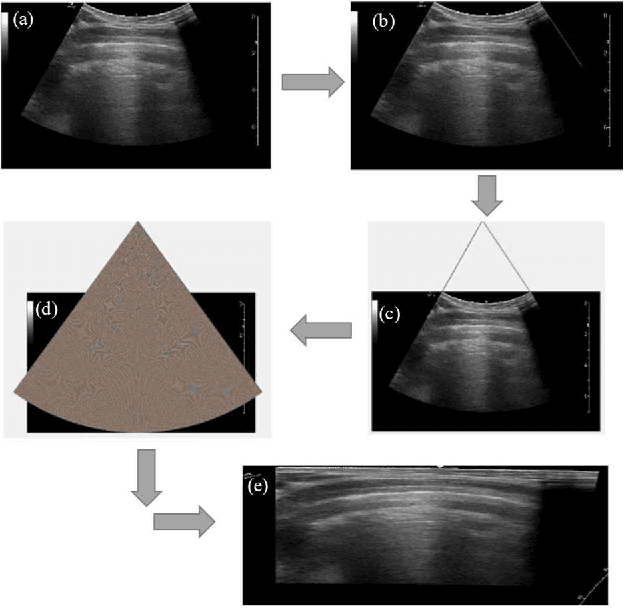

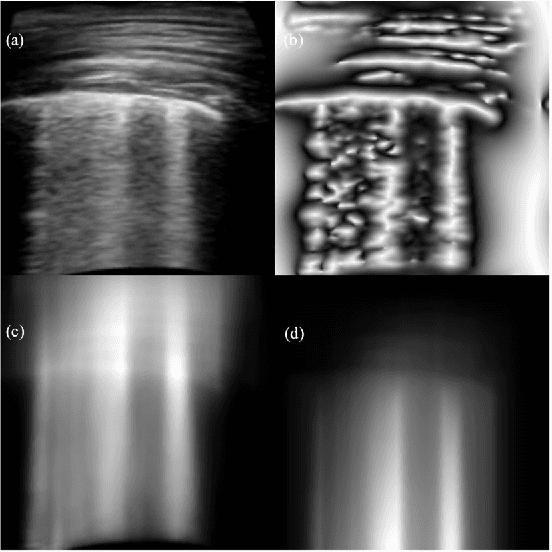

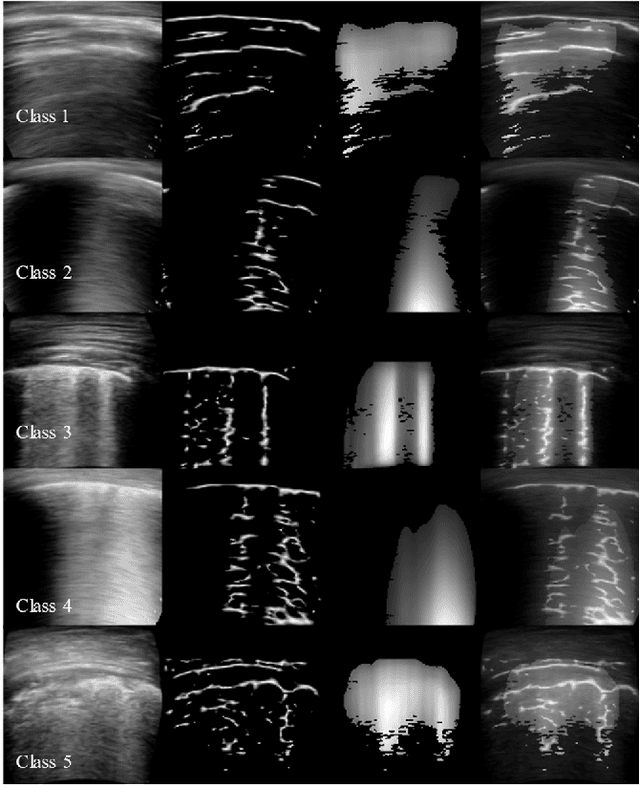

An Approach Towards Physics Informed Lung Ultrasound Image Scoring Neural Network for Diagnostic Assistance in COVID-19

Jun 13, 2021

Abstract: Ultrasound is fast becoming an indispensable diagnostic tool for regular and continuous monitoring of the lung with the recent outbreak of COVID-19. In this work, a novel approach is presented to extract acoustic propagation-based features to automatically highlight the region below the pleura, which is an important landmark in lung ultrasound (LUS). Subsequently, a multichannel input formed from the acoustic physics-based feature maps is used to train a neural network, referred to as LUSNet, to classify the LUS images into five classes of varying severity of lung infection to track the progression of COVID-19. In order to ensure that the proposed approach is agnostic to the type of acquisition, LUSNet, which consists of a U-net architecture, is trained in an unsupervised manner with the acoustic feature maps to ensure that the encoder-decoder architecture learns features in the pleural region of interest. A novel combination of the U-net output and the U-net encoder output is employed for the classification of severity of infection in the lung. A detailed analysis of the proposed approach on LUS images over the infection-to-full-recovery period of ten confirmed COVID-19 subjects shows an average five-fold cross-validation accuracy, sensitivity, and specificity of 97%, 93%, and 98%, respectively, over 5000 frames of COVID-19 videos. The analysis also shows that, when the input dataset is limited and diverse, as in the case of the COVID-19 pandemic, combining acoustic propagation-based features with the gray scale images, as proposed in this work, improves the performance of the neural network significantly and also aids the labelling and triaging process.
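For readers less familiar with the three figures reported above, the following sketch shows the standard definitions of accuracy, sensitivity and specificity from a confusion matrix. It assumes a binary simplification (label 1 for infected, 0 for normal), whereas the paper classifies into five severity classes. The function name `binary_metrics` and the example labels are made up for illustration and are not the paper's data.

```python
def binary_metrics(y_true, y_pred):
    """Return (accuracy, sensitivity, specificity) for binary labels 0/1.

    Binary labels are an assumption of this sketch; a multi-class setting
    would typically compute these per class and average them.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # infected, flagged
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # normal, cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # normal, flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # infected, missed

    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0  # true positive rate
    specificity = tn / (tn + fp) if (tn + fp) else 0.0  # true negative rate
    return accuracy, sensitivity, specificity


# Hypothetical labels for eight frames
acc, sens, spec = binary_metrics([1, 1, 1, 0, 0, 0, 0, 0],
                                 [1, 1, 0, 0, 0, 0, 0, 1])
print(acc, sens, spec)
```

In a five-fold cross-validation such as the one described, these values would be computed on each held-out fold and then averaged.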
