Yael Rapson

Learned super resolution ultrasound for improved breast lesion characterization

Jul 12, 2021

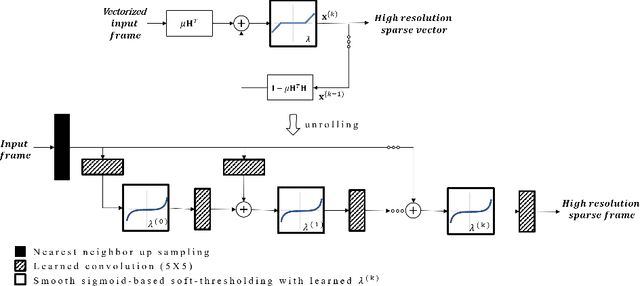

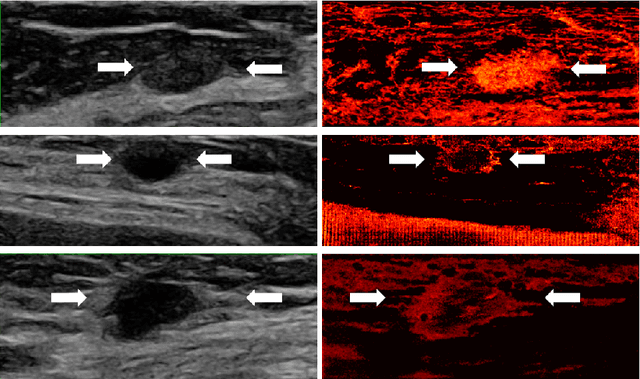

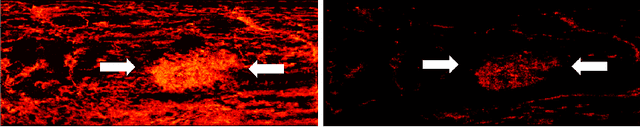

Abstract: Breast cancer is the most common malignancy in women. Mammographic findings such as microcalcifications and masses, as well as morphologic features of masses in sonographic scans, are the main diagnostic targets for tumor detection. However, improved specificity of these imaging modalities is required. A leading alternative target is neoangiogenesis. When pathological, it contributes to the development of numerous types of tumors and the formation of metastases. Hence, demonstrating neoangiogenesis by visualization of the microvasculature may be of great importance. Super-resolution ultrasound localization microscopy enables imaging of the microvasculature at the capillary level. Yet challenges such as long reconstruction time, dependency on prior knowledge of the system Point Spread Function (PSF), and separability of the Ultrasound Contrast Agents (UCAs) need to be addressed for translation of super-resolution US into the clinic. In this work we use a deep neural network architecture that makes effective use of signal structure to address these challenges. We present in vivo human results of three different breast lesions acquired with a clinical US scanner. By leveraging our trained network, the microvasculature structure is recovered in a short time, without prior PSF knowledge, and without requiring separability of the UCAs. Each of the recoveries exhibits a different structure that corresponds with the known histological structure. This study demonstrates the feasibility of in vivo human super-resolution, based on a clinical scanner, to increase US specificity for different breast lesions and promotes the use of US in the diagnosis of breast pathologies.

COVID-19 Classification of X-ray Images Using Deep Neural Networks

Oct 07, 2020

Abstract: In the midst of the coronavirus disease 2019 (COVID-19) outbreak, chest X-ray (CXR) imaging is playing an important role in the diagnosis and monitoring of patients with COVID-19. Machine learning solutions have been shown to be useful for X-ray analysis and classification in a range of medical contexts. The purpose of this study is to create and evaluate a machine learning model for diagnosis of COVID-19, and to provide a tool for searching for similar patients according to their X-ray scans. In this retrospective study, a classifier was built using a pre-trained deep learning model (ResNet50) and enhanced by data augmentation and lung segmentation to detect COVID-19 in frontal CXR images collected between January 2018 and July 2020 in four hospitals in Israel. A nearest-neighbors algorithm was implemented based on the network results that identifies the images most similar to a given image. The model was evaluated using accuracy, sensitivity, and the area under the curve (AUC) of the receiver operating characteristic (ROC) curve and of the precision-recall (P-R) curve. The dataset sourced for this study includes 2362 CXRs, balanced for positive and negative COVID-19, from 1384 patients (63 +/- 18 years, 552 men). Our model achieved 89.7% (314/350) accuracy and 87.1% (156/179) sensitivity in classification of COVID-19 on a test dataset comprising 15% (350 of 2326) of the original data, with AUC of ROC 0.95 and AUC of the P-R curve 0.94. For each image we retrieve images with the most similar DNN-based image embeddings; these can be used to compare with previous cases.
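The retrieval step described in the abstract can be illustrated with a minimal sketch. It assumes each image has already been mapped to a fixed-length embedding vector and that similarity is measured by Euclidean distance; the abstract does not state the metric, the embedding size, or the implementation, so the function names `euclidean` and `nearest_neighbors` and the toy vectors below are hypothetical.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_neighbors(query, embeddings, k=3):
    """Return the indices of the k stored embeddings closest to the query."""
    ranked = sorted(
        range(len(embeddings)),
        key=lambda i: euclidean(query, embeddings[i]),
    )
    return ranked[:k]


# Toy 3-D vectors standing in for DNN-based image embeddings (illustrative only).
embeddings = [
    [0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.1],
    [5.0, 5.0, 5.0],
]
query = [1.0, 0.0, 0.0]

# Indices of the two stored images most similar to the query image.
closest = nearest_neighbors(query, embeddings, k=2)
print(closest)
```

A brute-force scan like this is adequate for a few thousand images; a larger archive would typically call for an indexed nearest-neighbor structure instead.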
