Yael Mathov

Enhancing Real-World Adversarial Patches with 3D Modeling Techniques

Feb 10, 2021

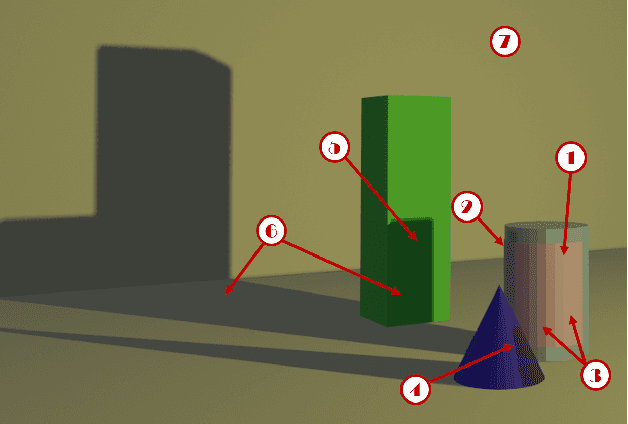

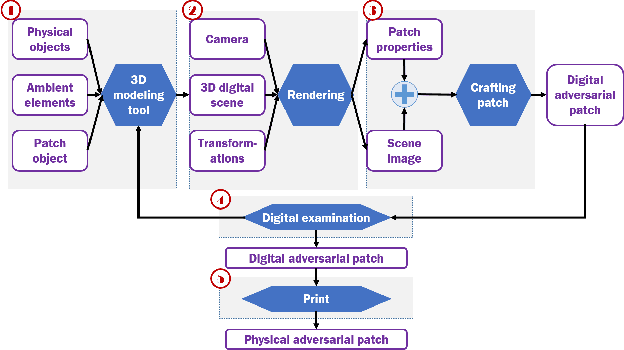

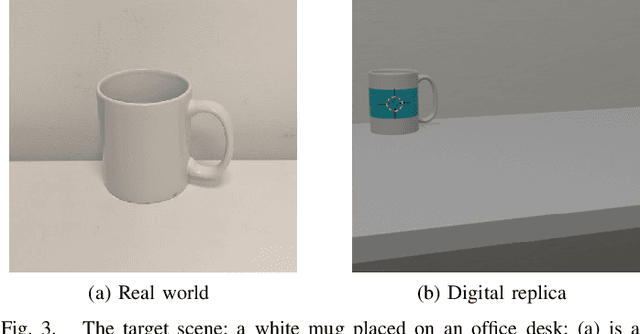

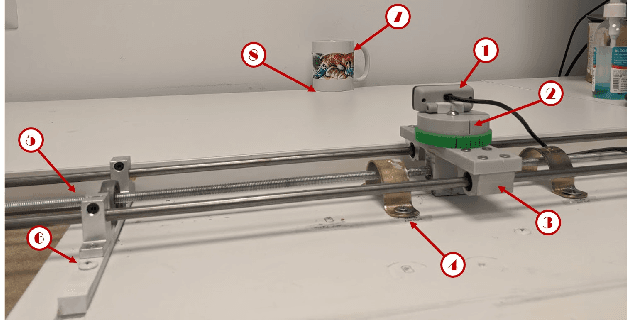

Abstract: Although many studies have examined adversarial examples in the real world, most of them relied on 2D photos of the attack scene; thus, the proposed attacks cannot address realistic environments with 3D objects or varied conditions. Studies that use 3D objects are limited, and in many cases, the real-world evaluation process cannot be replicated by other researchers. In this study, we present a framework that crafts an adversarial patch for an existing real-world scene. Our approach uses a 3D digital approximation of the scene as a simulation of the real world. With the ability to add and manipulate any element in the digital scene, our framework enables the attacker to improve the patch's robustness in real-world settings. We use the framework to create a patch for an everyday scene and evaluate its performance using a novel evaluation process that ensures that our results are reproducible in both the digital space and the real world. Our evaluation results show that the framework can generate adversarial patches that are robust to different settings in the real world.

Stop Bugging Me! Evading Modern-Day Wiretapping Using Adversarial Perturbations

Oct 24, 2020

Abstract: Mass surveillance systems for voice over IP (VoIP) conversations pose a huge risk to privacy. These automated systems use learning models to analyze conversations, and upon detecting calls that involve specific topics, route them to a human agent. In this study, we present an adversarial learning-based framework for privacy protection for VoIP conversations. We present a novel algorithm that finds a universal adversarial perturbation (UAP), which, when added to the audio stream, prevents an eavesdropper from automatically detecting the conversation's topic. As shown in our experiments, the UAP is agnostic to the speaker and audio length, and its volume can be changed in real time, as needed. In a real-world demonstration, we use a Teensy microcontroller that acts as an external microphone and adds the UAP to the audio in real time. We examine different speakers, VoIP applications (Skype, Zoom), audio lengths, and speech-to-text models (Deep Speech, Kaldi). Our results in the real world suggest that our approach is a feasible solution for privacy protection.
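The idea of mixing one fixed perturbation into audio of any length, at an adjustable volume, can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `add_uap`, the `gain` parameter, the tiling strategy, and the assumption that samples are floats in [-1, 1] are all hypothetical choices made for illustration. How the perturbation itself is found is outside the scope of this sketch.

```python
from itertools import cycle, islice


def add_uap(audio, uap, gain=1.0):
    """Mix a fixed perturbation into an audio clip of any length.

    audio: list of float samples, assumed to lie in [-1.0, 1.0]
    uap:   list of float samples for the precomputed perturbation
    gain:  volume multiplier for the perturbation (hypothetical knob)
    """
    if not uap:
        raise ValueError("perturbation must contain at least one sample")
    # Repeat the perturbation so it covers the whole clip, whatever its length.
    tiled = islice(cycle(uap), len(audio))
    # Add the scaled perturbation and clip back into the valid sample range.
    return [max(-1.0, min(1.0, s + gain * p)) for s, p in zip(audio, tiled)]


silence = [0.0] * 5
print(add_uap(silence, [0.1, -0.1], gain=2.0))
```

Because the perturbation is simply repeated and scaled, the same precomputed signal can be applied to short or long clips, and the gain can be changed between chunks without recomputing anything.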

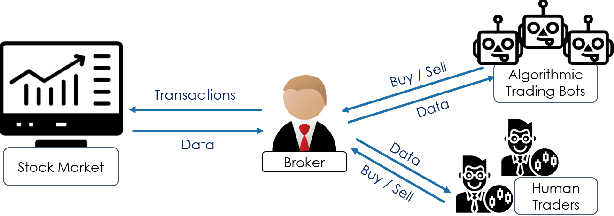

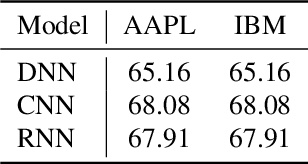

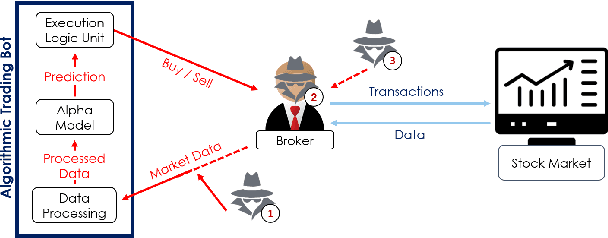

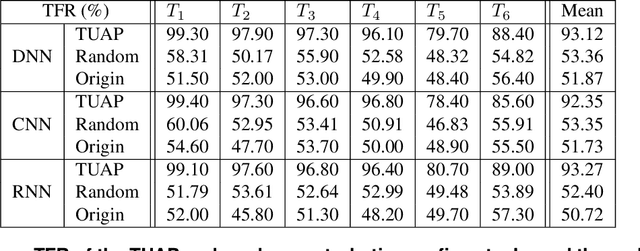

When Bots Take Over the Stock Market: Evasion Attacks Against Algorithmic Traders

Oct 19, 2020

Abstract: In recent years, machine learning has become prevalent in numerous tasks, including algorithmic trading. Stock market traders utilize learning models to predict the market's behavior and execute an investment strategy accordingly. However, learning models have been shown to be susceptible to input manipulations called adversarial examples. Yet, the trading domain remains largely unexplored in the context of adversarial learning. This is mainly because of the rapid changes in the market, which impair the attacker's ability to create a real-time attack. In this study, we present a realistic scenario in which an attacker gains control of an algorithmic trading bot by manipulating the input data stream in real time. The attacker creates a universal perturbation that is agnostic to the target model and time of use, while also remaining imperceptible. We evaluate our attack on a real-world market data stream and target three different trading architectures. We show that our perturbation can fool the model at future unseen data points, in both white-box and black-box settings. We believe these findings should serve as an alert to the finance community about the threats in this area and prompt further research on the risks associated with using automated learning models in the finance domain.

Not All Datasets Are Born Equal: On Heterogeneous Data and Adversarial Examples

Oct 07, 2020

Abstract: Recent work on adversarial learning has focused mainly on neural networks and domains where they excel, such as computer vision. The data in these domains is homogeneous, whereas heterogeneous tabular data domains remain underexplored despite their prevalence. Constructing an attack on models with heterogeneous input spaces is challenging, as they are governed by complex domain-specific validity rules and composed of nominal, ordinal, and numerical features. We argue that machine learning models trained on heterogeneous tabular data are as susceptible to adversarial manipulations as those trained on continuous or homogeneous data such as images. In this paper, we introduce an optimization framework for identifying adversarial perturbations in heterogeneous input spaces. We define distribution-aware constraints for preserving the consistency of the adversarial examples and incorporate them by embedding the heterogeneous input into a continuous latent space. Our approach focuses on an adversary who aims to craft valid perturbations of minimal l_0-norm and apply them in real life. We propose a neural network-based implementation of our approach and demonstrate its effectiveness using three datasets from different content domains. Our results suggest that despite the several constraints heterogeneity imposes on the input space of a machine learning model, the susceptibility to adversarial examples remains unimpaired.
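For readers unfamiliar with the l_0-norm in a tabular setting, the sketch below shows what it measures: the number of features that differ between an original record and its perturbed version, regardless of how large each change is. The record layout and the feature names (`job`, `education`, `income`) are hypothetical and are not taken from the paper's datasets; the sketch only computes the distance and does not reproduce the optimization framework.

```python
def l0_distance(original, perturbed):
    """Count the features whose value changed between two records.

    Both arguments are dicts mapping feature name to value. The count
    ignores the magnitude of each change, which is what makes l_0 a
    natural measure for mixed nominal, ordinal, and numerical features.
    """
    if original.keys() != perturbed.keys():
        raise ValueError("records must share the same features")
    return sum(1 for name in original if original[name] != perturbed[name])


# Hypothetical record: one nominal, one ordinal, one numerical feature.
record = {"job": "clerk", "education": 2, "income": 41000.0}
adversarial = {"job": "clerk", "education": 3, "income": 41500.0}
print(l0_distance(record, adversarial))  # two features were modified
```

A perturbation with a small l_0 distance touches few features, which is why an adversary who must apply the change in real life would prefer it.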

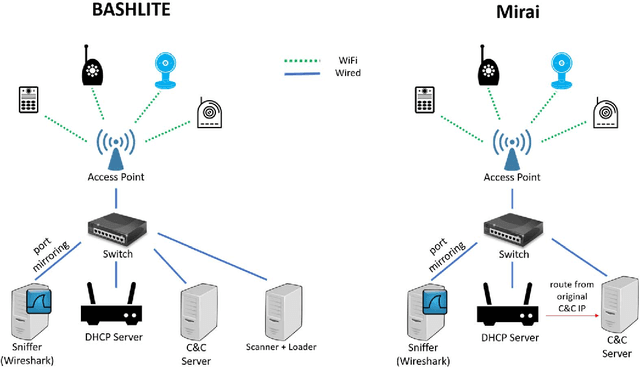

N-BaIoT: Network-based Detection of IoT Botnet Attacks Using Deep Autoencoders

May 09, 2018

Abstract: The proliferation of IoT devices, which can be more easily compromised than desktop computers, has led to an increase in the occurrence of IoT-based botnet attacks. In order to mitigate this new threat, there is a need to develop new methods for detecting attacks launched from compromised IoT devices and to differentiate between hour-long and millisecond-long IoT-based attacks. In this paper, we propose and empirically evaluate a novel network-based anomaly detection method which extracts behavior snapshots of the network and uses deep autoencoders to detect anomalous network traffic emanating from compromised IoT devices. To evaluate our method, we infected nine commercial IoT devices in our lab with two of the most widely known IoT-based botnets, Mirai and BASHLITE. Our evaluation results demonstrated our proposed method's ability to accurately and instantly detect the attacks as they were being launched from the compromised IoT devices which were part of a botnet.
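Autoencoder-based anomaly detection typically flags a sample when its reconstruction error exceeds a threshold learned from benign data. The sketch below illustrates only that decision rule. It makes several assumptions that the abstract does not state: the threshold is set to the mean plus one standard deviation of benign reconstruction errors, and the trained deep autoencoder is replaced by a toy stand-in (`toy_reconstruct`) that clamps features to [0, 1]. All function names are hypothetical.

```python
from statistics import mean, stdev


def mse(x, x_hat):
    """Mean squared error between a sample and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def fit_threshold(benign_samples, reconstruct):
    """Assumed rule: mean + one standard deviation of benign errors."""
    errors = [mse(x, reconstruct(x)) for x in benign_samples]
    return mean(errors) + stdev(errors)


def is_anomalous(x, reconstruct, threshold):
    """Flag a traffic snapshot that the model reconstructs poorly."""
    return mse(x, reconstruct(x)) > threshold


def toy_reconstruct(x):
    """Stand-in for a trained autoencoder: clamps features to [0, 1]."""
    return [min(1.0, max(0.0, v)) for v in x]


benign = [[0.2, 0.5], [1.1, 0.3], [0.4, -0.1]]
threshold = fit_threshold(benign, toy_reconstruct)
print(is_anomalous([5.0, 0.5], toy_reconstruct, threshold))  # far outside benign range
print(is_anomalous([0.5, 0.5], toy_reconstruct, threshold))  # looks like benign traffic
```

In a real deployment `toy_reconstruct` would be replaced by the forward pass of an autoencoder trained on benign traffic from each device, and the threshold would be tuned on held-out benign data.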
