Xuran Ming

PEL-BERT: A Joint Model for Protocol Entity Linking

Jan 28, 2020

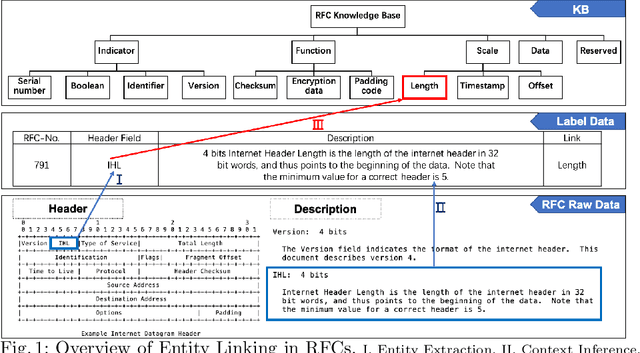

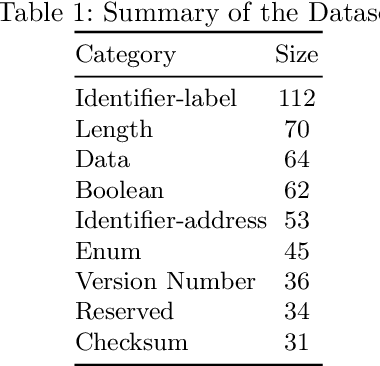

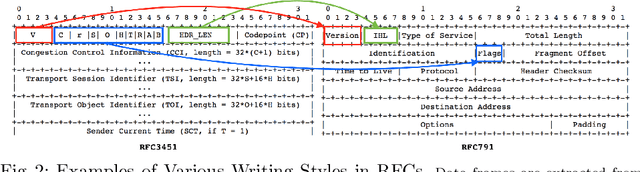

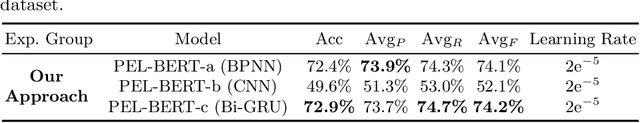

Abstract: Pre-trained models such as BERT are widely used in NLP and, once fine-tuned, consistently improve performance across a variety of tasks. Nevertheless, a BERT model fine-tuned on our protocol corpus still performs poorly on the Entity Linking (EL) task. In this paper, we propose a model that joins a fine-tuned language model with an RFC Domain Model. First, we design a Protocol Knowledge Base as the guideline for protocol EL. Second, we propose a novel model, PEL-BERT, to link named entities in protocols to categories in the Protocol Knowledge Base. Finally, we conduct a comprehensive study of the performance of pre-trained language models on descriptive texts and abstract concepts. Experimental results demonstrate that our model achieves state-of-the-art performance in EL on our annotated dataset, outperforming all the baselines.
