Xujie Wan

Attention-Guided Multi-scale Interaction Network for Face Super-Resolution

Sep 01, 2024

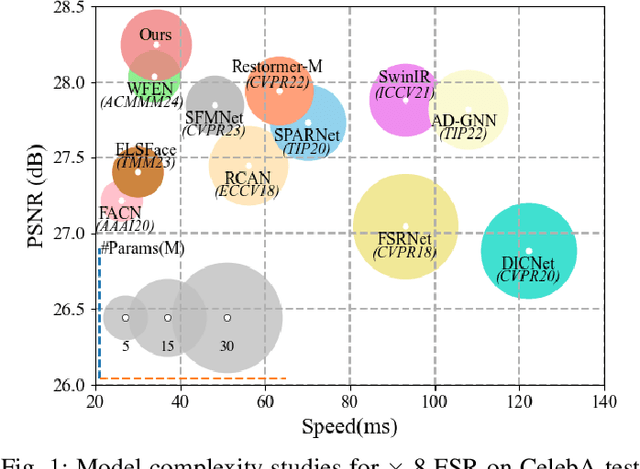

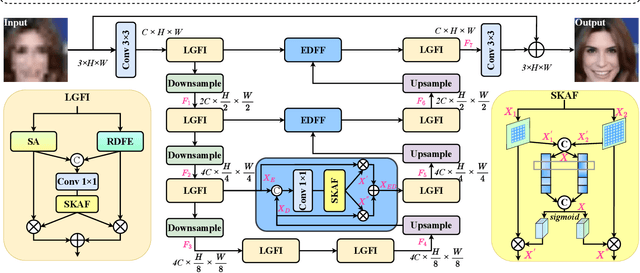

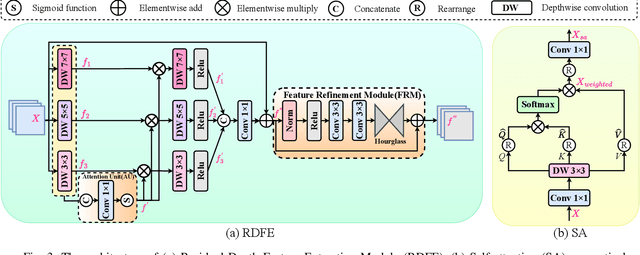

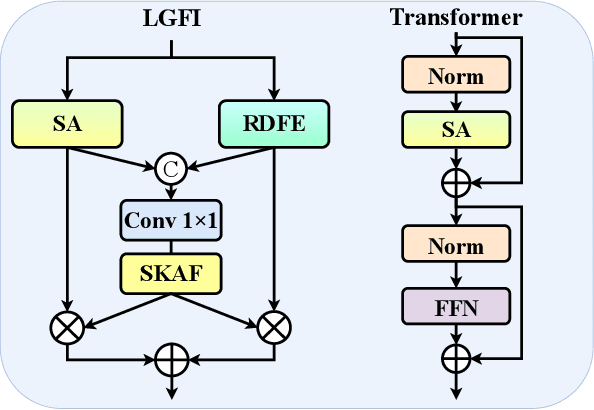

Abstract:Recently, CNN and Transformer hybrid networks demonstrated excellent performance in face super-resolution (FSR) tasks. Since numerous features at different scales in hybrid networks, how to fuse these multi-scale features and promote their complementarity is crucial for enhancing FSR. However, existing hybrid network-based FSR methods ignore this, only simply combining the Transformer and CNN. To address this issue, we propose an attention-guided Multi-scale interaction network (AMINet), which contains local and global feature interactions as well as encoder-decoder phases feature interactions. Specifically, we propose a Local and Global Feature Interaction Module (LGFI) to promote fusions of global features and different receptive fields' local features extracted by our Residual Depth Feature Extraction Module (RDFE). Additionally, we propose a Selective Kernel Attention Fusion Module (SKAF) to adaptively select fusions of different features within LGFI and encoder-decoder phases. Our above design allows the free flow of multi-scale features from within modules and between encoder and decoder, which can promote the complementarity of different scale features to enhance FSR. Comprehensive experiments confirm that our method consistently performs well with less computational consumption and faster inference.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge