Xue Wan

StepNav: Structured Trajectory Priors for Efficient and Multimodal Visual Navigation

Feb 01, 2026

Abstract:Visual navigation is fundamental to autonomous systems, yet generating reliable trajectories in cluttered and uncertain environments remains a core challenge. Recent generative models promise end-to-end synthesis, but their reliance on unstructured noise priors often yields unsafe, inefficient, or unimodal plans that cannot meet real-time requirements. We propose StepNav, a novel framework that bridges this gap by introducing structured, multimodal trajectory priors derived from variational principles. StepNav first learns a geometry-aware success probability field to identify all feasible navigation corridors. These corridors are then used to construct an explicit, multi-modal mixture prior that initializes a conditional flow-matching process. This refinement is formulated as an optimal control problem with explicit smoothness and safety regularization. By replacing unstructured noise with physically-grounded candidates, StepNav generates safer and more efficient plans in significantly fewer steps. Experiments in both simulation and real-world benchmarks demonstrate consistent improvements in robustness, efficiency, and safety over state-of-the-art generative planners, advancing reliable trajectory generation for practical autonomous navigation. The code has been released at https://github.com/LuoXubo/StepNav.

LuSNAR:A Lunar Segmentation, Navigation and Reconstruction Dataset based on Muti-sensor for Autonomous Exploration

Jul 09, 2024

Abstract:With the complexity of lunar exploration missions, lunar rovers need a higher level of autonomy. Environmental perception and navigation algorithms are the foundation for lunar rovers to achieve autonomous exploration. The development and verification of these algorithms require highly reliable data support. Most existing lunar datasets target a single task and lack diverse scenes and high-precision ground truth labels. To address this issue, we propose LuSNAR, a multi-task, multi-scene, and multi-label lunar benchmark dataset. The dataset can be used for comprehensive evaluation of autonomous perception and navigation systems, and it includes high-resolution stereo image pairs, panoramic semantic labels, dense depth maps, LiDAR point clouds, and the position of the rover. To provide richer scene data, we built 9 lunar simulation scenes based on Unreal Engine. Each scene is categorized according to topographic relief and the density of objects. To verify the usability of the dataset, we evaluated and analyzed algorithms for semantic segmentation, 3D reconstruction, and autonomous navigation. The experimental results show that the proposed dataset can be used for ground verification of tasks such as autonomous environment perception and navigation, and that it provides a lunar benchmark dataset for testing the accessibility of algorithm metrics. We make LuSNAR publicly available at: https://github.com/autumn999999/LuSNAR-dataset.

JointLoc: A Real-time Visual Localization Framework for Planetary UAVs Based on Joint Relative and Absolute Pose Estimation

May 13, 2024

Abstract:Visual localization of unmanned aerial vehicles (UAVs) in planetary environments aims to estimate the absolute pose of the UAV in the world coordinate system using satellite maps and images captured by on-board cameras. However, because planetary scenes often lack significant landmarks and there are modal differences between satellite maps and UAV images, the accuracy and real-time performance of UAV positioning are reduced. To accurately determine the position of the UAV in a planetary scene in the absence of a global navigation satellite system (GNSS), this paper proposes JointLoc, which estimates the real-time UAV position in the world coordinate system by adaptively fusing the absolute 2-degree-of-freedom (2-DoF) pose and the relative 6-degree-of-freedom (6-DoF) pose. Extensive comparative experiments were conducted on a proposed planetary UAV image cross-modal localization dataset, which contains three types of typical Martian topography generated via a simulation engine as well as real Martian UAV images from the Ingenuity helicopter. JointLoc achieved a root-mean-square error of 0.237 m on trajectories of up to 1,000 m, compared to 0.594 m and 0.557 m for ORB-SLAM2 and ORB-SLAM3 respectively. The source code will be available at https://github.com/LuoXubo/JointLoc.
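As a rough illustration of the root-mean-square error metric quoted in this abstract, the following minimal sketch computes a positional RMSE between an estimated and a ground-truth trajectory. The function name `trajectory_rmse` and the sample data are hypothetical, and the sketch assumes both trajectories are already time-synchronized and expressed in the same coordinate frame; the abstract does not state the exact evaluation protocol, so this is not necessarily how the reported numbers were produced.

```python
import numpy as np


def trajectory_rmse(estimated, ground_truth):
    """Root-mean-square positional error between two aligned trajectories.

    Both inputs are (N, D) arrays of positions, assumed to be sampled at the
    same timestamps and expressed in the same frame (an assumption of this
    sketch, not something stated in the abstract).
    """
    estimated = np.asarray(estimated, dtype=float)
    ground_truth = np.asarray(ground_truth, dtype=float)
    if estimated.shape != ground_truth.shape:
        raise ValueError("trajectories must have the same shape")
    # Euclidean distance between matching poses at each timestamp.
    errors = np.linalg.norm(estimated - ground_truth, axis=1)
    return float(np.sqrt(np.mean(errors ** 2)))


# Hypothetical 2D example: one pose is off by 5 m, the other three are exact.
gt = np.zeros((4, 2))
est = np.array([[3.0, 4.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]])
print(trajectory_rmse(est, gt))
```

Because the errors are squared before averaging, a single large deviation weighs more heavily than it would in a mean absolute error.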

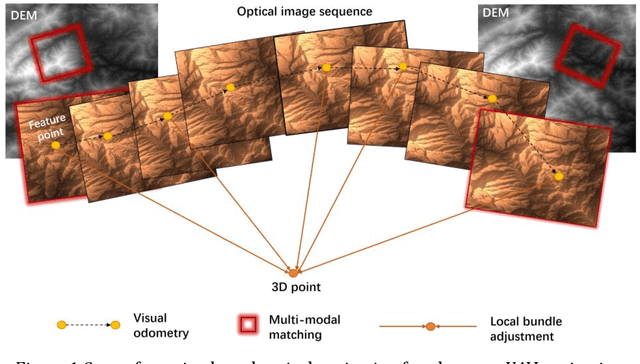

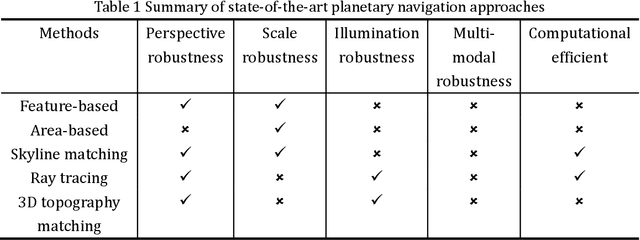

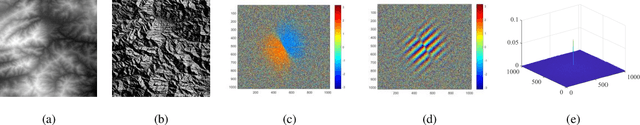

Planetary UAV localization based on Multi-modal Registration with Pre-existing Digital Terrain Model

Jun 24, 2021

Abstract:Autonomous real-time optical navigation of planetary UAVs is one of the key technologies to ensure the success of exploration. In such a GPS-denied environment, vision-based localization is an optimal approach. In this paper, we propose a multi-modal registration based SLAM algorithm, which estimates the location of a planetary UAV by comparing images from a nadir-view camera on the UAV with a pre-existing digital terrain model. To overcome the scale and appearance differences between on-board UAV images and the pre-installed digital terrain model, a theoretical model is proposed to prove that topographic features of the UAV image and the DEM can be correlated in the frequency domain via the cross power spectrum. To provide the six-DoF pose of the UAV, we also developed an optimization approach which fuses the geo-referencing result into a SLAM system via LBA (Local Bundle Adjustment) to achieve robust and accurate vision-based navigation even in featureless planetary areas. To test the robustness and effectiveness of the proposed localization algorithm, a new cross-source drone-based localization dataset for planetary exploration is proposed. The dataset includes 40200 synthetic drone images taken from nine planetary scenes with related DEM query images. Comparison experiments demonstrate that, over a flight distance of 33.8 km, the proposed method achieved an average localization error of 0.45 meters, compared to 1.31 meters for ORB-SLAM, at a processing speed of 12 Hz, which ensures real-time performance. We will make our datasets available to encourage further work on this promising topic.
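The idea of correlating two images in the frequency domain via the cross power spectrum can be sketched with textbook phase correlation. The snippet below is only a minimal illustration under strong assumptions: both inputs are same-size single-channel arrays that differ by a pure circular translation. It does not handle the scale and appearance differences between UAV images and DEMs that the paper addresses, and the function name `phase_correlation_shift` is hypothetical.

```python
import numpy as np


def phase_correlation_shift(reference, moved):
    """Estimate the integer (row, col) shift d with moved ~ np.roll(reference, d).

    Textbook phase correlation: normalize the cross power spectrum so only
    phase remains, then locate the peak of its inverse transform.
    """
    f_ref = np.fft.fft2(reference)
    f_mov = np.fft.fft2(moved)
    cross_power = f_mov * np.conj(f_ref)
    # Keep phase only; the small constant avoids division by zero.
    cross_power /= np.abs(cross_power) + 1e-12
    correlation = np.fft.ifft2(cross_power).real
    peak = np.unravel_index(np.argmax(correlation), correlation.shape)
    # Peaks past the midpoint correspond to negative (wrapped) shifts.
    return tuple(
        int(p) if p <= size // 2 else int(p) - size
        for p, size in zip(peak, correlation.shape)
    )


# Hypothetical example: shift a random "terrain" patch and recover the offset.
rng = np.random.default_rng(0)
terrain = rng.random((64, 64))
shifted = np.roll(terrain, shift=(5, -3), axis=(0, 1))
print(phase_correlation_shift(terrain, shifted))
```

Normalizing away the magnitude makes the correlation peak depend on structure rather than brightness, which is one reason frequency-domain matching is attractive when two sources look different.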

Saliency based Semi-supervised Learning for Orbiting Satellite Tracking

Sep 09, 2019

Abstract:The trajectory and boundary of an orbiting satellite are fundamental information for on-orbit repair and manipulation by space robots. This task, however, is challenging owing to the free and rapid motion of orbiting satellites, the quickly varying background, and sudden changes in illumination conditions. Traditional tracking usually relies on a single bounding box of the target object; however, visual tracking should provide more detailed information, such as a binary mask. In this paper, we propose SSLT (Saliency-based Semi-supervised Learning for Tracking), an algorithm that provides both the bounding box and the binary segmentation mask of target satellites at 12 frames per second without requiring annotated data. SSLT improves segmentation performance by applying a saliency-map-based semi-supervised online learning approach within the initial bounding box estimated by tracking. Once a customized segmentation model has been trained, the bounding box and satellite trajectory are refined using the binary segmentation result. Experiments using real on-orbit rendezvous and docking video from NASA (National Aeronautics and Space Administration), simulated satellite animation sequences from ESA (European Space Agency), and image sequences of a 3D-printed satellite model taken in our laboratory demonstrate the robustness, versatility, and speed of our method compared to state-of-the-art tracking and segmentation methods. Our dataset will be released for academic use in the future.
