Xuan Son Nguyen

Neural Networks on Symmetric Spaces of Noncompact Type

Jan 03, 2026

Abstract: Recent works have demonstrated the promising performance of neural networks on hyperbolic spaces and symmetric positive definite (SPD) manifolds. These spaces belong to a family of Riemannian manifolds referred to as symmetric spaces of noncompact type. In this paper, we propose a novel approach for developing neural networks on such spaces. Our approach relies on a unified formulation of the distance from a point to a hyperplane on the considered spaces. We show that some existing formulations of the point-to-hyperplane distance can be recovered by our approach under specific settings. Furthermore, we derive a closed-form expression for the point-to-hyperplane distance in higher-rank symmetric spaces of noncompact type equipped with G-invariant Riemannian metrics. The derived distance then serves as a tool to design fully-connected (FC) layers and an attention mechanism for neural networks on the considered spaces. Our approach is validated on challenging benchmarks for image classification, electroencephalogram (EEG) signal classification, image generation, and natural language inference.

Siegel Neural Networks

Nov 12, 2025

Abstract: Riemannian symmetric spaces (RSS) such as hyperbolic spaces and symmetric positive definite (SPD) manifolds have become popular spaces for representation learning. In this paper, we propose a novel approach for building discriminative neural networks on Siegel spaces, a family of RSS that is largely unexplored in machine learning tasks. For classification applications, one focus of recent works is the construction of multiclass logistic regression (MLR) and fully-connected (FC) layers for hyperbolic and SPD neural networks. Here we show how to build such layers for Siegel neural networks. Our approach relies on the quotient structure of those spaces and the notion of vector-valued distance on RSS. We demonstrate the relevance of our approach on two applications, i.e., radar clutter classification and node classification. Our results demonstrate state-of-the-art performance across all datasets.

Matrix Manifold Neural Networks++

May 29, 2024

Abstract: Deep neural networks (DNNs) on Riemannian manifolds have garnered increasing interest in various applied areas. For instance, DNNs on spherical and hyperbolic manifolds have been designed to solve a wide range of computer vision and natural language processing tasks. One of the key factors that contribute to the success of these networks is that spherical and hyperbolic manifolds have the rich algebraic structures of gyrogroups and gyrovector spaces. This enables principled and effective generalizations of the most successful DNNs to these manifolds. Recently, some works have shown that many concepts in the theory of gyrogroups and gyrovector spaces can also be generalized to matrix manifolds such as Symmetric Positive Definite (SPD) and Grassmann manifolds. As a result, some building blocks for SPD and Grassmann neural networks, e.g., isometric models and multinomial logistic regression (MLR), can be derived in a way that is fully analogous to their spherical and hyperbolic counterparts. Building upon these works, we design fully-connected (FC) and convolutional layers for SPD neural networks. We also develop MLR on Symmetric Positive Semi-definite (SPSD) manifolds, and propose a method for performing backpropagation with the Grassmann logarithmic map in the projector perspective. We demonstrate the effectiveness of the proposed approach in human action recognition and node classification tasks.

Building Neural Networks on Matrix Manifolds: A Gyrovector Space Approach

May 08, 2023

Abstract: Matrix manifolds, such as manifolds of Symmetric Positive Definite (SPD) matrices and Grassmann manifolds, appear in many applications. Recently, by applying the theory of gyrogroups and gyrovector spaces, which is a powerful framework for studying hyperbolic geometry, some works have attempted to build principled generalizations of Euclidean neural networks on matrix manifolds. However, because many concepts in gyrovector spaces, e.g., the inner product and gyroangles, are lacking for the considered manifolds, the techniques and mathematical tools provided by these works are still limited compared to those developed for studying hyperbolic geometry. In this paper, we generalize some notions in gyrovector spaces for SPD and Grassmann manifolds, and propose new models and layers for building neural networks on these manifolds. We show the effectiveness of our approach in two applications, i.e., human action recognition and knowledge graph completion.

GeomNet: A Neural Network Based on Riemannian Geometries of SPD Matrix Space and Cholesky Space for 3D Skeleton-Based Interaction Recognition

Nov 25, 2021

Abstract: In this paper, we propose a novel method for representation and classification of two-person interactions from 3D skeleton sequences. The key idea of our approach is to use Gaussian distributions to capture statistics on $\mathbb{R}^n$ and those on the space of symmetric positive definite (SPD) matrices. The main challenge is how to parametrize those distributions. To this end, we develop methods for embedding Gaussian distributions in matrix groups based on the theory of Lie groups and Riemannian symmetric spaces. Our method relies on the Riemannian geometry of the underlying manifolds and has the advantage of encoding high-order statistics from 3D joint positions. We show that the proposed method achieves competitive results in two-person interaction recognition on three benchmarks for 3D human activity understanding.

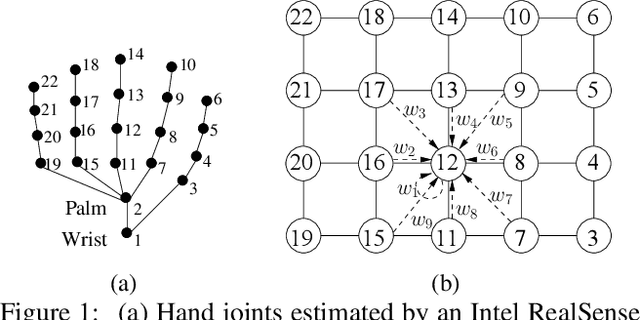

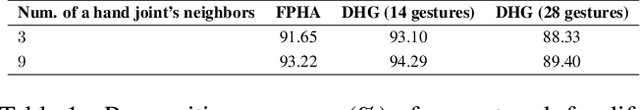

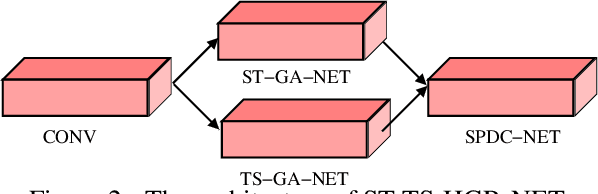

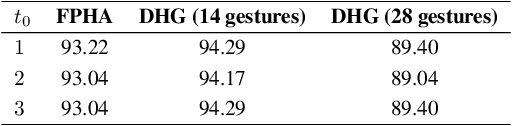

A neural network based on SPD manifold learning for skeleton-based hand gesture recognition

Apr 29, 2019

Abstract: This paper proposes a new neural network based on SPD manifold learning for skeleton-based hand gesture recognition. Given the stream of hand joint positions, our approach combines two aggregation processes on the spatial and temporal domains, respectively. The pipeline of our network architecture consists of three main stages. The first stage is based on a convolutional layer to increase the discriminative power of learned features. The second stage relies on different architectures for spatial and temporal Gaussian aggregation of joint features. The third stage learns a final SPD matrix from skeletal data. A new type of layer is proposed for the third stage, based on a variant of stochastic gradient descent on Stiefel manifolds. The proposed network is validated on two challenging datasets and shows state-of-the-art accuracies on both datasets.
