Xinsheng Xuan

A New Ensemble Method for Concessively Targeted Multi-model Attack

Dec 19, 2019

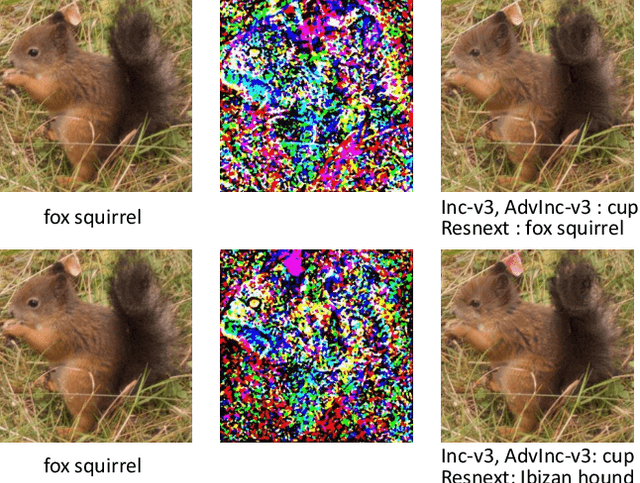

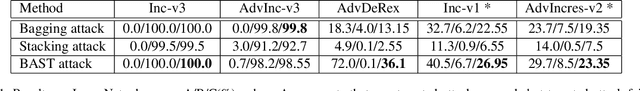

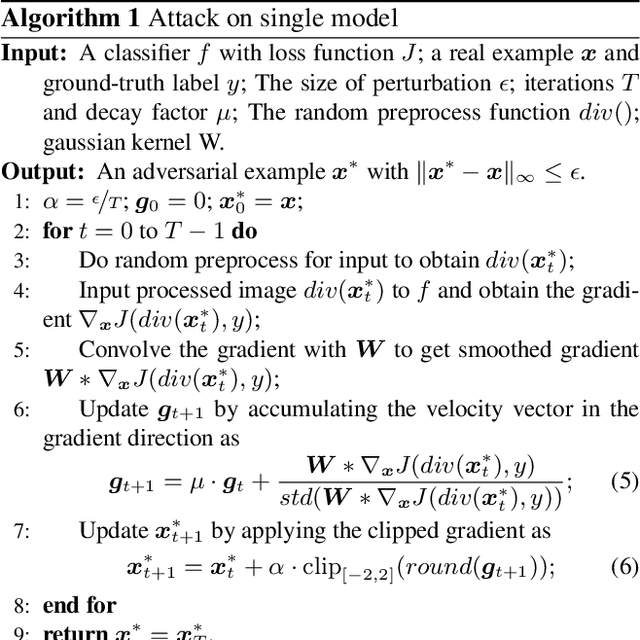

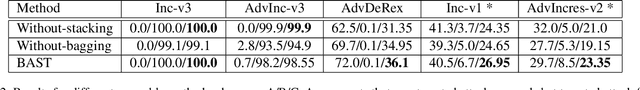

Abstract: It is well known that deep learning models are vulnerable to adversarial examples crafted by maliciously adding perturbations to original inputs. There are two types of attack, targeted and non-targeted, and most researchers pay more attention to targeted adversarial examples. However, targeted attacks have a low success rate, especially when aimed at a robust model or carried out under a black-box attack protocol. In this case, a non-targeted attack is the last chance to disable AI systems. Thus, in this paper, we propose a new attack mechanism that performs a non-targeted attack when the targeted attack fails. In addition, we aim to generate a single adversarial sample for different deployed models of the same task, e.g. image classification models. For this practical application, we focus on attacking ensemble models by dividing them into two groups: easy-to-attack models and robust models. We alternately attack these two groups in a non-targeted or targeted manner. We name this the bagging and stacking ensemble (BAST) attack. The BAST attack can generate an adversarial sample that causes multiple models to fail simultaneously: some models classify the adversarial sample as the target label, while the models that are not attacked successfully at least give wrong labels. The experimental results show that the proposed BAST attack outperforms state-of-the-art attack methods on a newly defined criterion that considers both targeted and non-targeted attack performance.
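The alternating idea in this abstract can be illustrated with a small sketch. This is not the paper's BAST algorithm: the linear softmax models, the sign-gradient update, the L-infinity budget `eps`, and the choice to run the targeted step on the easy-to-attack group and the non-targeted step on the robust group are all assumptions made for illustration, and every function name is hypothetical.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over a 1-D logit vector.
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def ce_grad(W, x, label):
    # Gradient of the cross-entropy loss w.r.t. the input x
    # for a toy linear model with logits = W @ x (assumed model form).
    p = softmax(W @ x)
    p[label] -= 1.0
    return W.T @ p

def alternating_attack(x, true_label, target_label,
                       easy_models, robust_models,
                       eps=0.5, step=0.05, n_iter=40):
    # Hypothetical schedule: even iterations take a targeted step on the
    # easy group, odd iterations take a non-targeted step on the robust group.
    x_adv = x.copy()
    for i in range(n_iter):
        if i % 2 == 0:
            # Targeted: decrease the loss for the target label.
            g = sum(ce_grad(W, x_adv, target_label) for W in easy_models)
            x_adv = x_adv - step * np.sign(g)
        else:
            # Non-targeted: increase the loss for the true label.
            g = sum(ce_grad(W, x_adv, true_label) for W in robust_models)
            x_adv = x_adv + step * np.sign(g)
        # Keep the perturbation inside the assumed L-infinity budget.
        x_adv = np.clip(x_adv, x - eps, x + eps)
    return x_adv

rng = np.random.default_rng(0)
easy = [rng.normal(size=(3, 5)) for _ in range(2)]
robust = [rng.normal(size=(3, 5))]
x = rng.normal(size=5)
x_adv = alternating_attack(x, true_label=0, target_label=1,
                           easy_models=easy, robust_models=robust)
```

The single `x_adv` is shared by all models, which mirrors the abstract's goal of one adversarial sample for several deployed models; whether any given model is actually fooled depends on the models and the budget.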

Scalable Fine-grained Generated Image Classification Based on Deep Metric Learning

Dec 10, 2019

Abstract: Generated images have recently reached such high quality that even human eyes cannot tell them apart from real images. Although the forensic community already has some methods for detecting generated images, most of them detect only a single type of generated image. New types of generated images keep emerging, and the existing detection methods cope poorly with them. These problems prompted us to propose a scalable framework for multi-class classification based on deep metric learning, which aims to classify generated images at a finer granularity. In addition, we have made the framework scalable so that it can cope with the constant emergence of new types of generated images, and fine-tuning lets the model achieve better detection performance on new types of generated data.
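As a rough illustration of why a metric-learning framework can scale to new classes, the sketch below uses a triplet loss and a nearest-center classifier. The abstract does not say which metric-learning loss or classifier the paper uses, so both are assumptions, and the names `triplet_loss`, `nearest_class`, and the class labels are hypothetical.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, margin=1.0):
    # Pull same-class embeddings together and push different-class
    # embeddings apart by at least `margin` (squared Euclidean distance).
    d_pos = np.sum((anchor - positive) ** 2)
    d_neg = np.sum((anchor - negative) ** 2)
    return max(0.0, d_pos - d_neg + margin)

def nearest_class(embedding, class_centers):
    # Assign the class whose center is closest in embedding space.
    names = list(class_centers)
    dists = [np.linalg.norm(embedding - class_centers[n]) for n in names]
    return names[int(np.argmin(dists))]

# Hypothetical 2-D embeddings; a trained network would produce these.
centers = {"real": np.array([0.0, 0.0]), "gan_a": np.array([5.0, 5.0])}

# A new generator type only needs a new center, not a new output layer.
centers["gan_b"] = np.array([-5.0, 5.0])

label = nearest_class(np.array([-4.0, 4.5]), centers)
loss_easy = triplet_loss(np.array([0.0, 0.0]), np.array([0.0, 1.0]),
                         np.array([3.0, 0.0]))
loss_hard = triplet_loss(np.array([0.0, 0.0]), np.array([0.0, 1.0]),
                         np.array([1.0, 0.0]))
```

In this toy setup, adding a class is a dictionary update; in practice the embedding network would likely still need the fine-tuning the abstract mentions.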

On the generalization of GAN image forensics

Feb 27, 2019

Abstract: GAN-generated face images have recently become increasingly realistic and high-quality, to the point that they are hard for human eyes to detect. Meanwhile, the forensics community keeps developing methods to detect these generated fake images and tries to guarantee the credibility of visual content. Although researchers have developed some methods to detect generated images, few of them explore the important problem of the generalization ability of forensics models. As new types of GANs are emerging fast, the ability of forensics models to generalize to new types of GAN images is an essential research topic. In this paper, we explore this problem and propose using preprocessed images to train a forensic CNN model. By applying similar image-level preprocessing to both real and fake training images, the forensics model is forced to learn more intrinsic features for classifying generated and real face images. Our experimental results demonstrate the effectiveness of the proposed method.
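A minimal sketch of the preprocessing idea follows. The abstract does not specify which image-level operation is applied, so additive Gaussian noise is used here purely as an assumed example, and `add_gaussian_noise`, `preprocess_batch`, and the `sigma` value are hypothetical.

```python
import numpy as np

def add_gaussian_noise(image, sigma, rng):
    # Perturb pixel values, then clip back to the valid 8-bit range.
    noisy = image.astype(np.float64) + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)

def preprocess_batch(real_images, fake_images, sigma=5.0, seed=0):
    # Apply the same operation with the same strength to both classes,
    # so the preprocessing itself cannot become a class cue.
    rng = np.random.default_rng(seed)
    real = [add_gaussian_noise(im, sigma, rng) for im in real_images]
    fake = [add_gaussian_noise(im, sigma, rng) for im in fake_images]
    return real, fake

# Placeholder 8x8 grayscale arrays standing in for face images.
real_in = [np.full((8, 8), 120, dtype=np.uint8)]
fake_in = [np.full((8, 8), 130, dtype=np.uint8)]
real_out, fake_out = preprocess_batch(real_in, fake_in)
```

The point of the design is symmetry: because real and fake images pass through the same function, a classifier trained on the outputs has to rely on differences that survive the preprocessing.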
