Xingxing Yang

HiMem: Hierarchical Long-Term Memory for LLM Long-Horizon Agents

Jan 10, 2026

Abstract:Although long-term memory systems have made substantial progress in recent years, they still exhibit clear limitations in adaptability, scalability, and self-evolution under continuous interaction settings. Inspired by cognitive theories, we propose HiMem, a hierarchical long-term memory framework for long-horizon dialogues, designed to support memory construction, retrieval, and dynamic updating during sustained interactions. HiMem constructs cognitively consistent Episode Memory via a Topic-Aware Event-Surprise Dual-Channel Segmentation strategy, and builds Note Memory that captures stable knowledge through a multi-stage information extraction pipeline. These two memory types are semantically linked to form a hierarchical structure that bridges concrete interaction events and abstract knowledge, enabling efficient retrieval without sacrificing information fidelity. HiMem supports both hybrid and best-effort retrieval strategies to balance accuracy and efficiency, and incorporates conflict-aware Memory Reconsolidation to revise and supplement stored knowledge based on retrieval feedback. This design enables continual memory self-evolution over long-term use. Experimental results on long-horizon dialogue benchmarks demonstrate that HiMem consistently outperforms representative baselines in accuracy, consistency, and long-term reasoning, while maintaining favorable efficiency. Overall, HiMem provides a principled and scalable design paradigm for building adaptive and self-evolving LLM-based conversational agents. The code is available at https://github.com/jojopdq/HiMem.

DeepSPG: Exploring Deep Semantic Prior Guidance for Low-light Image Enhancement with Multimodal Learning

Apr 27, 2025

Abstract:There has long been a belief that high-level semantics learning can benefit various downstream computer vision tasks. However, in the low-light image enhancement (LLIE) community, existing methods learn a brute-force mapping between low-light and normal-light domains without considering the semantic information of different regions, especially in extremely dark regions that suffer from severe information loss. To address this issue, we propose a new deep semantic prior-guided framework (DeepSPG) based on Retinex image decomposition for LLIE to explore informative semantic knowledge via a pre-trained semantic segmentation model and multimodal learning. Notably, we incorporate both image-level semantic prior and text-level semantic prior and thus formulate a multimodal learning framework with combinatorial deep semantic prior guidance for LLIE. Specifically, we incorporate semantic knowledge to guide the enhancement process via three designs: an image-level semantic prior guidance that leverages hierarchical semantic features from a pre-trained semantic segmentation model; a text-level semantic prior guidance that integrates natural language semantic constraints via a pre-trained vision-language model; and a multi-scale semantic-aware structure that facilitates effective semantic feature incorporation. Our proposed DeepSPG demonstrates superior performance compared to state-of-the-art methods across five benchmark datasets. The implementation details and code are publicly available at https://github.com/Wenyuzhy/DeepSPG.
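
For readers unfamiliar with Retinex image decomposition, the sketch below illustrates the underlying model, in which an image is the element-wise product of a reflectance map and an illumination map. It is not DeepSPG's learned decomposition: the function name `retinex_decompose`, the `eps` guard, and the choice of the per-pixel channel maximum as the illumination estimate are assumptions made only for illustration.

```python
def retinex_decompose(image, eps=1e-6):
    """Split an image into (reflectance, illumination) with I = R * L.

    `image` is an H x W nested list of (r, g, b) tuples in [0, 1].
    Assumption: illumination is estimated as the per-pixel maximum over
    the colour channels, a simple hand-crafted prior rather than a
    learned one.
    """
    illumination = [[max(pixel) for pixel in row] for row in image]
    reflectance = [
        [tuple(c / max(light, eps) for c in pixel)  # eps avoids division by zero
         for pixel, light in zip(row, light_row)]
        for row, light_row in zip(image, illumination)
    ]
    return reflectance, illumination


# A single dark pixel: the illumination is low, the reflectance keeps the colour ratios.
dark_image = [[(0.10, 0.05, 0.02)]]
reflectance, illumination = retinex_decompose(dark_image)
```

Under this model, enhancement amounts to brightening the illumination map while leaving the reflectance, which carries the scene content, largely unchanged.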

Credible plan-driven RAG method for Multi-hop Question Answering

Apr 23, 2025

Abstract:Multi-hop question answering (QA) presents a considerable challenge for Retrieval-Augmented Generation (RAG), requiring the structured decomposition of complex queries into logical reasoning paths and the generation of dependable intermediate results. However, deviations in reasoning paths or errors in intermediate results, which are common in current RAG methods, may propagate and accumulate throughout the reasoning process, diminishing the accuracy of the answer to complex queries. To address this challenge, we propose the Plan-then-Act-and-Review (PAR RAG) framework, which is organized into three key stages: plan, act, and review, and aims to offer an interpretable and incremental reasoning paradigm for accurate and reliable multi-hop question answering by mitigating error propagation. PAR RAG initially applies a top-down problem decomposition strategy, formulating a comprehensive plan that integrates multiple executable steps from a holistic viewpoint. This approach avoids the pitfalls of local optima common in traditional RAG methods, ensuring the accuracy of the entire reasoning path. Subsequently, PAR RAG incorporates a plan execution mechanism based on multi-granularity verification. By utilizing both coarse-grained similarity information and fine-grained relevant data, the framework thoroughly checks and adjusts intermediate results, ensuring process accuracy while effectively managing error propagation and amplification. Experimental results on multi-hop QA datasets demonstrate that the PAR RAG framework substantially outperforms existing state-of-the-art methods in key metrics, including EM and F1 scores.
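
The EM and F1 scores mentioned above are commonly computed per answer on normalized tokens. The following is a minimal sketch of that convention, assuming SQuAD-style normalization (lowercasing, stripping punctuation and English articles); the abstract does not specify the evaluation script, so the helper names and normalization rules here are illustrative only.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> list[str]:
    """Lowercase, drop punctuation and English articles, then split on whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def exact_match(prediction: str, gold: str) -> float:
    """1.0 if the normalized token sequences are identical, else 0.0."""
    return float(normalize(prediction) == normalize(gold))


def f1_score(prediction: str, gold: str) -> float:
    """Harmonic mean of token-level precision and recall."""
    pred_tokens, gold_tokens = normalize(prediction), normalize(gold)
    if not pred_tokens or not gold_tokens:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_tokens == gold_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


em = exact_match("The Eiffel Tower.", "eiffel tower")
f1 = f1_score("Paris France", "Paris")
```

EM rewards only fully correct answers, while F1 gives partial credit for overlapping tokens, which is why the two are usually reported together.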

Learning Physics-Informed Color-Aware Transforms for Low-Light Image Enhancement

Apr 16, 2025

Abstract:Image decomposition offers deep insights into the imaging factors of visual data and significantly enhances various advanced computer vision tasks. In this work, we introduce a novel approach to low-light image enhancement based on decomposed physics-informed priors. Existing methods that directly map low-light to normal-light images in the sRGB color space suffer from inconsistent color predictions and high sensitivity to spectral power distribution (SPD) variations, resulting in unstable performance under diverse lighting conditions. To address these challenges, we introduce a Physics-informed Color-aware Transform (PiCat), a learning-based framework that converts low-light images from the sRGB color space into deep illumination-invariant descriptors via our proposed Color-aware Transform (CAT). This transformation enables robust handling of complex lighting and SPD variations. Complementing this, we propose the Content-Noise Decomposition Network (CNDN), which refines the descriptor distributions to better align with well-lit conditions by mitigating noise and other distortions, thereby effectively restoring content representations to low-light images. The CAT and the CNDN collectively act as a physical prior, guiding the transformation process from low-light to normal-light domains. Our proposed PiCat framework demonstrates superior performance compared to state-of-the-art methods across five benchmark datasets.

ColorMamba: Towards High-quality NIR-to-RGB Spectral Translation with Mamba

Aug 15, 2024

Abstract:Translating NIR to the visible spectrum is challenging due to cross-domain complexities. Current models struggle to balance a broad receptive field with computational efficiency, limiting practical use. Although the Selective Structured State Space Model, especially the improved version, Mamba, excels in generative tasks by capturing long-range dependencies with linear complexity, its default approach of converting 2D images into 1D sequences neglects local context. In this work, we propose a simple but effective backbone, dubbed ColorMamba, which is the first to introduce Mamba into spectral translation tasks. To explore global long-range dependencies and local context for efficient spectral translation, we introduce learnable padding tokens to enhance the distinction of image boundaries and prevent potential confusion within the sequence model. Furthermore, local convolutional enhancement and agent attention are designed to improve the vanilla Mamba. Moreover, we exploit the HSV color to provide multi-scale guidance in the reconstruction process for more accurate spectral translation. Extensive experiments show that our ColorMamba achieves a 1.02 dB improvement in terms of PSNR compared with the state-of-the-art method. Our code is available at https://github.com/AlexYangxx/ColorMamba.
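
The remark that converting 2D images into 1D sequences neglects local context can be made concrete with a small sketch. It assumes a plain row-major scan, which the abstract does not state explicitly; `flat_index` and `sequence_gap` are hypothetical helper names.

```python
def flat_index(row: int, col: int, width: int) -> int:
    """Position of pixel (row, col) in a row-major 1D sequence."""
    return row * width + col


def sequence_gap(a: tuple[int, int], b: tuple[int, int], width: int) -> int:
    """Distance between two pixels once the image has been flattened."""
    return abs(flat_index(a[0], a[1], width) - flat_index(b[0], b[1], width))


width = 64
horizontal_gap = sequence_gap((0, 0), (0, 1), width)  # neighbours in the same row
vertical_gap = sequence_gap((0, 0), (1, 0), width)    # neighbours in the same column
```

Under this assumption, horizontally adjacent pixels stay adjacent in the sequence, but vertically adjacent pixels end up a full image width apart, and the last pixel of one row sits directly next to the first pixel of the following row.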

Multi-scale HSV Color Feature Embedding for High-fidelity NIR-to-RGB Spectrum Translation

Apr 25, 2024

Abstract:The NIR-to-RGB spectral domain translation is a formidable task due to the inherent spectral mapping ambiguities between NIR inputs and RGB outputs. As a result, existing methods fail to reconcile the tension between maintaining texture detail fidelity and achieving diverse color variations. In this paper, we propose a Multi-scale HSV Color Feature Embedding Network (MCFNet) that decomposes the mapping process into three sub-tasks: NIR texture maintenance, coarse geometry reconstruction, and RGB color prediction. Accordingly, we propose three key modules, one for each sub-task: the Texture Preserving Block (TPB), the HSV Color Feature Embedding Module (HSV-CFEM), and the Geometry Reconstruction Module (GRM). These modules allow MCFNet to tackle spectral translation methodically through a series of escalating resolutions, progressively enriching images with color and texture fidelity in a scale-coherent fashion. The proposed MCFNet demonstrates substantial performance gains on the NIR image colorization task. Code is released at: https://github.com/AlexYangxx/MCFNet.

Multi-scale Progressive Feature Embedding for Accurate NIR-to-RGB Spectral Domain Translation

Dec 26, 2023

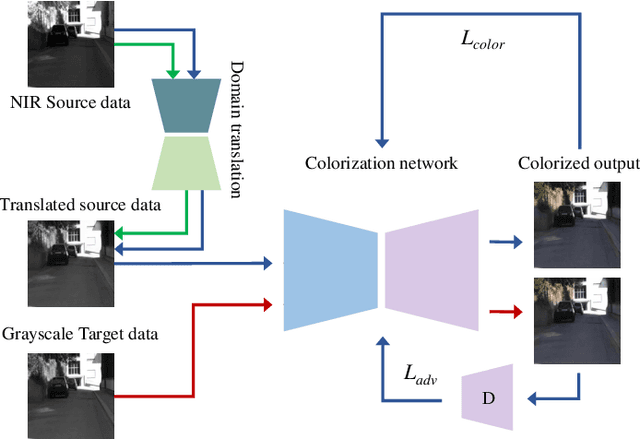

Abstract:NIR-to-RGB spectral domain translation is a challenging task due to mapping ambiguities, and existing methods show limited learning capacities. To address these challenges, we propose to colorize NIR images via a multi-scale progressive feature embedding network (MPFNet), with the guidance of grayscale image colorization. Specifically, we first introduce a domain translation module that translates NIR source images into the grayscale target domain. By incorporating a progressive training strategy, the statistical and semantic knowledge from both task domains is efficiently aligned with a series of pixel- and feature-level consistency constraints. In addition, a multi-scale progressive feature embedding network is designed to improve learning capabilities. Experiments show that our MPFNet outperforms state-of-the-art counterparts by 2.55 dB in the NIR-to-RGB spectral domain translation task in terms of PSNR.
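
Since several of the abstracts on this page report gains in PSNR, a brief sketch of the metric may help in reading those numbers. It assumes 8-bit images (peak value 255) passed as flat sequences of pixel values; the function name and signature are illustrative and not taken from any of the papers' code.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Because PSNR is logarithmic in the mean squared error, a gain of d dB
# corresponds to dividing the MSE by 10 ** (d / 10).
mse_reduction_factor = 10 ** (2.55 / 10)
```

By this relation, a 2.55 dB gain corresponds to a mean squared error roughly 1.8 times smaller at the same peak value.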

Hyperspectral Image Reconstruction via Combinatorial Embedding of Cross-Channel Spatio-Spectral Clues

Dec 18, 2023

Abstract:Existing learning-based hyperspectral reconstruction methods show limitations in fully exploiting the information among the hyperspectral bands. As such, we propose to investigate the chromatic inter-dependencies in their respective hyperspectral embedding space. These embedded features can be fully exploited by querying the inter-channel correlations in a combinatorial manner, with the unique and complementary information efficiently fused into the final prediction. We found that such independent modeling and combinatorial excavation mechanisms are extremely beneficial for uncovering marginal spectral features, especially in the long-wavelength bands. In addition, we propose a spatio-spectral attention block and a spectrum-fusion attention module, which greatly facilitate the excavation and fusion of information at both semantically long-range levels and fine-grained pixel levels across all dimensions. Extensive quantitative and qualitative experiments show that our method (dubbed CESST) achieves SOTA performance. Code for this project is at: https://github.com/AlexYangxx/CESST.

Cooperative Colorization: Exploring Latent Cross-Domain Priors for NIR Image Spectrum Translation

Aug 07, 2023

Abstract:Near-infrared (NIR) image spectrum translation is a challenging problem with many promising applications. Existing methods struggle with the mapping ambiguity between the NIR and the RGB domains, and generalize poorly due to the limitations of models' learning capabilities and the unavailability of sufficient NIR-RGB image pairs for training. To address these challenges, we propose a cooperative learning paradigm, dubbed CoColor, that colorizes NIR images in parallel with another proxy grayscale colorization task by exploring latent cross-domain priors (i.e., latent spectrum context priors and task domain priors). The complementary statistical and semantic spectrum information from these two task domains, in the form of pre-trained colorization networks, is brought in as task domain priors. A bilateral domain translation module is subsequently designed, in which intermittent NIR images are generated from grayscale and colorized in parallel with authentic NIR images, and vice versa for the grayscale images. These intermittent transformations act as latent spectrum context priors for efficient domain knowledge exchange. We progressively fine-tune and fuse these modules with a series of pixel-level and feature-level consistency constraints. Experiments show that our proposed cooperative learning framework produces satisfactory spectrum translation outputs with diverse colors and rich textures, and outperforms state-of-the-art counterparts by 3.95 dB and 4.66 dB in terms of PSNR for the NIR and grayscale colorization tasks, respectively.

Attention-Guided NIR Image Colorization via Adaptive Fusion of Semantic and Texture Clues

Jul 20, 2021

Abstract:Near-infrared (NIR) imaging has been widely applied in low-light imaging scenarios; however, it is difficult for humans and algorithms to perceive the real scene in the colorless NIR domain. While Generative Adversarial Networks (GANs) have been widely employed in various image colorization tasks, it is challenging for a direct mapping mechanism, such as a conventional GAN, to transform an image from the NIR to the RGB domain with correct semantic reasoning, well-preserved textures, and vivid color combinations concurrently. In this work, we propose a novel Attention-based NIR image colorization framework via Adaptive Fusion of Semantic and Texture clues, aiming at achieving these goals within the same framework. The tasks of texture transfer and semantic reasoning are carried out in two separate network blocks. Specifically, the Texture Transfer Block (TTB) aims at extracting texture features from the NIR image's Laplacian component and transferring them for subsequent color fusion. The Semantic Reasoning Block (SRB) extracts semantic clues and maps the NIR pixel values to the RGB domain. Finally, a Fusion Attention Block (FAB) is proposed to adaptively fuse the features from the two branches and generate an optimized colorization result. To enhance the network's learning capacity in semantic reasoning as well as its mapping precision in texture transfer, we propose the Residual Coordinate Attention Block (RCAB), which incorporates coordinate attention into a residual learning framework, enabling the network to capture long-range dependencies along the channel direction while preserving precise positional information along the spatial directions. RCAB is also incorporated into FAB to facilitate accurate texture alignment during fusion. Both quantitative and qualitative evaluations show that the proposed method outperforms state-of-the-art NIR image colorization methods.
