Xilu Wang

OWLed: Outlier-weighed Layerwise Pruning for Efficient Autonomous Driving Framework

Nov 12, 2024

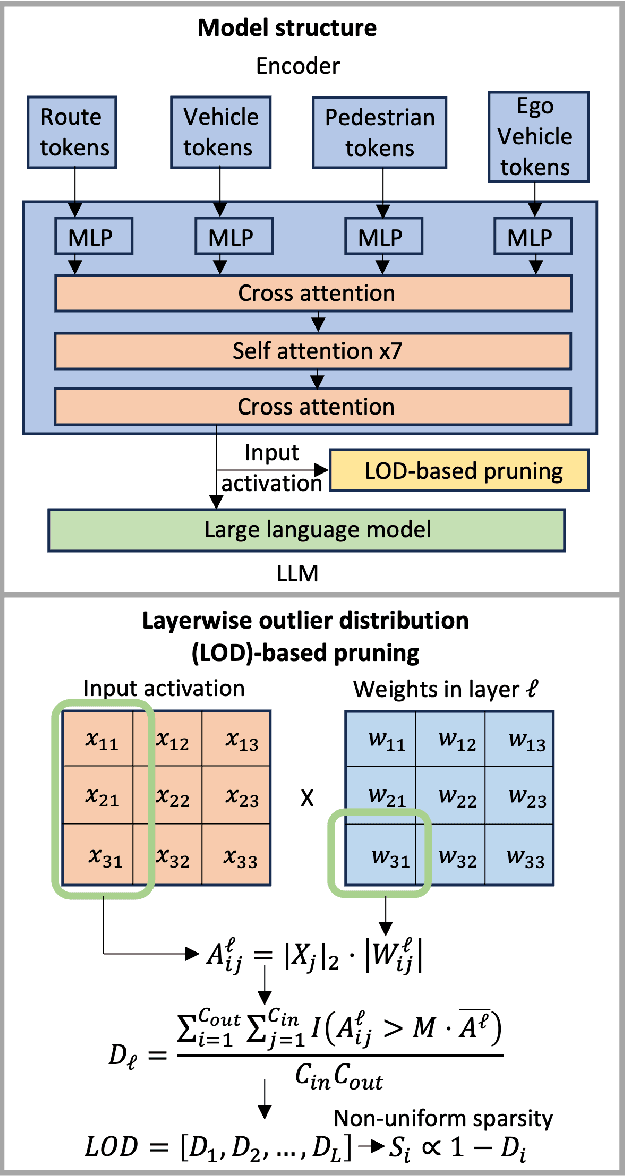

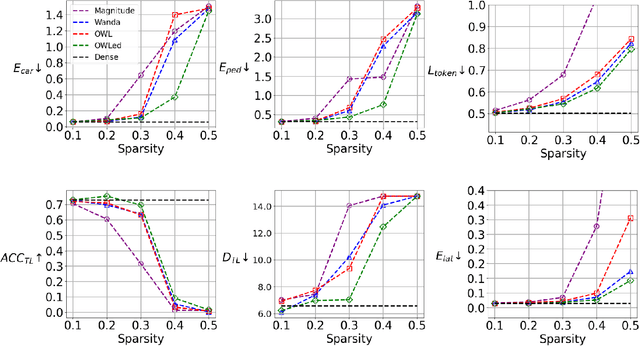

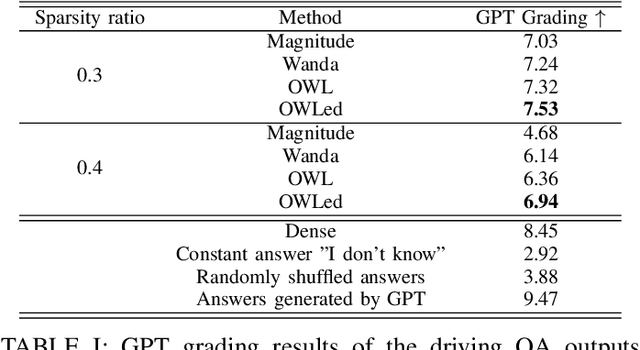

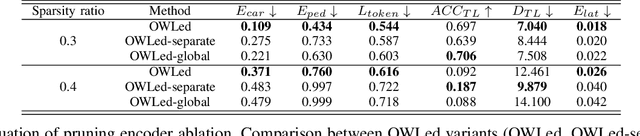

Abstract: The integration of Large Language Models (LLMs) into autonomous driving systems offers promising enhancements in environmental understanding and decision-making. However, the substantial computational demands of deploying LLMs locally on vehicles render this approach infeasible for real-world automotive applications. To address this challenge, we introduce OWLed, the Outlier-Weighed Layerwise Pruning for Efficient Autonomous Driving Framework, which leverages outlier-weighted layerwise sparsity for model compression. Our method assigns non-uniform sparsity ratios to different layers based on the distribution of outlier features, significantly reducing the model size without the need for fine-tuning. To ensure the compressed model adapts well to autonomous driving tasks, we incorporate driving environment data into both the calibration and pruning processes. Our empirical studies reveal that the encoder component is more sensitive to pruning than the LLM, highlighting its critical role in the system. Experimental results demonstrate that OWLed outperforms existing methods in perception, action prediction, and language understanding while substantially lowering computational requirements. These findings underscore the potential of combining advanced pruning techniques with LLMs to develop efficient and robust autonomous driving systems capable of handling complex scenarios. Code will be made publicly available.
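
The idea of assigning non-uniform sparsity ratios from the distribution of outlier features can be illustrated with a small sketch. The abstract does not give the scoring rule, so everything below is an assumption: the outlier score is taken as the weight magnitude times the input-activation norm, an entry counts as an outlier when its score exceeds `m` times the layer mean, and `lam` controls how far layers may deviate from the target sparsity. The function names and default values are hypothetical and are not the authors' implementation.

```python
import numpy as np


def outlier_ratio(weight, act_norm, m=5.0):
    """Fraction of entries whose score exceeds m times the layer's mean score.

    Assumed score: |W_ij| * ||X_j||, where act_norm[j] is the norm of the
    j-th input feature over a calibration set (hypothetical choice).
    """
    score = np.abs(weight) * act_norm[np.newaxis, :]
    return float(np.mean(score > m * score.mean()))


def layerwise_sparsity(ratios, target=0.5, lam=0.08):
    """Turn per-layer outlier ratios into per-layer sparsity ratios.

    Layers with more outliers are pruned less. The result has mean equal to
    `target` and a max-min spread of 2 * lam.
    """
    r = np.asarray(ratios, dtype=float)
    spread = r.max() - r.min()
    if spread == 0:
        # No layer stands out, so fall back to uniform sparsity.
        return np.full_like(r, target)
    scaled = (r - r.min()) / spread * 2.0 * lam  # rescale to [0, 2 * lam]
    keep = scaled - scaled.mean() + (1.0 - target)  # mean keep ratio = 1 - target
    return 1.0 - keep


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ratios = []
    for _ in range(4):
        w = rng.normal(size=(16, 32))
        norms = np.abs(rng.normal(size=32)) + 0.1
        ratios.append(outlier_ratio(w, norms, m=3.0))
    print(layerwise_sparsity(ratios, target=0.5, lam=0.08))
```

In a real pipeline the activation norms would come from calibration data, which the abstract says is drawn from the driving environment, and the per-layer ratios would then be passed to whatever pruning criterion is in use.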

Alleviating Search Bias in Bayesian Evolutionary Optimization with Many Heterogeneous Objectives

Aug 25, 2022

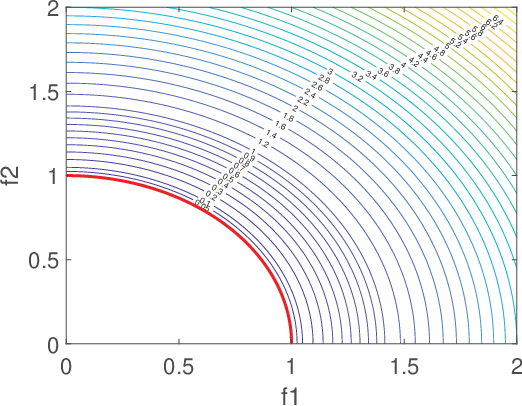

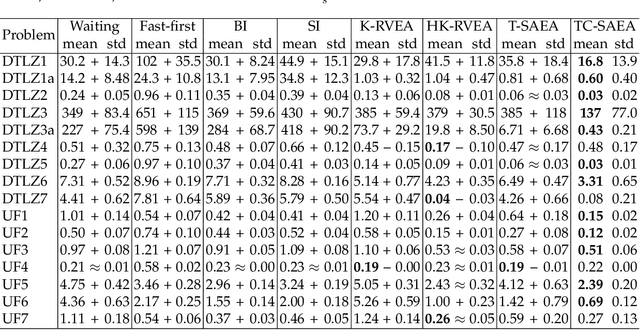

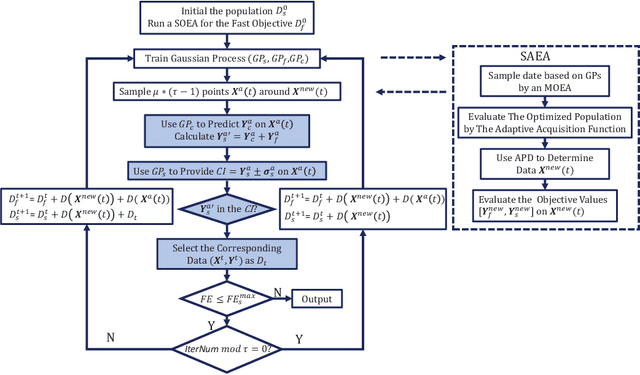

Abstract: Multi-objective optimization problems whose objectives have different evaluation costs are commonly seen in the real world. Such problems are now known as multi-objective optimization problems with heterogeneous objectives (HE-MOPs). So far, however, only a few studies have been reported to address HE-MOPs, and most of them focus on bi-objective problems with one fast objective and one slow objective. In this work, we aim to deal with HE-MOPs having more than two black-box and heterogeneous objectives. To this end, we develop a multi-objective Bayesian evolutionary optimization approach to HE-MOPs that exploits the different data sets available for the cheap and expensive objectives to alleviate the search bias caused by the heterogeneous evaluation costs of the objectives. To make the best use of two different training data sets, one with solutions evaluated on all objectives and the other with those only evaluated on the fast objectives, two separate Gaussian process models are constructed. In addition, a new acquisition function that mitigates search bias towards the fast objectives is suggested, thereby achieving a balance between convergence and diversity. We demonstrate the effectiveness of the proposed algorithm by testing it on widely used multi-/many-objective benchmark problems whose objectives are assumed to be heterogeneously expensive.

Recent Advances in Bayesian Optimization

Jun 07, 2022

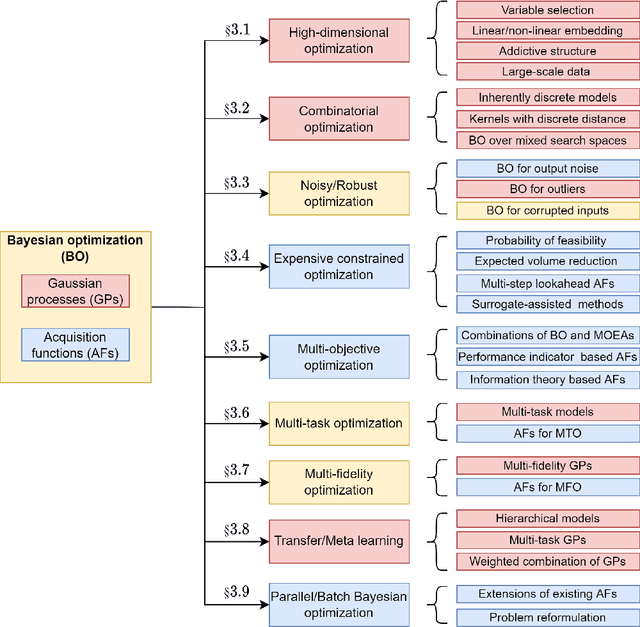

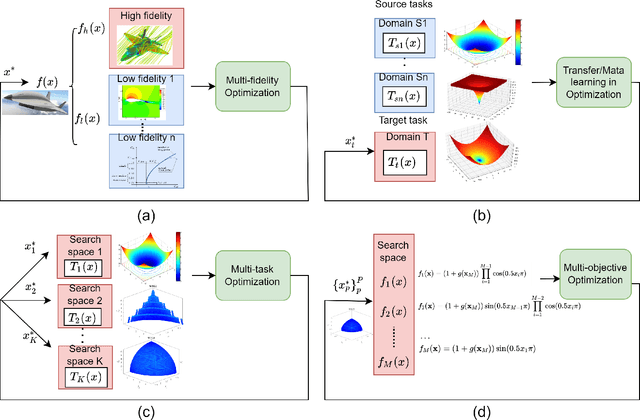

Abstract: Bayesian optimization has emerged at the forefront of expensive black-box optimization due to its data efficiency. Recent years have witnessed a proliferation of studies on the development of new Bayesian optimization algorithms and their applications. Hence, this paper attempts to provide a comprehensive and updated survey of recent advances in Bayesian optimization and identify interesting open problems. We categorize the existing work on Bayesian optimization into nine main groups according to the motivations and focus of the proposed algorithms. For each category, we present the main advances with respect to the construction of surrogate models and adaptation of the acquisition functions. Finally, we discuss the open questions and suggest promising future research directions, in particular with regard to heterogeneity, privacy preservation, and fairness in distributed and federated optimization systems.
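
For readers new to the two building blocks named above, the surrogate model and the acquisition function, the following is a minimal sketch of one common pairing: a Gaussian process with an RBF kernel and the expected improvement criterion for minimization. It is a generic textbook illustration rather than any specific algorithm from the survey; the kernel, the unit prior variance, the `length` and `noise` values, and the function names are all assumptions made for the example.

```python
import math

import numpy as np


def rbf(a, b, length=1.0):
    """Squared-exponential kernel between two 1-D arrays of inputs."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length) ** 2)


def gp_posterior(x_train, y_train, x_query, length=1.0, noise=1e-6):
    """Posterior mean and standard deviation of a zero-mean GP surrogate."""
    k = rbf(x_train, x_train, length) + noise * np.eye(len(x_train))
    k_s = rbf(x_train, x_query, length)
    mean = k_s.T @ np.linalg.solve(k, y_train)
    var = 1.0 - np.sum(k_s * np.linalg.solve(k, k_s), axis=0)
    return mean, np.sqrt(np.maximum(var, 1e-12))


def expected_improvement(mean, std, best):
    """Expected improvement over the best observed value (minimization)."""
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + np.array([math.erf(v / math.sqrt(2.0)) for v in z]))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return (best - mean) * cdf + std * pdf


if __name__ == "__main__":
    x_train = np.array([0.0, 1.0, 2.0])
    y_train = np.array([1.0, 0.2, 0.9])
    x_query = np.linspace(0.0, 2.0, 21)
    mean, std = gp_posterior(x_train, y_train, x_query)
    ei = expected_improvement(mean, std, y_train.min())
    # The next point to evaluate is the query with the largest acquisition value.
    print(x_query[np.argmax(ei)])
```

One iteration of Bayesian optimization then evaluates the expensive objective at the selected point, appends the result to the training data, and refits the surrogate.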

Transfer Learning Based Co-surrogate Assisted Evolutionary Bi-objective Optimization for Objectives with Non-uniform Evaluation Times

Aug 30, 2021

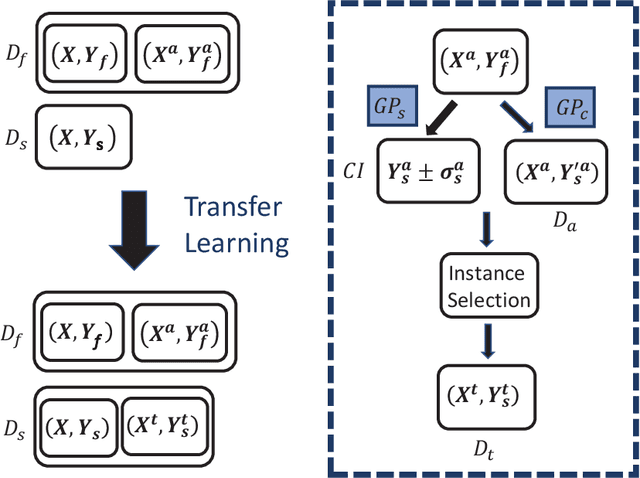

Abstract: Most existing multiobjective evolutionary algorithms (MOEAs) implicitly assume that each objective function can be evaluated within the same period of time. Typically, this is untenable in many real-world optimization scenarios where evaluation of different objectives involves different computer simulations or physical experiments with distinct time complexity. To address this issue, a transfer learning scheme based on surrogate-assisted evolutionary algorithms (SAEAs) is proposed, in which a co-surrogate is adopted to model the functional relationship between the fast and slow objective functions and a transferable instance selection method is introduced to acquire useful knowledge from the search process of the fast objective. Our experimental results on the DTLZ and UF test suites demonstrate that the proposed algorithm is competitive for solving bi-objective optimization problems where objectives have non-uniform evaluation times.

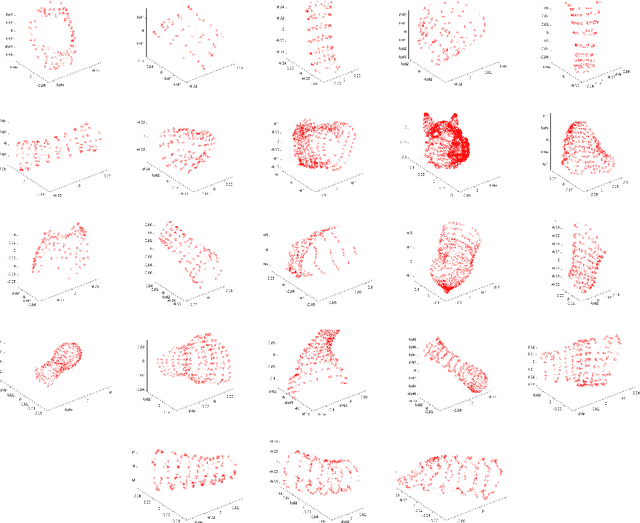

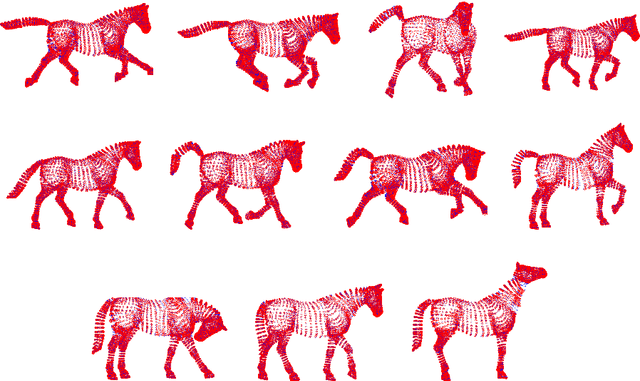

Learning pose variations within shape population by constrained mixtures of factor analyzers

Jun 07, 2020

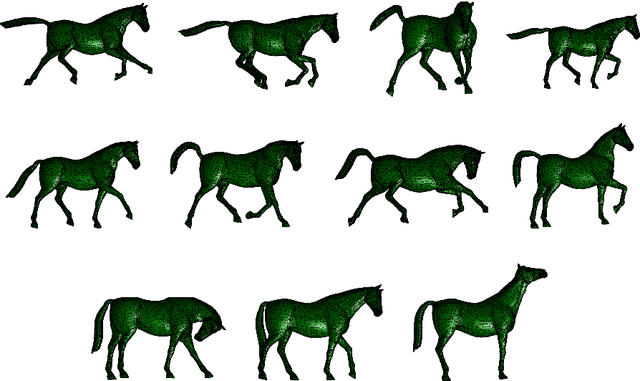

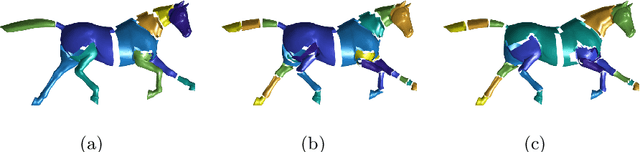

Abstract: Mining and learning the shape variability of an underlying population has benefited applications including parametric shape modeling, 3D animation, and image segmentation. Current statistical shape modeling methods work well for learning unstructured shape variations without obvious pose changes (relative rotations of the body parts). Studying the pose variations within a shape population involves segmenting the shapes into different articulated parts and learning the transformations of the segmented parts. This paper formulates the pose learning problem as mixtures of factor analyzers. The segmentation is obtained from the components' posterior probabilities, and the rotations in pose variations are learned by the factor loading matrices. To guarantee that the factor loading matrices are composed of rotation matrices, constraints are imposed and the corresponding closed-form optimal solution is derived. Based on the proposed method, the pose variations are automatically learned from the given shape populations. The method is applied in motion animation, where new poses are generated by interpolating the existing poses in the training set. The obtained results are smooth and realistic.
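
As background for the formulation mentioned above, the standard mixture of factor analyzers can be written as follows. This is the generic textbook model, not the paper's exact construction: the symbols $\pi_k$, $\mu_k$, $\Lambda_k$, $\Psi_k$, and $r_k$ are conventional notation assumed here, and how the paper arranges rotation matrices inside each loading matrix is not stated in the abstract.

```latex
% Generative model for component k with latent factor z
x = \mu_k + \Lambda_k z + \epsilon, \qquad
z \sim \mathcal{N}(0, I), \qquad
\epsilon \sim \mathcal{N}(0, \Psi_k)

% Marginal density of an observation
p(x) = \sum_{k=1}^{K} \pi_k \,
       \mathcal{N}\!\left(x \mid \mu_k,\; \Lambda_k \Lambda_k^{\top} + \Psi_k\right)

% Posterior probability of component k (used here for segmentation)
r_k(x) = \frac{\pi_k \, \mathcal{N}\!\left(x \mid \mu_k,\; \Lambda_k \Lambda_k^{\top} + \Psi_k\right)}
              {\sum_{j=1}^{K} \pi_j \, \mathcal{N}\!\left(x \mid \mu_j,\; \Lambda_j \Lambda_j^{\top} + \Psi_j\right)}

% A rotation matrix R, from which the loading matrices are constrained to be built
R^{\top} R = I, \qquad \det R = 1
```

In this reading, each point is assigned to the articulated part with the largest $r_k$, and the constraint in the last line is what restricts the learned loadings to rotations.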
