Xiaoxiao Wen

Part-aware Panoptic Segmentation

Jun 11, 2021

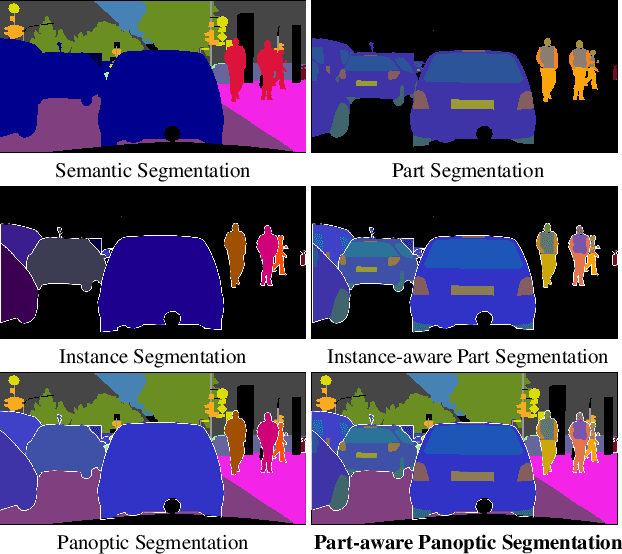

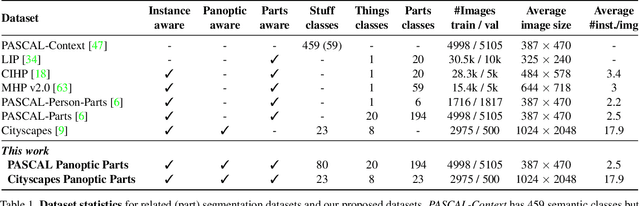

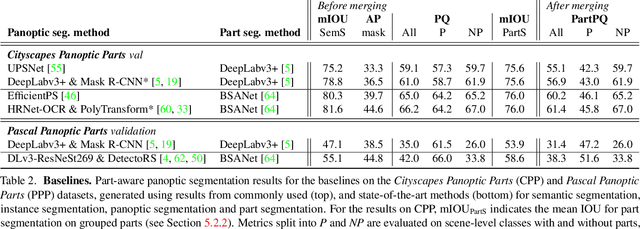

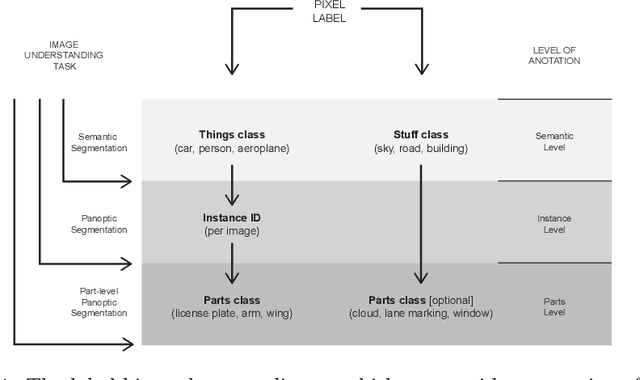

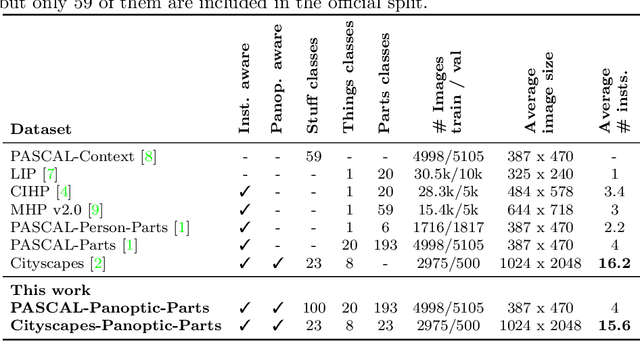

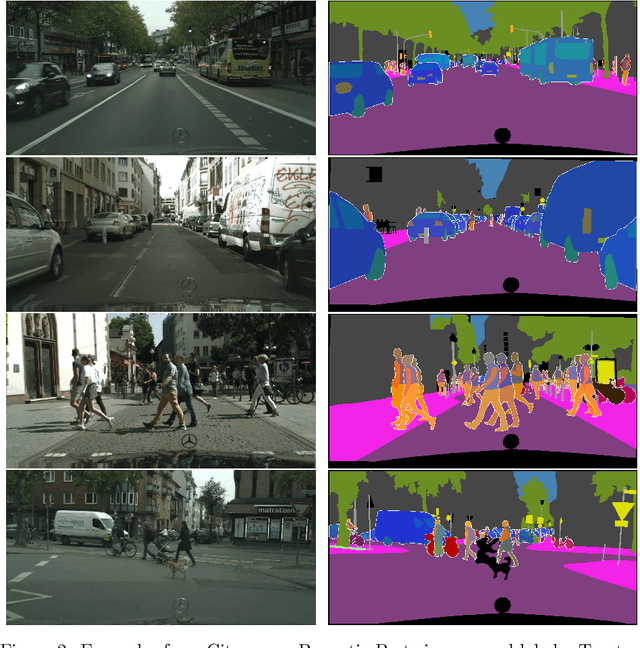

Abstract: In this work, we introduce the new scene understanding task of Part-aware Panoptic Segmentation (PPS), which aims to understand a scene at multiple levels of abstraction and unifies the tasks of scene parsing and part parsing. For this novel task, we provide consistent annotations on two commonly used datasets: Cityscapes and Pascal VOC. Moreover, we present a single metric to evaluate PPS, called Part-aware Panoptic Quality (PartPQ). Using this metric and these annotations, we set multiple baselines for the new task by merging the results of existing state-of-the-art methods for panoptic segmentation and part segmentation. Finally, we conduct several experiments that evaluate the importance of the different levels of abstraction in this single task.

Cityscapes-Panoptic-Parts and PASCAL-Panoptic-Parts datasets for Scene Understanding

Apr 16, 2020

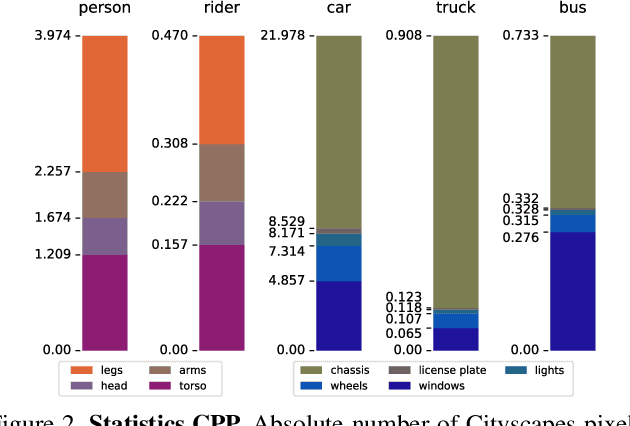

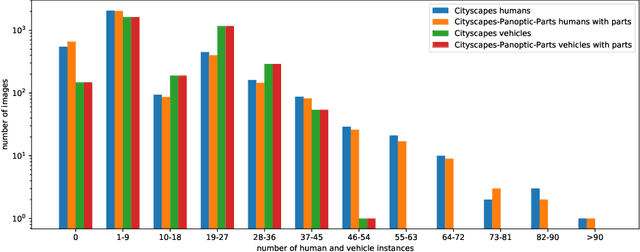

Abstract: In this technical report, we present two novel datasets for image scene understanding. Both datasets have annotations compatible with panoptic segmentation, and they additionally provide part-level labels for selected semantic classes. This report describes the format of the two datasets, the annotation protocols, and the merging strategies, and presents the dataset statistics. The dataset labels, together with code for processing and visualization, will be published at https://github.com/tue-mps/panoptic_parts.
