Xiaoliang Liu

CRAVE: A Conflicting Reasoning Approach for Explainable Claim Verification Using LLMs

Apr 21, 2025

Abstract:The rapid spread of misinformation, driven by digital media and AI-generated content, has made automatic claim verification essential. Traditional methods, which depend on expert-annotated evidence, are labor-intensive and not scalable. Although recent automated systems have improved, they still struggle with complex claims that require nuanced reasoning. To address this, we propose CRAVE, a Conflicting Reasoning Approach for explainable claim VErification, which verifies complex claims based on conflicting rationales reasoned by large language models (LLMs). Specifically, CRAVE introduces a three-module framework. The Ambiguity Elimination-enhanced Evidence Retrieval module performs ambiguity elimination and entity-based search to gather evidence relevant to claim verification from external sources such as Wikipedia. The Conflicting Perspective Reasoning and Preliminary Judgment module uses LLMs to reason rationales with conflicting stances on the claim from the retrieved evidence across four dimensions (direct evidence, semantic relationships, linguistic patterns, and logical reasoning) and to make a preliminary judgment. Finally, the Small Language Model (SLM)-based Judge module is fine-tuned to use the preliminary judgment from the LLMs to assess the confidence of the conflicting rationales and make a final authenticity judgment. This methodology allows CRAVE to capture subtle inconsistencies in complex claims, improving both the accuracy and transparency of claim verification. Extensive experiments on two public claim verification datasets demonstrate that our CRAVE model achieves much better performance than state-of-the-art methods and exhibits a superior capacity for finding relevant evidence and explaining the model predictions. The code is provided at https://github.com/8zym/CRAVE.

Region-Guided Attack on the Segment Anything Model (SAM)

Nov 05, 2024

Abstract:The Segment Anything Model (SAM) is a cornerstone of image segmentation, demonstrating exceptional performance across various applications, particularly in autonomous driving and medical imaging, where precise segmentation is crucial. However, SAM is vulnerable to adversarial attacks that can significantly impair its functionality through minor input perturbations. Traditional techniques, such as FGSM and PGD, are often ineffective in segmentation tasks due to their reliance on global perturbations that overlook spatial nuances. Recent methods like Attack-SAM-K and UAD have begun to address these challenges, but they frequently depend on external cues and do not fully leverage the structural interdependencies within segmentation processes. This limitation underscores the need for a novel adversarial strategy that exploits the unique characteristics of segmentation tasks. In response, we introduce the Region-Guided Attack (RGA), designed specifically for SAM. RGA utilizes a Region-Guided Map (RGM) to manipulate segmented regions, enabling targeted perturbations that fragment large segments and expand smaller ones, resulting in erroneous outputs from SAM. Our experiments demonstrate that RGA achieves high success rates in both white-box and black-box scenarios, emphasizing the need for robust defenses against such sophisticated attacks. RGA not only reveals SAM's vulnerabilities but also lays the groundwork for developing more resilient defenses against adversarial threats in image segmentation.

RADAP: A Robust and Adaptive Defense Against Diverse Adversarial Patches on Face Recognition

Nov 29, 2023

Abstract:Face recognition (FR) systems powered by deep learning have become widely used in various applications. However, they are vulnerable to adversarial attacks, especially those based on local adversarial patches that can be physically applied to real-world objects. In this paper, we propose RADAP, a robust and adaptive defense mechanism against diverse adversarial patches in both closed-set and open-set FR systems. RADAP employs innovative techniques, such as FCutout and F-patch, which use Fourier space sampling masks to improve the occlusion robustness of the FR model and the performance of the patch segmenter. Moreover, we introduce an edge-aware binary cross-entropy (EBCE) loss function to enhance the accuracy of patch detection. We also present the split and fill (SAF) strategy, which is designed to counter the vulnerability of the patch segmenter to complete white-box adaptive attacks. We conduct comprehensive experiments to validate the effectiveness of RADAP, which shows significant improvements in defense performance against various adversarial patches, while maintaining clean accuracy higher than that of the undefended Vanilla model.

NeRFTAP: Enhancing Transferability of Adversarial Patches on Face Recognition using Neural Radiance Fields

Nov 29, 2023

Abstract:Face recognition (FR) technology plays a crucial role in various applications, but its vulnerability to adversarial attacks poses significant security concerns. Existing research primarily focuses on transferability to different FR models, overlooking direct transferability to the victim's face images, which is a practical threat in real-world scenarios. In this study, we propose a novel adversarial attack method, called NeRFTAP, that considers transferability to both the FR model and the victim's face image. Leveraging a NeRF-based 3D-GAN, we generate novel-view face images for the source and target subjects to enhance the transferability of adversarial patches. We introduce a style consistency loss to ensure visual similarity between the adversarial UV map and the target UV map under a 0-1 mask, enhancing the effectiveness and naturalness of the generated adversarial face images. Extensive experiments and evaluations on various FR models demonstrate the superiority of our approach over existing attack techniques. Our work provides valuable insights for enhancing the robustness of FR systems in practical adversarial settings.

AugRmixAT: A Data Processing and Training Method for Improving Multiple Robustness and Generalization Performance

Jul 21, 2022

Abstract:Deep neural networks are powerful, but they also have shortcomings such as sensitivity to adversarial examples, noise, blur, and occlusion. Ensuring the reliability and robustness of deep neural network models is therefore crucial for their application in safety-critical areas. Many previous methods have been proposed to improve a specific kind of robustness. However, we find that a specific kind of robustness is often improved at the expense of other kinds of robustness or of the generalization ability of the neural network model. In particular, adversarial training methods significantly hurt generalization performance on unperturbed data while improving adversarial robustness. In this paper, we propose a new data processing and training method, called AugRmixAT, which can simultaneously improve the generalization ability and multiple kinds of robustness of neural network models. Finally, we validate the effectiveness of AugRmixAT on the CIFAR-10/100 and Tiny-ImageNet datasets. The experiments demonstrate that AugRmixAT can improve the model's generalization performance while enhancing white-box robustness, black-box robustness, common corruption robustness, and partial occlusion robustness.

RSTAM: An Effective Black-Box Impersonation Attack on Face Recognition using a Mobile and Compact Printer

Jun 25, 2022

Abstract:Face recognition has achieved considerable progress in recent years thanks to the development of deep neural networks, but it has recently been discovered that deep neural networks are vulnerable to adversarial examples. This means that face recognition models or systems based on deep neural networks are also susceptible to adversarial examples. However, the existing methods of attacking face recognition models or systems with adversarial examples can effectively complete white-box attacks but not black-box impersonation attacks, physical attacks, or convenient attacks, particularly on commercial face recognition systems. In this paper, we propose a new method to attack face recognition models or systems called RSTAM, which enables an effective black-box impersonation attack using an adversarial mask printed by a mobile and compact printer. First, RSTAM enhances the transferability of the adversarial masks through our proposed random similarity transformation strategy. Furthermore, we propose a random meta-optimization strategy for ensembling several pre-trained face models to generate more general adversarial masks. Finally, we conduct experiments on the CelebA-HQ, LFW, Makeup Transfer (MT), and CASIA-FaceV5 datasets. The performance of the attacks is also evaluated on state-of-the-art commercial face recognition systems: Face++, Baidu, Aliyun, Tencent, and Microsoft. Extensive experiments show that RSTAM can effectively perform black-box impersonation attacks on face recognition models or systems.
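
The abstract names a random similarity transformation strategy without giving its details. As a rough illustration only, a similarity transformation is commonly understood as a rotation, a uniform scaling, and a translation; the sketch below samples one at random and applies it to 2-D points. The function names and the parameter ranges (`max_angle_deg`, `scale_range`, `max_shift`) are assumptions made for this example and are not taken from the paper.

```python
import math
import random


def random_similarity_matrix(rng, max_angle_deg=10.0,
                             scale_range=(0.9, 1.1), max_shift=0.05):
    """Sample a 2x3 similarity transform (rotation, uniform scale, shift).

    All ranges are hypothetical defaults chosen for illustration.
    """
    theta = math.radians(rng.uniform(-max_angle_deg, max_angle_deg))
    scale = rng.uniform(*scale_range)
    tx = rng.uniform(-max_shift, max_shift)
    ty = rng.uniform(-max_shift, max_shift)
    cos_t = scale * math.cos(theta)
    sin_t = scale * math.sin(theta)
    return [[cos_t, -sin_t, tx],
            [sin_t, cos_t, ty]]


def apply_transform(matrix, point):
    """Apply a 2x3 affine matrix to an (x, y) point."""
    x, y = point
    (a, b, tx), (c, d, ty) = matrix
    return (a * x + b * y + tx, c * x + d * y + ty)


if __name__ == "__main__":
    rng = random.Random(0)
    m = random_similarity_matrix(rng)
    # Corners of a unit square standing in for mask coordinates.
    for corner in [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]:
        print(corner, "->", apply_transform(m, corner))
```

In an attack pipeline of this kind, a fresh transform would presumably be sampled at each optimization step so that the mask does not overfit to one exact placement; that usage is likewise an assumption here.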

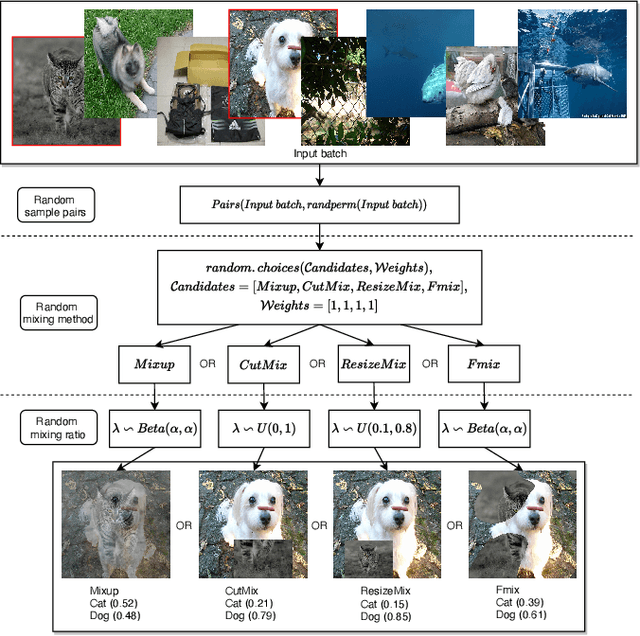

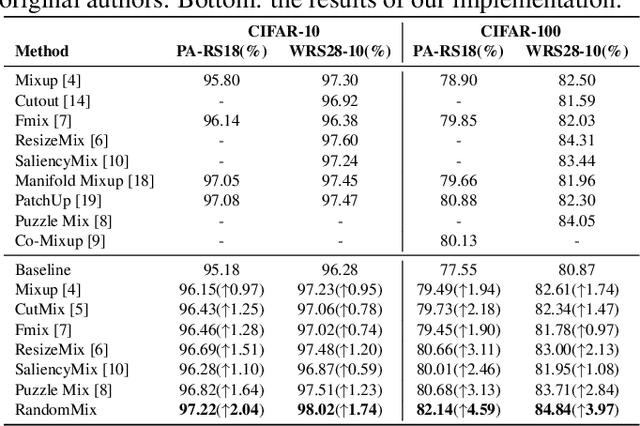

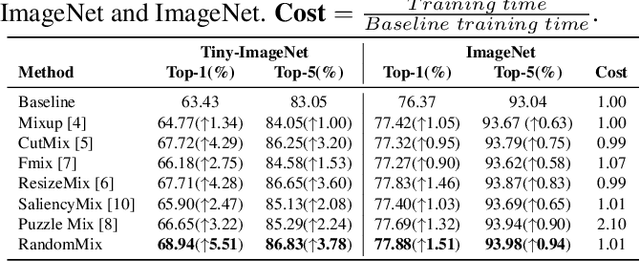

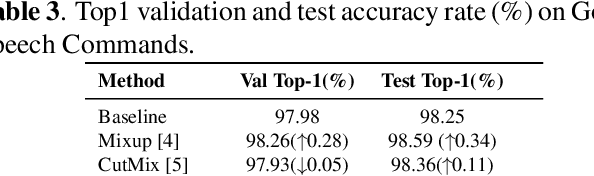

RandomMix: A mixed sample data augmentation method with multiple mixed modes

May 18, 2022

Abstract:Data augmentation is a practical technique for improving the generalization ability of neural networks and preventing overfitting. Recently, mixed sample data augmentation has received a lot of attention and achieved great success. To enhance the performance of mixed sample data augmentation, a series of recent works obtain and analyze the salient regions of the image and use them to guide the image mixing. However, obtaining the salient information of an image requires a lot of extra computation. Rather than improving performance through saliency analysis, our proposed method, RandomMix, mainly increases the diversity of the mixed samples to enhance the generalization ability and performance of neural networks. Moreover, RandomMix can improve the robustness of the model, does not require much additional computation, and is easy to insert into the training pipeline. Finally, experiments on the CIFAR-10/100, Tiny-ImageNet, ImageNet, and Google Speech Commands datasets demonstrate that RandomMix achieves better performance than other state-of-the-art mixed sample data augmentation methods.
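
The idea of choosing among several mixing modes at random can be sketched as follows. The abstract does not list the modes, so this example assumes two well-known ones, mixup (pixel-wise blending) and CutMix (pasting a rectangle), as stand-ins; the function names, the Beta(1, 1) sampling of the mixing ratio, and the use of nested lists as images are all assumptions for illustration, not the authors' implementation.

```python
import random


def mixup(img_a, img_b, lam):
    """Blend two equally sized 2-D images pixel by pixel."""
    return [[lam * pa + (1 - lam) * pb for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(img_a, img_b)]


def cutmix(img_a, img_b, lam, rng):
    """Paste a rectangle of img_b into img_a.

    Returns the mixed image and the effective share of img_a that remains.
    """
    height, width = len(img_a), len(img_a[0])
    cut = (1 - lam) ** 0.5
    cut_h, cut_w = int(height * cut), int(width * cut)
    top = rng.randint(0, height - cut_h)
    left = rng.randint(0, width - cut_w)
    out = [row[:] for row in img_a]
    for i in range(top, top + cut_h):
        out[i][left:left + cut_w] = img_b[i][left:left + cut_w]
    return out, 1 - (cut_h * cut_w) / (height * width)


def random_mix(img_a, img_b, label_a, label_b, rng=random):
    """Pick a mixing mode at random and mix both images and labels."""
    lam = rng.betavariate(1.0, 1.0)           # assumed mixing-ratio prior
    mode = rng.choice(["mixup", "cutmix"])    # assumed candidate modes
    if mode == "mixup":
        mixed = mixup(img_a, img_b, lam)
    else:
        mixed, lam = cutmix(img_a, img_b, lam, rng)
    label = [lam * ya + (1 - lam) * yb for ya, yb in zip(label_a, label_b)]
    return mixed, label, mode


if __name__ == "__main__":
    rng = random.Random(0)
    black = [[0.0] * 4 for _ in range(4)]
    white = [[1.0] * 4 for _ in range(4)]
    mixed, label, mode = random_mix(black, white, [1.0, 0.0], [0.0, 1.0], rng)
    print(mode, label)
```

Because the mode is drawn independently for every call, adding another mode only requires another entry in the candidate list and a matching branch, which is one way the low-overhead, easy-to-insert property described above could be realized.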
