Xiaoli Meng

BOTT: Box Only Transformer Tracker for 3D Object Tracking

Aug 17, 2023

Abstract: Tracking 3D objects is an important task in autonomous driving. Classical Kalman Filtering based methods are still the most popular solutions. However, these methods require handcrafted designs in motion modeling and cannot benefit from the growing amount of data. In this paper, Box Only Transformer Tracker (BOTT) is proposed to learn to link 3D boxes of the same object across different frames, taking all the 3D boxes in a time window as input. Specifically, transformer self-attention is applied to exchange information between all the boxes and learn globally informed box embeddings. The similarity between these learned embeddings can be used to link the boxes of the same object. BOTT can be used seamlessly in both online and offline tracking modes. Its simplicity significantly reduces the engineering effort required by traditional Kalman Filtering based methods. Experiments show BOTT achieves competitive performance on the two largest 3D MOT benchmarks: 69.9 and 66.7 AMOTA on the nuScenes validation and test splits, respectively, and 56.45 and 59.57 MOTA L2 on the Waymo Open Dataset validation and test splits, respectively. This work suggests that tracking 3D objects by learning features directly from 3D boxes using transformers is a simple yet effective approach.
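The linking step described in the abstract (matching boxes whose learned embeddings are similar) can be illustrated with a minimal sketch. It is not the BOTT implementation: the embeddings are assumed to be already computed, and the function name `link_by_similarity`, the use of cosine similarity, the greedy matching, and the `threshold` value are all hypothetical choices made for illustration.

```python
import numpy as np

def link_by_similarity(prev_emb, curr_emb, threshold=0.5):
    """Greedily link current boxes to previous boxes by cosine similarity.

    prev_emb: (n_prev, d) embeddings of boxes in earlier frames (assumed given).
    curr_emb: (n_curr, d) embeddings of boxes in the current frame (assumed given).
    Returns a dict {current_index: previous_index}; unmatched boxes are omitted.
    """
    # Normalise rows so the dot product equals the cosine similarity.
    prev = prev_emb / np.linalg.norm(prev_emb, axis=1, keepdims=True)
    curr = curr_emb / np.linalg.norm(curr_emb, axis=1, keepdims=True)
    sim = curr @ prev.T  # shape (n_curr, n_prev)

    links, used_prev = {}, set()
    # Visit candidate pairs from most to least similar.
    for flat in np.argsort(-sim, axis=None):
        i, j = (int(k) for k in np.unravel_index(flat, sim.shape))
        if sim[i, j] < threshold:
            break  # every remaining pair is even less similar
        if i in links or j in used_prev:
            continue  # each box takes part in at most one link
        links[i] = j
        used_prev.add(j)
    return links

prev_boxes = np.array([[1.0, 0.0], [0.0, 1.0]])
curr_boxes = np.array([[0.0, 1.0], [1.0, 0.0], [-1.0, -1.0]])
links = link_by_similarity(prev_boxes, curr_boxes)
```

In this toy input the third current box is dissimilar to both previous boxes, so it stays unlinked and would start a new track in a full tracker.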

An RLS-Based Instantaneous Velocity Estimator for Extended Radar Tracking

Feb 24, 2020

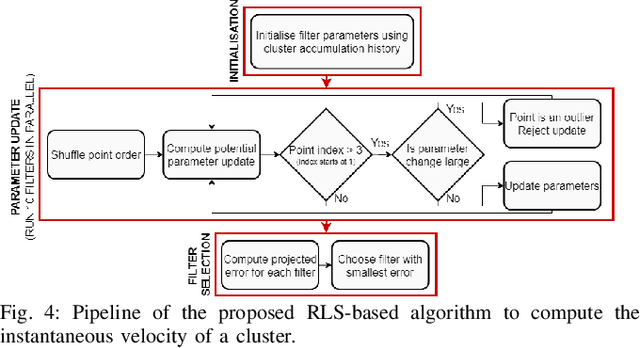

Abstract: Radar sensors have become an important part of the perception sensor suite due to their long range and their ability to work in adverse weather conditions. However, several shortcomings such as large amounts of noise and extreme sparsity of the point cloud result in them not being used to their full potential. In this paper, we present a novel Recursive Least Squares (RLS) based approach to estimate the instantaneous velocity of dynamic objects in real-time that is capable of handling large amounts of noise in the input data stream. We also present an end-to-end pipeline to track extended objects in real-time that uses the computed velocity estimates for data association and track initialisation. The approaches are evaluated using several real-world inspired driving scenarios that test the limits of these algorithms. It is also experimentally proven that our approaches run in real-time with frame execution time not exceeding 30 ms even in dense traffic scenarios, thus allowing for their direct implementation on autonomous vehicles.
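The abstract does not spell out the estimator, so the following is only a generic sketch of how recursive least squares could recover a 2D velocity from radar detections. It assumes a stationary sensor and the common Doppler model in which each detection at azimuth θ reports a radial speed v_r = v_x cos θ + v_y sin θ. The class name, the forgetting factor, and the initial covariance are hypothetical and are not taken from the paper.

```python
import numpy as np

class RLSVelocityEstimator:
    """Textbook RLS with a forgetting factor, applied to an assumed Doppler model."""

    def __init__(self, forgetting=0.98, init_cov=1e3):
        self.v = np.zeros(2)            # current estimate [vx, vy]
        self.P = np.eye(2) * init_cov   # large P means low confidence in the initial guess
        self.lam = forgetting           # values below 1 discount older detections

    def update(self, azimuth, radial_speed):
        # Regressor of the assumed model: v_r = vx*cos(az) + vy*sin(az).
        h = np.array([np.cos(azimuth), np.sin(azimuth)])
        Ph = self.P @ h
        gain = Ph / (self.lam + h @ Ph)
        # Correct the estimate by the gain-weighted prediction error.
        self.v = self.v + gain * (radial_speed - h @ self.v)
        # P is symmetric, so gain * h^T P equals outer(gain, P h).
        self.P = (self.P - np.outer(gain, Ph)) / self.lam
        return self.v

true_velocity = np.array([3.0, -1.0])
estimator = RLSVelocityEstimator()
for az in np.linspace(-0.5, 0.5, 50):
    measured = true_velocity @ np.array([np.cos(az), np.sin(az)])  # noise-free toy data
    estimate = estimator.update(az, measured)
```

Each update costs a handful of 2×2 operations, which is why a recursive formulation suits per-frame, real-time use. A real pipeline would also need to compensate for ego-motion and reject outlier detections, both of which this sketch omits.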
