Xianbo Gao

Generating and Adapting to Diverse Ad-Hoc Cooperation Agents in Hanabi

Apr 30, 2020

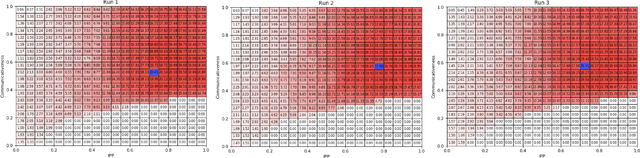

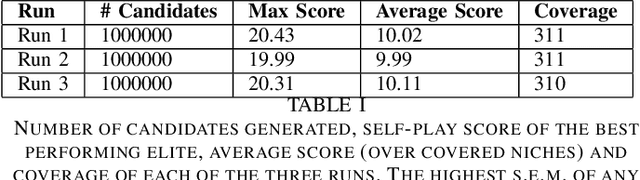

Abstract: Hanabi is a cooperative game that brings the problem of modeling other players to the forefront. In this game, coordinated groups of players can leverage pre-established conventions to great effect, but playing in an ad-hoc setting requires agents to adapt to their partners' strategies with no previous coordination. Evaluating an agent in this setting requires a diverse population of potential partners, but so far, the behavioral diversity of agents has not been considered in a systematic way. This paper proposes Quality Diversity algorithms as a promising class of algorithms to generate diverse populations for this purpose, and generates a population of diverse Hanabi agents using MAP-Elites. We also postulate that agents can benefit from a diverse population during training and implement a simple "meta-strategy" for adapting to an agent's perceived behavioral niche. We show this meta-strategy can work better than generalist strategies even outside the population it was trained with if its partner's behavioral niche can be correctly inferred, but in practice a partner's behavior depends on and interferes with the meta-agent's own behavior, suggesting an avenue for future research in characterizing another agent's behavior during gameplay.

Evaluating the Rainbow DQN Agent in Hanabi with Unseen Partners

Apr 28, 2020

Abstract: Hanabi is a cooperative game that challenges existing AI techniques due to its focus on modeling the mental states of other players to interpret and predict their behavior. While there are agents that can achieve near-perfect scores in the game by agreeing on some shared strategy, comparatively little progress has been made in ad-hoc cooperation settings, where partners and strategies are not known in advance. In this paper, we show that agents trained through self-play using the popular Rainbow DQN architecture fail to cooperate well with simple rule-based agents that were not seen during training and, conversely, when these agents are trained to play with any individual rule-based agent, or even a mix of these agents, they fail to achieve good self-play scores.
