Xavier Gibert

Domain Conditional Predictors for Domain Adaptation

Jun 25, 2021

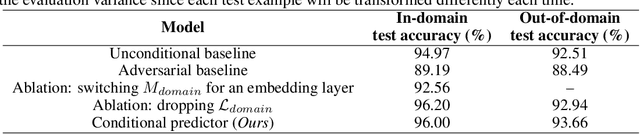

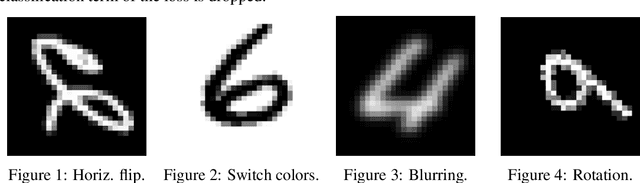

Abstract: Learning guarantees often rely on assumptions of i.i.d. data, which will likely be violated in practice once predictors are deployed to perform real-world tasks. Domain adaptation approaches thus appeared as a useful framework yielding extra flexibility in that distinct train and test data distributions are supported, provided that other assumptions are satisfied such as covariate shift, which expects the conditional distributions over labels to be independent of the underlying data distribution. Several approaches were introduced in order to induce generalization across varying train and test data sources, and those often rely on the general idea of domain-invariance, in such a way that the data-generating distributions are to be disregarded by the prediction model. In this contribution, we tackle the problem of generalizing across data sources by approaching it from the opposite direction: we consider a conditional modeling approach in which predictions, in addition to being dependent on the input data, use information relative to the underlying data-generating distribution. For instance, the model has an explicit mechanism to adapt to changing environments and/or new data sources. We argue that such an approach is more generally applicable than current domain adaptation methods since it does not require extra assumptions such as covariate shift and further yields simpler training algorithms that avoid a common source of training instabilities caused by minimax formulations, often employed in domain-invariant methods.
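
The conditional-modeling idea in the abstract can be illustrated with a toy predictor whose output depends on both the input and a domain identifier. This is only a minimal sketch and not the paper's architecture: the class name `DomainConditionalLinear`, the per-domain scale and shift parameters, and the assumption that the domain label is given explicitly (rather than inferred from the input) are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DomainConditionalLinear:
    """Toy linear predictor conditioned on a domain label (hypothetical)."""

    weights: List[float]            # shared across all domains
    scale: Dict[str, List[float]]   # per-domain feature scaling
    shift: Dict[str, List[float]]   # per-domain feature offset

    def predict(self, x: List[float], domain: str) -> float:
        # Modulate the input features with the domain-specific parameters,
        # then apply the shared weights. The same x can therefore yield
        # different predictions in different domains.
        g, b = self.scale[domain], self.shift[domain]
        adapted = [gi * xi + bi for gi, xi, bi in zip(g, x, b)]
        return sum(w * a for w, a in zip(self.weights, adapted))


if __name__ == "__main__":
    model = DomainConditionalLinear(
        weights=[1.0, 2.0],
        scale={"a": [1.0, 1.0], "b": [2.0, 0.5]},
        shift={"a": [0.0, 0.0], "b": [1.0, 1.0]},
    )
    x = [1.0, 2.0]
    print(model.predict(x, "a"), model.predict(x, "b"))
```

In contrast with a domain-invariant model, nothing here tries to hide the data source from the predictor, so no adversarial (minimax) objective is needed to train it.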

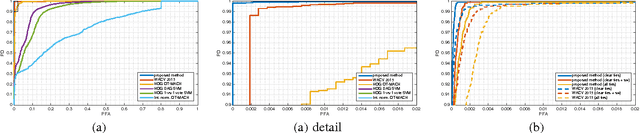

Sequential Score Adaptation with Extreme Value Theory for Robust Railway Track Inspection

Oct 20, 2015

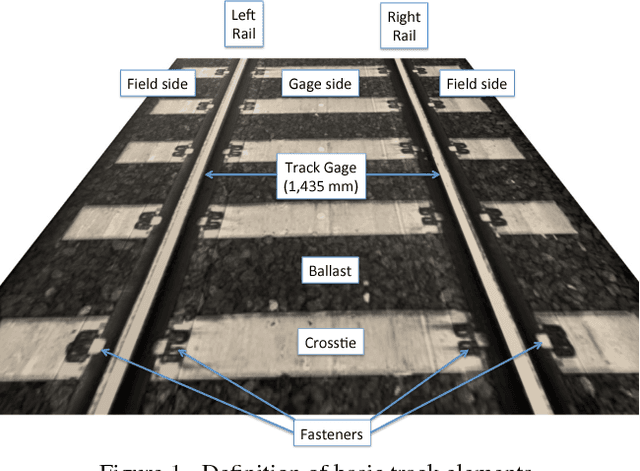

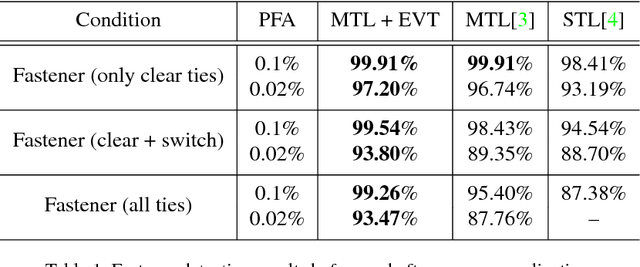

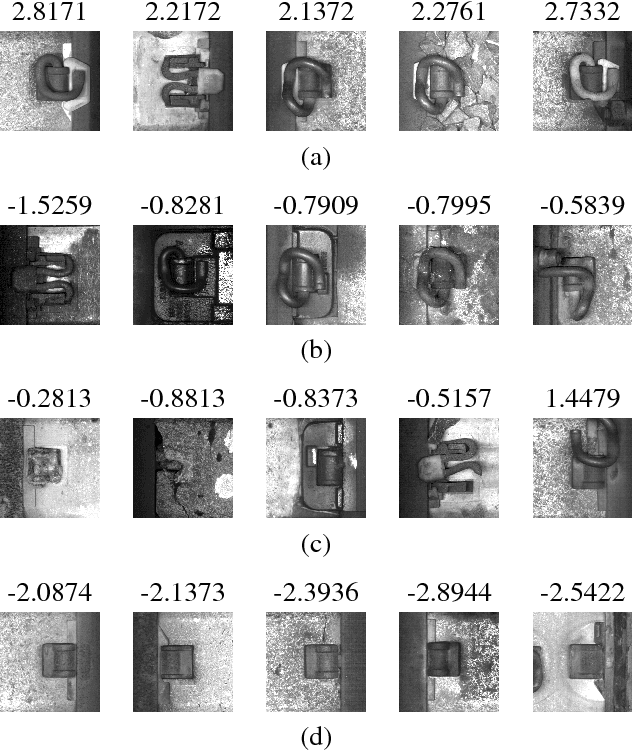

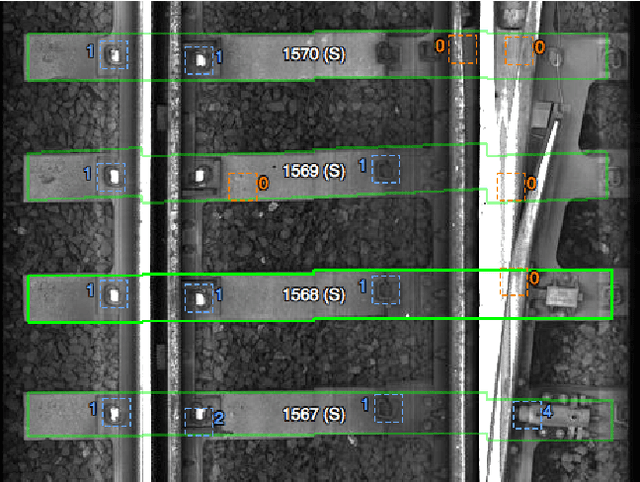

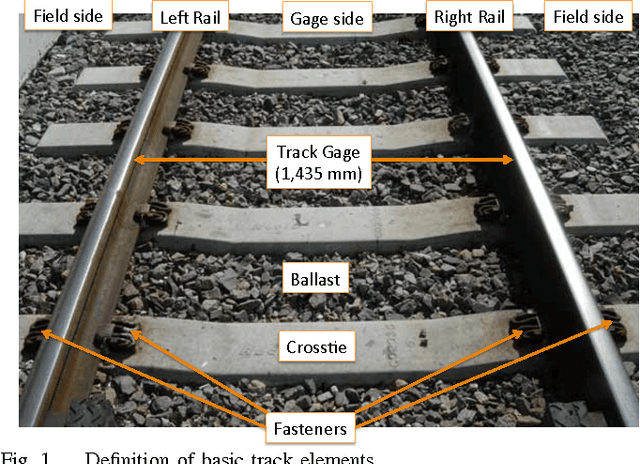

Abstract: Periodic inspections are necessary to keep railroad tracks in a state of good repair and prevent train accidents. Automatic track inspection using machine vision technology has become a very effective inspection tool. Because of its non-contact nature, this technology can be deployed on virtually any railway vehicle to continuously survey the tracks and send exception reports to track maintenance personnel. However, as appearance and imaging conditions vary, false alarm rates can dramatically change, making it difficult to select a good operating point. In this paper, we use extreme value theory (EVT) within a Bayesian framework to optimally adjust the sensitivity of anomaly detectors. We show that by approximating the lower tail of the probability density function (PDF) of the scores with an Exponential distribution (a special case of the Generalized Pareto distribution), and using the Gamma conjugate prior learned from the training data, it is possible to reduce the variability in false alarm rate and improve the overall performance. This method has shown an increase in the defect detection rate of rail fasteners in the presence of clutter (at PFA 0.1%) from 95.40% to 99.26% on the 85-mile Northeast Corridor (NEC) 2012-2013 concrete tie dataset.
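
The Exponential-tail and Gamma-prior combination described in the abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: it assumes that low scores indicate anomalies, that the tail cut-off `tail_cut` is chosen by hand, and that the prior is given as a Gamma shape and rate. The function names and the use of the posterior-predictive tail to place the threshold are hypothetical choices. With a Gamma(shape, rate) posterior on the Exponential rate, the predictive probability that a tail excess is larger than `t` is `(rate / (rate + t)) ** shape`, which can be inverted for a target false alarm probability.

```python
from typing import List, Tuple


def posterior_gamma(excesses: List[float], prior_shape: float,
                    prior_rate: float) -> Tuple[float, float]:
    """Conjugate update of a Gamma prior on the Exponential rate."""
    return prior_shape + len(excesses), prior_rate + sum(excesses)


def adapted_threshold(scores: List[float], tail_cut: float, target_pfa: float,
                      prior_shape: float, prior_rate: float) -> float:
    """Score threshold whose predicted false alarm probability is target_pfa.

    Scores below the returned threshold would be flagged as anomalies.
    """
    # Distances below the cut-off, modeled as Exponential samples.
    excesses = [tail_cut - s for s in scores if s < tail_cut]
    tail_fraction = len(excesses) / len(scores)
    if tail_fraction <= target_pfa:
        # The cut-off already meets the target; the tail model does not
        # apply above it, so fall back to the cut-off itself.
        return tail_cut
    shape, rate = posterior_gamma(excesses, prior_shape, prior_rate)
    # Solve tail_fraction * (rate / (rate + depth)) ** shape == target_pfa.
    depth = rate * ((tail_fraction / target_pfa) ** (1.0 / shape) - 1.0)
    return tail_cut - depth


if __name__ == "__main__":
    scores = [-1.0] * 10 + [1.0] * 90   # synthetic scores, for illustration
    print(adapted_threshold(scores, tail_cut=0.0, target_pfa=0.01,
                            prior_shape=1.0, prior_rate=1.0))
```

Because the prior contributes pseudo-observations to the posterior, a sequence with few tail samples is pulled toward the behavior seen in training data, which is one plausible way to read the reduced variability in false alarm rate reported above.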

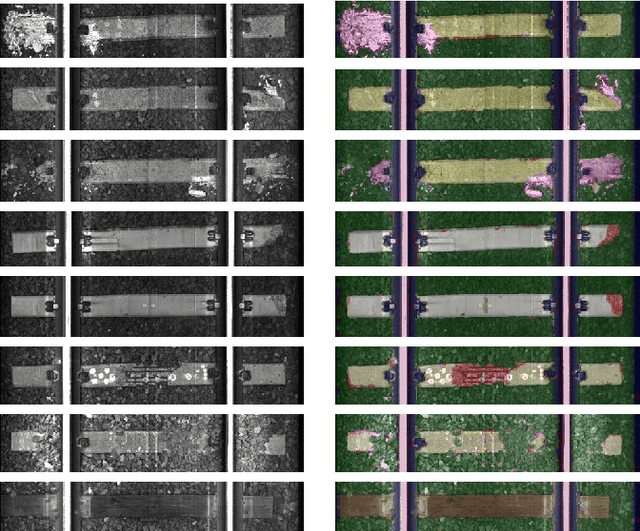

Deep Multi-task Learning for Railway Track Inspection

Sep 17, 2015

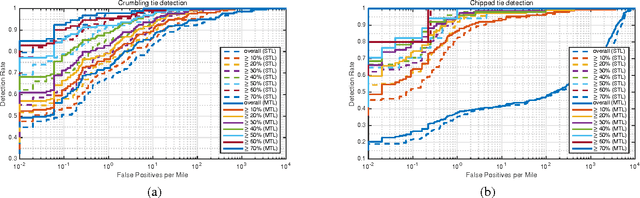

Abstract: Railroad tracks need to be periodically inspected and monitored to ensure safe transportation. Automated track inspection using computer vision and pattern recognition methods has recently shown the potential to improve safety by allowing for more frequent inspections while reducing human errors. Achieving full automation is still very challenging due to the number of different possible failure modes as well as the broad range of image variations that can potentially trigger false alarms. Also, the number of defective components is very small, so not many training examples are available for the machine to learn a robust anomaly detector. In this paper, we show that detection performance can be improved by combining multiple detectors within a multi-task learning framework. We show that this approach results in better accuracy in detecting defects on railway ties and fasteners.
