Wuraola Fisayo Oyewusi

Semantic Enrichment of Nigerian Pidgin English for Contextual Sentiment Classification

Mar 27, 2020

Abstract: Nigerian English adaptation, Pidgin, has evolved over the years through multi-language code switching, code mixing and linguistic adaptation. While Pidgin preserves many of the words in the standard English language corpus, both in spelling and pronunciation, the fundamental meanings of these words have changed significantly. For example, 'ginger' is not a plant but an expression of motivation, and 'tank' is not a container but an expression of gratitude. The implication is that the current approach of applying English sentiment analysis directly to social media text from Nigeria is sub-optimal, as it cannot capture the semantic variation and contextual evolution in the contemporary meaning of these words. In practice, while many words in Nigerian Pidgin are the same as in standard English, sentiment analysis models built entirely on English are not designed to capture the full intent of Nigerian Pidgin, whether used alone or code-mixed. By augmenting scarce human-labelled code-changed text with ample synthetic code-reformatted text and meaning, we achieve significant improvements in sentiment scoring. Our research explores how to understand sentiment in an intrasentential code mixing and switching context where there has been significant word localization. This work presents 300 VADER-lexicon-compatible Nigerian Pidgin sentiment tokens with their scores, and 14,000 gold-standard Nigerian Pidgin tweets with their sentiment labels.
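The idea of enriching an English sentiment lexicon with Pidgin tokens can be illustrated with a minimal sketch. It is not the authors' implementation: the scorer is a plain sum of token valences rather than VADER's full rule set, and the lexicon entries and scores below are hypothetical values on VADER's -4 to +4 scale, chosen only to show the effect of the override.

```python
# Hypothetical valence scores (VADER-style, -4..+4); illustrative only,
# not values taken from the released Pidgin lexicon.
ENGLISH_LEXICON = {"good": 1.9, "bad": -2.5}
PIDGIN_LEXICON = {"ginger": 1.5, "tank": 1.8}


def merge_lexicons(base, extra):
    """Return a new lexicon where entries in `extra` override `base`."""
    merged = dict(base)
    merged.update(extra)
    return merged


def score(text, lexicon):
    """Sum the valence of each whitespace-separated token; unknown tokens count as 0."""
    tokens = text.lower().split()
    return sum(lexicon.get(token, 0.0) for token in tokens)


enriched = merge_lexicons(ENGLISH_LEXICON, PIDGIN_LEXICON)

# The English-only lexicon sees no sentiment in the Pidgin usage of "ginger";
# the enriched lexicon does.
english_only_score = score("you ginger me", ENGLISH_LEXICON)
enriched_score = score("you ginger me", enriched)
```

In the `vaderSentiment` Python package, the analyzer keeps its lexicon as a dictionary, so a comparable override could be applied by updating that dictionary with the Pidgin tokens before scoring.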

Improving Yorùbá Diacritic Restoration

Mar 23, 2020

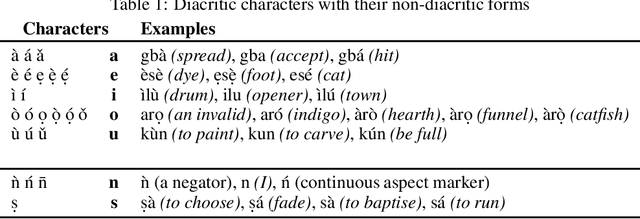

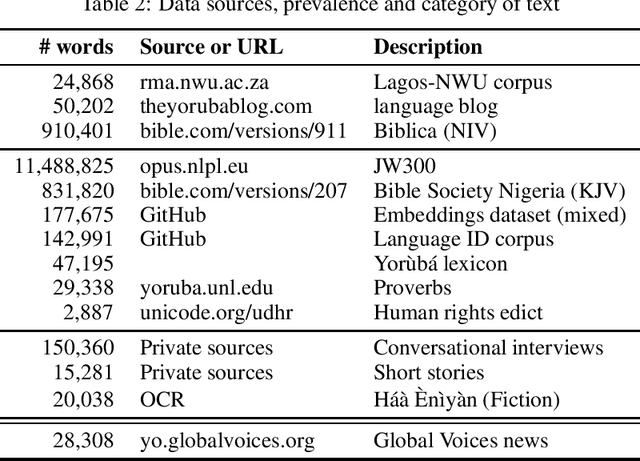

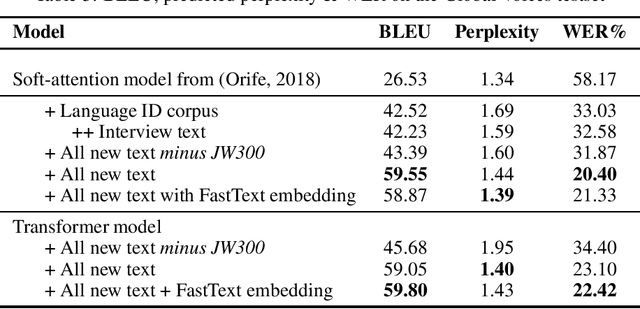

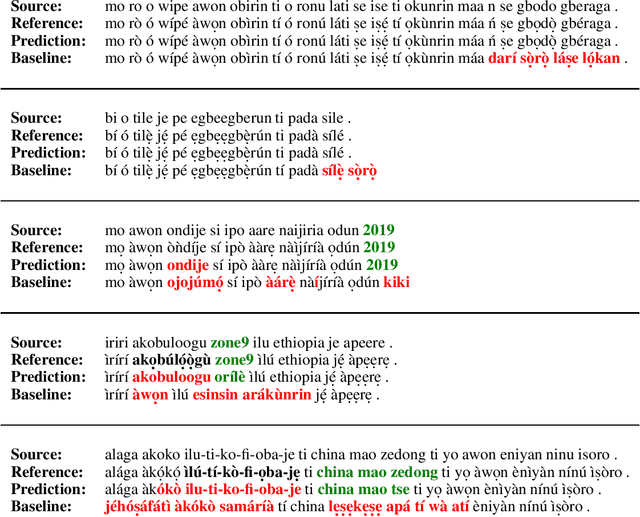

Abstract: Yorùbá is a widely spoken West African language with a writing system rich in orthographic and tonal diacritics. These diacritics provide morphological information, are crucial for lexical disambiguation and pronunciation, and are vital for any computational speech or natural language processing task. However, diacritic marks are commonly excluded from electronic texts due to limited device and application support, as well as limited general education on proper usage. We report on recent efforts at dataset cultivation. By aggregating and improving disparate texts from the web and various personal libraries, we were able to significantly grow our clean Yorùbá dataset from a majority-Biblical corpus drawn from three sources to millions of tokens from over a dozen sources. We evaluate updated diacritic restoration models on a new, general-purpose, public-domain Yorùbá evaluation dataset of modern journalistic news text, selected to be multi-purpose and to reflect contemporary usage. All pre-trained models, datasets and source code have been released as an open-source project to advance efforts on Yorùbá language technology.
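Diacritic restoration is commonly framed as mapping undiacritized text back to its fully marked form, so training pairs can be derived from clean diacritized text by stripping the marks. The sketch below shows that stripping step using only Python's standard library. It is an assumption about how such pairs might be built, not a description of the released pipeline, and the function names are hypothetical.

```python
import unicodedata


def strip_diacritics(text):
    """Remove combining marks (tone marks and under-dots) from the text."""
    # NFD splits each accented character into a base letter plus combining marks.
    decomposed = unicodedata.normalize("NFD", text)
    stripped = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return unicodedata.normalize("NFC", stripped)


def make_training_pair(diacritized):
    """Return (source, target): undiacritized input and NFC-normalized reference."""
    target = unicodedata.normalize("NFC", diacritized)
    return strip_diacritics(target), target


source, target = make_training_pair("Yor\u00f9b\u00e1")
```

A restoration model would then be trained to predict `target` from `source`, and evaluated on held-out text prepared the same way.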
