Woo-Ri Ko

Nonverbal Social Behavior Generation for Social Robots Using End-to-End Learning

Nov 02, 2022

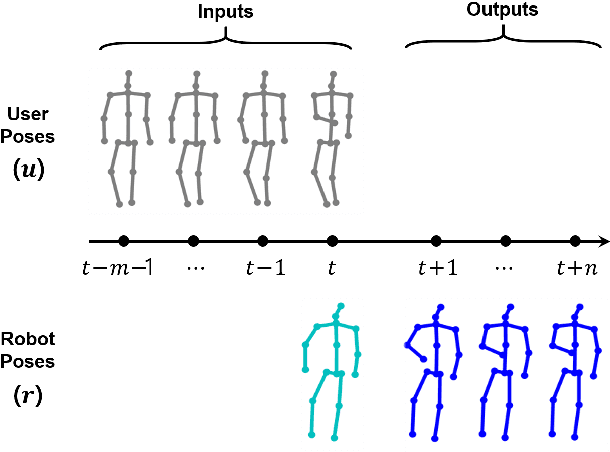

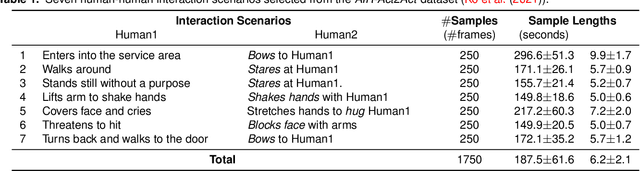

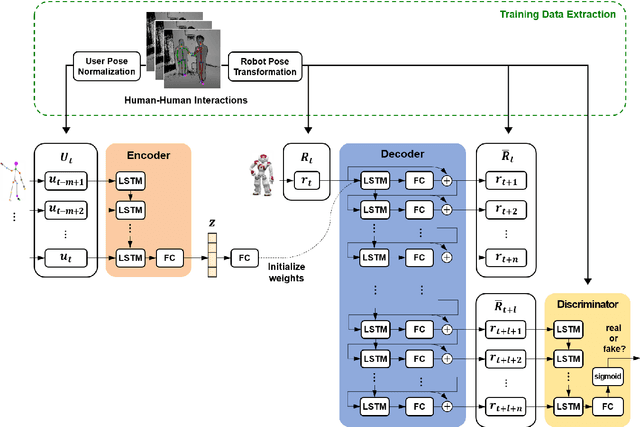

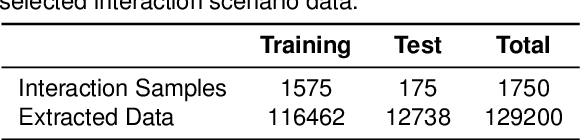

Abstract: To provide effective and enjoyable human-robot interaction, it is important for social robots to exhibit nonverbal behaviors, such as a handshake or a hug. However, the traditional approach of reproducing pre-coded motions allows users to easily predict the reaction of the robot, giving the impression that the robot is a machine rather than a real agent. Therefore, we propose a neural network architecture based on the Seq2Seq model that learns social behaviors from human-human interactions in an end-to-end manner. We adopted a generative adversarial network to prevent invalid pose sequences from occurring when generating long-term behavior. To verify the proposed method, experiments were performed using the humanoid robot Pepper in a simulated environment. Because it is difficult to determine success or failure in social behavior generation, we propose new metrics to calculate the difference between the generated behavior and the ground-truth behavior. We used these metrics to show how different network architectural choices affect the performance of behavior generation, and we compared the performance of learning multiple behaviors and that of learning a single behavior. We expect that our proposed method can be used not only with home service robots, but also for guide robots, delivery robots, educational robots, and virtual robots, enabling the users to enjoy and effectively interact with the robots.

AIR-Act2Act: Human-human interaction dataset for teaching non-verbal social behaviors to robots

Sep 04, 2020

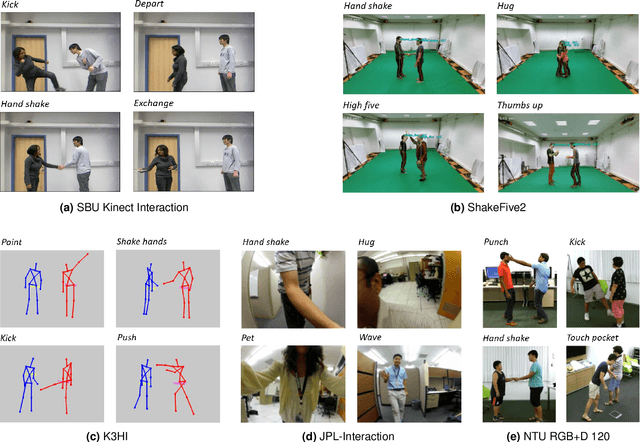

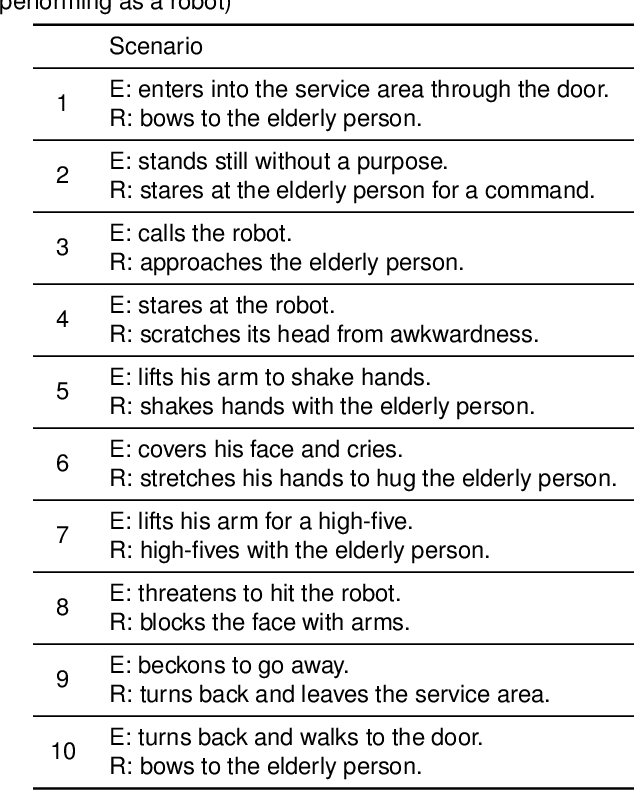

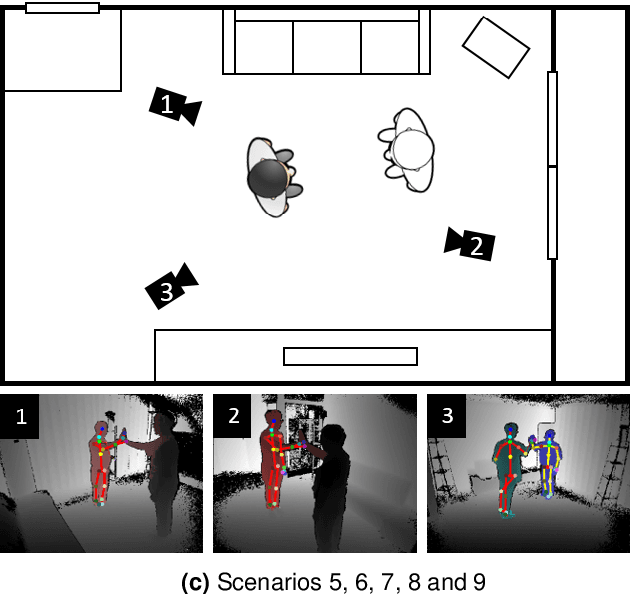

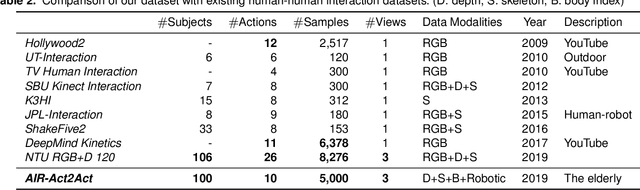

Abstract: To better interact with users, a social robot should understand the users' behavior, infer the intention, and respond appropriately. Machine learning is one way of implementing robot intelligence. It provides the ability to automatically learn and improve from experience instead of explicitly telling the robot what to do. Social skills can also be learned through watching human-human interaction videos. However, human-human interaction datasets are too scarce to learn interactions that occur in various situations. Moreover, we aim to use service robots in the elderly-care domain; however, there has been no interaction dataset collected for this domain. For this reason, we introduce a human-human interaction dataset for teaching non-verbal social behaviors to robots. It is the only interaction dataset that elderly people have participated in as performers. We recruited 100 elderly people and two college students to perform 10 interactions in an indoor environment. The entire dataset has 5,000 interaction samples, each of which contains depth maps, body indexes and 3D skeletal data that are captured with three Microsoft Kinect v2 cameras. In addition, we provide the joint angles of a humanoid NAO robot which are converted from the human behavior that robots need to learn. The dataset and useful Python scripts are available for download at https://github.com/ai4r/AIR-Act2Act. It can be used to not only teach social skills to robots but also benchmark action recognition algorithms.

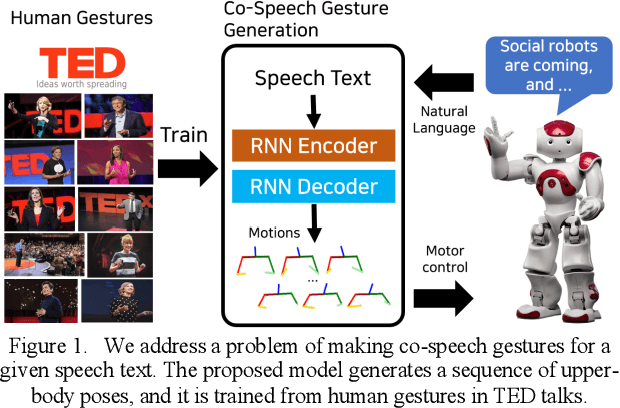

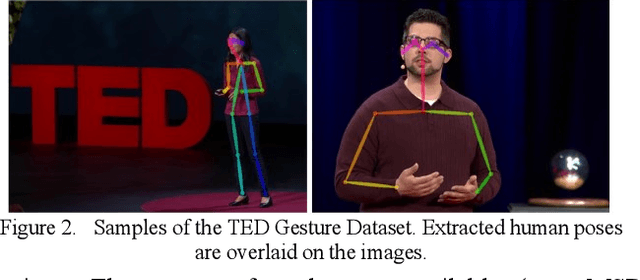

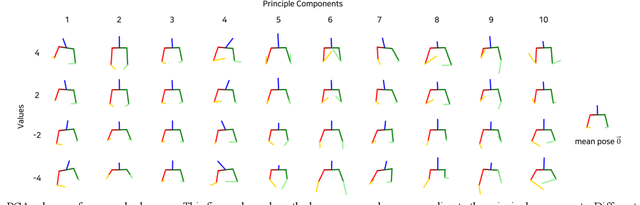

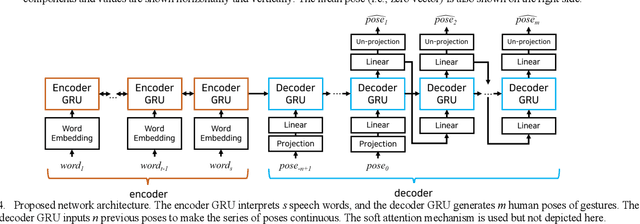

Robots Learn Social Skills: End-to-End Learning of Co-Speech Gesture Generation for Humanoid Robots

Oct 30, 2018

Abstract: Co-speech gestures enhance interaction experiences between humans as well as between humans and robots. Existing robots use rule-based speech-gesture association, but this requires human labor and prior knowledge of experts to be implemented. We present a learning-based co-speech gesture generation that is learned from 52 h of TED talks. The proposed end-to-end neural network model consists of an encoder for speech text understanding and a decoder to generate a sequence of gestures. The model successfully produces various gestures including iconic, metaphoric, deictic, and beat gestures. In a subjective evaluation, participants reported that the gestures were human-like and matched the speech content. We also demonstrate a co-speech gesture with a NAO robot working in real time.
