Wonhui Kim

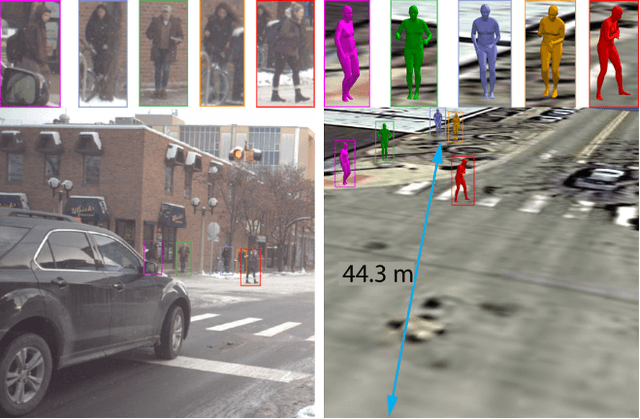

PedX: Benchmark Dataset for Metric 3D Pose Estimation of Pedestrians in Complex Urban Intersections

Sep 10, 2018

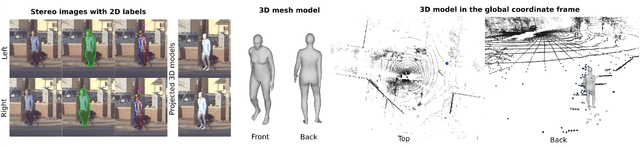

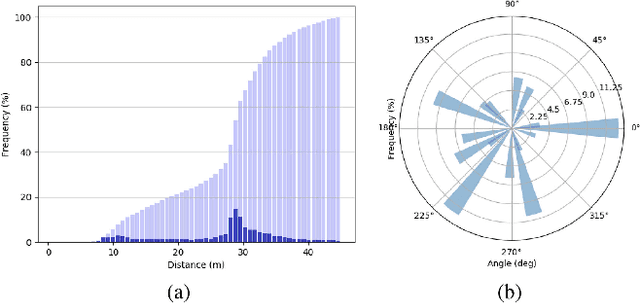

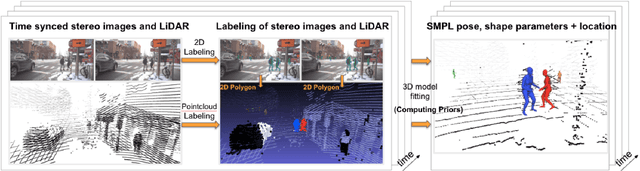

Abstract: This paper presents PedX, a novel large-scale multimodal dataset of pedestrians at complex urban intersections. PedX consists of more than 5,000 pairs of high-resolution (12MP) stereo images and LiDAR data, together with 2D and 3D labels of pedestrians. We also present a novel 3D model fitting algorithm for automatic 3D labeling that harnesses constraints across different modalities as well as novel shape and temporal priors. All annotated 3D pedestrians are localized in real-world metric space, and the generated 3D models are validated using a mocap system configured in a controlled outdoor environment to simulate pedestrians in urban intersections. We also show that the manual 2D labels can be replaced by state-of-the-art automated labeling approaches, thereby facilitating automatic generation of large-scale datasets.
