Wolfgang Ertel

Expected Similarity Estimation for Large-Scale Batch and Streaming Anomaly Detection

Jun 06, 2016

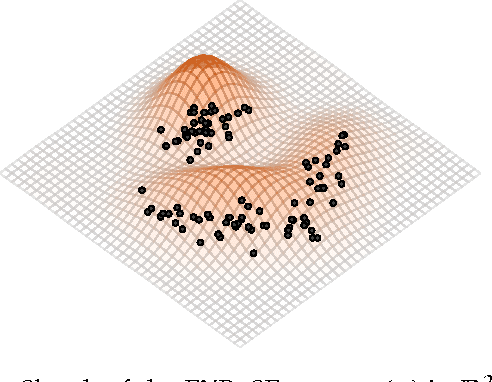

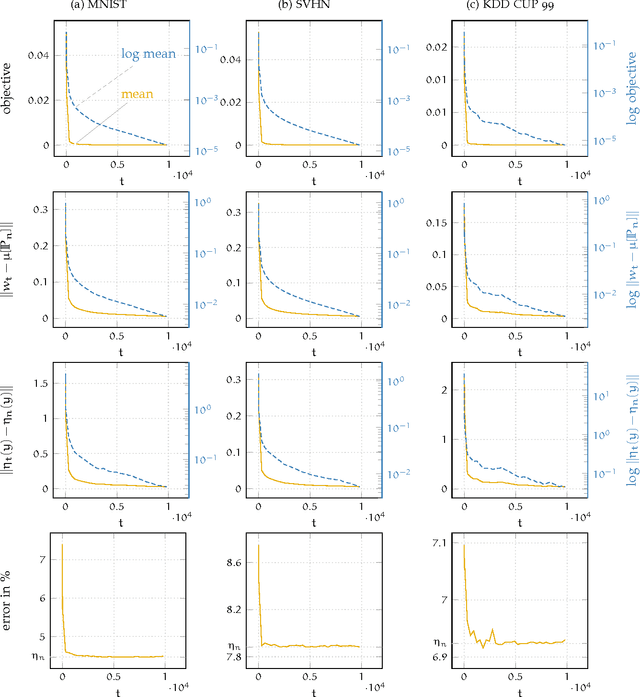

Abstract: We present a novel algorithm for anomaly detection on very large datasets and data streams. The method, named EXPected Similarity Estimation (EXPoSE), is kernel-based and efficiently computes the similarity between new data points and the distribution of regular data. The estimator is formulated as an inner product with a reproducing kernel Hilbert space embedding and makes no assumption about the type or shape of the underlying data distribution. We show that offline (batch) learning with EXPoSE can be done in linear time, and that online (incremental) learning takes constant time per instance and model update. Furthermore, EXPoSE makes predictions in constant time and requires only constant memory. In addition, we propose different methodologies for concept drift adaptation on evolving data streams. On several real datasets we demonstrate that our approach can compete with state-of-the-art algorithms for anomaly detection while being an order of magnitude faster than most other approaches.
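The idea of scoring a point by its inner product with a mean embedding can be illustrated with a minimal sketch. The abstract does not say how the feature map is computed, so the sketch assumes a random Fourier feature approximation of a Gaussian kernel; the names `make_feature_map` and `ExposeSketch`, the bandwidth, and the number of features are all hypothetical choices for illustration, not the authors' implementation.

```python
import math
import random


def make_feature_map(dim, n_features, bandwidth, seed=0):
    """Random Fourier features for a Gaussian kernel (an assumed kernel choice)."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0 / bandwidth) for _ in range(dim)]
               for _ in range(n_features)]
    offsets = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_features)]
    scale = math.sqrt(2.0 / n_features)

    def phi(x):
        # phi(x) . phi(y) approximates exp(-||x - y||^2 / (2 * bandwidth^2))
        return [scale * math.cos(sum(w_k * x_k for w_k, x_k in zip(w, x)) + b)
                for w, b in zip(weights, offsets)]

    return phi


class ExposeSketch:
    """Keeps a running mean of feature vectors; memory is fixed by n_features."""

    def __init__(self, phi, n_features):
        self.phi = phi
        self.mean = [0.0] * n_features
        self.count = 0

    def update(self, x):
        # Incremental mean update: cost per instance does not depend on count.
        self.count += 1
        features = self.phi(x)
        self.mean = [m + (f - m) / self.count
                     for m, f in zip(self.mean, features)]

    def score(self, z):
        # Inner product of phi(z) with the mean embedding; low means anomalous.
        return sum(f * m for f, m in zip(self.phi(z), self.mean))


if __name__ == "__main__":
    phi = make_feature_map(dim=2, n_features=300, bandwidth=1.0, seed=1)
    model = ExposeSketch(phi, n_features=300)
    rng = random.Random(2)
    for _ in range(200):
        model.update([rng.gauss(0.0, 0.5), rng.gauss(0.0, 0.5)])
    print("score near the data:", model.score([0.0, 0.0]))
    print("score far away:     ", model.score([8.0, 8.0]))
```

In this sketch, batch training is one pass of `update` over the data (linear in the number of samples), while `score` touches only the fixed-length mean vector, which mirrors the constant-time prediction and constant-memory claims under the stated assumption of a finite-dimensional feature map.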

Constant Time EXPected Similarity Estimation using Stochastic Optimization

Nov 17, 2015

Abstract: A new algorithm named EXPected Similarity Estimation (EXPoSE) was recently proposed to solve the problem of large-scale anomaly detection. It is a non-parametric and distribution-free kernel method based on the Hilbert space embedding of probability measures. Given a dataset of $n$ samples, EXPoSE needs only $\mathcal{O}(n)$ (linear time) to build a model and $\mathcal{O}(1)$ (constant time) to make a prediction. In this work we improve the linear computational complexity and show that an $\epsilon$-accurate model can be estimated in constant time, which has significant implications for large-scale learning problems. To achieve this goal, we cast the original EXPoSE formulation into a stochastic optimization problem. Crucially, this approach allows us to determine the number of iterations based on a desired accuracy $\epsilon$, independent of the dataset size $n$. We show that the proposed stochastic gradient descent algorithm works in general (possibly infinite-dimensional) Hilbert spaces, is easy to implement, and requires no additional step-size parameters.
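The stochastic formulation can be sketched as follows. The abstract does not give the objective or the step-size rule, so this sketch assumes the objective $\frac{1}{2}\mathbb{E}\,\|\phi(x) - w\|^2$ and a step size of $1/t$, under which each iterate equals the running average of the sampled feature vectors. The function name `sgd_mean_embedding` and the toy feature map are hypothetical, and the rule for choosing the iteration count from $\epsilon$ is not reproduced here.

```python
import random


def sgd_mean_embedding(data, phi, n_iterations, seed=0):
    """Estimate the mean embedding w by SGD on 0.5 * E||phi(x) - w||^2.

    The 1/t step size is an assumption of this sketch, not taken from the paper.
    The cost depends on n_iterations only, not on len(data).
    """
    rng = random.Random(seed)
    w = None
    for t in range(1, n_iterations + 1):
        x = data[rng.randrange(len(data))]  # sample one instance uniformly
        features = phi(x)
        if w is None:
            w = [0.0] * len(features)
        # The gradient of 0.5 * ||phi(x) - w||^2 with respect to w is (w - phi(x)).
        w = [w_k - (w_k - f_k) / t for w_k, f_k in zip(w, features)]
    return w


if __name__ == "__main__":
    # Toy data and a toy explicit feature map, both purely illustrative.
    data = [[i / 999.0] for i in range(1000)]
    w = sgd_mean_embedding(data, lambda x: [x[0], x[0] ** 2], n_iterations=2000)
    print(w)
```

The point of the sketch is that the loop length is set by `n_iterations` alone, so a larger dataset does not make the estimate more expensive once the desired accuracy fixes the number of iterations.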
