Willams de Lima Costa

A New Dataset and Framework for Robust Road Surface Classification via Camera-IMU Fusion

Jan 29, 2026

Abstract:Road surface classification (RSC) is a key enabler for environment-aware predictive maintenance systems. However, existing RSC techniques often fail to generalize beyond narrow operational conditions due to limited sensing modalities and datasets that lack environmental diversity. This work addresses these limitations by introducing a multimodal framework that fuses images and inertial measurements using a lightweight bidirectional cross-attention module followed by an adaptive gating layer that adjusts modality contributions under domain shifts. Given the limitations of current benchmarks, especially regarding lack of variability, we introduce ROAD, a new dataset composed of three complementary subsets: (i) real-world multimodal recordings with RGB-IMU streams synchronized using a gold-standard industry datalogger, captured across diverse lighting, weather, and surface conditions; (ii) a large vision-only subset designed to assess robustness under adverse illumination and heterogeneous capture setups; and (iii) a synthetic subset generated to study out-of-distribution generalization in scenarios difficult to obtain in practice. Experiments show that our method achieves a +1.4 pp improvement over the previous state-of-the-art on the PVS benchmark and a +11.6 pp improvement on our multimodal ROAD subset, with consistently higher F1-scores on minority classes. The framework also demonstrates stable performance across challenging visual conditions, including nighttime, heavy rain, and mixed-surface transitions. These findings indicate that combining affordable camera and IMU sensors with multimodal attention mechanisms provides a scalable, robust foundation for road surface understanding, particularly relevant for regions where environmental variability and cost constraints limit the adoption of high-end sensing suites.
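
The adaptive gating idea mentioned in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `gated_fusion`, the scalar gate, and the weight layout are all assumptions made for illustration, and the cross-attention stage is omitted entirely. The sketch only shows how a learned gate in (0, 1) could shift weight between an image feature vector and an IMU feature vector.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function mapping a real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(img_feat, imu_feat, gate_weights, gate_bias=0.0):
    """Blend two equal-length feature vectors with a scalar gate.

    Hypothetical helper, not taken from the paper. The gate is computed
    from the concatenated features, so it can change per sample.
    """
    if len(img_feat) != len(imu_feat):
        raise ValueError("feature vectors must have the same length")
    concat = list(img_feat) + list(imu_feat)
    if len(gate_weights) != len(concat):
        raise ValueError("gate_weights must match the concatenated length")

    # Linear projection of the concatenated features, then a sigmoid.
    logit = sum(w * x for w, x in zip(gate_weights, concat)) + gate_bias
    g = sigmoid(logit)

    # g near 1 trusts the image features; g near 0 trusts the IMU features.
    fused = [g * a + (1.0 - g) * b for a, b in zip(img_feat, imu_feat)]
    return fused, g


if __name__ == "__main__":
    img = [1.0, 3.0]
    imu = [3.0, 1.0]
    # With zero weights and zero bias the gate is neutral.
    print(gated_fusion(img, imu, [0.0] * 4))
    # A large positive bias pushes the gate toward the image features.
    print(gated_fusion(img, imu, [0.0] * 4, gate_bias=20.0))
```

In a trained model the gate weights would be learned, so that a sample with degraded imagery (for example at night) could yield a lower gate value and lean on the inertial features instead. A per-channel gate is an equally plausible design; the abstract does not say which one is used.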

Indoor scene recognition from images under visual corruptions

Aug 23, 2024

Abstract:The classification of indoor scenes is a critical component in various applications, such as intelligent robotics for assistive living. While deep learning has significantly advanced this field, models often suffer from reduced performance due to image corruption. This paper presents an innovative approach to indoor scene recognition that leverages multimodal data fusion, integrating caption-based semantic features with visual data to enhance both accuracy and robustness against corruption. We examine two multimodal networks that synergize visual features from CNN models with semantic captions via a Graph Convolutional Network (GCN). Our study shows that this fusion markedly improves model performance, with notable gains in Top-1 accuracy when evaluated against a corrupted subset of the Places365 dataset. Moreover, while standalone visual models displayed high accuracy on uncorrupted images, their performance deteriorated significantly with increased corruption severity. Conversely, the multimodal models demonstrated improved accuracy in clean conditions and substantial robustness to a range of image corruptions. These results highlight the efficacy of incorporating high-level contextual information through captions, suggesting a promising direction for enhancing the resilience of classification systems.

VWise: A novel benchmark for evaluating scene classification for vehicular applications

Jun 05, 2024

Abstract:Current datasets for vehicular applications are mostly collected in North America or Europe. Models trained or evaluated on these datasets might suffer from geographical bias when deployed in other regions. Specifically, for scene classification, a highway in a Latin American country differs drastically from an Autobahn, for example, both in design and maintenance levels. We propose VWise, a novel benchmark for road-type classification and scene classification tasks, in addition to tasks focused on external contexts related to vehicular applications in LatAm. We collected over 520 video clips covering diverse urban and rural environments across Latin American countries, annotated with six classes of road types. We also evaluated several state-of-the-art classification models in baseline experiments, obtaining over 84% accuracy. With this dataset, we aim to enhance research on vehicular tasks in Latin America.

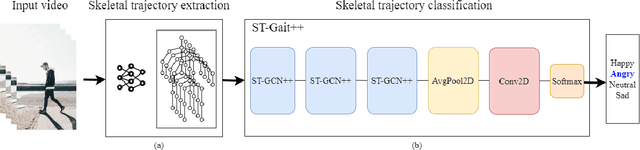

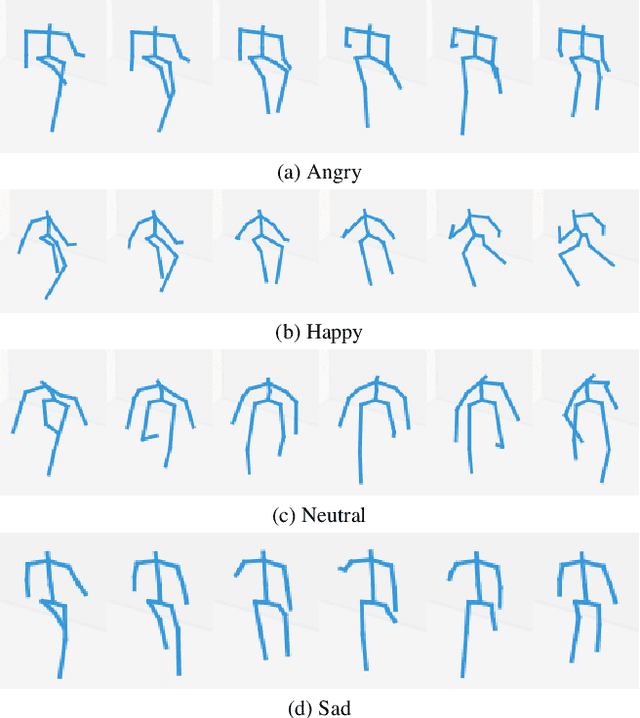

ST-Gait++: Leveraging spatio-temporal convolutions for gait-based emotion recognition on videos

May 22, 2024

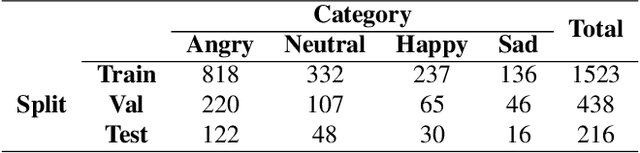

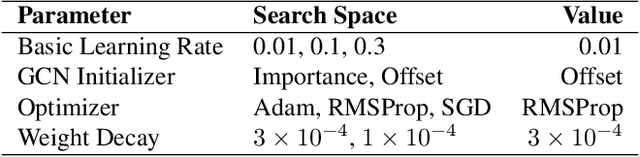

Abstract:Emotion recognition is relevant for human behaviour understanding, where facial expression and speech recognition have been widely explored by the computer vision community. Literature in the field of behavioural psychology indicates that gait, described as the way a person walks, is an additional indicator of emotions. In this work, we propose a deep framework for emotion recognition through the analysis of gait. More specifically, our model is composed of a sequence of spatial-temporal Graph Convolutional Networks that produce a robust skeleton-based representation for the task of emotion classification. We evaluate our proposed framework on the E-Gait dataset, composed of a total of 2177 samples. The results obtained represent an improvement of approximately 5% in accuracy compared to the state of the art. In addition, during training we observed a faster convergence of our model compared to the state-of-the-art methodologies.

High-Level Context Representation for Emotion Recognition in Images

May 05, 2023

Abstract:Emotion recognition is the task of classifying perceived emotions in people. Previous works have utilized various nonverbal cues to extract features from images and correlate them to emotions. Of these cues, situational context is particularly crucial in emotion perception since it can directly influence the emotion of a person. In this paper, we propose an approach for high-level context representation extraction from images. The model relies on a single cue and a single encoding stream to correlate this representation with emotions. Our model competes with the state-of-the-art, achieving an mAP of 0.3002 on the EMOTIC dataset while also being capable of execution on consumer-grade hardware at approximately 90 frames per second. Overall, our approach is more efficient than previous models and can be easily deployed to address real-world problems related to emotion recognition.
