Wenqi Bai

Exploring the Generalizability of Geomagnetic Navigation: A Deep Reinforcement Learning approach with Policy Distillation

Feb 07, 2025

Abstract: Advances in autonomous vehicles have enabled navigation and exploration in unknown environments. Geomagnetic navigation for autonomous vehicles has drawn increasing attention because it does not depend on GPS or inertial navigation devices. While geomagnetic navigation approaches have been extensively investigated, the generalizability of learned geomagnetic navigation strategies remains unexplored. The performance of a learned strategy can degrade outside the source domain in which it was learned, owing to a lack of knowledge about the geomagnetic characteristics of newly entered areas. This paper explores the generalization of learned geomagnetic navigation strategies via deep reinforcement learning (DRL). In particular, we employ DRL agents to learn multiple teacher models from distributed domains that represent dispersed navigation strategies, and amalgamate the teacher models for generalizability across navigation areas. We design a reward shaping mechanism for training the teacher models that integrates both potential-based and intrinsically motivated rewards. The designed reward shaping can enhance the exploration efficiency of the DRL agent and improve the representation of the teacher models. With the trained teacher models, we employ multi-teacher policy distillation to merge the policies learned by individual teachers, leading to a navigation strategy that generalizes across navigation domains. We conduct numerical simulations, and the results demonstrate an effective transfer of the learned DRL model from a source domain to new navigation areas. Compared to existing evolutionary geomagnetic navigation methods, our approach provides superior performance in terms of navigation length, duration, heading deviation, and success rate in cross-domain navigation.
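The abstract does not give the reward formulas, so the following is only a minimal sketch of how a potential-based shaping term and an intrinsic exploration bonus might be combined. The potential (negative Euclidean distance between current and destination feature vectors), the count-based bonus, and all names (`potential`, `count_bonus`, `shaped_reward`) are assumptions for illustration, not the paper's design. The shaping term uses the standard form γΦ(s') − Φ(s).

```python
import math
from collections import Counter


def potential(features, goal_features):
    """Hypothetical potential: negative distance between the current
    feature vector (e.g. geomagnetic components) and the destination's."""
    return -math.dist(features, goal_features)


def count_bonus(visit_counts, cell, scale=0.1):
    """Hypothetical intrinsic reward: a count-based novelty bonus that
    shrinks as the same discretized cell is visited more often."""
    visit_counts[cell] += 1
    return scale / math.sqrt(visit_counts[cell])


def shaped_reward(env_reward, features, next_features, goal_features,
                  gamma=0.99, intrinsic=0.0):
    """Environment reward plus potential-based shaping plus intrinsic bonus."""
    shaping = gamma * potential(next_features, goal_features) \
        - potential(features, goal_features)
    return env_reward + shaping + intrinsic


# Usage: one transition that moves the agent onto the destination features.
counts = Counter()
bonus = count_bonus(counts, cell=(0, 0), scale=1.0)
r = shaped_reward(0.0, (3.0, 4.0), (0.0, 0.0), (0.0, 0.0),
                  gamma=1.0, intrinsic=bonus)
```

Because the shaping term is a difference of potentials, it rewards progress toward the destination without changing which policies are optimal; the intrinsic bonus is a separate, additive exploration signal.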

Long-distance Geomagnetic Navigation in GNSS-denied Environments with Deep Reinforcement Learning

Oct 21, 2024

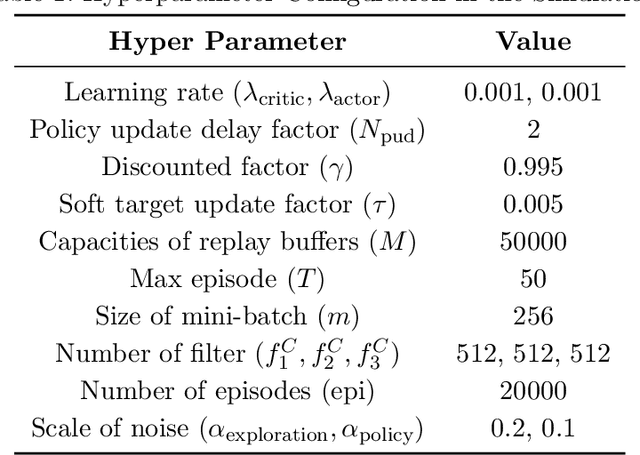

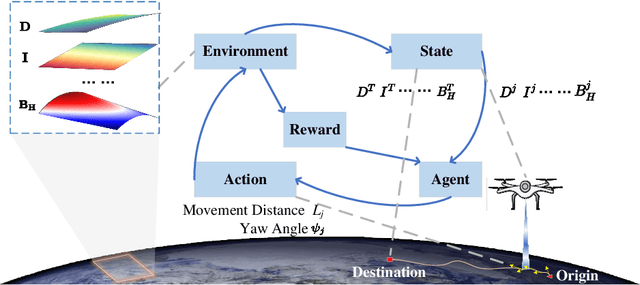

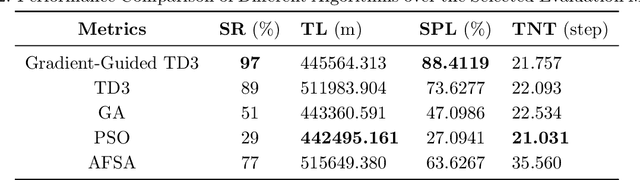

Abstract: Geomagnetic navigation has drawn increasing attention for its capacity to navigate through complex environments and its independence from external navigation services such as global navigation satellite systems (GNSS). Existing studies on geomagnetic navigation, i.e., matching navigation and bionic navigation, rely on pre-stored maps or extensive searches, leading to limited applicability or reduced navigation efficiency in unexplored areas. To address these issues in areas where GNSS is unavailable, this paper develops a deep reinforcement learning (DRL)-based mechanism, especially for long-distance geomagnetic navigation. The designed mechanism trains an agent to acquire the magnetoreception capacity needed for geomagnetic navigation, rather than relying on a pre-stored map or on extensive and expensive search. In particular, we integrate the geomagnetic gradient-based parallel approach into geomagnetic navigation. This integration mitigates over-exploration by the learning agent by adjusting the geomagnetic gradient so that the obtained gradient is aligned towards the destination. We evaluate the proposed approach via detailed numerical simulations, in which we implement it with twin delayed deep deterministic policy gradient (TD3). The results demonstrate that our approach outperforms existing metaheuristic and bionic navigation methods in long-distance missions under diverse navigation conditions.
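For readers unfamiliar with TD3, the sketch below shows how its critic target is typically computed: target policy smoothing (clipped noise on the next action) followed by the minimum over two target critics. It is a generic illustration of TD3, not the paper's implementation. The scalar action (e.g. a heading command), the hyperparameter values, and the callables `q1_target` and `q2_target` are all assumptions.

```python
import random


def clip(value, low, high):
    """Clamp value to the closed interval [low, high]."""
    return max(low, min(high, value))


def td3_target(reward, done, next_action, q1_target, q2_target,
               gamma=0.99, noise_std=0.2, noise_clip=0.5,
               action_limit=1.0, rng=random):
    """Compute the TD3 critic target for one transition.

    next_action is the target actor's output for the next state;
    q1_target and q2_target are hypothetical callables that map an
    action to the two target critics' values at that next state.
    """
    # Target policy smoothing: add clipped Gaussian noise to the action.
    noise = clip(rng.gauss(0.0, noise_std), -noise_clip, noise_clip)
    smoothed = clip(next_action + noise, -action_limit, action_limit)
    # Clipped double-Q: take the smaller of the two target estimates.
    q_min = min(q1_target(smoothed), q2_target(smoothed))
    # No bootstrapping past a terminal state (done is 0.0 or 1.0).
    return reward + gamma * (1.0 - done) * q_min


# Usage with constant stand-in critics and smoothing noise disabled.
y = td3_target(1.0, 0.0, 0.3, lambda a: 2.0, lambda a: 1.0,
               gamma=0.5, noise_std=0.0)
```

Taking the minimum of two critics counteracts value overestimation, and the smoothing noise keeps the critic from exploiting sharp peaks in the value estimate.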
