Wendson A. S. Barbosa

Controlling Chaotic Maps using Next-Generation Reservoir Computing

Jul 07, 2023

Abstract: In this work, we combine nonlinear system control techniques with next-generation reservoir computing, a best-in-class machine learning approach for predicting the behavior of dynamical systems. We demonstrate the performance of the controller in a series of control tasks for the chaotic Hénon map, including controlling the system between unstable fixed points, stabilizing the system to higher-order periodic orbits, and stabilizing it to an arbitrary desired state. We show that our controller succeeds in these tasks, requires only 10 data points for training, can control the system to a desired trajectory in a single iteration, and is robust to noise and modeling error.
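To make the idea of model-based control of the Hénon map concrete, here is a minimal sketch, not the paper's controller. It assumes the standard parameters a = 1.4 and b = 0.3, an additive control input `u` on the x equation only, a quadratic polynomial model fitted by ridge regression on 10 samples, and a simple rule that cancels the predicted deviation from an unstable fixed point. All function names, the form of the control input, and the ridge value are illustrative assumptions.

```python
import numpy as np

A, B = 1.4, 0.3  # standard Hénon parameters (assumed; not stated in the abstract)

def henon(state, u=0.0):
    """One Hénon step with an additive control u on the x equation (assumed form)."""
    x, y = state
    return np.array([1.0 - A * x**2 + y + u, B * x])

def features(state):
    """Constant, linear, and quadratic monomials of the current state."""
    x, y = state
    return np.array([1.0, x, y, x * x, x * y, y * y])

# Discard a transient, then record 10 training pairs from the uncontrolled map.
state = np.array([0.1, 0.1])
for _ in range(100):
    state = henon(state)
X, Y = [], []
for _ in range(10):
    nxt = henon(state)
    X.append(features(state))
    Y.append(nxt)
    state = nxt
X, Y = np.array(X), np.array(Y)

# Ridge regression for the linear output weights (ridge value is an assumption).
ridge = 1e-10
W = np.linalg.solve(X.T @ X + ridge * np.eye(X.shape[1]), X.T @ Y)

def predict(state):
    """Learned one-step prediction of the uncontrolled map."""
    return features(state) @ W

# Unstable fixed point of the uncontrolled map: a x^2 + (1 - b) x - 1 = 0, y = b x.
x_star = (-(1 - B) + np.sqrt((1 - B) ** 2 + 4 * A)) / (2 * A)
target = np.array([x_star, B * x_star])

def control(state):
    """Choose u so that the predicted next x equals the target x."""
    return target[0] - predict(state)[0]

# Apply the control for a few steps and measure the distance to the fixed point.
for _ in range(20):
    state = henon(state, control(state))
final_error = np.linalg.norm(state - target)
print(final_error)
```

In this sketch the x coordinate reaches its target value after one controlled step and the y coordinate follows on the next, because only the x equation is actuated. Once the state sits on the fixed point, the required control input shrinks to the size of the model error.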

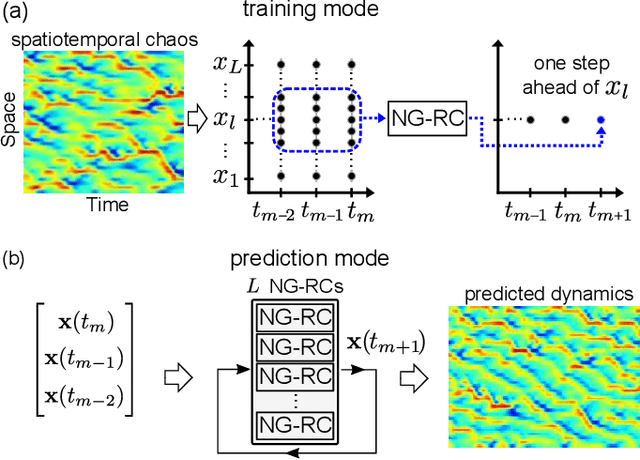

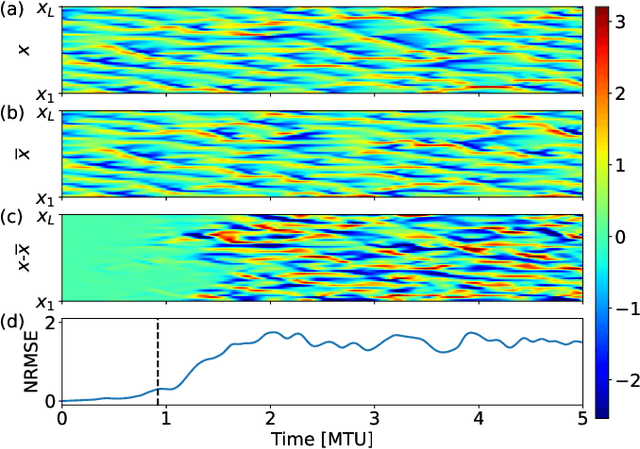

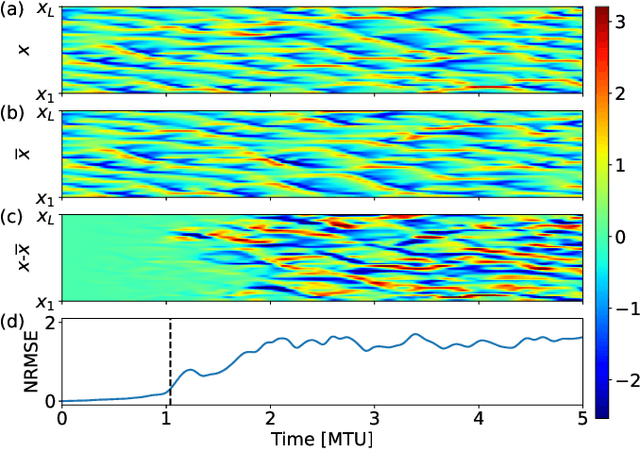

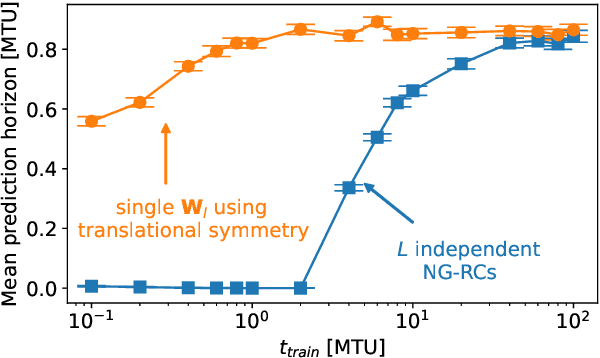

Learning Spatiotemporal Chaos Using Next-Generation Reservoir Computing

Mar 24, 2022

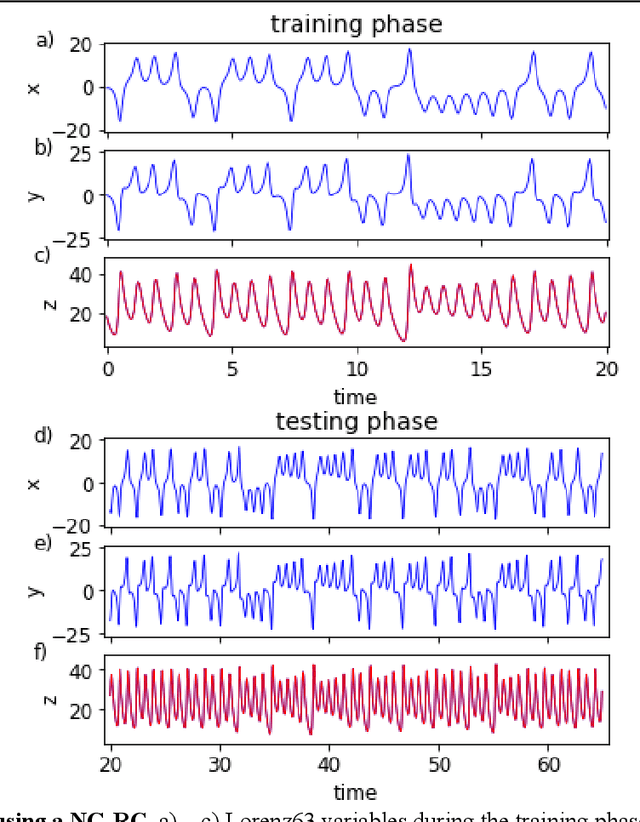

Abstract: Forecasting the behavior of high-dimensional dynamical systems using machine learning (ML) requires efficient methods to learn the underlying physical model. We demonstrate spatiotemporal chaos prediction of a heuristic atmospheric weather model using an ML architecture that, when combined with a next-generation reservoir computer, displays state-of-the-art performance with a training time $10^3$ to $10^4$ times faster and a training data set $\sim 10^2$ times smaller than other ML algorithms. We also take advantage of the translational symmetry of the model to further reduce the computational cost and training data, each by a factor of $\sim 10$.

Next Generation Reservoir Computing

Jun 14, 2021

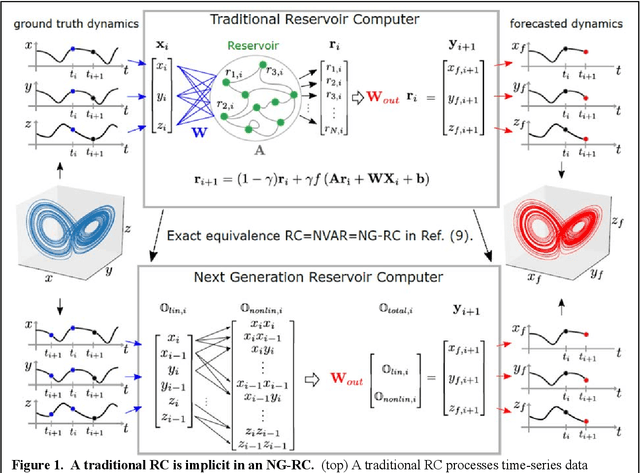

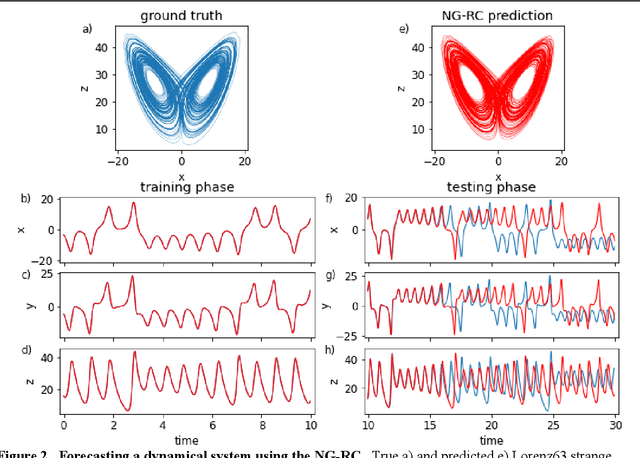

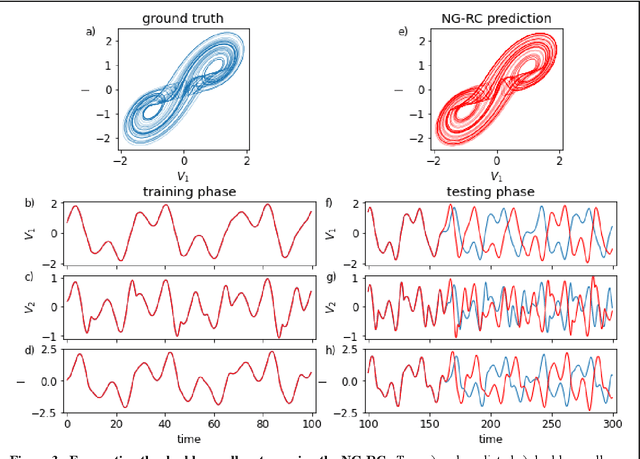

Abstract: Reservoir computing is a best-in-class machine learning algorithm for processing information generated by dynamical systems using observed time-series data. Importantly, it requires very small training data sets, uses linear optimization, and thus requires minimal computing resources. However, the algorithm uses randomly sampled matrices to define the underlying recurrent neural network and has a multitude of metaparameters that must be optimized. Recent results demonstrate the equivalence of reservoir computing to nonlinear vector autoregression, which requires no random matrices, fewer metaparameters, and provides interpretable results. Here, we demonstrate that nonlinear vector autoregression excels at reservoir computing benchmark tasks and requires even shorter training data sets and training time, heralding the next generation of reservoir computing.
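As a rough illustration of nonlinear vector autoregression, the sketch below builds a feature vector from a constant, a few delayed samples of a scalar time series, and their unique quadratic products, then fits linear output weights with ridge regression. The test signal (the scalar form of the Hénon map), the number of delay taps `k`, the training length, and the ridge value are assumptions chosen for illustration, not settings taken from the paper.

```python
import numpy as np

def nvar_features(series, k):
    """Rows of [1, k delay taps, unique quadratic products] for each time n >= k - 1."""
    rows = []
    for n in range(k - 1, len(series)):
        lin = series[n - k + 1 : n + 1][::-1]            # x_n, x_{n-1}, ...
        quad = np.outer(lin, lin)[np.triu_indices(k)]    # unique second-order monomials
        rows.append(np.concatenate(([1.0], lin, quad)))
    return np.array(rows)

def henon_series(n, a=1.4, b=0.3):
    """Scalar Hénon recurrence x_{n+1} = 1 - a x_n^2 + b x_{n-1} (illustrative signal)."""
    x = np.empty(n)
    x[0], x[1] = 0.1, 0.1
    for i in range(1, n - 1):
        x[i + 1] = 1.0 - a * x[i] ** 2 + b * x[i - 1]
    return x

series = henon_series(300)
k = 2                                    # number of delay taps (assumed)
Phi = nvar_features(series[:-1], k)      # features at times k-1 .. N-2
nxt = series[k:]                         # value one step after each feature row

# Ridge regression on a short training segment; no random matrices are involved.
n_train, ridge = 50, 1e-10
P, t = Phi[:n_train], nxt[:n_train]
W = np.linalg.solve(P.T @ P + ridge * np.eye(P.shape[1]), P.T @ t)

# One-step-ahead prediction error on data not used for training.
max_err = np.max(np.abs(Phi[n_train:] @ W - nxt[n_train:]))
print(np.round(W, 6), max_err)
```

Because this particular signal is itself a quadratic function of its two most recent values, the fitted weights can be read off directly against the generating recurrence, which is one sense in which such a model is interpretable.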

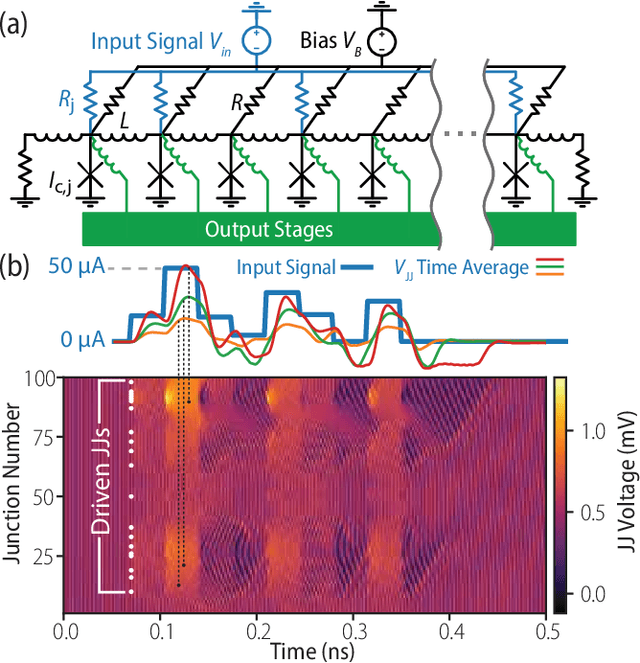

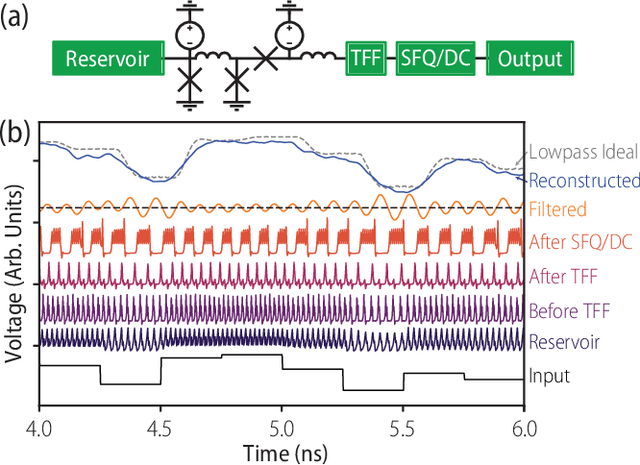

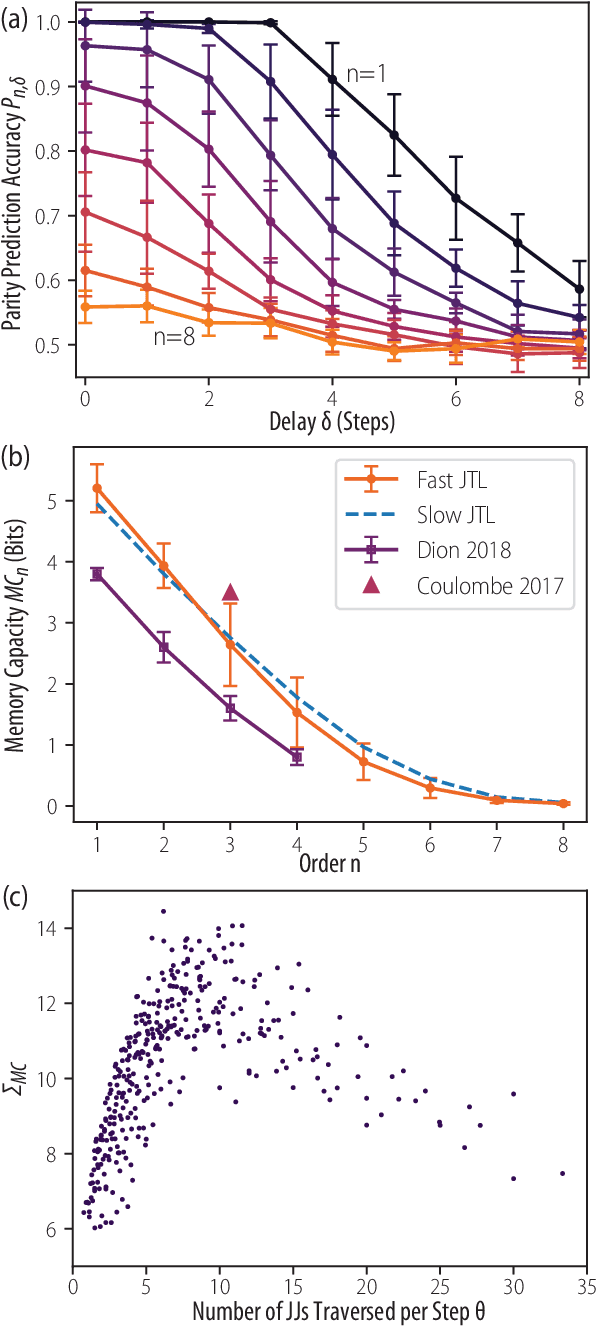

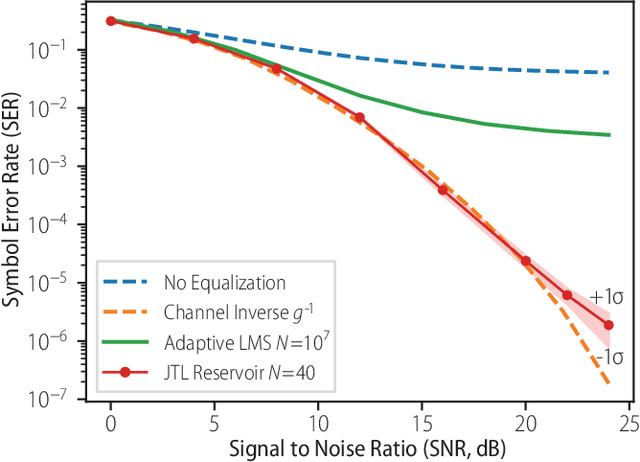

Reservoir Computing with Superconducting Electronics

Mar 03, 2021

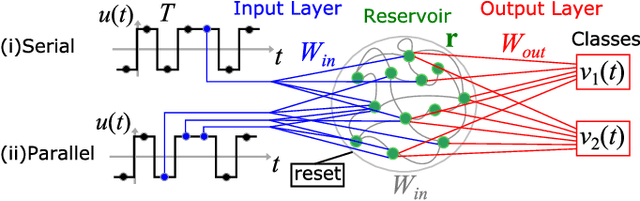

Abstract: The rapidity and low power consumption of superconducting electronics make them an ideal substrate for physical reservoir computing, which commandeers the computational power inherent to the evolution of a dynamical system for the purposes of performing machine learning tasks. We focus on a subset of superconducting circuits that exhibit soliton-like dynamics in simple transmission line geometries. With numerical simulations we demonstrate the effectiveness of these circuits in performing higher-order parity calculations and channel equalization at rates approaching 100 Gb/s. The availability of a proven superconducting logic scheme considerably simplifies the path to a fully integrated reservoir computing platform and makes superconducting reservoirs an enticing substrate for high-rate signal processing applications.

Symmetry-Aware Reservoir Computing

Jan 30, 2021

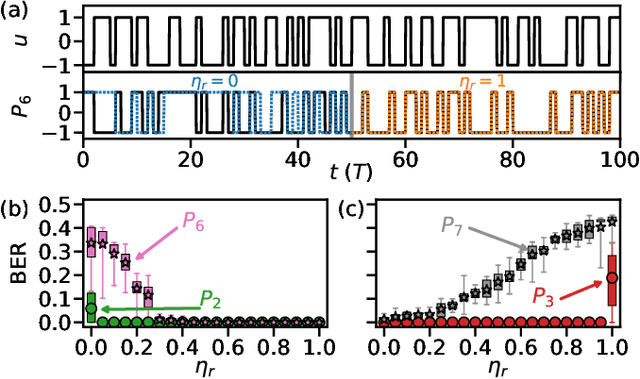

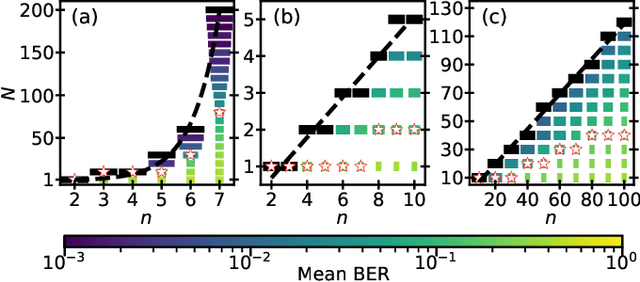

Abstract: We demonstrate that matching the symmetry properties of a reservoir computer (RC) to the data being processed can dramatically increase its processing power. We apply our method to the parity task, a challenging benchmark problem, which highlights the benefits of symmetry matching. Our method outperforms all other approaches on this task, even artificial neural networks (ANNs) hand-crafted for this problem. The symmetry-aware RC can obtain zero error using an exponentially reduced number of artificial neurons and training data, greatly speeding up the time-to-result. We anticipate that generalizations of our procedure will have widespread applicability in information processing with ANNs.
