Wen-Huan Chiang

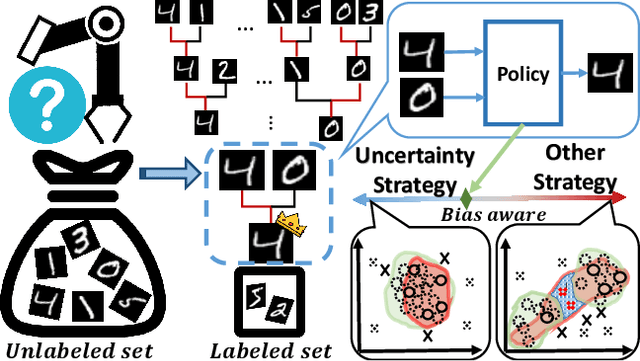

Bias-Aware Heapified Policy for Active Learning

Nov 18, 2019

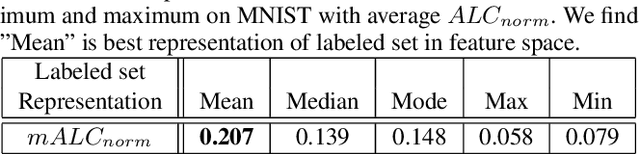

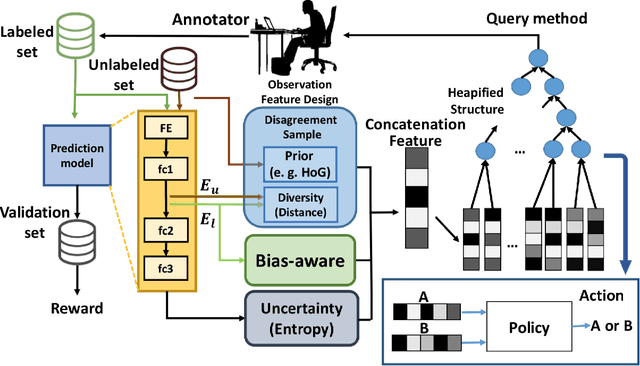

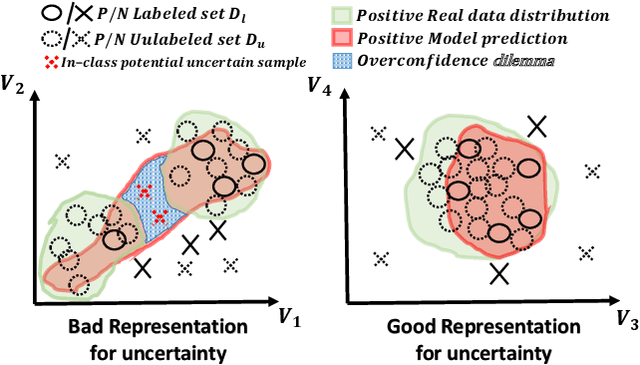

Abstract: The data efficiency of learning-based algorithms is increasingly important because high-quality, clean data is expensive and hard to collect. Active learning aims to achieve high model performance with as few labeled samples as possible by querying the most informative subset of the original dataset. A mainstream line of active learning research is the heuristic uncertainty-based method. Recently, a few works have proposed applying policy reinforcement learning (PRL) to query important data. PRL appears more general than heuristic uncertainty-based methods because it depends on data features, which are more reliable than human priors. However, applying PRL to active learning raises two problems: sample inefficiency of policy learning and overconfidence. More precisely, sample inefficiency arises when sampling within a large action space, while class imbalance can lead to overconfidence. In this paper, we propose a bias-aware policy network called Heapified Active Learning (HAL), which prevents overconfidence and improves the sample efficiency of policy learning through a heapified structure, without ignoring global information (an overview of the whole unlabeled set). In our experiments, HAL outperforms the baseline methods on the MNIST dataset and on duplicated MNIST. Finally, we investigate the generalization of the HAL policy learned on MNIST by applying it directly to MNIST-M. We show that the agent generalizes and outperforms a directly learned policy under constrained labeled sets.
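
For orientation, the heuristic uncertainty-based baseline mentioned in the abstract can be illustrated with entropy-based uncertainty sampling. This is a minimal sketch of that generic heuristic only, not of HAL or of any method from the paper. The function names (`entropy`, `select_most_uncertain`), the `budget` parameter, and the toy probabilities are hypothetical and chosen purely for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def select_most_uncertain(pool_probs, budget):
    """Return indices of the `budget` unlabeled samples with the highest entropy.

    `pool_probs` holds one predicted class distribution per unlabeled sample
    (hypothetical model outputs; the paper does not specify this interface).
    """
    ranked = sorted(
        range(len(pool_probs)),
        key=lambda i: entropy(pool_probs[i]),
        reverse=True,
    )
    return ranked[:budget]


# Toy predictions for four unlabeled samples over three classes (made up).
pool_probs = [
    [0.98, 0.01, 0.01],  # confident prediction, low entropy
    [0.34, 0.33, 0.33],  # near-uniform prediction, highest entropy
    [0.70, 0.20, 0.10],
    [0.50, 0.49, 0.01],
]
queried = select_most_uncertain(pool_probs, budget=2)
print(queried)
```

Here the near-uniform prediction is queried first, which is the behavior the uncertainty heuristic is designed to produce. A learned query policy, as described in the abstract, would replace this fixed ranking rule with decisions based on data features.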